Introduction

LLMs are all the fashion, and the tool-calling function has broadened the scope of giant language fashions. As an alternative of producing solely texts, it enabled LLMs to perform complicated automation duties that have been beforehand unimaginable, comparable to dynamic UI era, agentic automation, and many others.

These fashions are educated over an enormous quantity of knowledge. Therefore, they perceive and may generate structured knowledge, making them superb for tool-calling functions requiring exact outputs. This has pushed the widespread adoption of LLMs in AI-driven software program improvement, the place tool-calling—starting from easy capabilities to stylish brokers—has grow to be a focus.

On this article, you’ll go from studying the basics of LLM instrument calling to implementing it to construct brokers utilizing open-source instruments.

Studying Goals

- Be taught what LLM instruments are.

- Perceive the basics of instrument calling and use circumstances.

- Discover how instrument calling works in OpenAI (ChatCompletions API, Assistants API, Parallel instrument calling, and Structured Output), Anthropic fashions, and LangChain.

- Be taught to construct succesful AI brokers utilizing open-source instruments.

This text was revealed as part of the Information Science Blogathon.

Instruments are objects that permit LLMs to work together with exterior environments. These instruments are capabilities made out there to LLMs, which will be executed individually each time the LLM determines that their use is acceptable.

Often, there are three parts of a instrument definition.

- Identify: A significant identify of the perform/instrument.

- Description: An in depth description of the instrument.

- Parameters: A JSON schema of parameters of the perform/instrument.

Instrument calling permits the mannequin to generate a response for a immediate that aligns with a user-defined schema for a perform. In different phrases, when the LLM determines {that a} instrument must be used, it generates a structured output that matches the schema for the instrument’s arguments.

As an illustration, in case you have supplied a schema of a get_weather perform to the LLM and ask it for the climate of a metropolis, as an alternative of producing a textual content response, it returns a formatted schema of capabilities arguments, which you should utilize to execute the perform to get the climate of a metropolis.

Regardless of the identify “instrument calling,” the mannequin doesn’t truly execute any instrument itself. As an alternative, it produces a structured output formatted in accordance with the outlined schema. Then, You may provide this output to the corresponding perform to run it in your finish.

AI labs like OpenAI and Anthropic have educated fashions in an effort to present the LLM with many instruments and have it choose the correct one in accordance with the context.

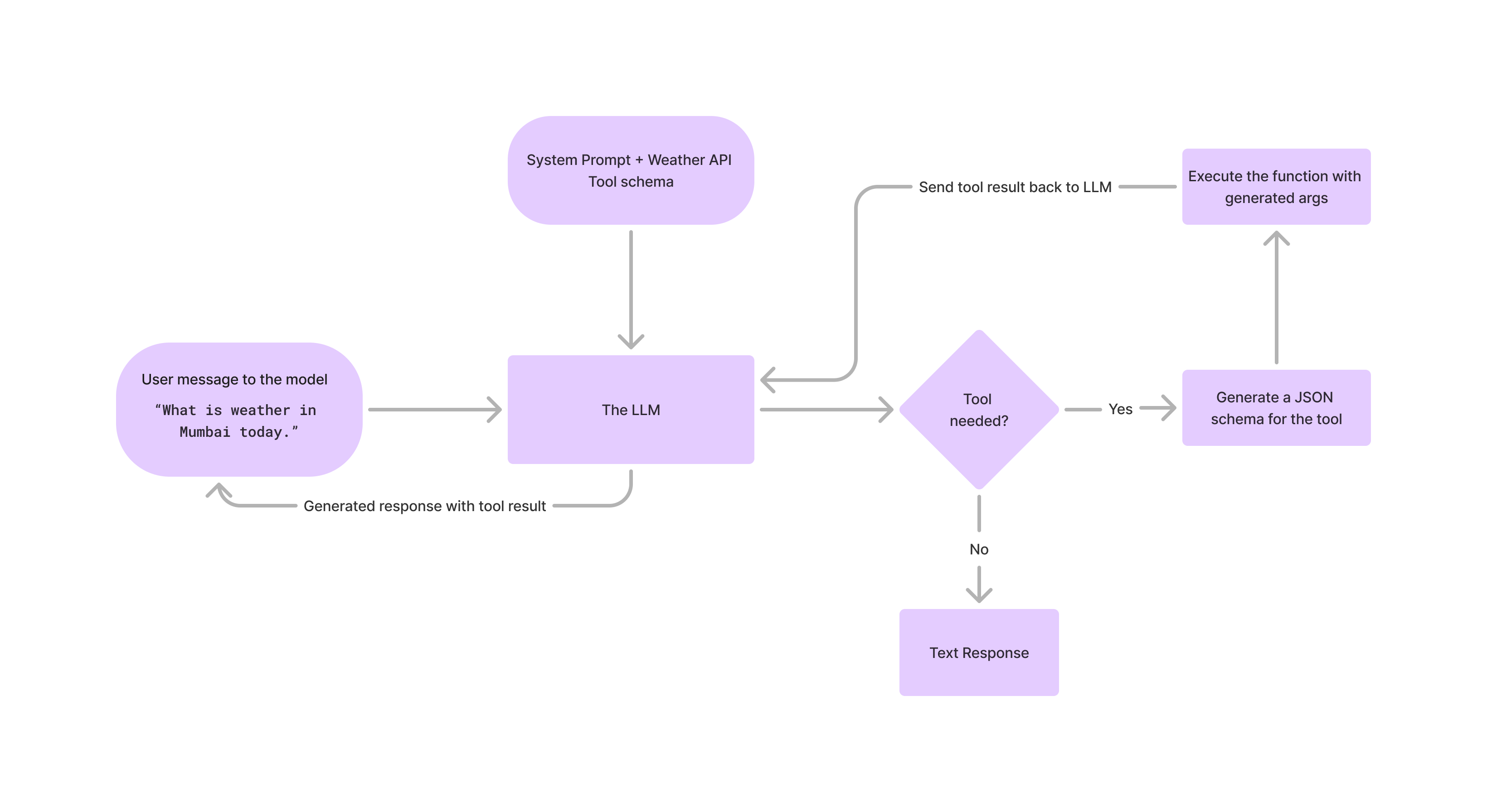

Every supplier has a special approach of dealing with instrument invocations and response dealing with. Right here’s the overall move of how instrument calling works if you cross a immediate and instruments to the LLM:

- Outline Instruments and Present a Consumer Immediate

- Outline instruments and capabilities with names, descriptions, and structured schema for arguments.

- Additionally embody a user-provided textual content, e.g., “What’s the climate like in New York right this moment?”

- The LLM Decides to Use a Instrument

- The Assistant assesses if a instrument is required.

- If sure, it halts the textual content era.

- The Assistant generates a JSON formatted response with the instrument’s parameter values.

- Extract Instrument Enter, Run Code, and Return Outputs

- Extract the parameters supplied within the perform name.

- Run the perform by passing the parameters.

- Move the outputs again to the LLM.

- Generate Solutions from Instrument Outputs

- The LLM makes use of the instrument outputs to formulate a basic reply.

Instance Use Instances

- Enabling LLMs to take motion: Join LLMs with exterior functions like Gmail, GitHub, and Discord to automate actions comparable to sending an e mail, pushing a PR, and sending a message.

- Offering LLMs with knowledge: Fetch knowledge from data bases like the net, Wikipedia, and Climate APIs to offer area of interest data to LLMs.

- Dynamic UIs: Updating UIs of your functions primarily based on person inputs.

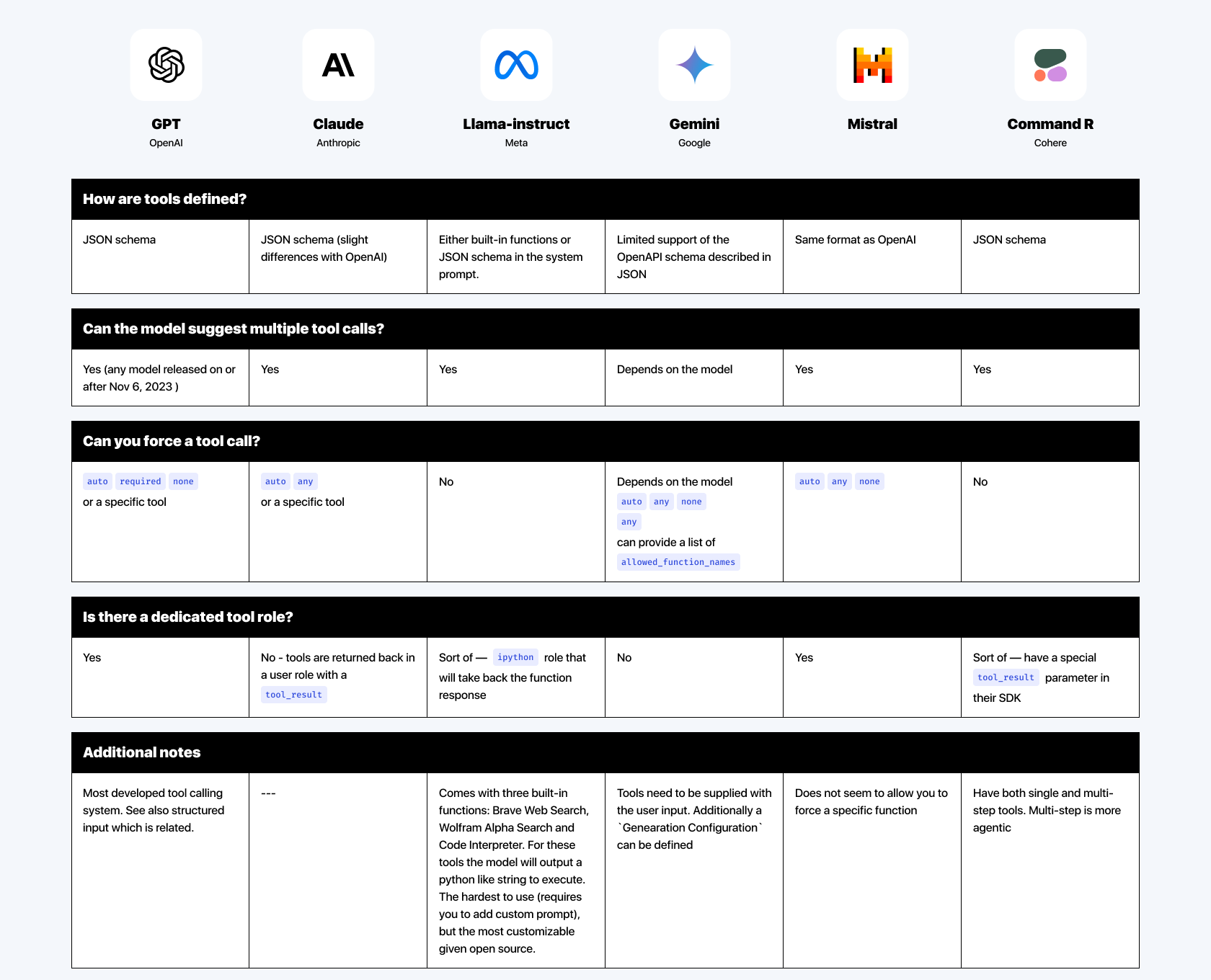

Totally different mannequin suppliers take completely different approaches to dealing with instrument calling. This text will talk about the tool-calling approaches of OpenAI, Anthropic, and LangChain. You may also use open-source fashions like Llama 3 and inference suppliers like Groq for instrument calling.

Presently, OpenAI has 4 completely different fashions (GPT-4o. GPT-4o-mini, GPT-4-turbo, and GPT-3.5-turbo). All these fashions assist instrument calling.

Let’s perceive it utilizing a easy calculator perform instance.

def calculator(operation, num1, num2):

if operation == "add":

return num1 + num2

elif operation == "subtract":

return num1 - num2

elif operation == "multiply":

return num1 * num2

elif operation == "divide":

return num1 / num2Create a instrument calling schema for the Calculator perform.

import openai

openai.api_key = OPENAI_API_KEY

# Outline the perform schema (that is what GPT-4 will use to know the best way to name the perform)

calculator_function = {

"identify": "calculator",

"description": "Performs fundamental arithmetic operations",

"parameters": {

"sort": "object",

"properties": {

"operation": {

"sort": "string",

"enum": ["add", "subtract", "multiply", "divide"],

"description": "The operation to carry out"

},

"num1": {

"sort": "quantity",

"description": "The primary quantity"

},

"num2": {

"sort": "quantity",

"description": "The second quantity"

}

},

"required": ["operation", "num1", "num2"]

}

}A typical OpenAI perform/instrument calling schema has a reputation, description, and parameter part. Contained in the parameters part, you’ll be able to present the main points for the perform’s arguments.

- Every property has a knowledge sort and outline.

- Optionally, an enum which defines particular values the parameter expects. On this case, the “operation” parameter expects any of “add”, “subtract”, multiply, and “divide”.

- Required sections point out the parameters the mannequin should generate.

Now, use the outlined schema of the perform to get response from the chat completion endpoint.

# Instance of calling the OpenAI API with a instrument

response = openai.chat.completions.create(

mannequin="gpt-4-0613", # You need to use any model that helps perform calling

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "What is 3 plus 4?"},

],

capabilities=[calculator_function],

function_call={"identify": "calculator"}, # Instruct the mannequin to name the calculator perform

)

# Extracting the perform name and its arguments from the response

function_call = response.decisions[0].message.function_call

identify = function_call.identify

arguments = function_call.argumentsNow you can cross the arguments to the Calculator perform to get an output.

import json

args = json.hundreds(arguments)

consequence = calculator(args['operation'], args['num1'], args['num2'])

# Output the consequence

print(f"Outcome: {consequence}")That is the only approach to make use of instrument calling utilizing OpenAI fashions.

Utilizing the Assistant API

You may also use instrument calling with the Assistant API. This supplies extra freedom and management over the complete workflow, permitting you to” construct brokers to perform complicated automation duties.

Right here is the best way to use instrument calling with Assistant API.

We are going to use the identical calculator instance.

from openai import OpenAI

shopper = OpenAI(api_key=OPENAI_API_KEY)

assistant = shopper.beta.assistants.create(

directions="You're a climate bot. Use the supplied capabilities to reply questions.",

mannequin="gpt-4o",

instruments=[{

"type":"function",

"function":{

"name": "calculator",

"description": "Performs basic arithmetic operations",

"parameters": {

"type": "object",

"properties": {

"operation": {

"type": "string",

"enum": ["add", "subtract", "multiply", "divide"],

"description": "The operation to carry out"

},

"num1": {

"sort": "quantity",

"description": "The primary quantity"

},

"num2": {

"sort": "quantity",

"description": "The second quantity"

}

},

"required": ["operation", "num1", "num2"]

}

}

}

]

)Create a thread and a message

thread = shopper.beta.threads.create()

message = shopper.beta.threads.messages.create(

thread_id=thread.id,

position="person",

content material="What's 3 plus 4?",

)Provoke a run

run = shopper.beta.threads.runs.create_and_poll(

thread_id=thread.id,

assistant_id="assistant.id")Retrieve the arguments and run the Calculator perform

arguments = run.required_action.submit_tool_outputs.tool_calls[0].perform.arguments

import json

args = json.hundreds(arguments)

consequence = calculator(args['operation'], args['num1'], args['num2'])Loop via the required motion and add it to an inventory

#tool_outputs = []

# Loop via every instrument within the required motion part

for instrument in run.required_action.submit_tool_outputs.tool_calls:

if instrument.perform.identify == "calculator":

tool_outputs.append({

"tool_call_id": instrument.id,

"output": str(consequence)

})Submit the instrument outputs to the API and generate a response

# Submit the instrument outputs to the API

shopper.beta.threads.runs.submit_tool_outputs_and_poll(

thread_id=thread.id,

run_id=run.id,

tool_outputs=tool_outputs

)

messages = shopper.beta.threads.messages.checklist(

thread_id=thread.id

)

print(messages.knowledge[0].content material[0].textual content.worth)It will output a response `3 plus 4 equals 7`.

Parallel Perform Calling

You may also use a number of instruments concurrently for extra sophisticated use circumstances. As an illustration, getting the present climate at a location and the possibilities of precipitation. To attain this, you should utilize the parallel perform calling function.

Outline two dummy capabilities and their schemas for instrument calling

from openai import OpenAI

shopper = OpenAI(api_key=OPENAI_API_KEY)

def get_current_temperature(location, unit="Fahrenheit"):

return {"location": location, "temperature": "72", "unit": unit}

def get_rain_probability(location):

return {"location": location, "likelihood": "40"}

assistant = shopper.beta.assistants.create(

directions="You're a climate bot. Use the supplied capabilities to reply questions.",

mannequin="gpt-4o",

instruments=[

{

"type": "function",

"function": {

"name": "get_current_temperature",

"description": "Get the current temperature for a specific location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "The city and state, e.g., San Francisco, CA"

},

"unit": {

"type": "string",

"enum": ["Celsius", "Fahrenheit"],

"description": "The temperature unit to make use of. Infer this from the person's location."

}

},

"required": ["location", "unit"]

}

}

},

{

"sort": "perform",

"perform": {

"identify": "get_rain_probability",

"description": "Get the likelihood of rain for a selected location",

"parameters": {

"sort": "object",

"properties": {

"location": {

"sort": "string",

"description": "The town and state, e.g., San Francisco, CA"

}

},

"required": ["location"]

}

}

}

]

)Now, create a thread and provoke a run. Primarily based on the immediate, this can output the required JSON schema of perform parameters.

thread = shopper.beta.threads.create()

message = shopper.beta.threads.messages.create(

thread_id=thread.id,

position="person",

content material="What is the climate in San Francisco right this moment and the chance it's going to rain?",

)

run = shopper.beta.threads.runs.create_and_poll(

thread_id=thread.id,

assistant_id=assistant.id,

)Parse the instrument parameters and name the capabilities

import json

location = json.hundreds(run.required_action.submit_tool_outputs.tool_calls[0].perform.arguments)

climate = json.loa"s(run.requir"d_action.submit_t"ol_out"uts.tool_calls[1].perform.arguments)

temp = get_current_temperature(location['location'], location['unit'])

rain_p"ob = get_rain_pro"abilit"(climate['location'])

# Output the consequence

print(f"Outcome: {temp}")

print(f"Outcome: {rain_prob}")Outline an inventory to retailer instrument outputs

# Outline the checklist to retailer instrument outputs

tool_outputs = []

# Loop via every instrument within the required motion part

for instrument in run.required_action.submit_tool_outputs.tool_calls:

if instrument.perform.identify == "get_current_temperature":

tool_outputs.append({

"tool_call_id": instrument.id,

"output": str(temp)

})

elif instrument.perform.identify == "get_rain_probability":

tool_outputs.append({

"tool_call_id": instrument.id,

"output": str(rain_prob)

})Submit instrument outputs and generate a solution

# Submit all instrument outputs without delay after gathering them in tool_outputs:

attempt:

run = shopper.beta.threads.runs.submit_tool_outputs_and_poll(

thread_id=thread.id,

run_id=run.id,

tool_outputs=tool_outputs

)

print("Instrument outputs submitted efficiently.")

besides Exception as e:

print("Didn't submit instrument outputs:", e)

else:

print("No instrument outputs to submit.")

if run.standing == 'accomplished':

messages = shopper.beta.threads.messages.checklist(

thread_id=thread.id

)

print(messages.knowledge[0].content material[0].textual content.worth)

else:

print(run.standing)The mannequin will generate an entire reply primarily based on the instrument’s outputs. `The present temperature in San Francisco, CA, is 72°F. There’s a 40% likelihood of rain right this moment.`

Seek advice from the official documentation for extra.

Structured Output

Not too long ago, OpenAI launched structured output, which ensures that the arguments generated by the mannequin for a perform name exactly match the JSON schema you supplied. This function prevents the mannequin from producing incorrect or sudden enum values, retaining its responses aligned with the required schema.

To make use of Structured Output for instrument calling, set strict: True. The API will pre-process the equipped schema and constrain the mannequin to stick strictly to your schema.

from openai import OpenAI

shopper = OpenAI()

assistant = shopper.beta.assistants.create(

directions="You're a climate bot. Use the supplied capabilities to reply questions.",

mannequin="gpt-4o-2024-08-06",

instruments=[

{

"type": "function",

"function": {

"name": "get_current_temperature",

"description": "Get the current temperature for a specific location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "The city and state, e.g., San Francisco, CA"

},

"unit": {

"type": "string",

"enum": ["Celsius", "Fahrenheit"],

"description": "The temperature unit to make use of. Infer this from the person's location."

}

},

"required": ["location", "unit"],

"additionalProperties": False

},

"strict": True

}

},

{

"sort": "perform",

"perform": {

"identify": "get_rain_probability",

"description": "Get the likelihood of rain for a selected location",

"parameters": {

"sort": "object",

"properties": {

"location": {

"sort": "string",

"description": "The town and state, e.g., San Francisco, CA"

}

},

"required": ["location"],

"additionalProperties": False

},

// highlight-start

"strict": True

// highlight-end

}

}

]

)The preliminary request will take a couple of seconds. Nevertheless, subsequently, the cached artefacts shall be used for instrument calls.

Anthropic’s Claude household of fashions is environment friendly at instrument calling as nicely.

The workflow for calling instruments with Claude is much like that of OpenAI. Nevertheless, the vital distinction is in how instrument responses are dealt with. In OpenAI’s setup, instrument responses are managed underneath a separate position, whereas in Claude’s fashions, instrument responses are integrated immediately inside the Consumer roles.

A typical instrument definition in Claude consists of the perform’s identify, description, and JSON schema.

import anthropic

shopper = anthropic.Anthropic()

response = shopper.messages.create(

mannequin="claude-3-5-sonnet-20240620",

max_tokens=1024,

instruments=[

{

"name": "get_weather",

"description": "Get the current weather in a given location",

"input_schema": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "The city and state, e.g. San Francisco, CA"

},

"unit": {

"type": "string",

"enum": ["celsius", "fahrenheit"],

"description": "The unit of temperature, both 'Celsius' or 'fahrenheit'"

}

},

"required": ["location"]

}

},

],

messages=[

{

"role": "user",

"content": "What is the weather like in New York?"

}

]

)

print(response)The capabilities’ schema definition is much like the schema definition in OpenAI’s chat completion API, which we mentioned earlier.

Nevertheless, the response differentiates Claude’s fashions from these of OpenAI.

{

"id": "msg_01Aq9w938a90dw8q",

"mannequin": "claude-3-5-sonnet-20240620",

"stop_reason": "tool_use",

"position": "assistant",

"content material": [

{

"type": "text",

"text": "<thinking>I need to call the get_weather function, and the user wants SF, which is likely San Francisco, CA.</thinking>"

},

{

"type": "tool_use",

"id": "toolu_01A09q90qw90lq917835lq9",

"name": "get_weather",

"input": {"location": "San Francisco, CA", "unit": "celsius"}

}

]

}You may extract the arguments, execute the unique perform, and cross the output to LLM for a textual content response with added data from perform calls.

response = shopper.messages.create(

mannequin="claude-3-5-sonnet-20240620",

max_tokens=1024,

instruments=[

{

"name": "get_weather",

"description": "Get the current weather in a given location",

"input_schema": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "The city and state, e.g. San Francisco, CA"

},

"unit": {

"type": "string",

"enum": ["celsius", "fahrenheit"],

"description": "The unit of temperature, both 'celsius' or 'fahrenheit'"

}

},

"required": ["location"]

}

}

],

messages=[

{

"role": "user",

"content": "What's the weather like in San Francisco?"

},

{

"role": "assistant",

"content": [

{

"type": "text",

"text": "<thinking>I need to use get_weather, and the user wants SF, which is likely San Francisco, CA.</thinking>"

},

{

"type": "tool_use",

"id": "toolu_01A09q90qw90lq917835lq9",

"name": "get_weather",

"input": {"location": "San Francisco, CA", "unit": "celsius"}

}

]

},

{

"position": "person",

"content material": [

{

"type": "tool_result",

"tool_use_id": "toolu_01A09q90qw90lq917835lq9", # from the API response

"content": "65 degrees" # from running your tool

}

]

}

]

)

print(response)Right here, you’ll be able to observe that we handed the tool-calling output underneath the person position.

For extra on Claude’s instrument calling, seek advice from the official documentation.

Here’s a comparative overview of tool-calling options throughout completely different LLM suppliers.

Managing a number of LLM suppliers can rapidly grow to be tough whereas constructing complicated AI functions. Therefore, frameworks like LangChain have created a unified interface for dealing with instrument calls from a number of LLM suppliers.

Create a customized instrument utilizing @instrument decorator in LangChain.

from langchain_core.instruments import instrument

@instrument

def add(a: int, b: int) -> int:

"""Provides a and b.

Args:

a: first int

b: second int

"""

return a + b

@instrument

def multiply(a: int, b: int) -> int:

"""Multiplies a and b.

Args:

a: first int

b: second int

"""

return a * b

instruments = [add, multiply]Initialise an LLM,

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(mannequin="gpt-3.5-turbo-0125")Use the bind instrument methodology so as to add the outlined instruments to the LLMs.

llm_with_tools = llm.bind_tools(instruments)Typically, you must power the LLMs to make use of sure instruments. Many LLM suppliers permit this behaviour. To acheive this in LangChain, use

always_multiply_llm = llm.bind_tools([multiply], tool_choice="multiply")And if you wish to name any of the instruments supplied

always_call_tool_llm = llm.bind_tools([add, multiply], tool_choice="any")Schema Definition Utilizing Pydantic

You may also use Pydantic to outline instrument schema. That is helpful when the instrument has a posh schema.

from langchain_core.pydantic_v1 import BaseModel, Discipline

# Observe that the docstrings listed here are essential, as they are going to be handed alongside

# to the mannequin and the category identify.

class add(BaseModel):

"""Add two integers collectively."""

a: int = Discipline(..., description="First integer")

b: int = Discipline(..., description="Second integer")

class multiply(BaseModel):

"""Multiply two integers collectively."""

a: int = Discipline(..., description="First integer")

b: int = Discipline(..., description="Second integer")

instruments = [add, multiply]Guarantee detailed docstring and clear parameter descriptions for optimum outcomes.

Brokers are automated packages powered by LLMs that work together with exterior environments. As an alternative of executing one motion after one other in a sequence, the brokers can determine which actions to take primarily based on some circumstances.

Getting structured responses from LLMs to work with AI brokers was once tedious. Nevertheless, instrument calling made getting the specified structured response from LLMs moderately easy. This major function is main the AI agent revolution now.

So, let’s see how one can construct a real-world agent, comparable to a GitHub PR reviewer utilizing OpenAI SDK and an open-source toolset known as Composio.

What’s Composio?

Composio is an open-source tooling resolution for constructing AI brokers. To assemble complicated agentic automation, it provides out-of-the-box integrations for functions like GitHub, Notion, Slack, and many others. It helps you to combine instruments with brokers with out worrying about complicated app authentication strategies like OAuth.

These instruments can be utilized with LLMs. They’re optimized for agentic interactions, which makes them extra dependable than easy perform calls. Additionally they deal with person authentication and authorization.

You need to use these instruments with OpenAI SDK, LangChain, LlamaIndex, and many others.

Let’s see an instance the place you’ll construct a GitHub PR evaluate agent utilizing OpenAI SDK.

Set up OpenAI SDK and Composio.

pip set up openai composioLogin to your Composio person account.

composio loginAdd GitHub integration by finishing the mixing move.

composio add github composio apps replaceAllow a set off to obtain PRs when created.

composio triggers allow github_pull_request_eventCreate a brand new file, import libraries, and outline the instruments.

import os

from composio_openai import Motion, ComposioToolSet

from openai import OpenAI

from composio.shopper.collections import TriggerEventData

composio_toolset = ComposioToolSet()

pr_agent_tools = composio_toolset.get_actions(

actions=[

Action.GITHUB_GET_CODE_CHANGES_IN_PR, # For a given PR, it gets all the changes

Action.GITHUB_PULLS_CREATE_REVIEW_COMMENT, # For a given PR, it creates a comment

Action.GITHUB_ISSUES_CREATE, # If required, allows you to create issues on github

]

)Initialise an OpenAI occasion and outline a immediate.

openai_client = OpenAI()

code_review_assistant_prompt = (

"""

You're an skilled code reviewer.

Your process is to evaluate the supplied file diff and provides constructive suggestions.

Comply with these steps:

1. Establish if the file incorporates important logic modifications.

2. Summarize the modifications within the diff in clear and concise English inside 100 phrases.

3. Present actionable strategies if there are any points within the code.

Upon getting selected the modifications for any TODOs, create a Github difficulty.

"""

)Create an OpenAI assistant thread with the prompts and the instruments.

# Give openai entry to all of the instruments

assistant = openai_client.beta.assistants.create(

identify="PR Overview Assistant",

description="An assistant that can assist you with reviewing PRs",

directions=code_review_assistant_prompt,

mannequin="gpt-4o",

instruments=pr_agent_tools,

)

print("Assistant is prepared")Now, arrange a webhook to obtain the PRs fetched by the triggers and a callback perform to course of them.

## Create a set off listener

listener = composio_toolset.create_trigger_listener()

## Triggers when a brand new PR is opened

@listener.callback(filters={"trigger_name": "github_pull_request_event"})

def review_new_pr(occasion: TriggerEventData) -> None:

# Utilizing the data from Set off, execute the agent

code_to_review = str(occasion.payload)

thread = openai_client.beta.threads.create()

openai_client.beta.threads.messages.create(

thread_id=thread.id, position="person", content material=code_to_review

)

## Let's print our thread

url = f"https://platform.openai.com/playground/assistants?assistant={assistant.id}&thread={thread.id}"

print("Go to this URL to view the thread: ", url)

# Execute Agent with integrations

# begin the execution

run = openai_client.beta.threads.runs.create(

thread_id=thread.id, assistant_id=assistant.id

)

composio_toolset.wait_and_handle_assistant_tool_calls(

shopper=openai_client,

run=run,

thread=thread,

)

print("Listener began!")

print("Create a pr to get the evaluate")

listener.pay attention()Right here is what’s going on within the above code block

- Initialize Listener and Outline Callback: We outlined an occasion listener with a filter with the set off identify and a callback perform. The callback perform known as when the occasion listener receives an occasion from the required set off, i,e. github_pull_request_event.

- Course of PR Content material: Extracts the code diffs from the occasion payload.

- Run Assistant Agent: Create a brand new OpenAI thread and ship the codes to the GPT mannequin.

- Handle Instrument Calls and Begin Listening: Handles instrument calls throughout execution and prompts the listener for ongoing PR monitoring.

With this, you’ll have a totally practical AI agent to evaluate new PR requests. Each time a brand new pull request is raised, the webhook triggers the callback perform, and at last, the agent posts a abstract of the code diffs as a remark to the PR.

Conclusion

Instrument calling by the Giant Language Mannequin is on the forefront of the agentic revolution. It has enabled use circumstances that have been beforehand unimaginable, comparable to letting machines work together with exterior functions as and when wanted, dynamic UI era, and many others. Builders can construct complicated agentic automation processes by leveraging instruments and frameworks like OpenAI SDK, LangChain, and Composio.

Key Takeaways

- Instruments are objects that permit the LLMs interface with exterior functions.

- Instrument calling is the tactic the place LLMs generate structured schema for a required perform primarily based on person message.

- Nevertheless, main LLM suppliers comparable to OpenAI and Anthropic provide perform calling with completely different implementations.

- LangChain provides a unified API for instrument calling utilizing LLMs.

- Composio provides instruments and integrations like GitHub, Slack, and Gmail for complicated agentic automation.

Often Requested Questions

A. Instruments are objects that permit the LLMs work together with exterior environments, comparable to Code interpreters, GitHub, Databases, the Web, and many others.

A. LLMs, or Giant Language Fashions, are superior AI methods designed to know, generate, and reply to human language by processing huge quantities of textual content knowledge.

A. Instrument calling permits LLMs to generate the structured schema of perform arguments as and when wanted.

A. AI brokers are methods powered by AI fashions that may autonomously carry out duties, work together with their setting, and make choices primarily based on their programming and the information they course of.

The media proven on this article just isn’t owned by Analytics Vidhya and is used on the Creator’s discretion.