1. Introduction

The phrase embeddings generated by our strategy have been evaluated by way of their usefulness for textual content classification, clustering, and the evaluation of sophistication distribution within the embedded illustration area.

2. Associated Works

Phrase embeddings, also referred to as distributed phrase representations, are sometimes created utilizing unsupervised strategies. Their reputation stems from the simplicity of the coaching course of, as solely a big corpus of textual content is required. The embedding vectors are first constructed from sparse representations after which mapped to a lower-dimensional area, producing vectors that correspond to particular phrases. To supply context for our analysis, we briefly describe the preferred approaches to constructing phrase embeddings.

3. Materials and Strategies

On this part, we describe our methodology for constructing phrase embeddings that incorporate elementary semantic data. We start by detailing the devoted dataset used within the experiments. Subsequent, we clarify our methodology, which modifies the hidden layer of a neural community initialized with pretrained embeddings. We then describe the fine-tuning course of, which relies on vector shifts. The ultimate subsection outlines the methodology used to judge the proposed strategy.

3.1. Dataset

For our experiments, we constructed a customized dataset consisting of 4 classes: animal, meal, car, and expertise. Every class accommodates six to seven key phrases, for a complete of 25 phrases.

We additionally collected 250 sentences for every key phrase (6250 in complete) utilizing net scraping from numerous on-line sources, together with most English dictionaries. The sentences comprise no multiple prevalence of the required key phrase.

The phrases defining every class had been chosen such that the boundaries between classes had been fuzzy. For example, the classes car and expertise, or meal and animal, overlap to some extent. Moreover, some key phrases had been chosen that might belong to multiple class however had been intentionally grouped into one. An instance is the phrase fish, which might confer with each a meals and an animal. This was carried out deliberately to make it tougher to categorise the textual content and spotlight the contribution our phrase embeddings intention to deal with.

- 1.

-

Change all letters to lowercase;

- 2.

-

Take away all non-alphabetic characters (punctuation, symbols, and numbers);

- 3.

-

Take away pointless whitespace characters: duplicated areas, tabs, or main areas at the start of a sentence;

- 4.

-

Take away chosen cease phrases utilizing a operate from the Gensim library: articles, pronouns, and different phrases that won’t profit the creation of embeddings;

- 5.

-

Tokenize, i.e., divide the sentence into phrases (tokens);

- 6.

-

Lemmatize, bringing variations of phrases into a typical type, permitting them to be handled as the identical phrase [36]. For instance, the phrase cats could be remodeled into cat, and went could be remodeled into go.

3.2. Methodology of Semantic Phrase Embedding Creation

On this part, we describe intimately our methodology for bettering phrase embeddings. This course of includes three steps: coaching a neural community to acquire an embedding layer, tuning the embeddings, and shifting the embeddings to include particular geometric properties.

3.2.1. Embedding Layer Coaching

For constructing phrase embeddings within the neural community, we use a hidden layer with preliminary weights set to pretrained embeddings. In our exams, the architectures with fewer layers carried out finest, possible because of the small gradient within the preliminary layers. Because the variety of layers elevated, the preliminary layers had been up to date much less successfully throughout backpropagation, inflicting the embedding layer to alter too slowly to provide strongly semantically associated phrase embeddings. Because of this, we used a smaller community for the ultimate experiments.

The algorithm for creating semantic embeddings utilizing a neural community layer is as follows:

- 1.

-

Load the pretrained embeddings.

- 2.

-

Preprocess the enter knowledge.

- 3.

-

Separate a portion of the coaching knowledge to create a validation set. In our strategy, the validation set was 20% of the coaching knowledge.

Subsequent, the preliminary phrase vectors had been mixed with the pretrained embedding knowledge. To attain this, an embedding matrix was created, the place the row at index i corresponds to the pretrained embedding of the phrase at index i within the vectorizer.

- 4.

-

Load the embedding matrix into the embedding layer of the neural community, initializing it with pretrained phrase embeddings as weights.

- 5.

-

Create a neural network-based embedding layer.

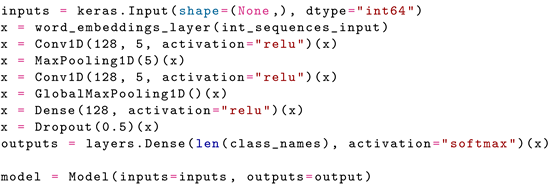

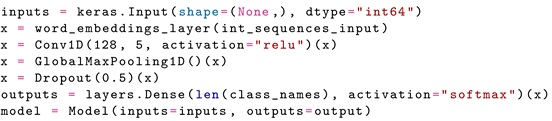

In our experiments, we examined a number of architectures. The one which labored finest was a CNN with the next configuration.

- 6.

-

Practice the mannequin utilizing the ready knowledge.

- 7.

-

Map the information into phrases in vocabulary embeddings created in hidden layer weights.

- 8.

-

Save the newly created embeddings in a pickle format.

|

3.2.2. Superb-Tuning of Pretrained Phrase Embeddings

This step makes use of phrase embeddings created in a self-supervised method by fine-tuning pretrained embeddings.

The complete course of for coaching embeddings is encapsulated within the following steps:

- 1.

-

Load the pretrained embeddings. The algorithm makes use of GloVe embeddings as enter, so the embeddings have to be transformed right into a dictionary.

- 2.

- 3.

-

Put together a co-occurrence matrix of phrases utilizing the GloVe mechanism.

- 4.

-

Practice the mannequin utilizing the operate offered by Mittens for fine-tuning GloVe embeddings [33].

- 5.

-

Save the newly created embeddings in a pickle format.

3.2.3. Embedding Shifts

-

Modification of the pretrained embeddings: Since Beringer et al. initially used embeddings from the SpaCy library as a base [38], although in addition they used the GloVe methodology for coaching, the information they used differed. Subsequently, it was needed to change how the vectors had been loaded. Whereas SpaCy gives a devoted operate for this process, it had to get replaced with an alternate.

-

Guide splitting of the coaching set into two smaller units, one for coaching (60%) and one for validation (40%), geared toward correct hyperparameter choice.

-

Adapting different options: Beringer et al. created embeddings by combining the embedding of a phrase and the embedding of the which means of that phrase, for instance, the phrase tree and its which means forest, or tree (construction). They known as them key phrase embeddings. Within the case of the experiment carried out on this thesis, the embeddings of the phrase and the class had been mixed, like cat and animal, or airplane (expertise). An embedding created on this means is known as a key phrase–class embedding. The time period key phrase embedding is used to explain any phrase contained in classes, akin to cat, canine, and many others. The time period class embedding describes the embedding of a selected class phrase, particularly animal, meal, expertise, or car.

-

Including hyperparameter choice optimization:

Lastly, for the sake of readability, the algorithm design was as follows:

- 1.

-

Add or recreate non-optimized key phrase–class embeddings. For every key phrase within the dataset and its corresponding class, we created an embedding, as proven in Equation (1), which included each pattern objects and class gadgets:

𝑘𝑐𝑓𝑖𝑠ℎ(𝑎𝑛𝑖𝑚𝑎𝑙)=𝑒(𝑓𝑖𝑠ℎ,𝑎𝑛𝑖𝑚𝑎𝑙)=𝑒(𝑓𝑖𝑠ℎ)+𝑒(𝑎𝑛𝑖𝑚𝑎𝑙)2kcfish(animal)=e(fish,animal)=e(fish)+e(animal)2

- 2.

-

Load and preprocess the texts used to coach the mannequin.

- 3.

-

For every epoch:

- 3.1.

-

For every sentence within the coaching set:

- 3.1.1.

-

Calculate the context embedding. The context itself is the set of phrases surrounding the key phrase, in addition to the key phrase itself. We create the context embedding by averaging the embeddings of all of the phrases that make up that context.

- 3.1.2.

-

Measure the cosine distance between the computed context embedding and all class embeddings. On this means, we choose the closest class embedding and verify whether or not it’s a right or incorrect match.

- 3.1.3.

-

Replace key phrase–class embeddings. The algorithm strikes the key phrase–class embedding nearer to the context embedding if the chosen class embedding seems to be right. If not, the algorithm strikes the key phrase–class embedding away from the context embedding. The alpha (𝛼α) and beta (𝛽β) coefficients decide how a lot we manipulate the vectors.

- 4.

-

Save the newly modified key phrase–class embeddings within the pickle format. The pickle module is a technique for serializing and deserializing objects, developed within the Python programming language [39].

The highest-k accuracy on the validation set was used as a measure of the standard of the embeddings for use in deciding on applicable hyperparameters and choosing the right embeddings. It is a metric that determines whether or not the proper class is within the prime ok classes, the place the enter knowledge are context embeddings from the validation set. As a result of small variety of classes (4 in complete), it was determined to judge the standard of the embeddings utilizing the top-1 accuracy. Lastly, a random search yielded the optimum hyperparameters.

3.3. Semantic Phrase Embedding Analysis

We carried out the analysis of our methodology for constructing the phrase embeddings utilizing two functions. We examined how they affect the standard of the classification, and we measured their distribution and plotted them utilizing PCA projections. We additionally evaluated how the introduction of the embeddings influences the unsupervised processing of the information.

3.3.1. Textual content Classification

The primary check for the newly created semantic phrase embeddings was primarily based on a prediction of the class from the sentence. This may be carried out in some ways, for instance, through the use of the embeddings as a layer in a deep neural community. Initially, nonetheless, it will be higher to check the capabilities of the embeddings themselves with out additional coaching on the brand new structure. Thus, we used a technique primarily based on measuring the gap between embeddings. On this case, our strategy consisted of the next steps:

- 1.

-

For every sentence within the coaching set:

- 1.1.

-

Preprocess the sentence.

- 1.2.

-

Convert the phrases from the sentence into phrase embeddings.

- 1.3.

-

Calculate the imply embedding from all of the embeddings within the sentence.

- 1.4.

-

Calculate the cosine distance between the imply sentence embedding and all class embeddings primarily based on Equation (2):

𝑑𝑖𝑠𝑡𝑎𝑛𝑐𝑒(𝑒̲,𝑐)=cos(𝑒̲,𝑐)=𝑒̲·𝑐∥𝑒̲∥∥𝑐∥distance(e¯,c)=cos(e¯,c)=e¯·ce¯c

the place 𝑒̲e¯ is the imply sentence embedding and c is the class embedding.

- 1.5.

-

Choose the assigned class by taking the class embedding with the smallest distance from the sentence embedding.

- 2.

-

Calculate the accuracy rating by taking the predictions for all sentences primarily based on Equation (3):

𝐴𝑐𝑐𝑢𝑟𝑎𝑐𝑦=𝑁𝑢𝑚𝑏𝑒𝑟𝑜𝑓𝑐𝑜𝑟𝑟𝑒𝑐𝑡𝑝𝑟𝑒𝑑𝑖𝑐𝑡𝑖𝑜𝑛𝑠𝑇𝑜𝑡𝑎𝑙𝑛𝑢𝑚𝑏𝑒𝑟𝑜𝑓𝑝𝑟𝑒𝑑𝑖𝑐𝑡𝑖𝑜𝑛𝑠Accuracy=NumberofcorrectpredictionsTotalnumberofpredictions

3.3.2. Separability and Spatial Distribution

𝑒(𝑐𝑜𝑚𝑝𝑢𝑡𝑒𝑟)+𝑒(𝐶𝑃𝑈)+𝑒(𝑘𝑒𝑦𝑏𝑜𝑎𝑟𝑑)+𝑒(𝑚𝑜𝑛𝑖𝑡𝑜𝑟)+𝑒(𝑇𝑉)+𝑒(𝑝ℎ𝑜𝑛𝑒)6≈𝑒(𝑡𝑒𝑐ℎ𝑛𝑜𝑙𝑜𝑔𝑦)e(pc)+e(CPU)+e(keyboard)+e(monitor)+e(TV)+e(telephone)6≈e(expertise)

𝑚𝑒𝑎𝑛𝐷𝑖𝑠𝑡𝑎𝑛𝑐𝑒𝑇𝑜𝐶𝑎𝑡𝑒𝑔𝑜𝑟𝑦(𝑐,𝐶)=1|𝐶|∑𝑘∈𝐶cos(𝑐,𝑘)=1|𝐶|∑𝑘∈𝐾𝑐·𝑘∥𝑐∥∥𝑘∥meanDistanceToCategory(c,C)=1|C|∑ok∈Ccos(c,ok)=1|C|∑ok∈Kc·kck

the place

𝑐𝑎𝑡𝑒𝑔𝑜𝑟𝑦𝐷𝑒𝑛𝑠𝑖𝑡𝑦(𝐶)=1|𝐶|∑𝑘∈𝐶cos⎛⎝⎜⎜𝑘,1|𝐶|∑𝑙∈𝐶𝑙⎞⎠⎟⎟categoryDensity(C)=1|C|∑ok∈Ccos(ok,1|C|∑l∈Cl)

the place

𝑠=𝑏−𝑎𝑚𝑎𝑥(𝑎,𝑏)s=b−amax(a,b)

You will need to be aware that the Silhouette Coefficient is computed for just one pattern, whereas if multiple pattern had been thought-about, it will be ample to take the common of the person values. With a view to check the separability of all courses, we calculated the common Silhouette Coefficient for all key phrase embeddings.

4. Outcomes

On this part, we current a abstract of the outcomes for creating semantic embeddings utilizing the strategies mentioned above. Every part refers to a selected methodology for testing the embeddings and gives the ends in each tabular and graphical type. To keep up methodological readability, we developed a naming scheme to indicate particular embedding strategies, particularly, unique embeddings (OE), the essential embeddings that served as the idea for the creation of others; neural embeddings (NE), embeddings created utilizing the embedding layer within the DNN-based classification course of; fine-tuned embeddings (FE), embeddings created with fine-tuning, that’s, utilizing the GloVe coaching methodology on new semantic knowledge; and geometrical embeddings (GE), phrase vectors that had been created by transferring vectors in area.

4.1. Textual content Classification

First, we examined the standard of textual content classification utilizing the semantic embeddings talked about above. It’s price recalling {that a} weighted variant of the vectors utilizing the IDF measure was additionally used for testing. So moreover, in classification experiments, we added the outcomes from the utilization of the re-weighed embedded representations: unique weighted embeddings (OWE), fine-tuned weighted embeddings (FWE), neural weighted embeddings (NWE), and geometrical weighted embeddings (GWE).

Determine 1. Classification outcomes for the closest neighbor methodology.

It is a logical consequence of how these embeddings had been educated because the geometrical embeddings underwent essentially the most drastic change and thus had been moved essentially the most in area in comparison with the opposite strategies. Shifting them precisely towards the class embeddings on the coaching stage led to higher outcomes. The opposite two strategies, particularly neural embeddings and fine-tuned embeddings, carried out comparably effectively, each round 87%. Every of the brand new strategies outperformed the unique embeddings (83.89%), so all of those strategies improved the classification high quality.

All of the weighted embeddings carry out considerably worse than their unweighted counterparts, with a distinction of 5 or 6 proportion factors, which was anticipated on condition that semantic embeddings happen in each sentence within the dataset. It seems that solely the geometrically weighted embeddings outperform the unique embeddings.

Determine 2. Classification outcomes for the random forest classifier with imply sentence embedding.

Thus, evidently regardless of the stronger shift within the vectors in area within the case of geometric embeddings, they lose some options and the classification suffers. Within the case of the imply sentence distance to a class embedding, geometric embeddings confirmed their full potential, since each the tactic for creating embeddings and the tactic for testing them had been primarily based on the geometric features of vectors in area. In distinction, the Random Forest classification relies on particular person options (vector dimensions), and every choice tree within the ensemble mannequin consists of a restricted variety of options, so it will be significant that particular person options convey as a lot worth as potential, relatively than all the vector as a complete.

Desk 1. Classification outcomes for weighted and unweighted semantic embeddings. (Underline—finest outcomes).

4.2. Separability and Spatial Distribution

Determine 3. PCA visualization of unique embeddings.

As will be seen, even right here, the courses appear to be comparatively effectively separated. The courses expertise and car appear to be far aside in area, whereas meal and animal are nearer collectively. Notice that the key phrase fish is way additional away from the opposite key phrases within the cluster, heading in the direction of the class meal. Additionally, the phrase kebab appears to be far-off from the proper cluster, although it refers to a meal and doesn’t have a which means correlated with animals.

Determine 4. PCA visualization of neural embeddings.

Determine 5. PCA visualization of fine-tuned embeddings.

Determine 6. PCA visualization of geometrical embeddings.

Determine 7. Outcomes for utilization of imply class distance.

Determine 8. Outcomes for imply class distance by class.

Determine 9. Outcomes for Class Density.

Determine 10. Outcomes for class density by class.

The outcomes for the earlier two metrics are additionally mirrored within the outcomes for the Silhouette Rating. The clusters for the geometric embeddings (0.678) are dense and effectively separated. The neural embeddings (0.37) nonetheless have higher cluster high quality than the unique embeddings (0.24), whereas the fine-tuned embeddings (0.144) are the worst. Notice that not one of the embeddings have a silhouette worth beneath zero, so there may be little overlap between the classes.

Desk 2. Outcomes for Ok-means clustering.

The one methodology with worse outcomes is once more the fine-tuning of pretrained phrase embeddings, which scores decrease than the others for every metric. The misclassified key phrases had been fish, which was added to the cluster meal as an alternative of animal; telephone, which was added to the cluster car as an alternative of expertise; and television, which was added to the cluster animal, as an alternative of expertise.

Desk 3. Summarized outcomes for separability and spatial distribution.

5. Dialogue

The contribution of our examine is the introduction of a supervised methodology for aligning phrase embeddings through the use of the hidden layer of a neural community, their fine-tuning, and vector shifting within the embedding area. For the textual content classification process, a less complicated methodology that examines the gap between the imply sentence embeddings and class embeddings confirmed that geometrical embeddings carried out finest. Nevertheless, it is very important be aware that these strategies rely closely on the gap between embeddings, which can not all the time be essentially the most related measure. When utilizing embeddings as enter knowledge for the Random Forest classifier, geometrical embeddings carried out considerably worse than the opposite two strategies, with fine-tuned embeddings attaining increased accuracy by greater than 5 proportion factors (97.95% in comparison with 92.39%).

The implication of that is that when creating embeddings utilizing the vector-shifting methodology, some data that could possibly be helpful for extra complicated duties is misplaced. Moreover, excessively shifting the vectors in area can isolate these embeddings, inflicting the remaining untrained ones, which intuitively ought to be shut by, to float aside with every iteration of coaching. This means that for small, remoted units of semantic phrase embeddings, geometrical embeddings will carry out fairly effectively. Nevertheless, for open-ended issues with a bigger vocabulary and extra blurred class boundaries, different strategies are more likely to be more practical.

Whereas fine-tuned embeddings carried out effectively within the textual content classification process, in addition they have their drawbacks. Analyzing the distribution of vectors in area reveals that embeddings inside a category have a tendency to maneuver farther aside, which weakens the semantic relationships between them. This turns into evident in check duties, akin to clustering, the place the fine-tuned embeddings carried out even worse than the unique embeddings. Alternatively, enriching embeddings with area data improves their efficiency. Embeddings educated with the GloVe methodology on specialised texts purchase extra semantic depth, as seen within the Random Forest classification outcomes, the place they achieved the second-best efficiency for class queries.

Essentially the most well-balanced methodology seems to be vector coaching utilizing the trainable embedding layer. Neural embeddings yield superb outcomes for textual content classification, each with the distance-based methodology and when utilizing Random Forest. Whereas the embeddings inside courses is probably not very dense, the courses themselves are extremely separable.

6. Conclusions and Future Works

On this paper, we suggest a technique for aligning phrase embeddings utilizing a supervised strategy that employs the hidden layer of a neural community and shifts the embeddings into the particular classes they correspond to. We consider our strategy from a number of views: each in an software for supervised and non-supervised duties and thru the evaluation of the vector distribution within the illustration area. The exams affirm the strategies’ usability and supply a deeper understanding of the traits of every proposed methodology.

By evaluating our outcomes with state-of-the-art approaches and attaining higher accuracy, we affirm the effectiveness of the proposed methodology. A deep evaluation of the vector distributions reveals that there isn’t a one-size-fits-all answer for producing semantic phrase embeddings; the selection of methodology ought to rely on particular design targets and anticipated outcomes. Nevertheless, our strategy extends conventional strategies for creating phrase vectors.

It’s subsequently worthwhile to discover whether or not semantic phrase embeddings can enhance efficiency. Within the context of check environments, embedding weights will be adjusted to boost prediction accuracy. Whereas IDF weights had been used to restrict the prediction mannequin, future work may experiment with weights that additional emphasize the semantics of the phrase vectors.

An attention-grabbing experiment may contain including phrases to the dataset which are strongly related to a number of classes. For example, the phrase jaguar is primarily linked to an animal but additionally steadily seems within the context of a automobile model. It will be worthwhile to see how totally different embedding creation strategies deal with such ambiguous knowledge.

When it comes to knowledge modification, leveraging well-established datasets from areas like textual content classification or data retrieval could possibly be useful. This could enable semantic embeddings to be examined on bigger datasets and in contrast towards state-of-the-art options. The approaches described on this paper have been confirmed to boost extensively used and well-researched phrase embedding strategies. The 𝑖𝑛𝑡𝑒𝑟𝑠𝑒𝑐𝑡_𝑤𝑜𝑟𝑑2𝑣𝑒𝑐_𝑓𝑜𝑟𝑚𝑎𝑡intersect_word2vec_format methodology from the Gensim library permits merging the enter hidden weight matrix from an current mannequin with a newly created vocabulary. By setting the lockf parameter to 1.0, the merged vectors will be up to date throughout coaching.

One other path price exploring to increase our analysis is testing totally different architectures for embedding creation, notably with modified value capabilities to include semantics. Future analysis may additionally increase this to incorporate extra languages, broadening the scope and applicability of those strategies.

[custom]