In the era of artificial intelligence, businesses are constantly looking for innovative ways to improve customer support services. One such approach is to use AI agents that work collaboratively to resolve customer queries efficiently. This article explores the implementation of a Concurrent Query Resolution System using CrewAI, OpenAI’s GPT models, and Google Gemini. The system employs multiple specialized agents that operate in parallel to handle customer queries, reducing response time and improving accuracy.

Learning Objectives

- Understand how AI agents can efficiently handle customer queries by automating responses and summarizing key information.

- Learn how CrewAI enables multi-agent collaboration to improve customer support workflows.

- Explore different types of AI agents, such as query resolvers and summarizers, and their roles in customer service automation.

- Implement concurrent query processing using Python’s asyncio to improve response efficiency.

- Optimize customer support systems by integrating AI-driven automation for improved accuracy and scalability.

This article was published as a part of the Data Science Blogathon.

How Do AI Agents Work Together?

The Concurrent Query Resolution System uses a multi-agent framework in which each agent is assigned a specific role. It is built on CrewAI, a framework that enables AI agents to collaborate effectively.

The primary components of the system include:

- Query Resolution Agent: Responsible for understanding customer queries and providing accurate responses.

- Summary Agent: Summarizes the resolution process for quick review and future reference.

- LLMs (Large Language Models): Models such as GPT-4o and Gemini, each configured differently to balance speed and accuracy.

- Task Management: Assigns tasks to agents so that queries can be processed concurrently.

Implementation of Concurrent Query Resolution System

To turn the AI agent framework from concept into reality, a structured implementation approach is essential. Below, we outline the key steps involved in setting up and integrating AI agents for effective query resolution.

Step 1: Setting the API Key

The OpenAI API key is stored as an environment variable using the os module. This lets the system authenticate API requests without passing sensitive credentials around in the rest of the code.

import os

# Set the API key as an environment variable (insert your own key)
os.environ["OPENAI_API_KEY"] = ""

The os module provides access to the operating system’s environment.

With OPENAI_API_KEY set as an environment variable, the system can authenticate requests to OpenAI’s API.
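Since the snippet above assigns an empty string, a forgotten key would only surface later as an authentication error. A minimal sketch of a guard that fails early is shown below. The helper name `require_api_key` and the `DEMO_API_KEY` variable are hypothetical and used only for illustration; they are not part of CrewAI or the OpenAI SDK.

```python
import os


def require_api_key(name: str = "OPENAI_API_KEY") -> str:
    """Return the named environment variable, or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; export it before running the script.")
    return value


# Demonstration with a made-up variable and a placeholder value (not a real key).
os.environ["DEMO_API_KEY"] = "placeholder-value"
demo_key = require_api_key("DEMO_API_KEY")
print("Key loaded:", bool(demo_key))
```

In a real script, calling `require_api_key()` with its default argument before creating any agents would be one way to catch a missing key up front.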

Step 2: Importing Required Libraries

The necessary libraries are imported, including asyncio for handling asynchronous operations and the crewai components Agent, Crew, Task, LLM, and Process. These are essential for defining and managing AI agents.

import asyncio
from crewai import Agent, Crew, Task, LLM, Process
import google.generativeai as genai

- asyncio: Python’s built-in module for asynchronous programming, enabling concurrent execution.

- Agent: Represents an AI worker with specific responsibilities.

- Crew: Manages multiple agents and their interactions.

- Task: Defines what each agent is supposed to do.

- LLM: Specifies the large language model used.

- Process: Defines how the tasks in a crew execute, for example sequentially or hierarchically.

- google.generativeai: Library for working with Google’s generative AI models (not used in this snippet, but likely included for future expansion).

Step 3: Initializing LLMs

Three LLM instances (two using GPT-4o and one using GPT-4) are initialized with different temperature settings. The temperature controls how varied the responses are, balancing accuracy and flexibility in AI-generated answers.

# Initialize the LLMs (OpenAI models) with different temperatures
llm_1 = LLM(
    model="gpt-4o",
    temperature=0.7)
llm_2 = LLM(
    model="gpt-4",
    temperature=0.2)
llm_3 = LLM(
    model="gpt-4o",
    temperature=0.3)

The system creates three LLM instances, each with a different configuration. Note that llm_3 is defined here but is not assigned to an agent in the code that follows.

Parameters:

- model: Specifies which OpenAI model to use (gpt-4o or gpt-4).

- temperature: Controls randomness in responses (values near 0 are close to deterministic, higher values are more creative).

These different models and temperatures help balance accuracy and creativity.
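To make the effect of temperature concrete, here is a small sketch of the textbook formulation, in which token scores (logits) are divided by the temperature before a softmax. The logits are invented for illustration, and the article does not state how any particular provider implements sampling, so treat this as an approximation of the idea rather than a description of OpenAI’s internals.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
probs_low = softmax_with_temperature(logits, 0.2)   # like llm_2
probs_high = softmax_with_temperature(logits, 0.7)  # like llm_1
print([round(p, 3) for p in probs_low])
print([round(p, 3) for p in probs_high])
```

At the lower temperature the top-scoring token takes a larger share of the probability mass, which is why the summary agent’s configuration tends toward more repeatable output.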

Step 4: Defining AI Agents

Two AI agents are created, each with a defined role, goal, and backstory to guide its behavior:

- Query Resolver: Handles customer inquiries and provides detailed responses.

- Summary Generator: Summarizes the resolutions for quick reference.

Query Resolution Agent

query_resolution_agent = Agent(
    llm=llm_1,
    role="Query Resolver",
    backstory="An AI agent that resolves customer queries efficiently and professionally.",
    goal="Resolve customer queries accurately and provide helpful solutions.",
    verbose=True
)

Let’s see what’s happening in this code block:

- Agent Creation: The query_resolution_agent is an AI-powered assistant responsible for resolving customer queries.

- Model Selection: It uses llm_1, configured as GPT-4o with a temperature of 0.7, which allows for creative yet accurate responses.

- Role: The agent is designated as a Query Resolver.

- Backstory: The agent is described as resolving queries efficiently and professionally, which steers it toward the tone of a professional customer service assistant.

- Goal: To provide accurate solutions to user queries.

- Verbose Mode: verbose=True enables detailed logs, helping developers debug and monitor its behavior.

Summary Agent

summary_agent = Agent(
    llm=llm_2,
    role="Summary Generator",
    backstory="An AI agent that summarizes the resolution of customer queries.",
    goal="Provide a concise summary of the query resolution process.",
    verbose=True
)

What Happens Here?

- Agent Creation: The summary_agent is designed to summarize query resolutions.

- Model Selection: Uses llm_2 (GPT-4) with a temperature of 0.2, making its responses more deterministic and precise.

- Role: This agent acts as a Summary Generator.

- Backstory: It summarizes query resolutions concisely for quick reference.

- Goal: It provides a clear and concise summary of how customer queries were resolved.

- Verbose Mode: verbose=True ensures that debugging information is available if needed.

Step 5: Defining Tasks

This section defines the tasks assigned to the AI agents in the Concurrent Query Resolution System. Each task description contains a {query} placeholder, so the same task definitions can serve any incoming query.

resolution_task = Task(
    description="Resolve the customer query: {query}.",
    expected_output="A detailed resolution for the customer query.",
    agent=query_resolution_agent
)

summary_task = Task(
    description="Summarize the resolution of the customer query: {query}.",
    expected_output="A concise summary of the query resolution.",
    agent=summary_agent
)

What Happens Here?

Defining Tasks:

- resolution_task: This task instructs the Query Resolver Agent to analyze and resolve customer queries.

- summary_task: This task instructs the Summary Agent to generate a brief summary of the resolution process.

Dynamic Query Handling:

- The system replaces {query} with an actual customer query when executing the task.

- This allows the system to handle any customer query dynamically.

Expected Output:

- The resolution_task expects a detailed response to the query.

- The summary_task generates a concise summary of the query resolution.

Agent Assignment:

- The query_resolution_agent is assigned to handle resolution tasks.

- The summary_agent is assigned to handle summarization tasks.

Why This Matters

- Task Specialization: Each AI agent has a specific job, ensuring efficiency and clarity.

- Scalability: You can add more tasks and agents to handle different types of customer support interactions.

- Parallel Processing: Separate queries can be processed concurrently, reducing customer wait times.
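The `{query}` placeholder in the task descriptions behaves much like a Python format field. The sketch below imitates that substitution with `str.format`; the helper `fill_template` is hypothetical, and CrewAI’s own interpolation may differ in its details.

```python
description_template = "Resolve the customer query: {query}."


def fill_template(template: str, inputs: dict) -> str:
    """Substitute named placeholders such as {query} with values from inputs."""
    return template.format(**inputs)


filled = fill_template(description_template, {"query": "I cannot log in"})
print(filled)
```

Keeping the customer text out of the task definition means one pair of tasks can be reused for every incoming query.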

Step 6: Executing a Query with AI Agents

An asynchronous function is created to process a query. The Crew class organizes the agents and tasks and executes the tasks sequentially, so the query is resolved first and then summarized.

async def execute_query(query: str):
    # Build a crew that runs the resolution task, then the summary task
    crew = Crew(
        agents=[query_resolution_agent, summary_agent],
        tasks=[resolution_task, summary_task],
        process=Process.sequential,
        verbose=True
    )
    # Start the crew asynchronously, passing the customer query as input
    result = await crew.kickoff_async(inputs={"query": query})
    return result

This function defines an asynchronous process to execute a query. It creates a Crew instance, which includes:

- agents: The AI agents involved in the process (Query Resolver and Summary Generator).

- tasks: Tasks assigned to the agents (query resolution and summarization).

- process=Process.sequential: Ensures tasks are executed in sequence.

- verbose=True: Enables detailed logging for better monitoring.

The function uses await to run the crew asynchronously and returns the result.
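The sequential flow described above can be pictured without any LLM calls. In the sketch below, `resolve` and `summarize` are stand-in coroutines (hypothetical, not CrewAI functions) that mimic the two tasks, with the first task’s output handed to the second as context.

```python
import asyncio


async def resolve(query: str) -> str:
    # Stand-in for the Query Resolver agent; a real agent would call an LLM.
    await asyncio.sleep(0.01)
    return f"Resolution for: {query}"


async def summarize(context: str) -> str:
    # Stand-in for the Summary Generator; it only sees the earlier output.
    await asyncio.sleep(0.01)
    return f"Summary of [{context}]"


async def run_sequential(query: str) -> dict:
    resolution = await resolve(query)        # task 1 must finish first
    summary = await summarize(resolution)    # task 2 builds on task 1
    return {"resolution": resolution, "summary": summary}


outcome = asyncio.run(run_sequential("Payment failed"))
print(outcome["summary"])
```

Within a single query the two steps stay ordered; the concurrency in this system comes from running several such pipelines at once.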

Step 7: Handling Multiple Queries Concurrently

Using asyncio.gather(), multiple queries can be processed concurrently. This reduces overall response time because the crews handling different customer issues run at the same time rather than one after another.

async def handle_two_queries(query_1: str, query_2: str):
    # Run both queries concurrently
    results = await asyncio.gather(
        execute_query(query_1),
        execute_query(query_2)
    )
    return results

This function executes two queries concurrently. asyncio.gather() schedules both execute_query() calls at once, which can significantly reduce total response time. The function returns the results of both queries once execution is complete.

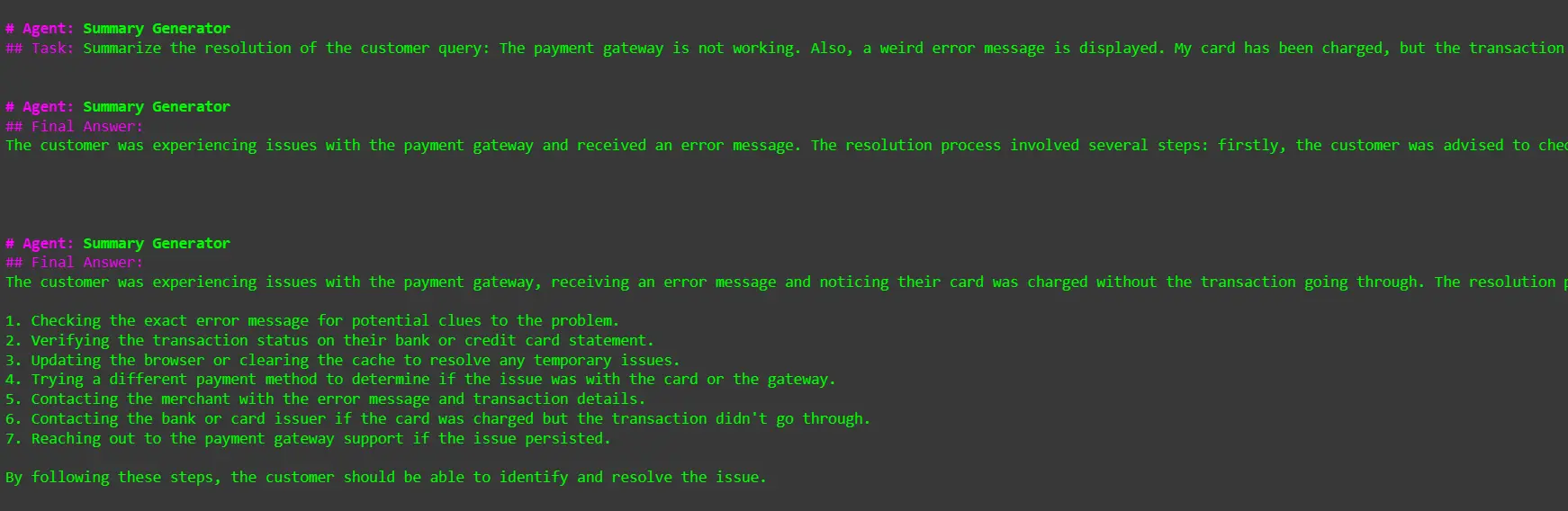
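The benefit of `asyncio.gather()` can be checked with simulated work. In this sketch, `fake_execute_query` is a hypothetical stand-in that merely sleeps, so the timings illustrate the scheduling behavior rather than real LLM latency.

```python
import asyncio
import time


async def fake_execute_query(query: str, delay: float) -> str:
    # Simulate a slow, I/O-bound call such as an LLM request.
    await asyncio.sleep(delay)
    return f"done: {query}"


async def main():
    start = time.perf_counter()
    results = await asyncio.gather(
        fake_execute_query("login issue", 0.2),
        fake_execute_query("payment issue", 0.1),
    )
    elapsed = time.perf_counter() - start
    return results, elapsed


gather_results, gather_elapsed = asyncio.run(main())
print(gather_results, round(gather_elapsed, 2))
```

The results come back in the order the coroutines were passed in, even though the second one finishes first, and the total time tracks the slowest call rather than the sum of both.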

Step 8: Defining Example Queries

Sample queries are defined to test the system, covering common customer support issues such as login failures and payment processing errors.

query_1 = "I'm unable to log in to my account. It says 'Invalid credentials', but I'm sure I'm using the correct username and password."
query_2 = "The payment gateway is not working. Also, a weird error message is displayed. My card has been charged, but the transaction is not going through."

Query 1 deals with login issues, while Query 2 relates to payment gateway errors.

Step 9: Setting Up the Event Loop

The system obtains an event loop to handle asynchronous operations. If it does not find an existing loop, it creates a new one to manage AI task execution.

try:
    loop = asyncio.get_event_loop()
except RuntimeError:
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)

This section ensures that an event loop is available for running asynchronous tasks.

If no event loop is available (a RuntimeError is raised), a new one is created and set as the active loop.

Step 10: Handling Event Loops in Jupyter Notebook/Google Colab

Since Jupyter and Colab already run their own event loops, nest_asyncio.apply() is used to prevent conflicts, ensuring smooth execution of asynchronous queries.

# Check if the event loop is already running
if loop.is_running():
    # If the loop is running, use `nest_asyncio` to allow re-entrant event loops
    import nest_asyncio
    nest_asyncio.apply()

Jupyter Notebooks and Google Colab have pre-existing event loops, which can cause errors when running async functions.

nest_asyncio.apply() allows nested event loops, resolving these compatibility issues.

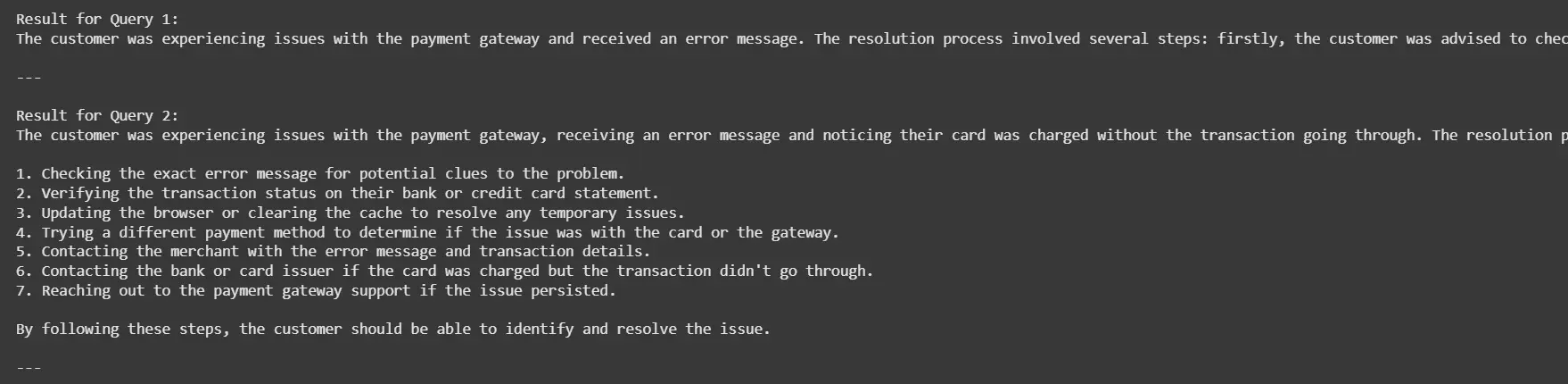
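For a plain script with no loop already running, `asyncio.run()` is a common alternative to managing the loop by hand, since it creates a loop, runs the coroutine, and closes the loop again. The sketch below uses only the standard library; `sample_job` and `loop_is_running` are hypothetical names chosen for illustration.

```python
import asyncio


async def sample_job() -> str:
    await asyncio.sleep(0.01)
    return "finished"


def loop_is_running() -> bool:
    """Report whether this code is executing inside a running event loop."""
    try:
        asyncio.get_running_loop()
    except RuntimeError:
        return False
    return True


if not loop_is_running():
    # Plain script: let asyncio.run() own the loop's lifecycle.
    job_result = asyncio.run(sample_job())
else:
    # Notebook-style environment: the caller would `await sample_job()` instead.
    job_result = None
print(job_result)
```

In a notebook cell, top-level `await` is typically available, which is another way to sidestep nested-loop errors.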

Step 11: Executing Queries and Printing Results

The event loop runs handle_two_queries() to process the queries concurrently. The system then prints the final AI-generated responses, showing the query resolutions and summaries.

# Run the async function
results = loop.run_until_complete(handle_two_queries(query_1, query_2))

# Print the results
for i, result in enumerate(results):
    print(f"Result for Query {i+1}:")
    print(result)
    print("\n---\n")

loop.run_until_complete() starts the execution of handle_two_queries(), which processes both queries concurrently.

The system prints the results, displaying the AI-generated resolutions for each query.
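One thing the loop above does not cover is a query that fails partway through. By default, `asyncio.gather()` propagates the first exception to the caller; passing `return_exceptions=True` returns exceptions alongside successful results instead. The sketch below uses a hypothetical `flaky_query` coroutine with a simulated failure to show the pattern.

```python
import asyncio


async def flaky_query(query: str) -> str:
    await asyncio.sleep(0.01)
    if "payment" in query:
        # Simulated failure, standing in for a timeout or API error.
        raise ValueError("simulated upstream failure")
    return f"resolved: {query}"


async def run_all(queries):
    return await asyncio.gather(
        *(flaky_query(q) for q in queries),
        return_exceptions=True,  # collect errors instead of raising the first one
    )


safe_results = asyncio.run(run_all(["login issue", "payment issue"]))
for i, item in enumerate(safe_results, start=1):
    status = "failed" if isinstance(item, Exception) else "ok"
    print(f"Query {i}: {status} -> {item}")
```

With this option each customer still gets an answer or a clear error, rather than every result being lost when one crew fails.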

Advantages of Concurrent Query Resolution System

Below, we will see how the Concurrent Query Resolution System improves efficiency by processing multiple queries concurrently, leading to faster response times and a better user experience.

- Faster Response Time: Parallel execution resolves multiple queries concurrently.

- Improved Accuracy: Using multiple LLM configurations helps balance creativity and factual correctness.

- Scalability: The system can handle a high volume of queries without human intervention.

- Better Customer Experience: Automated summaries provide a quick overview of query resolutions.
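When scaling beyond two queries, launching everything at once may run into provider rate limits. One common pattern, sketched here under the assumption of a limit of two in-flight queries, is to guard each call with an `asyncio.Semaphore`. The `limited_query` coroutine and the `MAX_IN_FLIGHT` value are hypothetical and not part of the original system.

```python
import asyncio

MAX_IN_FLIGHT = 2  # assumed limit, chosen only for illustration


async def limited_query(query, semaphore, tracker):
    async with semaphore:  # wait here if too many queries are already running
        tracker["active"] += 1
        tracker["peak"] = max(tracker["peak"], tracker["active"])
        await asyncio.sleep(0.02)  # simulated work
        tracker["active"] -= 1
        return f"resolved: {query}"


async def run_limited(queries):
    semaphore = asyncio.Semaphore(MAX_IN_FLIGHT)
    tracker = {"active": 0, "peak": 0}
    results = await asyncio.gather(
        *(limited_query(q, semaphore, tracker) for q in queries)
    )
    return results, tracker["peak"]


limited_results, peak = asyncio.run(run_limited([f"query {n}" for n in range(5)]))
print(len(limited_results), peak)
```

All five queries complete, but no more than `MAX_IN_FLIGHT` of them are ever active at the same moment.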

Applications of Concurrent Query Resolution System

We will now explore the applications of the Concurrent Query Resolution System, including customer support automation, real-time query handling in chatbots, and efficient processing of large-scale service requests.

- Customer Support Automation: Enables AI-driven chatbots to resolve multiple customer queries concurrently, reducing response time.

- Real-Time Query Processing: Improves live support systems by handling numerous queries in parallel.

- E-commerce Assistance: Streamlines product inquiries, order tracking, and payment issue resolution on online shopping platforms.

- IT Helpdesk Management: Supports IT service desks by diagnosing and resolving multiple technical issues concurrently.

- Healthcare & Telemedicine: Assists in managing patient inquiries, appointment scheduling, and medical advice concurrently.

Conclusion

The Concurrent Query Resolution System demonstrates how AI-driven multi-agent collaboration can transform customer support. By leveraging CrewAI, OpenAI’s GPT models, and Google Gemini, businesses can automate query handling, improving efficiency and user satisfaction. This approach paves the way for more advanced AI-driven service solutions in the future.

Key Takeaways

- AI agents streamline customer support, reducing response times.

- CrewAI enables specialized agents to work together effectively.

- Using asyncio, multiple queries are handled concurrently.

- Different LLM configurations balance accuracy and creativity.

- The system can manage high query volumes without human intervention.

- Automated summaries provide quick, clear overviews of query resolutions.

Frequently Asked Questions

A. CrewAI is a framework that allows multiple AI agents to work collaboratively on complex tasks. It enables task management, role specialization, and coordination among agents.

A. CrewAI defines agents with specific roles, assigns tasks to them, and runs those tasks according to the configured process. It relies on AI models such as OpenAI’s GPT and Google Gemini to carry out the tasks.

A. The system uses Python’s asyncio.gather() together with CrewAI’s kickoff_async() to run multiple crews concurrently, so one query does not have to wait for another to finish.

A. Yes, CrewAI supports various large language models (LLMs), including OpenAI’s GPT-4, GPT-4o, and Google’s Gemini, allowing users to choose based on speed and accuracy requirements.

A. By using different AI models with different temperature settings, the system balances creativity and factual correctness, which helps keep responses reliable.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.