Introduction

AI is everywhere.

It's hard not to interact at least once a day with a Large Language Model (LLM). The chatbots are here to stay. They're in your apps, they help you write better, they compose emails, they read emails... well, they do a lot.

And I don't think that's bad. In fact, my opinion is the other way around, at least so far. I defend and advocate for the use of AI in our daily lives because, let's agree, it makes everything much easier.

I don't have to spend time double-reading a document to find punctuation problems or typos. AI does that for me. I don't waste time writing that follow-up email every single Monday. AI does that for me. I don't have to read a huge and boring contract when I have an AI to summarize the main takeaways and action points for me!

These are only some of AI's great uses. If you'd like to know more use cases of LLMs that make our lives easier, I wrote a whole book about them.

Now, thinking as a data scientist and looking at the technical side, not everything is that bright and shiny.

LLMs are great for several general use cases that apply to anyone or any company: for example, coding, summarizing, or answering questions about general content created before the training cutoff date. However, when it comes to specific business applications, a single narrow purpose, or something new that didn't make the cutoff date, the models won't be that useful out-of-the-box, meaning they will not know the answer. Thus, they will need adjustments.

Training an LLM can take months and millions of dollars. What's even worse is that if we don't adjust and tune the model to our purpose, there will be unsatisfactory results or hallucinations (when the model gives a confident response that is wrong or doesn't make sense given our query).

So what is the solution, then? Spending a lot of money retraining the model to include our data?

Not really. That's when Retrieval-Augmented Generation (RAG) becomes useful.

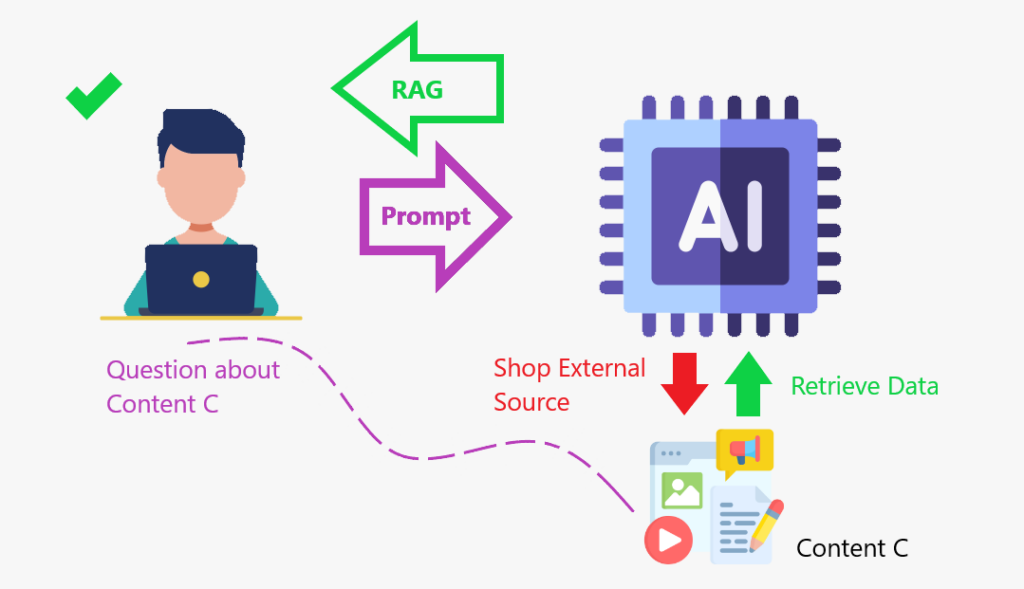

RAG is a framework that combines retrieving information from an external knowledge base with large language models (LLMs). It helps AI models produce more accurate and relevant responses.

Let's learn more about RAG next.

What is RAG?

Let me tell you a story to illustrate the concept.

I love movies. For some time in the past, I knew which movies were competing for the best movie category at the Oscars, or the best actors and actresses. And I would certainly know which ones won the statue that year. But now I am all rusty on that subject. If you asked me who was competing, I would not know. And even if I tried to answer, I would give you a weak response.

So, to give you a quality response, I will do what everybody else does: search for the information online, obtain it, and then give it to you. What I just did is the same idea as RAG: I obtained data from an external database to give you an answer.

When we enhance the LLM with a content store where it can go and retrieve data to extend (augment) its knowledge base, that is the RAG framework in action.

RAG is like creating a content store where the model can enhance its knowledge and respond more accurately.

Summarizing, RAG:

- Uses search algorithms to query external data sources, such as databases, knowledge bases, and web pages.

- Pre-processes the retrieved information.

- Incorporates the pre-processed information into the LLM's prompt as context.
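The three steps above can be sketched in plain Python. This is only a toy illustration, not how any particular library does it: the function names (`search`, `preprocess`, `build_prompt`) and the sample knowledge base are made up for this example, and word overlap stands in for a real search algorithm.

```python
import re

def tokenize(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def search(query, documents, k=2):
    """Step 1: rank documents by how many query words they share."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]

def preprocess(passages, max_chars=200):
    """Step 2: normalize whitespace and trim each passage."""
    return [" ".join(p.split())[:max_chars] for p in passages]

def build_prompt(query, passages):
    """Step 3: place the passages in the prompt that would be sent to the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical knowledge base, invented for this example
knowledge_base = [
    "Invoices   are due within 30 days of the issue date.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2% monthly fee.",
]
question = "When are invoices due?"
prompt = build_prompt(question, preprocess(search(question, knowledge_base)))
print(prompt)
```

The model never needs to be retrained here: the only thing that changes between questions is the text placed in the prompt.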

Why use RAG?

Now that we know what the RAG framework is, let's understand why we should be using it.

Here are some of the benefits:

- RAG enhances factual accuracy by referencing real data.

- RAG can help LLMs process and consolidate knowledge to create more relevant answers.

- RAG can help LLMs access additional knowledge bases, such as internal organizational data.

- RAG can help LLMs create more accurate domain-specific content.

- RAG can help reduce knowledge gaps and AI hallucination.

As previously explained, I like to say that with the RAG framework, we are giving the model an internal search engine for the content we want it to add to its knowledge base.

Well. All of that is very interesting. But let's see an application of RAG. We will learn how to create an AI-powered PDF Reader Assistant.

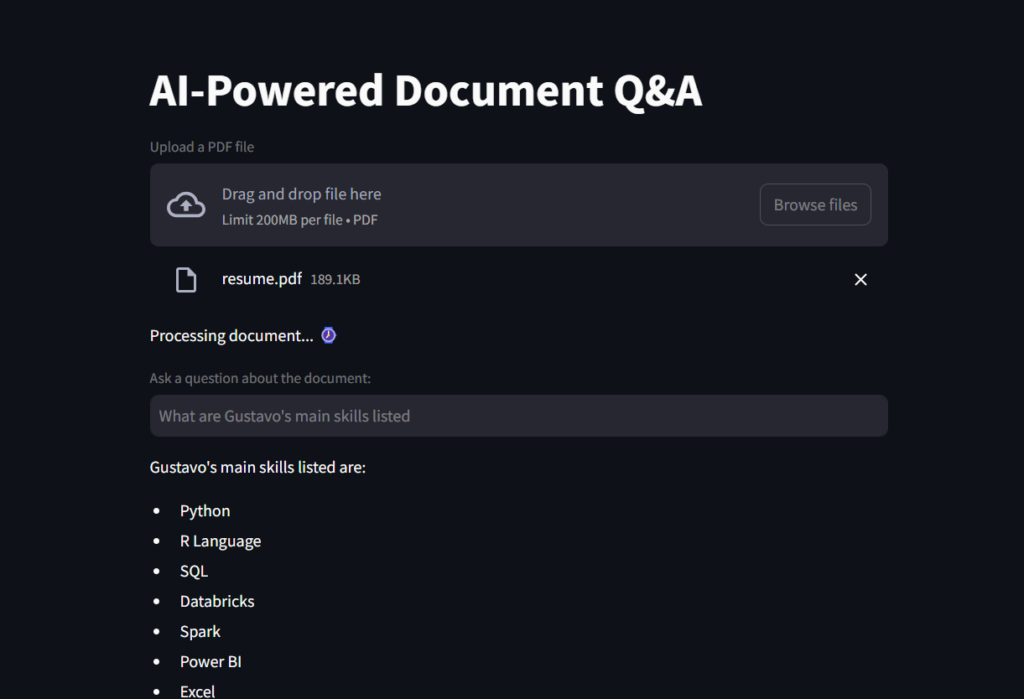

Project

This is an application that allows users to upload a PDF document and ask questions about its content using AI-powered natural language processing (NLP) tools.

- The app uses Streamlit as the front end, and LangChain, OpenAI's GPT-4o mini model, and FAISS (Facebook AI Similarity Search) for document retrieval and question answering in the backend.

Let's break down the steps for better understanding:

- Load a PDF file and split it into chunks of text.

  - This makes the data optimized for retrieval.

- Present the chunks to an embedding tool.

  - Embeddings are numerical vector representations of data used to capture relationships, similarities, and meanings in a way that machines can understand. They are widely used in Natural Language Processing (NLP), recommender systems, and search engines.

- Next, we put these chunks of text and their embeddings in the same database for retrieval.

- Finally, we make it available to the LLM.
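To make the idea of comparing embeddings concrete, here is a minimal sketch that assumes a toy embedding made of word counts. Real embedding models produce dense vectors that capture meaning rather than exact words, so `embed` below is only a stand-in, and the sample chunks are invented. The comparison step, cosine similarity, is a measure commonly used for this purpose.

```python
import math
from collections import Counter

def embed(text, vocabulary):
    """Toy embedding: count how often each vocabulary word appears."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Invented sample data
chunks = [
    "the contract ends in december",
    "a cat sleeps on the sofa",
]
query = "when does the contract end"

# Shared vocabulary so every vector has the same dimensions
vocabulary = sorted({w for text in chunks + [query] for w in text.split()})
chunk_vectors = [embed(c, vocabulary) for c in chunks]
query_vector = embed(query, vocabulary)

# The chunk whose vector points in the most similar direction wins
scores = [cosine_similarity(query_vector, v) for v in chunk_vectors]
best_chunk = chunks[scores.index(max(scores))]
print(best_chunk)
```

Storing the vectors next to their chunks is what lets a similarity search return readable text instead of just numbers.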

Data preparation

Preparing a content store for the LLM will take some steps, as we just saw. So, let's start by creating a function that can load a file and split it into text chunks for efficient retrieval.

    # Imports
    from langchain_community.document_loaders import PyPDFLoader
    from langchain.text_splitter import RecursiveCharacterTextSplitter

    def load_document(pdf):
        """
        Load a PDF and split it into chunks for efficient retrieval.

        :param pdf: Path to the PDF file to load
        :return: List of chunks of text
        """
        # Load the PDF
        loader = PyPDFLoader(pdf)
        docs = loader.load()

        # Instantiate the text splitter with a chunk size of 500 characters and an overlap of 100 characters so that context is not lost
        text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=100)

        # Split into chunks for efficient retrieval
        chunks = text_splitter.split_documents(docs)

        # Return
        return chunks

Next, we will start building our Streamlit app, and we'll use that function in the next script.
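To see what a chunk size and a chunk overlap mean in practice, here is a simplified sketch in plain Python. It is not how `RecursiveCharacterTextSplitter` works internally (that splitter also tries to break on separators such as paragraphs and sentences); the hypothetical `split_with_overlap` function below only slides a fixed-size character window over the text.

```python
def split_with_overlap(text, chunk_size=500, chunk_overlap=100):
    """Split text into character windows that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks

# Small numbers so the overlap is easy to see
sample = "abcdefghijklmnopqrstuvwxyz"
pieces = split_with_overlap(sample, chunk_size=10, chunk_overlap=3)
for piece in pieces:
    print(piece)
```

Each piece starts with the last three characters of the previous one, which is how a sentence cut at a chunk boundary still appears whole in at least one chunk.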

Web application

We will begin by importing the necessary modules in Python. Most of those will come from the langchain packages.

FAISS is used for document retrieval; OpenAIEmbeddings transforms the text chunks into numerical vectors so that similarity can be calculated at query time; ChatOpenAI is what enables us to interact with the OpenAI API; create_retrieval_chain is what actually performs the RAG, retrieving and augmenting the LLM with that data; create_stuff_documents_chain glues the model and the ChatPromptTemplate.

Note: You will need to generate an OpenAI key to be able to run this script. If it's the first time you're creating your account, you get some free credits. But if you have had it for some time, it's possible that you will have to add 5 dollars in credits to be able to access OpenAI's API. An option is using Hugging Face's embeddings.

    # Imports
    from langchain_community.vectorstores import FAISS
    from langchain_openai import OpenAIEmbeddings
    from langchain.chains import create_retrieval_chain
    from langchain_openai import ChatOpenAI
    from langchain.chains.combine_documents import create_stuff_documents_chain
    from langchain_core.prompts import ChatPromptTemplate
    from scripts.secret import OPENAI_KEY
    from scripts.document_loader import load_document
    import streamlit as st

This first code snippet will create the app title, create a box for file upload, and prepare the file to be passed to the load_document() function.

    # Create a Streamlit app
    st.title("AI-Powered Document Q&A")

    # Load document to Streamlit
    uploaded_file = st.file_uploader("Upload a PDF file", type="pdf")

    # If a file is uploaded, create the TextSplitter and vector database
    if uploaded_file:

        # Code to work around the document loader from Streamlit and make it readable by langchain
        temp_file = "./temp.pdf"
        with open(temp_file, "wb") as file:
            file.write(uploaded_file.getvalue())
            file_name = uploaded_file.name

        # Load document and split it into chunks for efficient retrieval.
        chunks = load_document(temp_file)

        # Message user that document is being processed with time emoji
        st.write("Processing document... :watch:")

Machines understand numbers better than text, so in the end, we will have to provide the model with a database of numbers that it can compare and check for similarity when performing a query. That's where the embeddings will be useful to create the vector_db, in this next piece of code.

        # (continues inside the `if uploaded_file:` block)
        # Generate embeddings
        # Embeddings are numerical vector representations of data, typically used to capture relationships, similarities,
        # and meanings in a way that machines can understand. They are widely used in Natural Language Processing (NLP),
        # recommender systems, and search engines.
        embeddings = OpenAIEmbeddings(openai_api_key=OPENAI_KEY,
                                      model="text-embedding-ada-002")

        # Can also use HuggingFaceEmbeddings
        # from langchain_huggingface.embeddings import HuggingFaceEmbeddings
        # embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")

        # Create vector database containing chunks and embeddings
        vector_db = FAISS.from_documents(chunks, embeddings)

Next, we create a retriever object to navigate the vector_db.

        # Create a document retriever
        retriever = vector_db.as_retriever()

        # Instantiate the chat model that will generate the answers
        llm = ChatOpenAI(model_name="gpt-4o-mini", openai_api_key=OPENAI_KEY)

Then, we will create the system_prompt, which is a set of instructions to the LLM on how to answer, and we will create a prompt template, preparing it to be added to the model once we get the input from the user.

        # Create a system prompt
        # It sets the overall context for the model.
        # It influences tone, style, and focus before user interaction begins.
        # Unlike user inputs, a system prompt is not visible to the end user.
        system_prompt = (
            "You are a helpful assistant. Use the given context to answer the question. "
            "If you don't know the answer, say you don't know. "
            "{context}"
        )

        # Create a prompt template
        prompt = ChatPromptTemplate.from_messages(
            [
                ("system", system_prompt),
                ("human", "{input}"),
            ]
        )

        # Create a chain
        # It creates a StuffDocumentsChain, which takes multiple documents (text data) and "stuffs" them together before passing them to the LLM for processing.
        question_answer_chain = create_stuff_documents_chain(llm, prompt)

Moving on, we create the core of the RAG framework, pasting together the retriever object and the prompt. This object retrieves relevant documents from a data source (e.g., a vector database) and makes them ready to be processed by an LLM to generate a response.

        # Create the RAG chain
        chain = create_retrieval_chain(retriever, question_answer_chain)

Finally, we create the variable question for the user input. If this question box is filled with a query, we pass it to the chain, which calls the LLM to process and return the response, which will be printed on the app's screen.

        # Streamlit input for question
        question = st.text_input("Ask a question about the document:")
        if question:
            # Answer
            response = chain.invoke({"input": question})['answer']
            st.write(response)

Here is a screenshot of the result.

And this is a GIF for you to see the File Reader AI Assistant in action!
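For readers who want to see how the pieces of the app fit together without an API key, here is a rough stand-in written in plain Python. Everything in it is an assumption made for illustration: `make_retriever` ranks chunks by shared words instead of embeddings, `fake_llm` is a hypothetical placeholder that does not call any model, and `make_chain` only mimics the shape of the result the app reads (a dictionary with an `answer` key). In the real chain, the retrieved context holds document objects and not plain strings.

```python
def make_retriever(chunks, k=2):
    """Return a function that picks the k chunks sharing most words with a query."""
    def retrieve(query):
        query_words = set(query.lower().split())
        ranked = sorted(
            chunks,
            key=lambda chunk: len(query_words & set(chunk.lower().split())),
            reverse=True,
        )
        return ranked[:k]
    return retrieve

def stuff_documents(system_template, documents, user_input):
    """Join the retrieved chunks and place them in the {context} slot."""
    context = "\n\n".join(documents)
    return [
        ("system", system_template.format(context=context)),
        ("human", user_input),
    ]

def fake_llm(messages):
    """Hypothetical stand-in for the model: only reports what it was given."""
    system_text = messages[0][1]
    return f"(model reply based on {len(system_text)} characters of instructions)"

def make_chain(retrieve, system_template, llm):
    """Wire retrieval and generation together."""
    def invoke(inputs):
        documents = retrieve(inputs["input"])
        messages = stuff_documents(system_template, documents, inputs["input"])
        return {"input": inputs["input"], "context": documents, "answer": llm(messages)}
    return invoke

# Invented sample chunks
sample_chunks = [
    "Payment is due 30 days after delivery.",
    "The warranty covers parts for two years.",
    "Either party may terminate with 60 days notice.",
]
template = "Use the given context to answer the question. {context}"
toy_chain = make_chain(make_retriever(sample_chunks, k=1), template, fake_llm)
result = toy_chain({"input": "How long is the warranty"})
print(result["context"])
print(result["answer"])
```

Swapping `fake_llm` for a real model call and the word-overlap retriever for a vector search gives the same flow the app follows.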

Before you go

In this project, we learned what the RAG framework is and how it helps the LLM perform better, including on specific knowledge it was never trained on.

AI can be powered with knowledge from an instruction manual, a company's databases, some finance files, or contracts, and then respond accurately to domain-specific queries without retraining the model. The knowledge base is augmented with a content store.

To recap, this is how the framework works:

1️⃣ User Query → Input text is received.

2️⃣ Retrieve Relevant Documents → Searches a knowledge base (e.g., a database, vector store).

3️⃣ Augment Context → Retrieved documents are added to the input.

4️⃣ Generate Response → An LLM processes the combined input and produces an answer.

GitHub repository

https://github.com/gurezende/Basic-Rag

About me

If you liked this content and want to learn more about my work, here is my website, where you can also find all my contacts.
