Just a few days ago, Google DeepMind launched Gemma 3, and I was still exploring its capabilities. But now there’s a major development: Mistral AI’s Small 3.1 has arrived, claiming to be the best model in its weight class! This lightweight, fast, and highly customizable model runs on a single RTX 4090 or a Mac with 32GB RAM, making it a good fit for on-device applications. In this article, I’ll break down the details of Mistral Small 3.1 and provide hands-on examples to showcase its potential.

What is Mistral Small 3.1?

Mistral Small 3.1 is a cutting-edge, open-source AI model released under the Apache 2.0 license by Mistral AI. Designed for efficiency, it supports multimodal inputs (text and images) and performs well on multilingual tasks. With a 128k token context window, it’s built for long-context applications, making it a top choice for real-time conversational AI, automated workflows, and domain-specific fine-tuning.

Key Features

- Efficient Deployment: Runs on consumer-grade hardware like an RTX 4090 or a Mac with 32GB RAM.

- Multimodal Capabilities: Processes both text and image inputs for versatile applications.

- Multilingual Support: Delivers high performance across multiple languages.

- Extended Context: Handles up to 128k tokens for complex, long-context tasks.

- Rapid Response: Optimized for low-latency, real-time conversational AI.

- Function Execution: Enables quick and accurate function calling for automation.

- Customization: Easily fine-tuned for specialized domains like healthcare or legal AI.
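To put the consumer-hardware claim in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. It assumes the 24B parameter count quoted for the model in this article; the precision table and the `weight_memory_gb` helper are illustrative choices, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
# Rough weights-only memory estimate.
# Ignores KV cache, activations, and runtime overhead, so real usage is higher.
PARAMS = 24e9  # 24B parameters, the size quoted for Mistral Small 3.1

# Bytes per parameter for a few common precisions (illustrative, not exhaustive).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return the approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


for name, size in BYTES_PER_PARAM.items():
    print(f"{name}: ~{weight_memory_gb(PARAMS, size):.0f} GB")
```

By this estimate the 16-bit weights alone come to roughly 48 GB, which is more than the 24 GB of VRAM on an RTX 4090, so running on that class of hardware most likely relies on a quantized build.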

Mistral Small 3.1 vs Gemma 3 vs GPT 4o Mini vs Claude 3.5

Text Instruct Benchmarks

The image compares five AI models across six benchmarks. Mistral Small 3.1 (24B) achieved the best performance in four benchmarks: GPQA Main, GPQA Diamond, MMLU, and HumanEval. Gemma 3-it (27B) leads in the SimpleQA and MATH benchmarks.

Multimodal Instruct Benchmarks

This image compares AI models across seven benchmarks. Mistral Small 3.1 (24B) leads in the MMMU-Pro, MM-MT-Bench, ChartQA, and AI2D benchmarks. Gemma 3-it (27B) performs best in the MathVista, MMMU, and DocVQA benchmarks.

Multilingual

This image shows AI model performance across four categories: Average, European, East Asian, and Middle Eastern. Mistral Small 3.1 (24B) leads in the Average, European, and East Asian categories, while Gemma 3-it (27B) is best in the Middle Eastern category.

Long Context

This image compares four AI models across three benchmarks. Mistral Small 3.1 (24B) achieves the highest performance on the LongBench v2 and RULER 32k benchmarks, while Claude-3.5 Haiku scores highest on the RULER 128k benchmark.

Pretrained Performance

This image compares two AI models, Mistral Small 3.1 Base (24B) and Gemma 3-pt (27B), across five benchmarks. Mistral performs better on MMLU, MMLU Pro, GPQA, and MMMU. Gemma achieves the best result on the TriviaQA benchmark.

How to Get the Mistral Small 3.1 API?

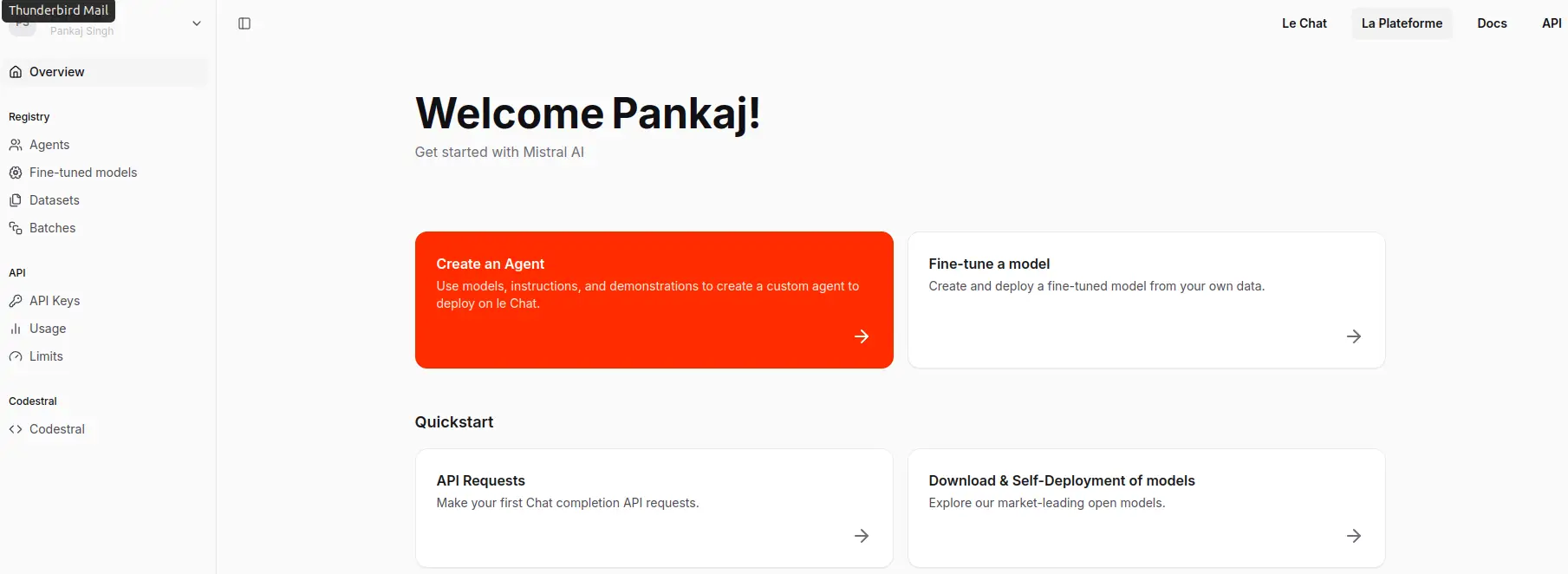

Step 1: Search for Mistral AI in your browser

Step 2: Open the Mistral AI website and click on Try API

Step 3: Click on API Keys and generate the key

Via La Plateforme (Mistral AI’s API)

- Sign up at console.mistral.ai.

- Activate payments to enable API keys (Mistral’s API requires this step).

- Use the API endpoint with a model identifier like mistral-small-latest or mistral-small-2503, the identifier used in the examples in this article (check Mistral’s documentation for the exact name).
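Once a key has been generated, it is usually safer to keep it out of the source file. The sketch below shows one way to do that, assuming the key is stored in an environment variable named MISTRAL_API_KEY (the name this article uses in Example 1); `build_headers` is a hypothetical helper, not part of any Mistral library.

```python
import os


def build_headers(env_var: str = "MISTRAL_API_KEY") -> dict:
    """Build request headers from an API key stored in an environment variable."""
    api_key = os.environ.get(env_var)
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


# Demo with a placeholder value; a real key would be exported in the shell instead.
os.environ.setdefault("MISTRAL_API_KEY", "your_api_key")
print(build_headers()["Content-Type"])
```

The returned dictionary can be passed as the `headers` argument of a `requests` call, so the key never appears in the script itself.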

Python client:

import requests

api_key = "your_api_key"  # replace with your own key
headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
data = {"model": "mistral-small-latest", "messages": [{"role": "user", "content": "Test"}]}

# Send a chat completion request and print the JSON reply
response = requests.post("https://api.mistral.ai/v1/chat/completions", json=data, headers=headers)
print(response.json())

Let’s Try Mistral Small 3.1

Example 1: Text Generation

!pip install mistralai

import os
from getpass import getpass
from mistralai import Mistral

# Prompt for the key so it is not stored in the notebook
MISTRAL_KEY = getpass('Enter Mistral AI API Key: ')
os.environ['MISTRAL_API_KEY'] = MISTRAL_KEY

model = "mistral-small-2503"
client = Mistral(api_key=MISTRAL_KEY)

# Send a single user message and print the model's reply
chat_response = client.chat.complete(
    model=model,
    messages=[
        {
            "role": "user",
            "content": "What is the best French cheese?",
        },
    ]
)
print(chat_response.choices[0].message.content)

Output

Choosing the "best" French cheese can be highly subjective, as it depends on personal taste preferences. France is renowned for its diverse and high-quality cheeses, with over 400 varieties. Here are a few highly regarded ones:

1. **Camembert de Normandie**: A soft, creamy cheese with a rich, buttery flavor. It's often considered one of the finest examples of French cheese.

2. **Brie de Meaux**: Another soft cheese, Brie de Meaux is known for its creamy texture and earthy flavor. It's often served at room temperature to enhance its aroma and taste.

3. **Roquefort**: This is a strong, blue-veined cheese made from sheep's milk. It has a distinctive, tangy flavor and is often crumbled over salads or served with fruits and nuts.

4. **Comté**: A hard, cow's milk cheese from the Jura region, Comté has a complex, nutty flavor that varies depending on the age of the cheese.

5. **Munster-Gérardmer**: A strong, pungent cheese from the Alsace region, Munster-Gérardmer is often washed in brine, giving it a distinctive orange rind and robust flavor.

6. **Chèvre (Goat Cheese)**: There are many varieties of goat cheese in France, ranging from soft and creamy to firm and crumbly. Some popular types include Sainte-Maure de Touraine and Crottin de Chavignol.

Each of these cheeses offers a unique taste experience, so the "best" one ultimately depends on your personal preference.
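When calling the endpoint with requests rather than the SDK, the reply text has to be pulled out of the JSON body by hand. Here is a minimal sketch, assuming the body follows the same choices, message, content layout that the SDK exposes as chat_response.choices[0].message.content. The `extract_reply` helper is hypothetical and the sample body is made up for illustration.

```python
def extract_reply(body: dict) -> str:
    """Return the first choice's text, or raise if the body has no choices."""
    choices = body.get("choices")
    if not choices:
        # Error responses typically carry no "choices" key; surface the body instead.
        raise ValueError(f"No choices in response: {body}")
    return choices[0]["message"]["content"]


# Made-up sample body, shaped like a chat completion reply (assumed layout).
sample_body = {
    "choices": [
        {"message": {"role": "assistant", "content": "Roquefort is a popular pick."}}
    ]
}
print(extract_reply(sample_body))
```

Checking for a missing or empty choices list first gives a readable error when the request fails, for example because of an invalid key.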

Example 2: Using Mistral Small 2503 for Image Description

import base64

def describe_image(image_path: str, prompt: str = "Describe this image in detail."):
    # Encode image to base64
    with open(image_path, "rb") as image_file:
        base64_image = base64.b64encode(image_file.read()).decode("utf-8")

    # Create message with image and text
    messages = [{
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {
                "type": "image_url",
                "image_url": {
                    "url": f"data:image/jpeg;base64,{base64_image}"  # Adjust MIME type if needed
                }
            }
        ]
    }]

    # Get response (reuses the client and model defined in Example 1)
    chat_response = client.chat.complete(
        model=model,
        messages=messages
    )
    return chat_response.choices[0].message.content

# Usage Example
image_description = describe_image("/content/image_xnt9HBr.png")
print(image_description)

Input Image

Output

The image illustrates a process involving the Gemini model, which appears to be a type of AI or machine learning system. Here is a detailed breakdown of the image:

1. **Input Section**:

- There are three distinct inputs provided to the Gemini system:

  - The word "Cat" written in English.

  - The character "猫" which is the Chinese character for "cat."

  - The word "कुत्ता" which is the Hindi word for "dog."

2. **Processing Unit**:

- The inputs are directed towards a central processing unit labeled "Gemini." This suggests that the Gemini system is processing the inputs in some manner, likely for analysis, translation, or some form of recognition.

3. **Output Section**:

- On the right side of the Gemini unit, there are three sets of colored dots:

  - The first set consists of blue dots.

  - The second set consists of a mix of blue and light blue dots.

  - The third set consists of yellow and orange dots.

- These colored dots likely represent some form of encoded data, embeddings, or feature representations generated by the Gemini system based on the input data.

**Summary**:

The image depicts an AI system named Gemini that takes in textual inputs in different languages (English, Chinese, and Hindi) and processes these inputs to produce some form of encoded output, represented by colored dots. This suggests that Gemini is capable of handling multilingual inputs and generating corresponding data representations, which could be used for various applications such as language translation, semantic analysis, or machine learning tasks.
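The describe_image helper in Example 2 labels every file as image/jpeg, even though the file passed in is a PNG. One way to handle the "adjust MIME type" step is to guess the type from the file extension with Python's standard mimetypes module. The `to_data_url` helper below is a hypothetical name, and the demo uses placeholder bytes rather than a real image.

```python
import base64
import mimetypes


def to_data_url(image_path: str, image_bytes: bytes) -> str:
    """Build a base64 data URL whose MIME type is guessed from the file extension."""
    mime_type, _ = mimetypes.guess_type(image_path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"Cannot determine an image MIME type for {image_path!r}")
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return f"data:{mime_type};base64,{encoded}"


# Demo with placeholder bytes, not a real image.
print(to_data_url("diagram.png", b"demo"))
```

Inside describe_image, the string returned here could replace the hard-coded f-string used for the "url" field, with image_bytes coming from image_file.read().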

Example 3: Image Description

image_description = describe_image("/content/yosemite.png")
print(image_description)

Input Image

Output

The image depicts a serene and picturesque natural landscape, likely in a national park. The scene is dominated by towering granite cliffs and rock formations, which rise dramatically into a clear blue sky with a few scattered clouds. The cliffs are rugged and steep, showcasing the natural beauty of the area.

In the foreground, there is a calm river or stream flowing gently over a rocky bed. The water reflects the surrounding landscape, adding to the tranquility of the scene. The riverbank is lined with green vegetation, including grasses and small plants, which add a touch of vibrant color to the scene.

The background features a dense forest of tall evergreen trees, primarily conifers, which provide a lush green contrast to the gray and brown tones of the rock formations. The trees are scattered across the landscape, extending up the slopes of the cliffs, indicating a healthy and thriving ecosystem.

Overall, the image captures the majestic and peaceful beauty of a natural landscape, likely in a well-known park such as Yosemite National Park, known for its iconic granite cliffs and scenic valleys.

Example 4: Image Description

image_description = describe_image("/content/237-200x300.jpg")
print(image_description)

Input Image

Output

The image features a black dog lying down on a wooden surface. The dog has a short, shiny black coat and is looking directly at the camera with a calm and attentive expression. Its ears are perked up, and its eyes are wide open, giving it a curious and alert look. The wooden surface beneath the dog has a rustic, textured appearance, with visible grain patterns and a warm, natural color. The overall setting appears to be indoors, and the lighting is soft, highlighting the dog's features and the texture of the wood.

How to Access Mistral Small 3.1 Using Hugging Face?

Via Hugging Face

- Go to the Hugging Face website and search for “Mistral Small 3.1” or check the Mistral AI organization page (e.g., mistralai/Mistral-Small-3.1).

- Download the model files (likely including weights and tokenizer configurations).

- Use a compatible framework like Hugging Face Transformers or Mistral’s official inference library:

Install required libraries:

pip install transformers torch  # add mistral-inference if using Mistral's official library

Load the model in Python:

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "mistralai/Mistral-Small-3.1"  # Adjust based on actual name
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

Check out this link for more information: Mistral Small

Conclusion

Mistral Small 3.1 stands out as a powerful, efficient, and versatile AI model, offering top-tier performance in its class. With its ability to handle multimodal inputs, multilingual tasks, and long-context applications, it provides a compelling alternative to competitors like Gemma 3 and GPT-4o Mini.

Its lightweight deployment on consumer-grade hardware, combined with real-time responsiveness and customization options, makes it an excellent choice for AI-driven applications. Whether for conversational AI, automation, or domain-specific fine-tuning, Mistral Small 3.1 is a strong contender in AI.
