AI is fueling a new industrial revolution, one driven by AI factories.

Unlike traditional data centers, AI factories do more than store and process data. They manufacture intelligence at scale, transforming raw data into real-time insights. For enterprises and countries around the world, this means dramatically faster time to value, turning AI from a long-term investment into an immediate driver of competitive advantage. Companies that invest in purpose-built AI factories today will lead in innovation, efficiency and market differentiation tomorrow.

While a traditional data center typically handles diverse workloads and is built for general-purpose computing, AI factories are optimized to create value from AI. They orchestrate the entire AI lifecycle, from data ingestion to training, fine-tuning and, most critically, high-volume inference.

For AI factories, intelligence isn't a byproduct but the primary product. This intelligence is measured by AI token throughput: the real-time predictions that drive decisions, automation and entirely new services.
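One simple way to make token throughput concrete is to count generated tokens over a time window. The sketch below is a minimal illustration, assuming a hypothetical request log (`CompletedRequest`) and crediting each request's tokens to the moment it finishes. That is a simplification; a streaming-aware measurement would count tokens as they are emitted.

```python
from dataclasses import dataclass


@dataclass
class CompletedRequest:
    """One finished inference request (hypothetical log record)."""
    finish_time_s: float  # wall-clock time the request finished, in seconds
    output_tokens: int    # tokens generated for this request


def token_throughput(
    requests: list[CompletedRequest], window_start_s: float, window_end_s: float
) -> float:
    """Aggregate output tokens per second over [window_start_s, window_end_s)."""
    if window_end_s <= window_start_s:
        raise ValueError("window must have positive length")
    tokens = sum(
        r.output_tokens
        for r in requests
        if window_start_s <= r.finish_time_s < window_end_s
    )
    return tokens / (window_end_s - window_start_s)


# Usage: three requests finish inside a 10-second window, one after it.
log = [
    CompletedRequest(finish_time_s=1.0, output_tokens=500),
    CompletedRequest(finish_time_s=4.5, output_tokens=1200),
    CompletedRequest(finish_time_s=9.9, output_tokens=300),
    CompletedRequest(finish_time_s=12.0, output_tokens=800),  # outside the window
]
print(token_throughput(log, 0.0, 10.0))  # 200.0
```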

While traditional data centers aren't disappearing anytime soon, whether they evolve into AI factories or connect to them depends on each enterprise's business model.

Regardless of how enterprises choose to adapt, AI factories powered by NVIDIA are already manufacturing intelligence at scale, transforming how AI is built, refined and deployed.

The Scaling Laws Driving Compute Demand

Over the past few years, AI has revolved around training large models. But with the recent proliferation of AI reasoning models, inference has become the main driver of AI economics. Three key scaling laws highlight why:

- Pretraining scaling: Larger datasets and more model parameters yield predictable intelligence gains, but reaching this stage demands significant investment in skilled experts, data curation and compute resources. Over the last five years, pretraining scaling has increased compute requirements 50 million-fold. However, once a model is trained, it significantly lowers the barrier for others to build on top of it.

- Post-training scaling: Fine-tuning AI models for specific real-world applications requires 30x more compute during AI inference than pretraining. As organizations adapt existing models for their unique needs, cumulative demand for AI infrastructure skyrockets.

- Test-time scaling (aka long thinking): Advanced AI applications such as agentic AI or physical AI require iterative reasoning, where models explore multiple possible responses before selecting the best one. This consumes up to 100x more compute than traditional inference.
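Best-of-N sampling is one simple form of test-time scaling, and it shows why the compute bill grows: every extra candidate is another full inference pass. This is a minimal sketch, not how any particular reasoning model works. `generate` and `score` are hypothetical stand-ins for a model and a verifier, and tokens are approximated as whitespace-separated words.

```python
from typing import Callable


def best_of_n(
    prompt: str,
    n: int,
    generate: Callable[[str, int], str],
    score: Callable[[str], float],
) -> tuple[str, int]:
    """Sample n candidates and keep the highest-scoring one.

    Returns (best_response, total_tokens_generated). Tokens are approximated
    as whitespace-separated words for illustration.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    best, best_score, tokens_used = "", float("-inf"), 0
    for i in range(n):
        candidate = generate(prompt, i)        # one full inference pass per candidate
        tokens_used += len(candidate.split())  # crude token count
        s = score(candidate)
        if s > best_score:                     # keep the best-scoring candidate so far
            best, best_score = candidate, s
    return best, tokens_used


# Hypothetical toy model: candidate i is "answer i" followed by 8 reasoning steps.
def toy_generate(prompt: str, seed: int) -> str:
    return " ".join(["answer", str(seed)] + ["step"] * 8)


# Hypothetical toy verifier: prefers the answer closest to 3.
def toy_score(candidate: str) -> float:
    return -abs(int(candidate.split()[1]) - 3)


_, one_pass = best_of_n("q", 1, toy_generate, toy_score)
best, eight_pass = best_of_n("q", 8, toy_generate, toy_score)
print(best.split()[1], eight_pass // one_pass)  # 3 8
```

In this toy, compute grows linearly with N. Reasoning models can also spend extra compute on longer chains of thought within each candidate, so the sketch does not reproduce the multiplier cited above; it only shows where the extra cost comes from.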

Traditional data centers aren't designed for this new era of AI. AI factories are purpose-built to optimize and sustain this massive demand for compute, providing an ideal path forward for AI inference and deployment.

Reshaping Industries and Economies With Tokens

Across the world, governments and enterprises are racing to build AI factories to spur economic growth, innovation and efficiency.

The European High Performance Computing Joint Undertaking recently announced plans to build seven AI factories in collaboration with 17 European Union member nations.

This follows a wave of AI factory investments worldwide, as enterprises and countries accelerate AI-driven economic growth across every industry and region.

These initiatives underscore a global reality: AI factories are quickly becoming essential national infrastructure, on par with telecommunications and energy.

Inside an AI Factory: Where Intelligence Is Manufactured

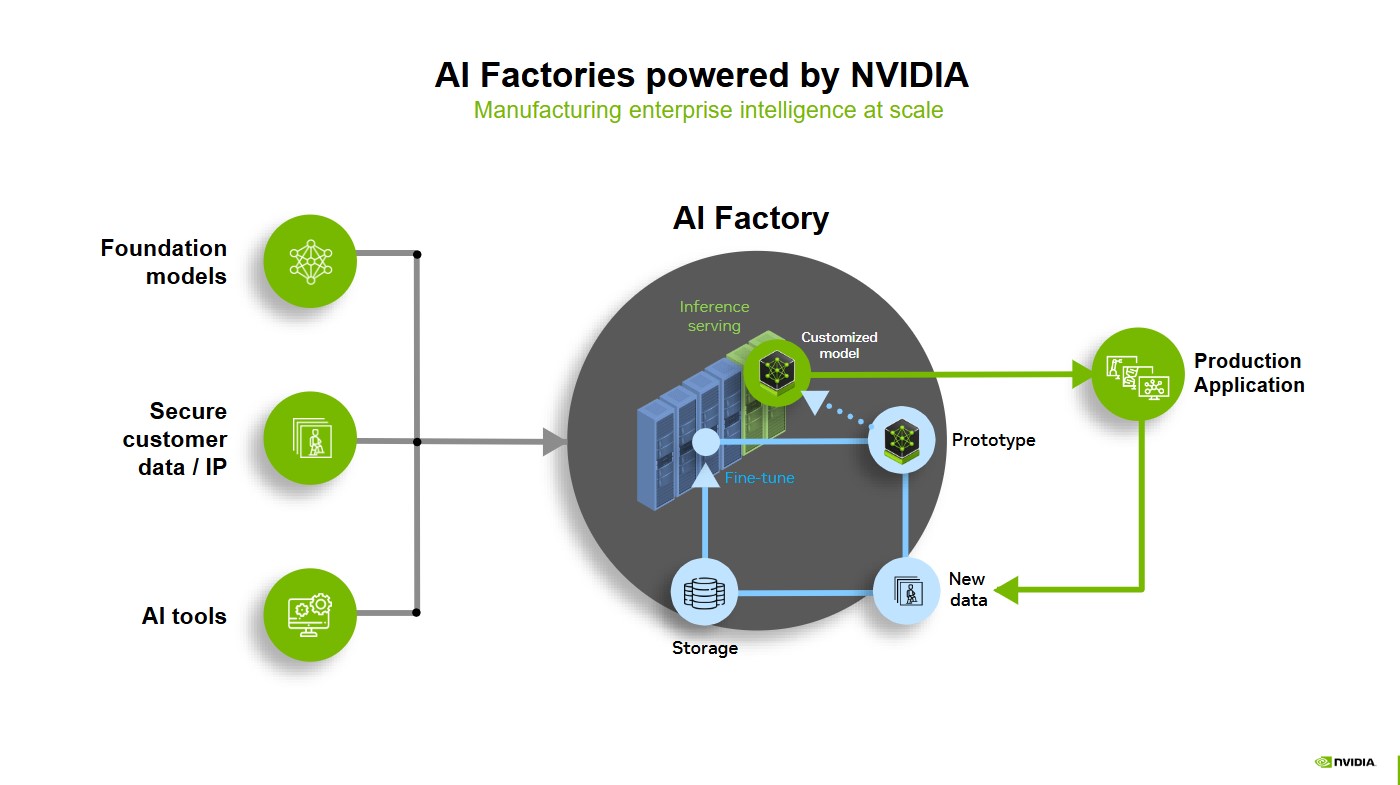

Foundation models, secure customer data and AI tools provide the raw materials for fueling AI factories, where inference serving, prototyping and fine-tuning shape powerful, customized models ready to be put into production.

As these models are deployed into real-world applications, they continuously learn from new data, which is stored, refined and fed back into the system through a data flywheel. This cycle of optimization keeps AI adaptive, efficient and continually improving, driving enterprise intelligence at an unprecedented scale.

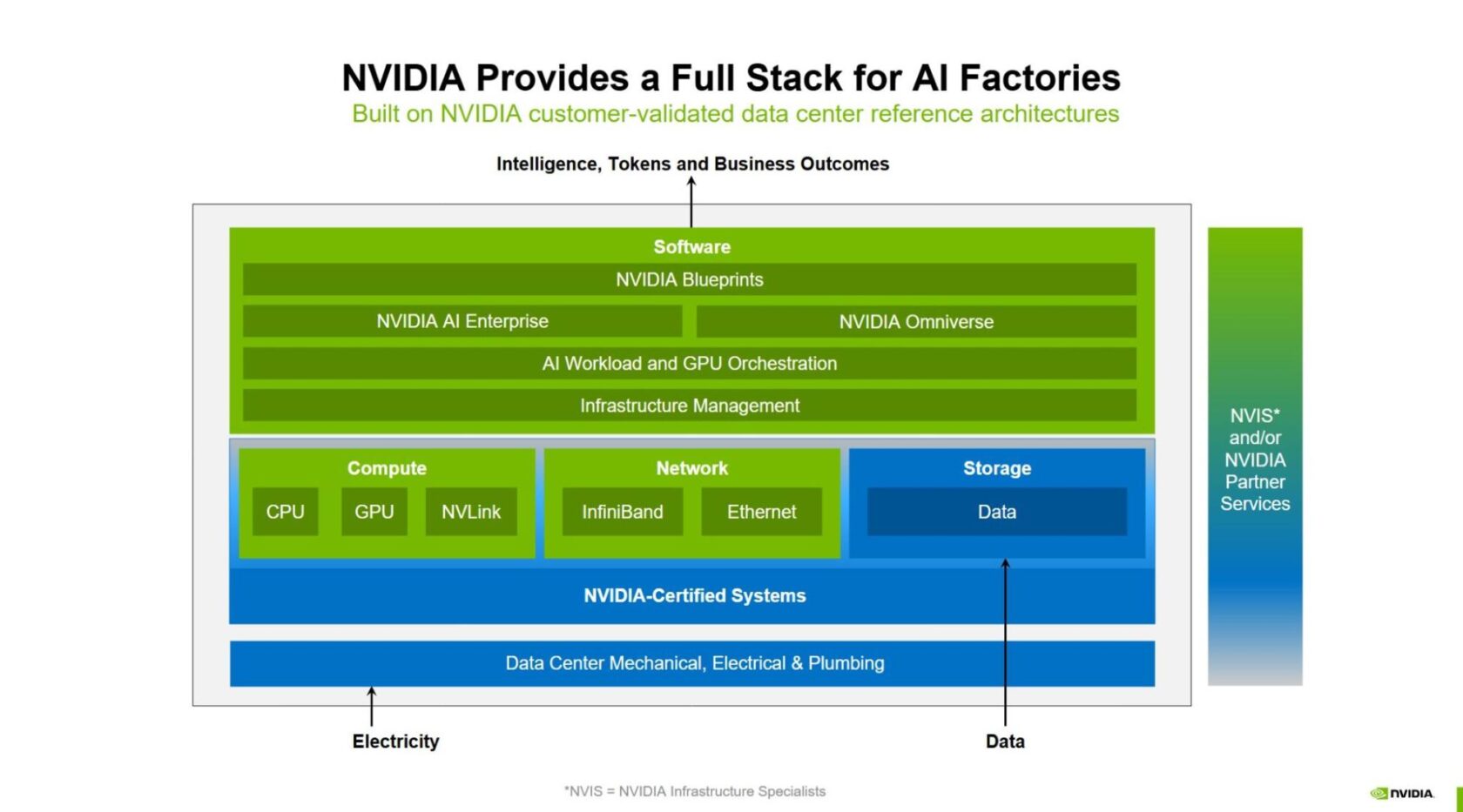

An AI Factory Advantage With Full-Stack NVIDIA AI

NVIDIA delivers a complete, integrated AI factory stack where every layer, from the silicon to the software, is optimized for training, fine-tuning and inference at scale. This full-stack approach ensures enterprises can deploy AI factories that are cost-effective, high-performing and future-proofed for the exponential growth of AI.

With its ecosystem partners, NVIDIA has created building blocks for the full-stack AI factory, offering:

- Powerful compute performance

- Advanced networking

- Infrastructure management and workload orchestration

- The largest AI inference ecosystem

- Storage and data platforms

- Blueprints for design and optimization

- Reference architectures

- Flexible deployment for every enterprise

Powerful Compute Performance

The heart of any AI factory is its compute power. From NVIDIA Hopper to NVIDIA Blackwell, NVIDIA provides the world's most powerful accelerated computing for this new industrial revolution. With the NVIDIA Blackwell Ultra-based GB300 NVL72 rack-scale solution, AI factories can achieve up to 50x the output for AI reasoning, setting a new standard for efficiency and scale.

NVIDIA DGX SuperPOD is the exemplar of the turnkey AI factory for enterprises, integrating the best of NVIDIA accelerated computing. NVIDIA DGX Cloud provides an AI factory that delivers NVIDIA accelerated compute with high performance in the cloud.

Global systems partners are building full-stack AI factories for their customers based on NVIDIA accelerated computing, now including the NVIDIA GB200 NVL72 and GB300 NVL72 rack-scale solutions.

Advanced Networking

Moving intelligence at scale requires seamless, high-performance connectivity across the entire AI factory stack. NVIDIA NVLink and NVLink Switch enable high-speed, multi-GPU communication, accelerating data movement within and across nodes.

AI factories also demand a robust network backbone. The NVIDIA Quantum InfiniBand, NVIDIA Spectrum-X Ethernet and NVIDIA BlueField networking platforms reduce bottlenecks, ensuring efficient, high-throughput data exchange across massive GPU clusters. This end-to-end integration is essential for scaling out AI workloads to million-GPU levels, enabling breakthrough performance in training and inference.

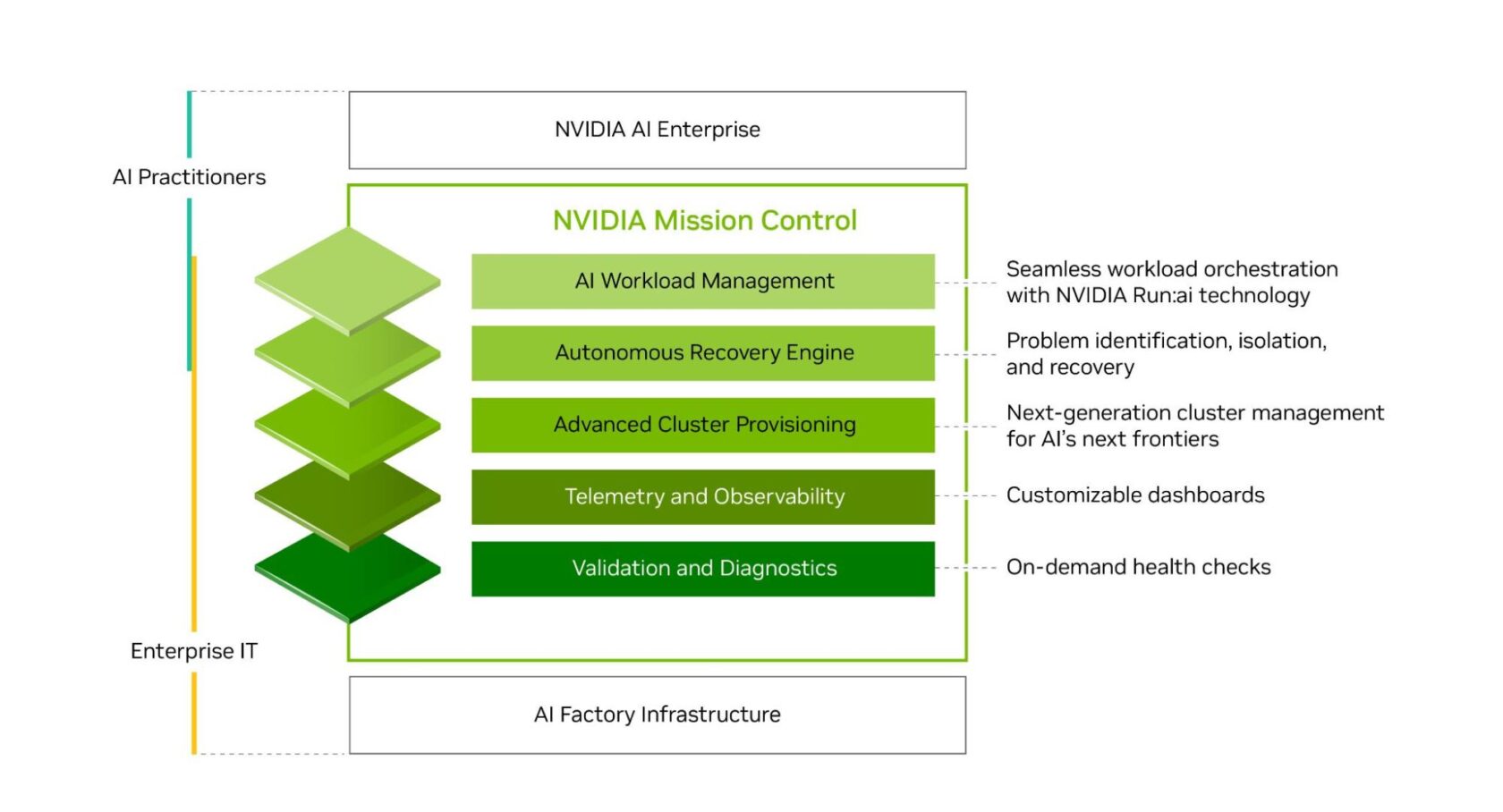

Infrastructure Management and Workload Orchestration

Businesses need a way to harness the power of AI infrastructure with the agility, efficiency and scale of a hyperscaler, but without the burdens of cost, complexity and expertise placed on IT.

With NVIDIA Run:ai, organizations can benefit from seamless AI workload orchestration and GPU management, optimizing resource utilization while accelerating AI experimentation and scaling workloads. NVIDIA Mission Control software, which includes NVIDIA Run:ai technology, streamlines AI factory operations from workloads to infrastructure while providing full-stack intelligence that delivers world-class infrastructure resiliency.

The Largest AI Inference Ecosystem

AI factories need the right tools to turn data into intelligence. The NVIDIA AI inference platform, spanning the NVIDIA TensorRT ecosystem, NVIDIA Dynamo and NVIDIA NIM microservices (all part, or soon to be part, of the NVIDIA AI Enterprise software platform), provides the industry's most comprehensive suite of AI acceleration libraries and optimized software. It delivers maximum inference performance, ultra-low latency and high throughput.

Storage and Data Platforms

Data fuels AI applications, but the rapidly growing scale and complexity of enterprise data often make it too costly and time-consuming to harness effectively. To thrive in the AI era, enterprises must unlock the full potential of their data.

The NVIDIA AI Data Platform is a customizable reference design for building a new class of AI infrastructure for demanding AI inference workloads. NVIDIA-Certified Storage partners are collaborating with NVIDIA to create customized AI data platforms that can harness enterprise data to reason and respond to complex queries.

Blueprints for Design and Optimization

To design and optimize AI factories, teams can use the NVIDIA Omniverse Blueprint for AI factory design and operations. The blueprint enables engineers to design, test and optimize AI factory infrastructure before deployment using digital twins. By reducing risk and uncertainty, the blueprint helps prevent costly downtime, a critical factor for AI factory operators.

For a 1 gigawatt-scale AI factory, every day of downtime can cost over $100 million. By solving complexity upfront and enabling siloed teams in IT, mechanical, electrical, power and network engineering to work in parallel, the blueprint accelerates deployment and ensures operational resilience.
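Pro-rating the $100 million-per-day figure shows what shorter outages cost. This sketch assumes the cost accrues linearly across the day, which is a simplification. Because the figure is "over" $100 million, the results are lower bounds.

```python
def downtime_cost(cost_per_day_usd: float, hours_down: float) -> float:
    """Linearly pro-rate a per-day downtime cost to an outage length in hours."""
    return cost_per_day_usd * hours_down / 24.0


DAILY_COST_USD = 100e6  # per-day figure cited for a 1 gigawatt-scale AI factory

per_hour = downtime_cost(DAILY_COST_USD, 1.0)
per_minute = per_hour / 60.0
print(f"per hour:   ${per_hour:,.0f}")    # per hour:   $4,166,667
print(f"per minute: ${per_minute:,.0f}")  # per minute: $69,444
print(f"4-hour outage: ${downtime_cost(DAILY_COST_USD, 4.0):,.0f}")  # 4-hour outage: $16,666,667
```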

Reference Architectures

NVIDIA Enterprise Reference Architectures and NVIDIA Cloud Partner Reference Architectures provide a roadmap for partners designing and deploying AI factories. They help enterprises and cloud providers build scalable, high-performance and secure AI infrastructure based on NVIDIA-Certified Systems with the NVIDIA AI software stack and partner ecosystem.

Every layer of the AI factory stack relies on efficient computing to meet growing AI demands. NVIDIA accelerated computing serves as the foundation across the stack, delivering the highest performance per watt so that AI factories operate at peak energy efficiency. With energy-efficient architecture and liquid cooling, businesses can scale AI while keeping energy costs in check.
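For inference, performance per watt can be expressed as tokens per joule, since a watt is one joule per second. The sketch below converts that into an electricity cost per million tokens. All numbers in the usage example are hypothetical placeholders, not measurements of any NVIDIA system. The optional `pue` multiplier (power usage effectiveness, total facility energy divided by IT equipment energy) is an added assumption for folding in overhead such as cooling.

```python
def tokens_per_joule(tokens_per_second: float, power_watts: float) -> float:
    """Inference performance per watt: (tokens/s) / (J/s) = tokens per joule."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return tokens_per_second / power_watts


def energy_cost_per_million_tokens(
    tokens_per_second: float,
    power_watts: float,
    usd_per_kwh: float,
    pue: float = 1.0,  # assumed facility overhead multiplier (1.0 = IT power only)
) -> float:
    """Electricity cost of generating one million tokens at a steady operating point."""
    joules = 1e6 / tokens_per_joule(tokens_per_second, power_watts) * pue
    kwh = joules / 3.6e6  # 1 kWh = 3.6 million joules
    return kwh * usd_per_kwh


# Hypothetical operating points, not measured figures.
a = energy_cost_per_million_tokens(10_000, 10_000, usd_per_kwh=0.10)
b = energy_cost_per_million_tokens(30_000, 15_000, usd_per_kwh=0.10)
print(f"A: ${a:.4f} per 1M tokens")  # A: $0.0278 per 1M tokens
print(f"B: ${b:.4f} per 1M tokens")  # B: $0.0139 per 1M tokens
```

System B doubles tokens per joule and therefore halves the energy cost per token, which is the sense in which higher performance per watt keeps energy costs in check.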

Flexible Deployment for Every Enterprise

With NVIDIA's full-stack technologies, enterprises can easily build and deploy AI factories in line with customers' preferred IT consumption models and operational needs.

Some organizations opt for on-premises AI factories to maintain full control over data and performance, while others use cloud-based solutions for scalability and flexibility. Many also turn to their trusted global systems partners for pre-integrated solutions that accelerate deployment.

On Premises

NVIDIA DGX SuperPOD is a turnkey AI factory infrastructure solution that provides accelerated infrastructure with scalable performance for the most demanding AI training and inference workloads. It features a design-optimized combination of AI compute, network fabric, storage and NVIDIA Mission Control software, empowering enterprises to get AI factories up and running in weeks instead of months, with best-in-class uptime, resiliency and utilization.

AI factory solutions are also offered through the NVIDIA global ecosystem of enterprise technology partners with NVIDIA-Certified Systems. They deliver leading hardware and software technology, combined with data center systems expertise and liquid-cooling innovations, to help enterprises de-risk their AI endeavors and accelerate the return on investment of their AI factory implementations.

These global systems partners are providing full-stack solutions based on NVIDIA reference architectures, integrated with NVIDIA accelerated computing, high-performance networking and AI software, to help customers successfully deploy AI factories and manufacture intelligence at scale.

In the Cloud

For enterprises looking to use a cloud-based solution for their AI factory, NVIDIA DGX Cloud delivers a unified platform on leading clouds to build, customize and deploy AI applications. Every layer of DGX Cloud is optimized and fully managed by NVIDIA, offering the best of NVIDIA AI in the cloud. It features enterprise-grade software and large-scale, contiguous clusters on leading cloud providers, offering scalable compute resources ideal for even the most demanding AI training workloads.

DGX Cloud also includes a dynamic and scalable serverless inference platform that delivers high throughput for AI tokens across hybrid and multi-cloud environments, significantly reducing infrastructure complexity and operational overhead.

By providing a full-stack platform that integrates hardware, software, ecosystem partners and reference architectures, NVIDIA helps enterprises build AI factories that are cost-effective, scalable and high-performing, equipping them to meet the next industrial revolution.

Learn more about NVIDIA AI factories.
