Advancing AI requires a full-stack approach, with a strong foundation of computing infrastructure, including accelerated processors and networking technologies, connected to optimized compilers, algorithms and applications.

NVIDIA Research is innovating across this spectrum, supporting virtually every industry in the process. At this week's International Conference on Learning Representations (ICLR), taking place April 24-28 in Singapore, more than 70 NVIDIA-authored papers introduce AI developments with applications in autonomous vehicles, healthcare, multimodal content creation, robotics and more.

“ICLR is among the world’s most impactful AI conferences, where researchers introduce important technical innovations that move every industry forward,” said Bryan Catanzaro, vice president of applied deep learning research at NVIDIA. “The research we’re contributing this year aims to accelerate every level of the computing stack to amplify the impact and utility of AI across industries.”

Research That Tackles Real-World Challenges

Several NVIDIA-authored papers at ICLR cover groundbreaking work in multimodal generative AI and novel methods for AI training and synthetic data generation, including:

- Fugatto: The world’s most flexible audio generative AI model, Fugatto generates or transforms any mix of music, voices and sounds described with prompts using any combination of text and audio files. Other NVIDIA models at ICLR improve audio large language models (LLMs) to better understand speech.

- HAMSTER: This paper demonstrates that a hierarchical design for vision-language-action models can improve their ability to transfer knowledge from off-domain fine-tuning data (inexpensive data that doesn’t need to be collected on actual robot hardware) to improve a robot’s skills in testing scenarios.

- Hymba: This family of small language models uses a hybrid model architecture to create LLMs that combine the benefits of transformer models and state space models, enabling high-resolution recall, efficient context summarization and commonsense reasoning tasks. With its hybrid approach, Hymba improves throughput by 3x and reduces cache by almost 4x without sacrificing performance.

- LongVILA: This training pipeline enables efficient visual language model training and inference for long video understanding. Training AI models on long videos is compute- and memory-intensive, so this paper introduces a system that efficiently parallelizes long video training and inference, with training scalability up to 2 million tokens on 256 GPUs. LongVILA achieves state-of-the-art performance across nine popular video benchmarks.

- LLaMaFlex: This paper introduces a new zero-shot generation technique to create a family of compressed LLMs based on one large model. The researchers found that LLaMaFlex can generate compressed models that are as accurate as, or better than, state-of-the-art pruned, flexible and trained-from-scratch models. This capability could be applied to significantly reduce the cost of training model families compared with techniques like pruning and knowledge distillation.

- Proteina: This model can generate diverse and designable protein backbones, the framework that holds a protein together. It uses a transformer model architecture with up to 5x as many parameters as previous models.

- SRSA: This framework addresses the challenge of teaching robots new tasks using a preexisting skill library, so instead of learning from scratch, a robot can apply and adapt its existing skills to the new task. By developing a framework to predict which preexisting skill would be most relevant to a new task, the researchers were able to improve zero-shot success rates on unseen tasks by 19%.

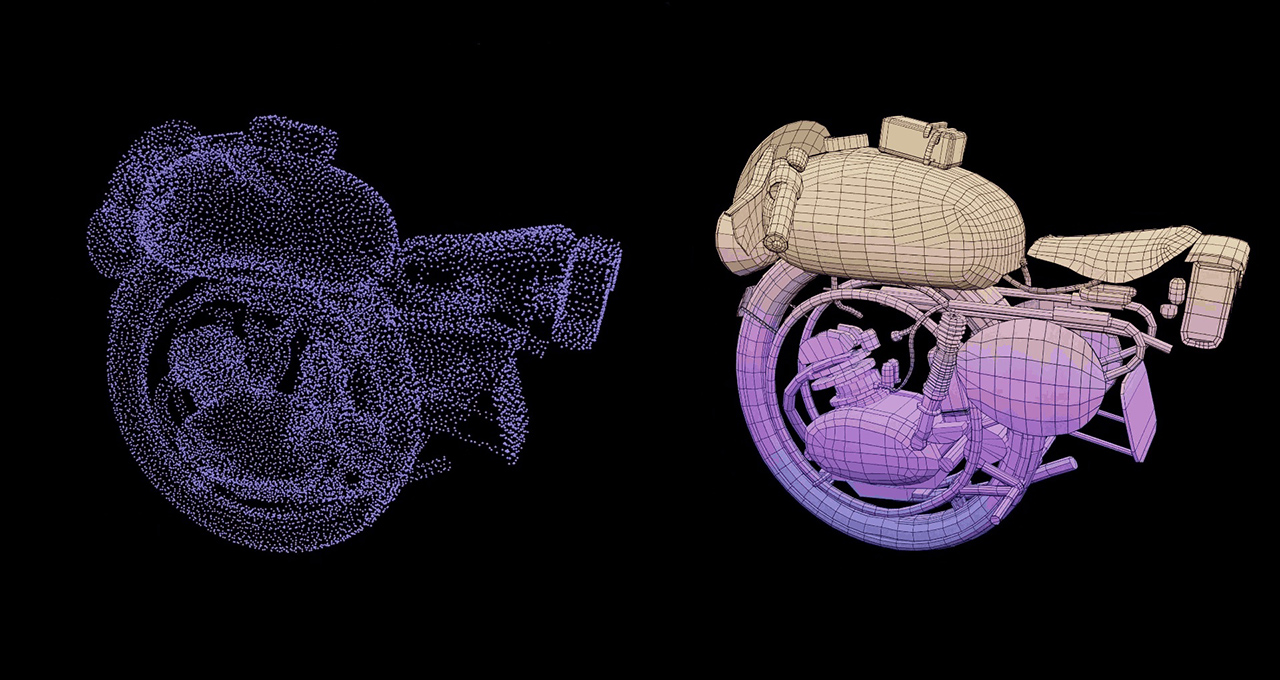

- STORM: This model can reconstruct dynamic outdoor scenes, like cars driving or trees swaying in the wind, with a precise 3D representation inferred from just a few snapshots. The model, which can reconstruct large-scale outdoor scenes in 200 milliseconds, has potential applications in autonomous vehicle development.

Discover the latest work from NVIDIA Research, a global team of around 400 experts in fields including computer architecture, generative AI, graphics, self-driving cars and robotics.