In lots of Synthetic Intelligence (AI) functions reminiscent of Pure Language Processing (NLP) and Laptop Imaginative and prescient (CV), there’s a want for a unified pre-training framework (e.g. Florence-2) that may operate autonomously. The present datasets for specialised functions nonetheless want human labeling, which limits the event of foundational fashions for complicated CV-related duties.

Microsoft researchers created the Florence-2 mannequin (2023) that’s able to dealing with many pc imaginative and prescient duties. It efficiently solves the dearth of a unified mannequin structure and weak coaching knowledge.

About us: Viso.ai offers the end-to-end Laptop Imaginative and prescient Infrastructure, Viso Suite. It’s a strong all-in-one resolution for AI imaginative and prescient. Corporations worldwide use it to develop and ship real-world functions dramatically quicker. Get a demo in your firm.

Historical past of Florence-2 mannequin

In a nutshell, basis fashions are fashions which are pre-trained on some common duties (typically in self-supervised mode). In any other case, it’s unattainable to seek out a whole lot of labeled knowledge for absolutely supervised studying. They are often simply tailored to varied new duties (with or with out fine-tuning/extra coaching), inside context studying.

Researchers launched the time period ‘basis’ as a result of they’re the foundations for a lot of different issues/challenges. There are benefits to this course of (it’s straightforward to construct one thing new) and drawbacks (many will endure from a nasty basis).

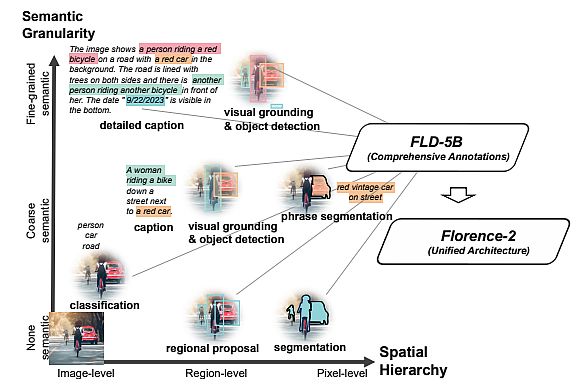

These fashions are usually not elementary for AI since they don’t seem to be a foundation for understanding or constructing intelligence or consciousness. To use basis fashions in CV duties, Microsoft researchers divided the vary of duties into three teams:

- House (scene classification, object detection)

- Time (statics, dynamics)

- Modality (RGB, depth).

Then they outlined the muse mannequin for CV as a pre-trained mannequin and adapters for fixing all issues on this House-Time-Modality with the flexibility to switch the zero studying sort.

They offered their work as a brand new paradigm for constructing a imaginative and prescient basis mannequin and referred to as it Florence-2 (the birthplace of the Renaissance). They take into account it an ecosystem of 4 giant areas:

- Information gathering

- Mannequin pre-training

- Activity diversifications

- Coaching infrastructure

What’s the Florence-2 mannequin?

Xiao et al. (Microsoft, 2023) developed the Florence-2 consistent with NLP goals of versatile mannequin improvement with a standard base. Florence-2 combines a multi-sequence studying paradigm and customary imaginative and prescient language modeling for a number of CV duties.

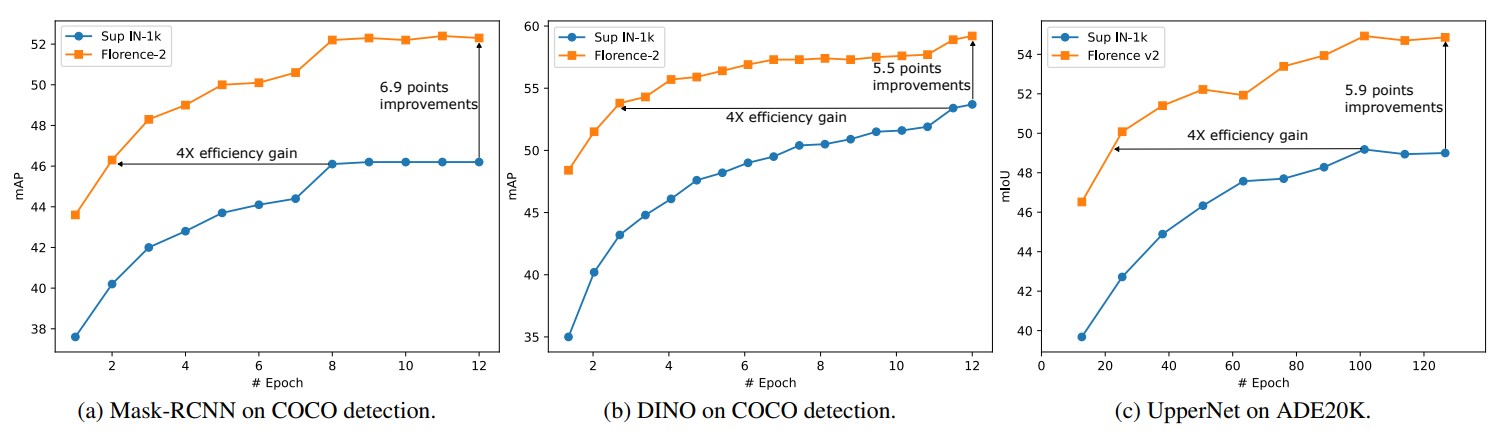

Florence-2 redefines efficiency requirements with its distinctive zero-shot and fine-tuning capabilities. It performs duties like captioning, expression interpretation, visible grounding, and object detection. Moreover, Florence-2 surpasses present specialised fashions and units new benchmarks utilizing publicly out there human-annotated knowledge.

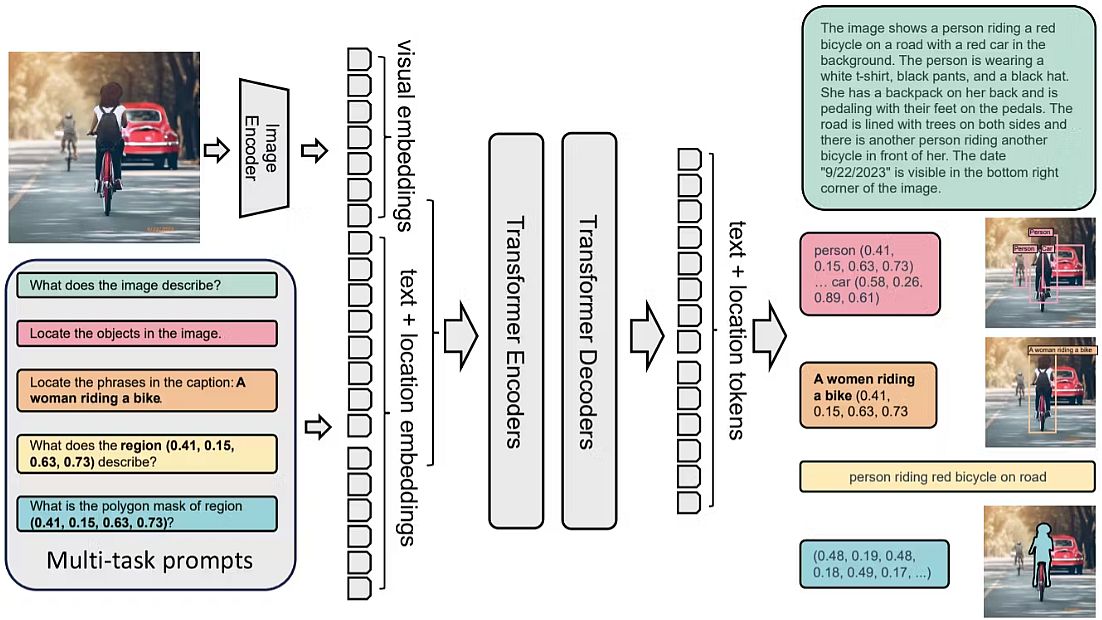

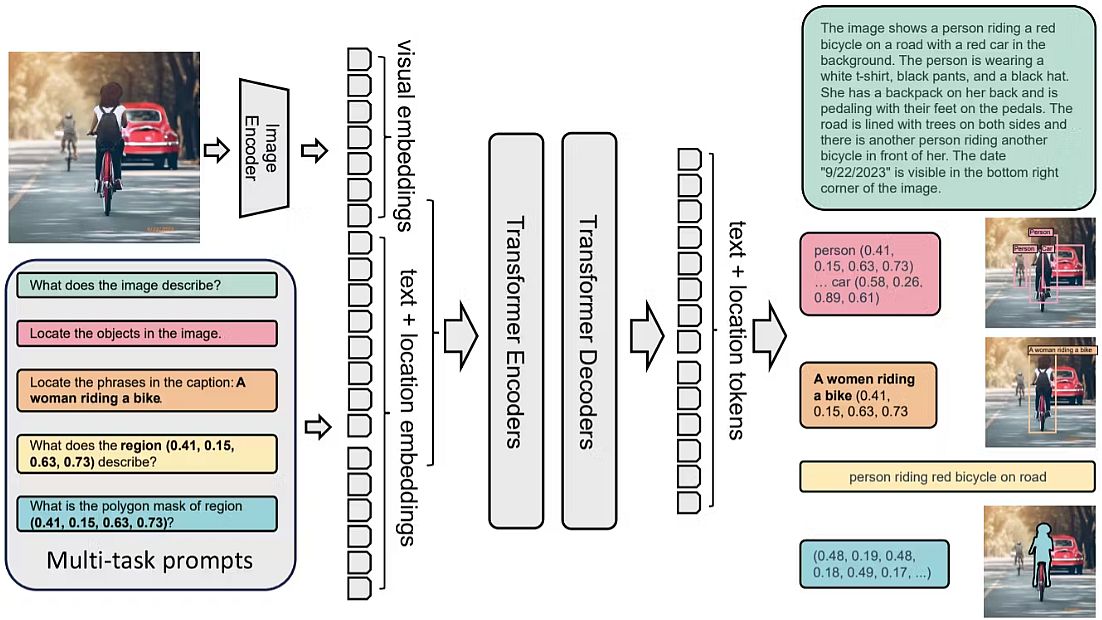

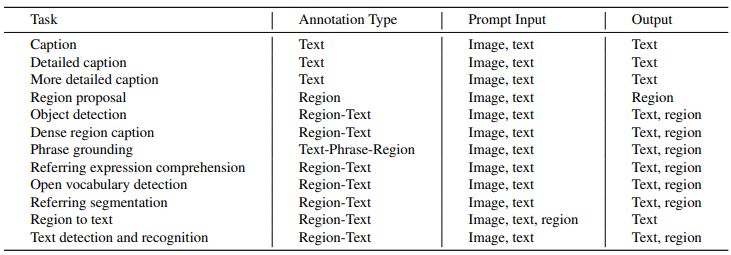

Florence-2 makes use of a multi-sequence structure to unravel varied pc imaginative and prescient duties. Each process is dealt with as a transiting drawback, through which the mannequin creates the suitable output reply given an enter picture and a task-specific immediate.

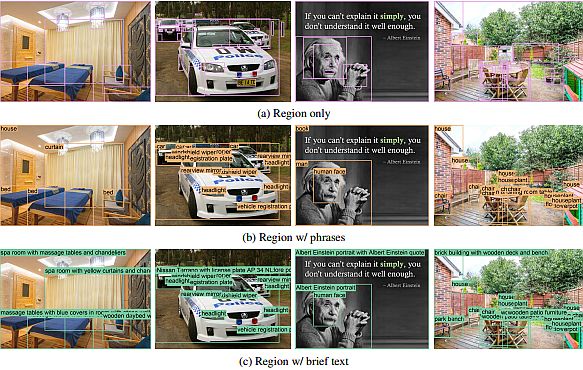

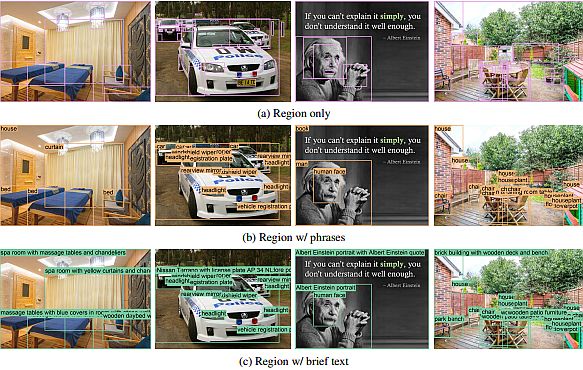

Duties can include geographical or textual content knowledge, and the mannequin adjusts its processing in keeping with the duty’s necessities. Researchers included location tokens within the tokenizer’s vocabulary record for duties particular to a given area. These tokens present a number of codecs, together with field, quad, and polygon illustration.

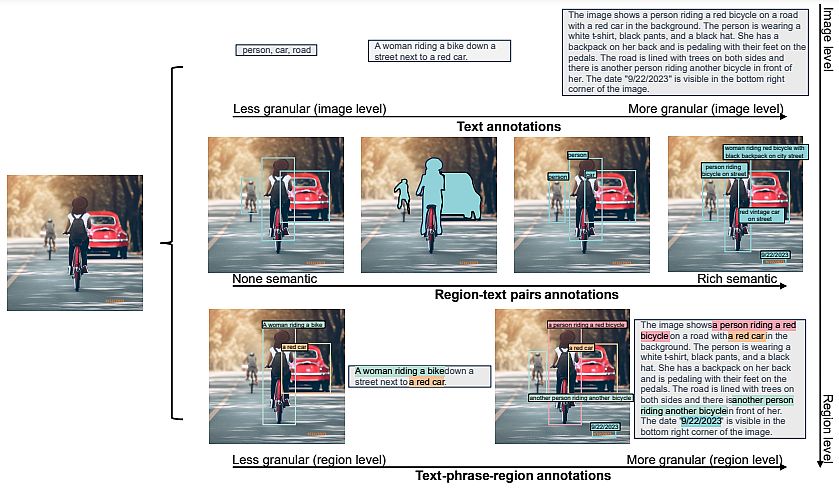

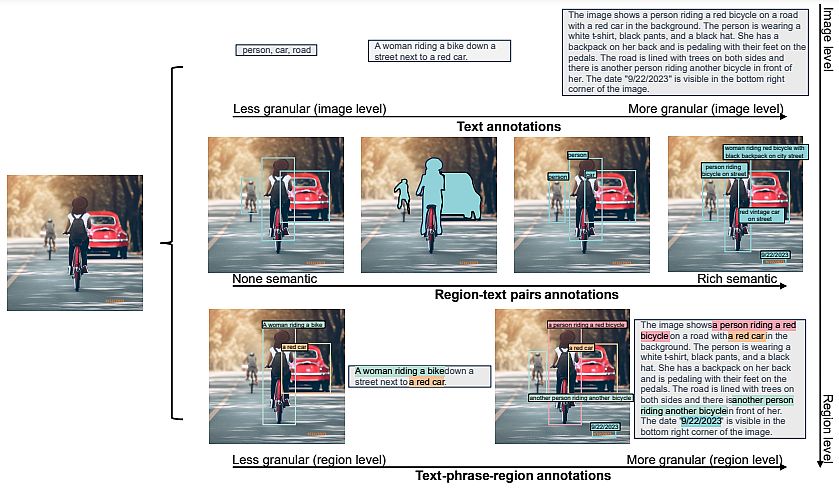

- Understanding photographs, and language descriptions that seize high-level semantics and facilitate a radical comprehension of visuals. Exemplar duties embrace picture classification, captioning, and visible query answering.

- Area recognition duties, enabling object recognition and entity localization inside photographs. They seize relationships between objects and their spatial context. As an example, object detection, occasion segmentation, and referring expression are such duties.

- Granular visual-semantic duties require a granular understanding of each textual content and picture. They contain finding the picture areas that correspond to the textual content phrases, reminiscent of objects, attributes, or relations.

Florence-2 Structure and Information Engine

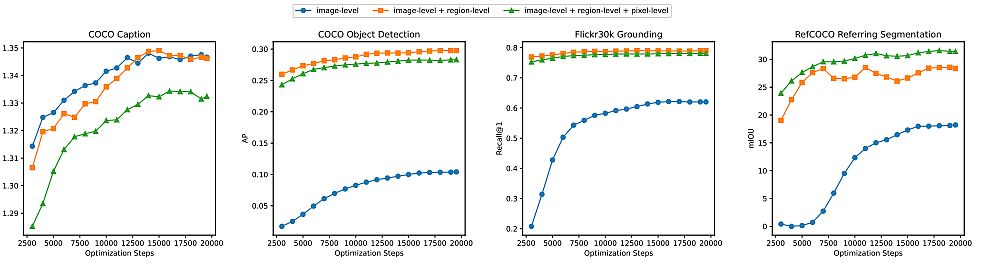

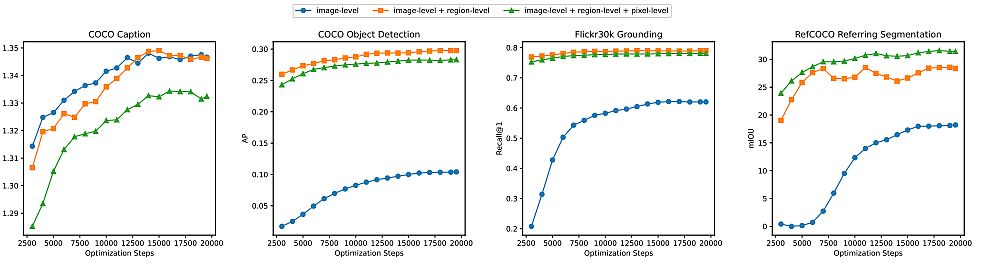

Being a common illustration mannequin, Florence-2 can resolve completely different CV duties with a single set of weights and a unified illustration structure. Because the determine under reveals, Florence-2 applies a multi-sequence studying algorithm, unifying all duties underneath a standard CV modeling objective.

The one mannequin takes photographs coupled with process prompts as directions and generates the fascinating labels in textual content varieties. It makes use of a imaginative and prescient encoder to transform photographs into visible token info. To generate the response, the tokens are paired with textual content info and processed by a transformer-based en/de-coder.

Microsoft researchers formulated every process as a translation drawback: given an enter picture and a task-specific immediate, they created the correct output response. Relying on the duty, the immediate and response might be both textual content or area.

- Textual content: When the immediate or reply is apparent textual content with out particular formatting, they maintained it of their ultimate multi-sequence format.

- Area: For region-specific duties, they added location tokens to the token’s vocabulary record, representing numerical coordinates. They created 1000 bins and represented areas utilizing codecs appropriate for the duty necessities.

Information Engine in Florence-2

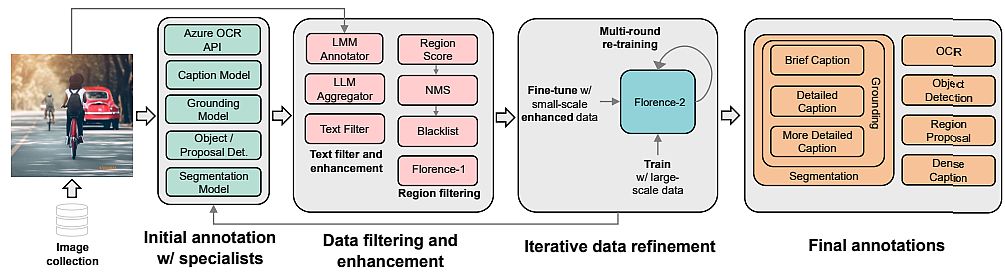

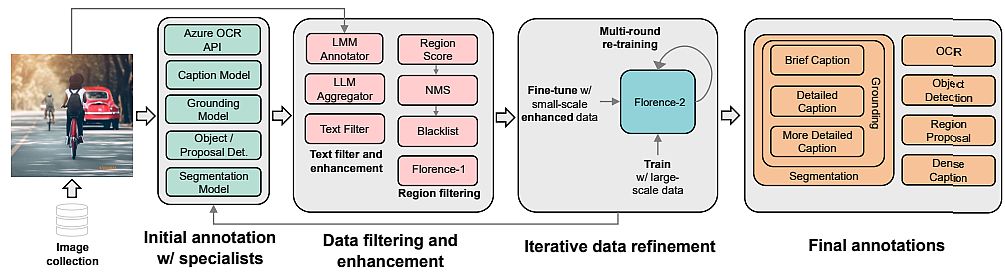

To coach their Florence-2 structure, researchers utilized a unified, large-volume, multitask dataset containing completely different picture knowledge points. Due to the dearth of such knowledge, they’ve developed a brand new multitask picture dataset.

Technical Challenges within the Mannequin Improvement

There are difficulties with picture descriptions as a result of completely different photographs find yourself underneath one description, and in FLD-900M for 350 M descriptions, there’s multiple picture.

This impacts the extent of the coaching process. In commonplace descriptive studying, it’s assumed that every image-text pair has a novel description, and all different descriptions are thought of damaging examples.

The researchers used unified image-text contrastive studying (UniCL, 2022). This Contrastive Studying is unified within the sense that in a standard image-description-label house it combines two studying paradigms:

- Discriminative (mapping a picture to a label, supervised studying) and

- Pre-training in an image-text (mapping an outline to a novel label, contrastive studying).

The structure has a picture encoder and a textual content encoder. The characteristic vectors from the encoders’ outputs are normalized and fed right into a bidirectional goal operate. Moreover, one element is chargeable for supervised image-to-text contrastive loss, and the second is in the wrong way for supervised text-to-image contrastive loss.

The fashions themselves are a typical 12-layer textual content transformer for textual content (256 M parameters) and a hierarchical Imaginative and prescient Transformer for photographs. It’s a particular modification of the Swin Transformer with convolutional embeddings like CvT, (635 M parameters).

In complete, the mannequin has 893 M parameters. They educated for 10 days on 512 machines A100-40Gb. After pre-training, they educated Florence-2 with a number of varieties of adapters.

Experiments and Outcomes

Researchers educated Florence-2 on finer-grained representations by detection. To do that, they added the dynamic head adapter, which is a specialised consideration mechanism for the pinnacle that does detection. They did recognition with the tensor options, by degree, place, and channel.

They educated on the FLOD-9M dataset (Florence Object detection Dataset), into which a number of current ones have been merged, together with COCO, LVIS, and OpenImages. Furthermore, they generated pseudo-bounding packing containers. In complete, there have been 8.9M photographs, 25190 object classes, and 33.4M bounding packing containers.

This was educated on image-text matching (ITM) loss and the traditional Roberto MLM loss. Then in addition they fine-tuned it for the VQA process and one other adapter for video recognition, the place they took the CoSwin picture encoder and changed 2D layers with 3D ones, convolutions, merge operators, and so forth.

Throughout initialization, they duplicated the pre-trained weights from 2D into new ones. There was some extra coaching right here the place fine-tuning for the duty was instantly executed.

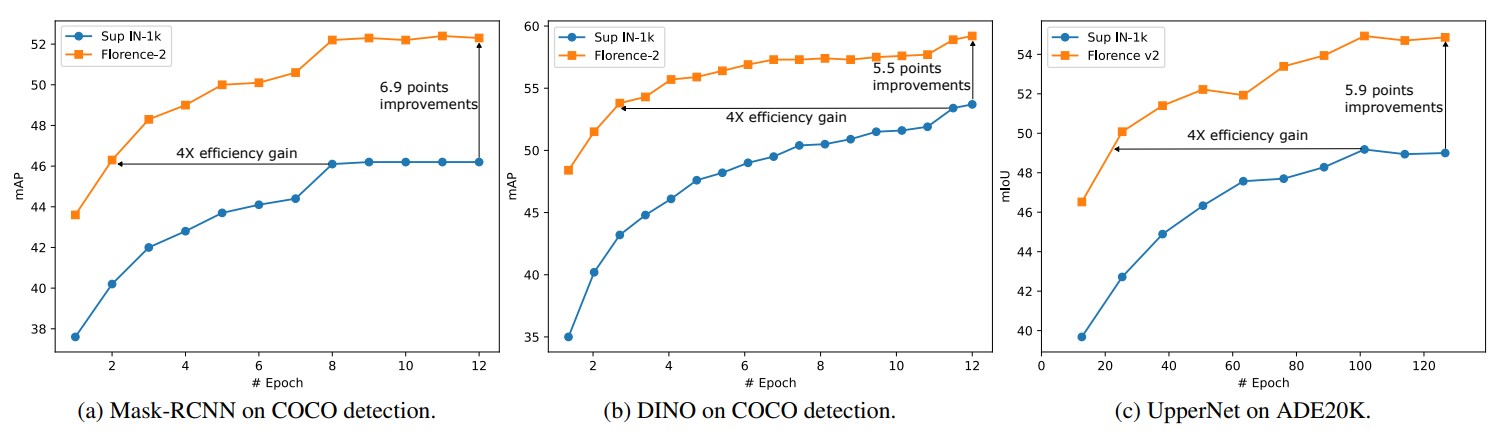

In fine-tuning Florence-2 underneath ImageNet, it’s barely worse than SoTA, but in addition 3 times smaller. For a number of photographs of cross-domain classification, it beat the benchmark chief, though the latter used ensemble and different methods.

For image-text retrieval in zero-shot, it matches or surpasses earlier outcomes, and in fine-tuning, it beats with a considerably smaller variety of epochs. It beats in object detection, VQA, and video motion recognition too.

Purposes of Florence-2 in Numerous Industries

Mixed text-region-image annotation might be helpful in a number of industries and right here we enlist its potential functions:

Medical Imaging

Medical practitioners use imaging with MRI, X-rays, and CT scans to detect anatomical options and anomalies. Then they apply text-image annotation to categorise and annotate medical photographs. This aids within the extra exact and efficient analysis and remedy of sufferers.

Florence-2 with its text-image annotation can acknowledge patterns and find fractures, tumors, abscesses, and quite a lot of different circumstances. Mixed annotation has the potential to scale back affected person wait instances, liberate pricey scanner slots, and improve the accuracy of diagnoses.

Transport

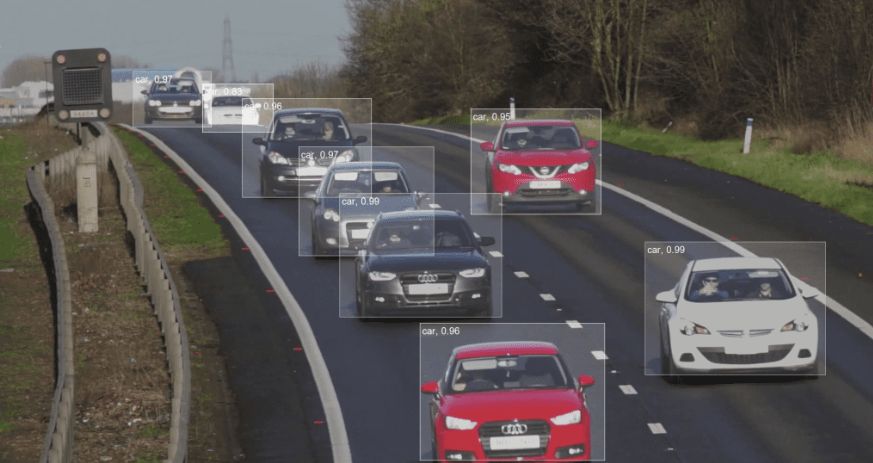

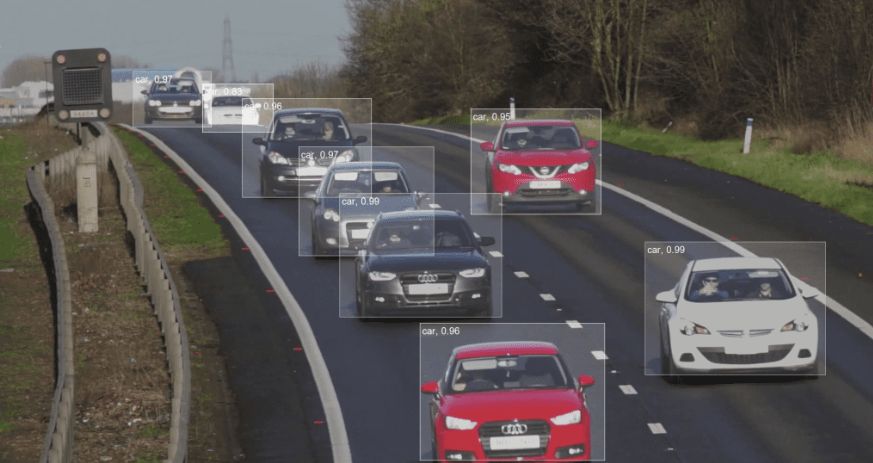

Textual content-image annotation is essential within the improvement of visitors and transport techniques. With the assistance of Florence-2 annotation, autonomous vehicles can acknowledge and interpret their environment, enabling them to make appropriate selections.

Annotation helps to differentiate several types of roads, reminiscent of metropolis streets and highways, and to determine gadgets (pedestrians, visitors indicators, and different vehicles). Figuring out object borders, places, and orientations, in addition to tagging automobiles, folks, visitors indicators, and highway markings, are essential duties.

Agriculture

Precision agriculture is a comparatively new subject that mixes conventional farming strategies with expertise to extend manufacturing, profitability, and sustainability. It makes use of robotics, drones, GPS sensors, and autonomous automobiles to hurry up totally handbook farming operations.

Textual content-image annotation is utilized in many duties, together with enhancing soil circumstances, forecasting agricultural yields, and assessing plant well being. Florence-2 can play a major position in these processes by enabling CV algorithms to acknowledge specific indicators like human farmers.

Safety and Surveillance

Textual content-image annotation makes use of 2D/3D bounding packing containers to determine people or objects from the gang. Florence-2 exactly labels the folks or gadgets by drawing a field round them. By observing human behaviors and placing them in distinct boundary packing containers, it may detect crimes.

The cameras along with labeled practice datasets are able to recognizing faces. Cameras determine folks along with automobile varieties, colours, weapons, instruments, and different equipment, which Florence-2 will annotate.

What’s subsequent for Florence-2?

Florence-2 units the stage for the event of pc imaginative and prescient fashions sooner or later. It reveals an unlimited potential for multitask studying and the combination of textual and visible info, making it an revolutionary CV mannequin. Subsequently, it offers a productive resolution for a variety of functions with out requiring a whole lot of fine-tuning.

The mannequin is able to dealing with duties starting from granular semantic changes to picture understanding. By showcasing the effectivity of a number of sequence studying, Florence-2’s structure raises the usual for full illustration studying.

Florence-2’s performances present alternatives for researchers to go farther into the fields of multi-task studying and cross-modal recognition as we comply with the quickly altering AI panorama.

Examine different CV fashions right here: