Following the groundbreaking impact of DeepSeek R1, DeepSeek AI continues to push the boundaries of innovation with its newest offering: Smallpond. This lightweight data processing framework combines the power of DuckDB for SQL analytics with 3FS for high-performance distributed storage, and is designed to handle petabyte-scale datasets efficiently. Smallpond promises to simplify data processing for AI and big data applications by eliminating the need for long-running services and complex infrastructure, marking another significant leap forward from the DeepSeek team. In this article, we’ll explore the features, components, and applications of DeepSeek AI’s Smallpond framework, and also learn how to use it.

Learning Objectives

- Learn what DeepSeek Smallpond is and how it extends DuckDB for distributed data processing.

- Understand how to install Smallpond, set up Ray clusters, and configure a computing environment.

- Learn how to ingest, process, and partition data using Smallpond’s API.

- Identify practical use cases such as AI training, financial analytics, and log processing.

- Weigh the advantages and challenges of using Smallpond for distributed analytics.

This article was published as a part of the Data Science Blogathon.

What is DeepSeek Smallpond?

Smallpond is an open-source, lightweight data processing framework developed by DeepSeek AI. It is designed to extend the capabilities of DuckDB (a high-performance, in-process analytical database) into distributed environments.

By integrating DuckDB with the Fire-Flyer File System (3FS), Smallpond offers a scalable solution for handling petabyte-scale datasets without the overhead of traditional big data frameworks like Apache Spark.

Released on February 28, 2025, as part of DeepSeek’s Open Source Week, Smallpond targets data engineers and scientists who need efficient, simple, and high-performance tools for distributed analytics.

Learn More: DeepSeek Releases 3FS & Smallpond Framework

Key Features of Smallpond

- High Performance: Leverages DuckDB’s native SQL engine and 3FS’s multi-terabyte-per-minute throughput.

- Scalability: Processes petabyte-scale data across distributed nodes with manual partitioning.

- Simplicity: No long-running services or complex dependencies; deploy and use with minimal setup.

- Flexibility: Supports Python (3.8–3.12) and integrates with Ray for parallel processing.

- Open Source: MIT-licensed, fostering community contributions and customization.

Core Components of DeepSeek Smallpond

Now let’s look at the core components of DeepSeek’s Smallpond framework.

DuckDB

DuckDB is an embedded, in-process SQL OLAP database optimized for analytical workloads. It excels at executing complex queries on large datasets with minimal latency, which makes it ideal for single-node analytics. Smallpond extends DuckDB’s capabilities to distributed systems while retaining its performance benefits.

3FS (Fire-Flyer File System)

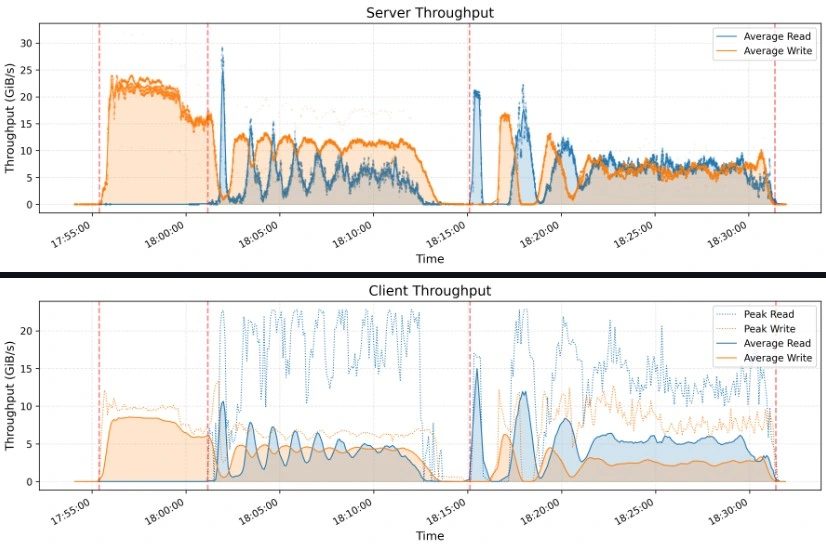

3FS is a distributed file system designed by DeepSeek for AI and high-performance computing (HPC) workloads. It leverages modern SSDs and RDMA networking to deliver low-latency, high-throughput storage (e.g., 6.6 TiB/s read throughput in a 180-node cluster). Unlike conventional file systems, 3FS prioritizes random reads over caching, which aligns with the needs of AI training and analytics.
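To put the 6.6 TiB/s figure in perspective, the short calculation below divides the aggregate read throughput by the node count. It assumes the load is spread evenly across all 180 nodes. Real workloads will only approximate that, so treat the result as a rough average rather than a per-node specification.

```python
# Rough per-node share of the aggregate 3FS read throughput quoted above.
# Assumption: the load is spread evenly across every node in the cluster.
GIB_PER_TIB = 1024

aggregate_tib_per_s = 6.6  # reported aggregate read throughput
node_count = 180           # reported cluster size

per_node_gib_per_s = aggregate_tib_per_s * GIB_PER_TIB / node_count
print(f"Average per node: about {per_node_gib_per_s:.1f} GiB/s")
```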

Integration of DuckDB and 3FS in Smallpond

Smallpond uses DuckDB as its compute engine and 3FS as its storage backbone. Data is stored in Parquet format on 3FS and partitioned manually by users. It is then processed in parallel across nodes by DuckDB instances coordinated by Ray. This integration combines DuckDB’s query efficiency with 3FS’s scalable storage, enabling seamless distributed analytics.
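The division of labour described above can be pictured with a plain-Python analogy: independent workers each query one partition, and a coordinator combines their results. This is not Smallpond code. A thread pool stands in for Ray, and the hypothetical `process_partition` function stands in for a DuckDB instance running a GROUP BY over one Parquet partition.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Row = Tuple[str, float]  # (ticker, price)

def process_partition(rows: List[Row]) -> Dict[str, float]:
    """Stand-in for one DuckDB instance: sum prices per ticker within one partition."""
    totals: Dict[str, float] = {}
    for ticker, price in rows:
        totals[ticker] = totals.get(ticker, 0.0) + price
    return totals

def run_job(partitions: List[List[Row]]) -> Dict[str, float]:
    """Stand-in for the coordinator: fan partitions out to workers, then merge partial results."""
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(process_partition, partitions))
    merged: Dict[str, float] = {}
    for partial in partials:
        for ticker, total in partial.items():
            merged[ticker] = merged.get(ticker, 0.0) + total
    return merged

partitions = [
    [("AAPL", 100.0), ("MSFT", 50.0)],
    [("AAPL", 20.0)],
    [("MSFT", 5.0), ("GOOG", 7.0)],
]
print(run_job(partitions))  # {'AAPL': 120.0, 'MSFT': 55.0, 'GOOG': 7.0}
```

The merge step is needed here because the same ticker can appear in several partitions. Smarter partitioning can remove it, as discussed in the partitioning section.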

Getting Started with Smallpond

Now, let’s learn how to install and use Smallpond.

Step 1: Installation

Smallpond is Python-based and installable via pip, and it is available only for Linux distributions. Make sure Python 3.8–3.12 is installed, along with a compatible 3FS cluster (or a local filesystem for testing).
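Smallpond is distributed for Linux only, and this article lists Python 3.8–3.12 as the supported range. A small preflight script can catch a mismatched environment before running `pip install`. The `check_environment` helper below is hypothetical and is not part of Smallpond. Its version bounds simply mirror the range stated in this article, so check the project’s own documentation for the current range.

```python
import platform
import sys
from typing import List, Optional, Tuple

# Inclusive (min, max) Python versions, mirroring the range stated in this article.
SUPPORTED_PYTHON = ((3, 8), (3, 12))

def check_environment(system: Optional[str] = None,
                      version: Optional[Tuple[int, int]] = None) -> List[str]:
    """Return a list of problems; an empty list means the basic requirements look fine."""
    system = system if system is not None else platform.system()
    version = tuple(version) if version is not None else tuple(sys.version_info[:2])
    problems = []
    if system != "Linux":
        problems.append(f"Smallpond targets Linux only (found {system}).")
    low, high = SUPPORTED_PYTHON
    if not (low <= version <= high):
        problems.append(f"Python {version[0]}.{version[1]} is outside 3.8-3.12.")
    return problems

if __name__ == "__main__":
    for message in check_environment() or ["Environment looks OK."]:
        print(message)
```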

```bash
# Install Smallpond with its dependencies
pip install smallpond

# Optional: install development dependencies (e.g., for testing)
pip install "smallpond[dev]"

# Install Ray with cluster support
pip install 'ray[default]'
```

For 3FS, clone and build from the GitHub repository:

```bash
git clone https://github.com/deepseek-ai/3fs
cd 3fs
git submodule update --init --recursive
./patches/apply.sh

# Install build dependencies (Ubuntu 20.04/22.04 example; abbreviated, see the 3FS README for the full list)
sudo apt install cmake libuv1-dev liblz4-dev libboost-all-dev

# Build 3FS (refer to the 3FS docs for detailed instructions)
```

Step 2: Setting Up the Environment

If you are using 3FS across a cluster, start a Ray head node with the command below:

```bash
# Start the Ray head node (replace the placeholders with the node's resources)
ray start --head --num-cpus=<NUM_CPUS> --num-gpus=<NUM_GPUS>
```

Running this command starts the Ray head node and prints the address (host:port) that other nodes and clients use to connect to it.

Now we can initialize Smallpond against this Ray cluster using the address obtained above. For a real deployment, configure a compute cluster (e.g., AWS EC2 or on-premises) with 3FS deployed on SSD-equipped nodes. For local testing (Linux/Ubuntu), use a local filesystem path instead.

```python
import smallpond

# Replace with the address printed by `ray start --head`
RAY_ADDRESS = "192.168.214.165:6379"

# Option A: local filesystem (for testing)
sp = smallpond.init(data_root="path/to/local/storage", ray_address=RAY_ADDRESS)

# Option B: 3FS cluster (use instead of Option A).
# data_root is a directory path; point it at the location where 3FS is mounted.
# sp = smallpond.init(data_root="/path/to/3fs/mount", ray_address=RAY_ADDRESS)
```
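Hard-coding an IP address works for a quick test, but it is easy to forget to update when the cluster changes. One option is to read the values from environment variables, sketched below under stated assumptions. `RAY_ADDRESS` is the variable Ray itself consults for an existing cluster’s address. `SMALLPOND_DATA_ROOT`, the `/tmp/smallpond` fallback, and the `smallpond_init_kwargs` helper are hypothetical names chosen for this example. The check accepts only host:port forms, so a value such as `auto` would be rejected; loosen it if you rely on that.

```python
import os
from typing import Dict, Mapping, Optional

DEFAULT_DATA_ROOT = "/tmp/smallpond"  # hypothetical fallback for local testing

def smallpond_init_kwargs(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Build keyword arguments for smallpond.init() from environment variables.

    RAY_ADDRESS is the variable Ray reads for an existing cluster's address;
    SMALLPOND_DATA_ROOT is a hypothetical name chosen for this sketch.
    """
    env = os.environ if env is None else env
    kwargs = {"data_root": env.get("SMALLPOND_DATA_ROOT", DEFAULT_DATA_ROOT)}
    ray_address = env.get("RAY_ADDRESS")
    if ray_address:
        host, sep, port = ray_address.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"RAY_ADDRESS should look like host:port, got {ray_address!r}")
        kwargs["ray_address"] = ray_address
    return kwargs

if __name__ == "__main__":
    print(smallpond_init_kwargs())
```

With the helper in place, the session would be created as `sp = smallpond.init(**smallpond_init_kwargs())`. This uses only the `data_root` and `ray_address` keywords already shown above.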

Step 3: Data Ingestion and Preparation

Supported Data Formats

Smallpond primarily supports Parquet files, which are optimized for columnar storage and compatible with DuckDB. Other formats (e.g., CSV) may be supported through DuckDB’s native capabilities.

Reading and Writing Data

Load and save data using Smallpond’s high-level API.

```python
# Read a Parquet file
df = sp.read_parquet("data/input/prices.parquet")

# Process data (example: keep only rows where price > 100)
df = df.filter("price > 100")  # SQL predicate

# Write the results back to Parquet (files are written under this directory)
df.write_parquet("data/output/filtered_prices/")
```

Data Partitioning Strategies

Manual partitioning is key to Smallpond’s scalability. Choose a strategy based on your data and workload:

- By File Count: Split the input files into a fixed number of partitions.

- By Rows: Distribute rows evenly across partitions.

- By Hash: Partition on the hash of a column, so rows sharing a key land in the same partition and the load stays balanced as long as keys are not heavily skewed.
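To make the three strategies concrete, the plain-Python sketch below mimics what each one does to a small dataset. It only illustrates the idea and is not how Smallpond implements `repartition`. The helper names (`split_files`, `split_rows`, `split_by_hash`) and the use of CRC32 as the hash function are assumptions made for this example.

```python
import zlib
from typing import Any, Dict, List, Sequence

Row = Dict[str, Any]

def split_files(files: Sequence[str], n: int) -> List[List[str]]:
    """By file count: deal whole files out to n partitions in turn."""
    return [list(files[i::n]) for i in range(n)]

def split_rows(rows: Sequence[Row], n: int) -> List[List[Row]]:
    """By rows: n contiguous chunks whose sizes differ by at most one row."""
    size, extra = divmod(len(rows), n)
    parts: List[List[Row]] = []
    start = 0
    for i in range(n):
        end = start + size + (1 if i < extra else 0)
        parts.append(list(rows[start:end]))
        start = end
    return parts

def split_by_hash(rows: Sequence[Row], n: int, key: str) -> List[List[Row]]:
    """By hash: rows with equal values in `key` always land in the same partition."""
    parts: List[List[Row]] = [[] for _ in range(n)]
    for row in rows:
        # CRC32 is stable across runs, unlike Python's per-process salted hash() for str.
        bucket = zlib.crc32(str(row[key]).encode("utf-8")) % n
        parts[bucket].append(row)
    return parts

rows = [{"ticker": t, "price": p} for t, p in
        [("AAPL", 101.0), ("MSFT", 99.0), ("AAPL", 105.0), ("GOOG", 130.0), ("MSFT", 98.0)]]
print([len(p) for p in split_rows(rows, 3)])  # [2, 2, 1]
print([sorted({r["ticker"] for r in p}) for p in split_by_hash(rows, 3, "ticker")])
```

Only the hash strategy guarantees that all rows for one ticker sit together. That property matters for per-partition aggregations.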

```python
# Each call below is an alternative; pick the one that fits your workload.

# Partition by file count
df = df.repartition(3)

# Partition by rows
df = df.repartition(3, by_row=True)

# Partition by column hash (e.g., ticker)
df = df.repartition(3, hash_by="ticker")
```

Step 4: API Reference

High-Level API Overview

The high-level API simplifies data loading, transformation, and saving:

- read_parquet(path): Loads Parquet data.

- write_parquet(path): Saves processed data.

- repartition(n, [by_row, hash_by]): Partitions data.

- filter(expr): Keeps only the rows that satisfy a predicate.

- map(expr): Applies row-wise transformations.
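The practical difference between a row-wise map and a filter is easy to blur. Here is a plain-Python illustration using the same dict-in, dict-out row shape that Smallpond’s Python-function form of `map` takes. The `map_rows` and `filter_rows` helpers are hypothetical stand-ins, not Smallpond functions. The point is only that a map returns one output row per input row, while a filter can drop rows.

```python
from typing import Any, Callable, Dict, Iterable, List

Row = Dict[str, Any]

def map_rows(rows: Iterable[Row], func: Callable[[Row], Row]) -> List[Row]:
    """Row-wise transform: exactly one output row per input row."""
    return [func(row) for row in rows]

def filter_rows(rows: Iterable[Row], pred: Callable[[Row], bool]) -> List[Row]:
    """Row-wise predicate: keep only the rows for which pred returns True."""
    return [row for row in rows if pred(row)]

rows = [{"ticker": "AAPL", "price": 150.0}, {"ticker": "XYZ", "price": 80.0}]

# A map with a comparison keeps every row and just adds a boolean column.
flags = map_rows(rows, lambda r: {"ticker": r["ticker"], "expensive": r["price"] > 100})
print(flags)  # [{'ticker': 'AAPL', 'expensive': True}, {'ticker': 'XYZ', 'expensive': False}]

# A filter with the same comparison actually removes rows.
kept = filter_rows(rows, lambda r: r["price"] > 100)
print(kept)   # [{'ticker': 'AAPL', 'price': 150.0}]
```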

Low-Level API Overview

For advanced use, Smallpond exposes DuckDB’s SQL engine and Ray’s task distribution directly:

- Execute raw SQL via partial_sql.

- Manage Ray tasks for custom parallelism.
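As we understand the Smallpond documentation, `partial_sql` runs the query on each partition independently and does not merge results across partitions. That is why hash-partitioning by the GROUP BY key first matters. Every row for a given ticker then sits in one partition, so each ticker gets exactly one result row. The plain-Python sketch below simulates this with hypothetical helpers, where CRC32 stands in for whatever hash Smallpond actually uses. It contrasts this with round-robin partitioning, where the same ticker can produce several partial rows.

```python
import zlib
from typing import Dict, List, Tuple

Row = Tuple[str, float]  # (ticker, price)

def hash_partition(rows: List[Row], n: int) -> List[List[Row]]:
    """Place each row by the CRC32 hash of its ticker, so a ticker never spans partitions."""
    parts: List[List[Row]] = [[] for _ in range(n)]
    for ticker, price in rows:
        parts[zlib.crc32(ticker.encode("utf-8")) % n].append((ticker, price))
    return parts

def round_robin_partition(rows: List[Row], n: int) -> List[List[Row]]:
    """Deal rows out in turn, ignoring the ticker."""
    return [rows[i::n] for i in range(n)]

def min_max(part: List[Row]) -> Dict[str, Tuple[float, float]]:
    """Stand-in for 'SELECT ticker, MIN(price), MAX(price) FROM {0} GROUP BY ticker' on one partition."""
    out: Dict[str, Tuple[float, float]] = {}
    for ticker, price in part:
        lo, hi = out.get(ticker, (price, price))
        out[ticker] = (min(lo, price), max(hi, price))
    return out

def partial_query(parts: List[List[Row]]) -> List[Tuple[str, float, float]]:
    """Run the query per partition and concatenate the outputs, with no cross-partition merge."""
    return [(t, lo, hi) for part in parts for t, (lo, hi) in min_max(part).items()]

rows = [("AAPL", 10.0), ("MSFT", 5.0), ("AAPL", 12.0), ("GOOG", 7.0), ("MSFT", 9.0), ("AAPL", 8.0)]

by_hash = partial_query(hash_partition(rows, 3))
by_turn = partial_query(round_robin_partition(rows, 3))
print(sorted(by_hash))  # [('AAPL', 8.0, 12.0), ('GOOG', 7.0, 7.0), ('MSFT', 5.0, 9.0)]
print(sorted(by_turn))  # [('AAPL', 8.0, 12.0), ('AAPL', 10.0, 10.0), ('GOOG', 7.0, 7.0), ('MSFT', 5.0, 9.0)]
```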

Detailed Function Descriptions

- sp.read_parquet(path): Reads Parquet files into a distributed DataFrame.

```python
# A glob pattern reads every matching Parquet file
df = sp.read_parquet("data/input/*.parquet")
```

- df.map(expr): Applies SQL-expression or Python transformations to each row.

```python
# SQL expressions: a column list evaluated for each row
adjusted = df.map("ticker, price * 1.1 AS adjusted_price")

# Python function: takes a row as a dict and returns a new dict
adjusted_py = df.map(lambda row: {"adjusted_price": row["price"] * 1.1})
```

- sp.partial_sql(query, df): Executes SQL on each partition of a DataFrame ({0} refers to the first input).

```python
df = sp.partial_sql("SELECT ticker, MIN(price), MAX(price) FROM {0} GROUP BY ticker", df)
```

Performance Benchmarks

Smallpond’s performance shines in benchmarks like GraySort. It sorted 110.5 TiB across 8,192 partitions in 30 minutes and 14 seconds, an average throughput of 3.66 TiB/min. The run used a cluster of 50 compute nodes and 25 3FS storage nodes.
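The headline throughput follows directly from the reported data volume and wall-clock time. The calculation below reproduces it at about 3.65 TiB/min, which agrees with the reported 3.66 TiB/min up to rounding. The per-node figure assumes the work is spread evenly across the 50 compute nodes, which is a simplification.

```python
# Reproduce the GraySort throughput from the figures reported above.
data_tib = 110.5             # data sorted
elapsed_s = 30 * 60 + 14     # 30 minutes 14 seconds = 1,814 s
compute_nodes = 50           # compute nodes in the run

throughput_tib_per_min = data_tib / (elapsed_s / 60)

# Average share per compute node, assuming the work is spread evenly.
per_node_gib_per_s = throughput_tib_per_min * 1024 / 60 / compute_nodes

print(f"{throughput_tib_per_min:.3f} TiB/min overall")
print(f"{per_node_gib_per_s:.2f} GiB/s per compute node (average)")
```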

Best Practices for Optimizing Performance

- Partition Wisely: Match partition size to node memory and workload.

- Leverage 3FS: Use SSDs and RDMA for maximum I/O throughput.

- Minimize Shuffling: Pre-partition data to reduce network overhead.
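The advice to match partition size to node memory can be turned into a rough rule of thumb. Divide the dataset size by a per-partition budget you are comfortable holding in memory, then round up so the partitions divide evenly among workers. The helper and the 4 GiB budget in the example are assumptions for illustration, not Smallpond recommendations, so tune the budget to your nodes and queries.

```python
GIB = 1024 ** 3
TIB = 1024 ** 4

def choose_num_partitions(total_bytes: int, partition_budget_bytes: int, workers: int) -> int:
    """Smallest multiple of `workers` that keeps partitions within the budget on average."""
    if total_bytes <= 0 or partition_budget_bytes <= 0 or workers <= 0:
        raise ValueError("all arguments must be positive")
    needed = -(-total_bytes // partition_budget_bytes)  # ceiling division
    return -(-needed // workers) * workers              # round up to a multiple of workers

# Example: 10 TiB of Parquet, a 4 GiB budget per partition, 50 workers.
print(choose_num_partitions(10 * TIB, 4 * GIB, 50))  # 2600
```

Integer ceiling division avoids floating-point surprises at these magnitudes. The result would then be passed as `n` to `repartition`.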

Scalability Considerations

- 10TB–1PB: Ideal for Smallpond with a modest cluster.

- Over 1PB: Requires significant infrastructure (e.g., 180+ nodes).

- Cluster Management: Use managed Ray services (e.g., Anyscale) to simplify scaling.

Applications of Smallpond

- AI Data Preprocessing: Prepare petabyte-scale training datasets.

- Financial Analytics: Aggregate and analyze market data across distributed nodes.

- Log Processing: Process server logs in parallel for fast batch insights.

- DeepSeek’s AI Training: DeepSeek used Smallpond and 3FS to sort 110.5 TiB in under 31 minutes, supporting efficient model training.

Advantages and Disadvantages of Smallpond

| Feature | Advantages | Disadvantages |
|---|---|---|
| Scalability | Handles petabyte-scale data efficiently | Cluster management overhead |
| Performance | Excellent benchmark performance | May not optimize single-node performance |
| Cost | Open-source and cost-effective | Dependence on external frameworks |
| Usability | User-friendly API for ML developers | Security concerns related to DeepSeek’s AI models |
| Architecture | Distributed computing with DuckDB and Ray Core | None |

Conclusion

Smallpond redefines distributed data processing by combining DuckDB’s analytical prowess with 3FS’s high-performance storage. Its simplicity, scalability, and open-source nature make it a compelling choice for modern data workflows. Whether you’re preprocessing AI datasets or analyzing terabytes of logs, Smallpond offers a lightweight yet powerful solution. Dive in, experiment with the code, and join the community to shape its future!

Key Takeaways

- Smallpond is an open-source, distributed data processing framework that extends DuckDB’s SQL capabilities using 3FS and Ray.

- It currently supports only Linux distributions and requires Python 3.8–3.12.

- Smallpond is ideal for AI preprocessing, financial analytics, and big data workloads, but requires careful cluster management.

- It’s a cost-effective alternative to Apache Spark, with lower overhead and easier deployment.

- Despite its advantages, it requires infrastructure work such as cluster setup, and it raises security concerns tied to DeepSeek’s models.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

Frequently Asked Questions

Q. What is DeepSeek Smallpond?

A. DeepSeek Smallpond is an open-source, lightweight data processing framework that extends DuckDB’s capabilities to distributed environments. It uses 3FS for scalable storage and Ray for parallel processing.

Q. How does Smallpond compare to Apache Spark?

A. Smallpond is a lightweight alternative to Spark, offering high-performance distributed analytics without complex dependencies. However, unlike Spark with its built-in resource management, it requires manual partitioning and infrastructure setup.

Q. What are the system requirements for Smallpond?

A. Smallpond requires Python (3.8–3.12), a Linux-based OS, and a compatible 3FS cluster or local storage. For distributed workloads, a Ray cluster with SSD-equipped nodes is recommended.

Q. What data formats does Smallpond support?

A. Smallpond primarily supports Parquet files for optimized columnar storage, but can handle other formats through DuckDB’s native capabilities.

Q. How can I optimize Smallpond’s performance?

A. Best practices include manual data partitioning based on workload, leveraging 3FS for high-speed storage, and minimizing data shuffling across nodes to reduce network overhead.

Q. Is Smallpond suitable for real-time analytics?

A. Smallpond excels at batch processing but may not be ideal for real-time analytics. For low-latency streaming data, frameworks like Apache Flink or Kafka Streams may be better suited.
