Imagine having an AI assistant that doesn't just answer your questions but thinks through problems systematically, learns from past experiences, and plans multiple steps before taking action. Language Agent Tree Search (LATS) is an advanced AI framework that combines the systematic reasoning of ReAct prompting with the strategic planning capabilities of Monte Carlo Tree Search.

LATS works by maintaining a comprehensive decision tree, exploring multiple possible solutions simultaneously, and learning from each interaction to make increasingly better decisions. With vertical AI agents in focus, this article discusses LATS and implements a LATS agent in action using LlamaIndex and SambaNova.AI.

Learning Objectives

- Understand the workflow of the ReAct (Reasoning + Acting) prompting framework and how its thought-action-observation cycle is implemented.

- Once we understand the ReAct workflow, explore the advancements made on this framework, particularly in the form of the LATS Agent.

- Learn to implement the Language Agent Tree Search (LATS) framework, which combines Monte Carlo Tree Search with language model capabilities.

- Explore the trade-offs between computational resources and outcome optimization in a LATS implementation to understand when it is beneficial to use and when it is not.

- Implement a Recommendation Engine using the LATS Agent from LlamaIndex, with SambaNova Systems as the LLM provider.

This article was published as a part of the Data Science Blogathon.

What is a ReAct Agent?

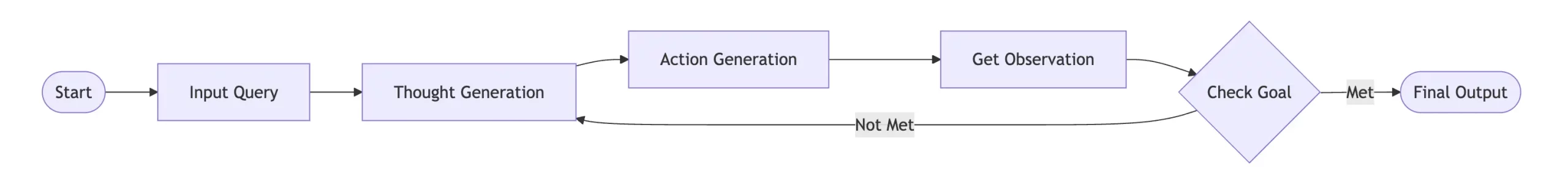

ReAct (Reasoning + Acting) is a prompting framework that enables language models to solve tasks through a cycle of thought, action, and observation. Think of it as an assistant who thinks out loud, takes action, and learns from what they observe. The agent follows a pattern:

- Thought: Reasons about the current situation

- Action: Decides what to do based on that reasoning

- Observation: Gets feedback from the environment

- Repeat: Uses this feedback to inform the next thought
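To make the cycle concrete, here is a minimal, framework-free sketch of a ReAct-style loop. It is an illustration under stated assumptions, not LlamaIndex's implementation: `policy` is a hypothetical stand-in for the language model (a real agent would prompt an LLM with the history and parse the thought and action from its reply), and the tool registry and the `finish` action name are invented for this example.

```python
from typing import Callable, Dict, List, Tuple

# A policy maps the history so far to (thought, action, action_input).
# In a real agent this is an LLM call; here it is a hypothetical stand-in.
Policy = Callable[[List[str]], Tuple[str, str, str]]


def react_loop(question: str, policy: Policy,
               tools: Dict[str, Callable[[str], str]],
               max_steps: int = 5) -> str:
    """Run a minimal Thought -> Action -> Observation cycle."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, action_input = policy(history)
        history.append(f"Thought: {thought}")
        if action == "finish":
            return action_input  # the policy is ready to answer
        observation = tools[action](action_input)
        history.append(f"Action: {action}[{action_input}]")
        # Feed the observation back so the next thought can use it
        history.append(f"Observation: {observation}")
    return "No answer within the step budget."


def scripted_policy(history: List[str]) -> Tuple[str, str, str]:
    """Look something up once, then answer with the last observation."""
    if not any(line.startswith("Observation:") for line in history):
        return ("I should look up the capital.", "lookup", "France")
    last_observation = history[-1].split(": ", 1)[1]
    return ("I have what I need.", "finish", last_observation)


capitals = {"France": "Paris"}
answer = react_loop("What is the capital of France?", scripted_policy,
                    {"lookup": lambda country: capitals[country]})
print(answer)  # Paris
```

The `max_steps` budget matters: without it, a policy that never chooses `finish` would loop forever.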

When implemented, this cycle lets language models break problems into manageable parts, make decisions based on the available information, and adjust their approach based on feedback. For instance, when solving a multi-step math problem, the model might first consider which mathematical concepts apply, then act by applying a specific formula, observe whether the result makes logical sense, and adjust its approach if needed. This structured cycle of reasoning and action closely mirrors human problem-solving and leads to more reliable responses.

Related read: Implementation of ReAct Agent using LlamaIndex and Gemini

What is a Language Agent Tree Search Agent?

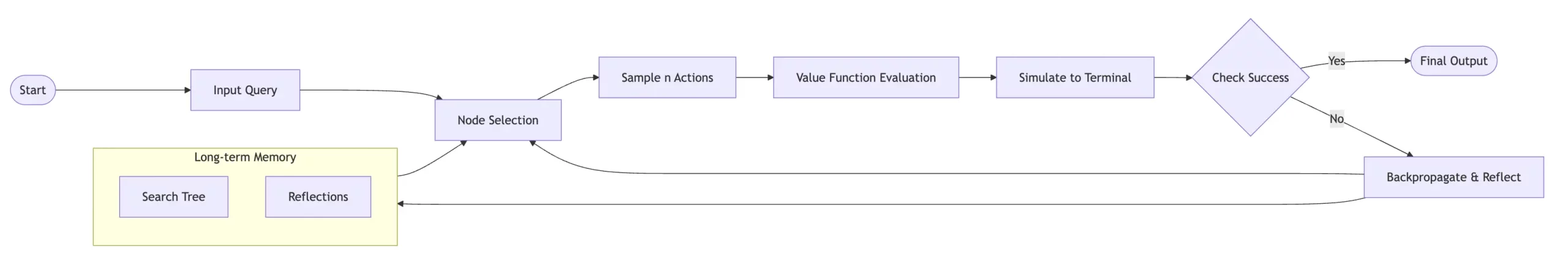

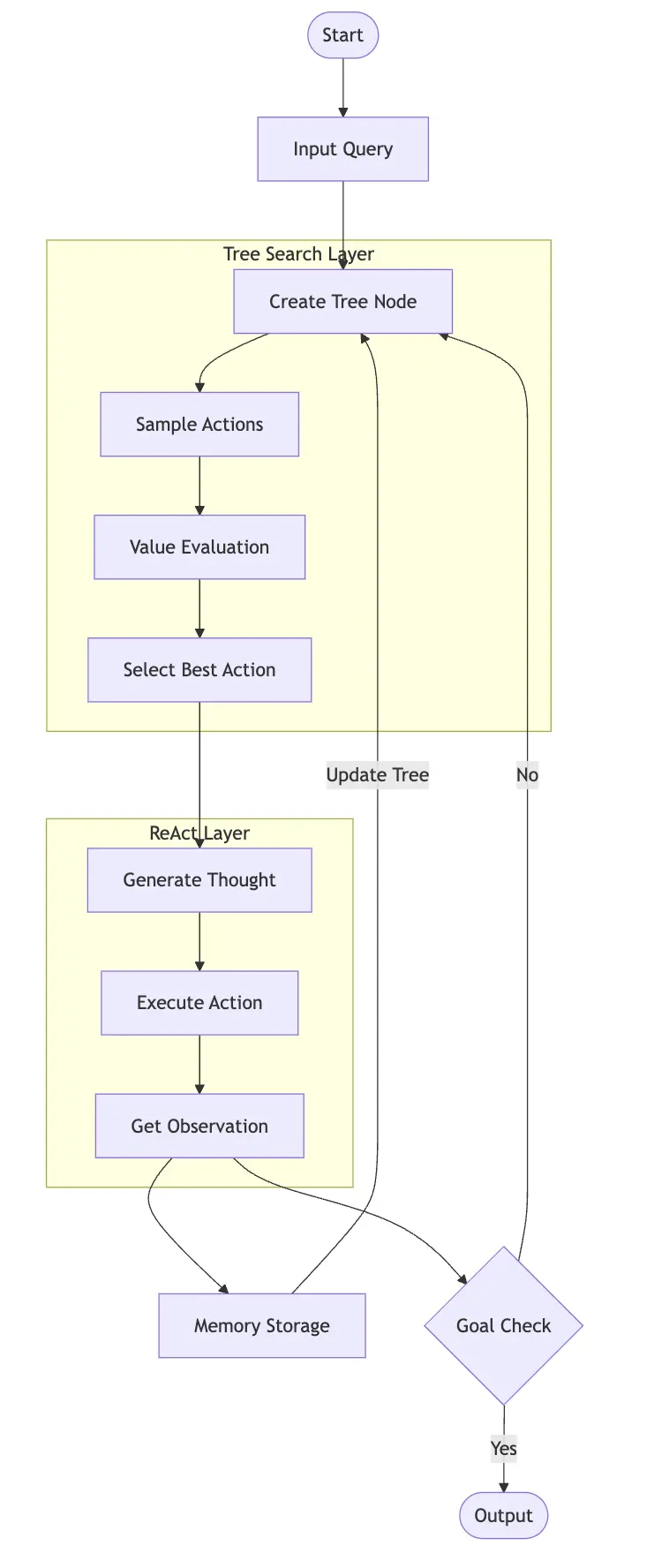

Language Agent Tree Search (LATS) is an advanced agentic framework that combines Monte Carlo Tree Search with language model capabilities to create a more sophisticated decision-making system for reasoning and planning.

It operates through a continuous cycle of exploration, evaluation, and learning, starting with an input query that initiates a structured search process. The system maintains a comprehensive long-term memory containing both a search tree of previous explorations and reflections from past attempts, which helps guide future decision-making.

At its operational core, LATS follows a systematic workflow: it first selects nodes along promising paths and then samples multiple candidate actions at each decision point. Each candidate action is scored by a value function to assess its merit, followed by a simulation to a terminal state to determine its effectiveness.
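To make the select, expand, evaluate, and backpropagate loop concrete, here is a minimal, framework-free sketch of the Monte Carlo Tree Search core that LATS builds on. It is a simplification under stated assumptions, not LlamaIndex's code: `propose` and `evaluate` are hypothetical stand-ins for the LLM calls that generate a candidate step and score it (the value function), the simulation step is folded into that score, and selection uses the standard UCT formula, value + w · sqrt(ln N(parent) / N(node)), with an exploration weight `w`.

```python
import itertools
import math
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Node:
    state: str                       # e.g., the ReAct trajectory so far
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)
    visits: int = 0
    value: float = 0.0               # running mean of rewards backed up here


def uct(node: Node, w: float = 1.0) -> float:
    """UCT score: exploit high-value nodes, explore rarely visited ones."""
    if node.visits == 0:
        return float("inf")          # unvisited children are tried first
    exploration = math.sqrt(math.log(node.parent.visits) / node.visits)
    return node.value + w * exploration


def select(root: Node) -> Node:
    """Descend from the root, picking the max-UCT child, until a leaf."""
    node = root
    while node.children:
        node = max(node.children, key=uct)
    return node


def expand(node: Node, propose: Callable[[str], str], k: int) -> List[Node]:
    """Sample k candidate next steps (in LATS: k thought/action steps)."""
    node.children = [Node(propose(node.state), parent=node) for _ in range(k)]
    return node.children


def backpropagate(node: Optional[Node], reward: float) -> None:
    """Update visit counts and mean values from node up to the root."""
    while node is not None:
        node.visits += 1
        node.value += (reward - node.value) / node.visits  # incremental mean
        node = node.parent


def best_leaf(node: Node) -> Node:
    """Return the highest-value leaf in the subtree."""
    if not node.children:
        return node
    return max((best_leaf(c) for c in node.children), key=lambda n: n.value)


def run_search(question: str, propose: Callable[[str], str],
               evaluate: Callable[[str], float],
               k: int = 2, rollouts: int = 3) -> Node:
    root = Node(question)
    for _ in range(rollouts):
        leaf = select(root)
        for child in expand(leaf, propose, k):
            # The value-function score stands in for a full simulation here
            backpropagate(child, evaluate(child.state))
    return root


# Toy stand-ins: states are numbered paths, scores are deterministic in [0, 1]
counter = itertools.count(1)
root = run_search("question", propose=lambda s: f"{s}/{next(counter)}",
                  evaluate=lambda s: (len(s) % 7) / 6, k=2, rollouts=3)
print(root.visits)  # 6: each rollout backs up k = 2 child scores
print(best_leaf(root).state)
```

Because every expanded child is scored, the root's visit count equals `k * rollouts`, which is also a rough measure of how many evaluation calls the search made.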

In the code demo, we will see how this tree expansion works and how the evaluation score is computed.

How Does LATS Use ReAct?

LATS integrates ReAct's thought-action-observation cycle into its tree search framework. Here's how:

At each node in the search tree, LATS uses ReAct's:

- Thought generation to reason about the state.

- Action selection to decide what to do.

- Observation collection to get feedback.

But LATS enhances this by:

- Exploring multiple possible ReAct sequences simultaneously through tree expansion, i.e., different nodes for thinking and taking action.

- Using past experiences to guide which paths to explore, learning systematically from successes and failures.
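The "learning from past attempts" part can be sketched as a small reflection memory. The LATS paper describes prompting the model for a verbal self-reflection when a trajectory fails and feeding those reflections back as extra context; the class below is a hypothetical illustration of that idea, not LlamaIndex's API. The `reflect` callable stands in for the LLM call, and the 0.5 failure threshold and three-item cap are arbitrary assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ReflectionMemory:
    """Keeps short self-reflections on failed trajectories for later prompts."""
    max_items: int = 3
    reflections: List[str] = field(default_factory=list)

    def record(self, trajectory: str, score: float,
               reflect: Callable[[str], str], threshold: float = 0.5) -> None:
        # Only low-scoring (failed) trajectories produce a lesson
        if score < threshold:
            self.reflections.append(reflect(trajectory))
            # Keep only the most recent lessons to bound prompt length
            self.reflections = self.reflections[-self.max_items:]

    def build_prompt(self, question: str) -> str:
        if not self.reflections:
            return f"Question: {question}"
        lessons = "\n".join(f"- {r}" for r in self.reflections)
        return f"Lessons from earlier attempts:\n{lessons}\n\nQuestion: {question}"


def fake_reflect(trajectory: str) -> str:
    """Stand-in for an LLM call that summarizes what went wrong."""
    return f"Avoid repeating: {trajectory}"


memory = ReflectionMemory()
memory.record("search('cheap camera')", score=0.9, reflect=fake_reflect)  # kept silent
memory.record("search('camera')", score=0.2, reflect=fake_reflect)        # reflected on
prompt = memory.build_prompt("Best low-light camera under $2000?")
print(prompt)
```

Capping the memory is a design choice: every stored reflection is re-sent with each later prompt, so an unbounded list would quietly inflate token costs.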

This approach is very expensive to implement. Let's look at when to use LATS and when not to.

Cost Trade-Offs: When to Use LATS?

While the LATS paper reports higher benchmark results than CoT, ReAct, and other techniques, execution comes at a higher cost. The more complex the task, the more nodes are created for reasoning and planning, which means many more LLM calls, a setup that is not ideal in production environments.

This computational intensity becomes particularly challenging in real-time applications where response time is critical, as every node expansion and evaluation requires separate API calls to the language model. Additionally, organizations need to carefully weigh LATS's superior decision-making capabilities against the associated infrastructure costs, especially when scaling across many concurrent users or applications.
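To see why costs climb, here is a back-of-the-envelope estimate. It assumes, for illustration only, that each rollout expands one node into `num_expansions` children and that each child costs two LLM calls (one to generate the step, one to score it). The real count in a given framework can differ, for example with reflection or final-answer calls, and tool calls add latency on top.

```python
def estimate_llm_calls(num_expansions: int, max_rollouts: int,
                       calls_per_child: int = 2,
                       extra_calls_per_rollout: int = 0) -> int:
    """Back-of-the-envelope LLM call count for a LATS-style search.

    Assumes each rollout expands one node into num_expansions children and
    each child costs calls_per_child calls (e.g., generate + evaluate).
    extra_calls_per_rollout can model reflections or other per-rollout calls.
    """
    per_rollout = num_expansions * calls_per_child + extra_calls_per_rollout
    return max_rollouts * per_rollout


print(estimate_llm_calls(2, 3))     # 12, for num_expansions=2, max_rollouts=3
print(estimate_llm_calls(4, 10))    # 80, for a wider and deeper search
print(estimate_llm_calls(1, 1, 1))  # 1, roughly a single ReAct step
```

Under this model, doubling both parameters quadruples the number of calls, which is why it pays to start with small values and increase them only when answer quality demands it.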

Here's when to use LATS:

- The task is complex and has multiple possible solutions (e.g., programming tasks where there are many ways to solve a problem).

- Mistakes are costly and accuracy is crucial (e.g., financial decision-making, medical diagnosis support, or education curriculum preparation).

- The task benefits from learning from past attempts (e.g., complex product searches where user preferences matter).

Here's when not to use LATS:

- Simple, straightforward tasks that need quick responses (e.g., basic customer service inquiries or data lookups)

- Time-sensitive operations where immediate decisions are required (e.g., real-time trading systems or emergency response)

- Resource-constrained environments with limited computational power or API budget (e.g., mobile applications or edge devices)

- High-volume, repetitive tasks where simpler models can provide adequate results (e.g., content moderation or spam detection)

For such tasks, the simpler ReAct framework is usually more appropriate.

Think of it this way: ReAct is like making decisions one step at a time, while LATS is like planning a complex strategy game. It takes more time and resources but can lead to better outcomes in complex situations.
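The two checklists in this section can be condensed into a simple routing rule. The helper below is a hypothetical heuristic, not something LATS or LlamaIndex provides: any constraint from the "when not to use" list routes a task to ReAct, and otherwise any signal from the "when to use" list routes it to LATS.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    # Signals from the "when to use LATS" list
    complex_multi_solution: bool = False
    costly_mistakes: bool = False
    benefits_from_past_attempts: bool = False
    # Constraints from the "when not to use LATS" list
    needs_fast_response: bool = False
    tight_compute_or_api_budget: bool = False
    high_volume_repetitive: bool = False


def choose_agent(task: TaskProfile) -> str:
    """Hypothetical routing heuristic: return "lats" or "react"."""
    if (task.needs_fast_response or task.tight_compute_or_api_budget
            or task.high_volume_repetitive):
        return "react"  # latency, budget, or volume rules out tree search
    if (task.complex_multi_solution or task.costly_mistakes
            or task.benefits_from_past_attempts):
        return "lats"   # the extra LLM calls are likely worth it
    return "react"      # simple task: one step at a time is enough


print(choose_agent(TaskProfile(complex_multi_solution=True)))  # lats
print(choose_agent(TaskProfile(costly_mistakes=True, needs_fast_response=True)))  # react
```

Checking constraints first is a deliberate choice: a hard latency or budget limit usually cannot be traded away, whereas the benefits of LATS are a matter of degree.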

Build a Recommendation System with LATS Agent using LlamaIndex

If you want to build a recommendation system that reasons over live web search results, let's break down this implementation using LATS (Language Agent Tree Search) and LlamaIndex.

Step 1: Setting Up Your Environment

First up, we need to get our tools in order. Run these pip install commands to get everything we need, and apply nest_asyncio so async operations work inside your notebook:

```python
!pip install llama-index-agent-lats
!pip install llama-index-core llama-index-readers-file
!pip install duckduckgo-search
!pip install llama-index-llms-sambanovasystems

import nest_asyncio

# Allow nested event loops so async agent calls run inside Jupyter/Colab
nest_asyncio.apply()
```

Step 2: Configuration and API Setup

Here's where we set up our LLM, the SambaNova LLM. You'll need to create an API key and store it in the SAMBANOVA_API_KEY environment variable.

Follow these steps to get your API key:

- Create your account at: https://cloud.sambanova.ai/

- Select APIs and choose the model you want to use.

- You can also click Generate New API Key and use that key to replace the placeholder in the environment variable below.

```python
import os

os.environ["SAMBANOVA_API_KEY"] = "<replace-with-your-key>"
```

SambaNova Cloud markets itself as offering the world's fastest AI inference, returning responses from open-source Llama models within seconds. Once you define the LLM from the LlamaIndex LLM integrations, you need to override the default LLM using Settings from LlamaIndex core, because LlamaIndex uses OpenAI as the default LLM.

```python
from llama_index.core import Settings
from llama_index.llms.sambanovasystems import SambaNovaCloud

llm = SambaNovaCloud(
    model="Meta-Llama-3.1-70B-Instruct",
    context_window=100000,
    max_tokens=1024,
    temperature=0.7,
    top_k=1,
    top_p=0.01,
)

# Make this LLM the default for all LlamaIndex components
Settings.llm = llm
```

Step 3: Defining the Search Tool

Now for the fun part: we're integrating DuckDuckGo search to help our system find relevant information. This tool fetches real-world data for the given user question, returning at most 4 results.

When defining a tool for function calling with LLMs, always remember these two steps:

- Properly annotate the data type the function returns; in our case, it's -> str.

- Always include a docstring for any function used in an agentic workflow or for function calling. Since function calling helps with query routing, the agent needs to know when to choose which tool, and this is where docstrings are very helpful.
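Why do the return annotation and docstring matter? Agent frameworks typically build a tool's name and description from the function itself, and the LLM only ever sees that metadata when deciding which tool to call. The sketch below imitates the idea with the standard `inspect` module; `describe_tool`, `get_weather`, and `undocumented` are hypothetical examples, and this is not LlamaIndex's own code.

```python
import inspect
from typing import Any, Callable, Dict


def describe_tool(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Collect the metadata an agent could use to decide when to call fn."""
    sig = inspect.signature(fn)
    ret = sig.return_annotation
    return {
        "name": fn.__name__,
        "signature": f"{fn.__name__}{sig}",
        # Works for real types (str) and for string annotations alike
        "returns": None if ret is inspect.Signature.empty else getattr(ret, "__name__", str(ret)),
        "description": inspect.getdoc(fn) or "",
    }


def get_weather(city: str) -> str:
    """Return a short weather summary for the given city."""
    return f"Sunny in {city}"


def undocumented(x):
    return x


print(describe_tool(get_weather))
print(describe_tool(undocumented))  # no return type, empty description
```

The second call shows the failure mode the guidelines warn about: without a docstring or annotation, the model has nothing but a name to route on.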

Now define your custom function and wrap it with FunctionTool from LlamaIndex.

```python
from duckduckgo_search import DDGS
from llama_index.core.tools import FunctionTool


def search(query: str) -> str:
    """
    Use this function to get results from Web Search through DuckDuckGo

    Args:
        query: user prompt

    Returns:
        context (str): search results for the user query
    """
    req = DDGS()
    # Fetch at most 4 text results for the query
    response = req.text(query, max_results=4)
    context = ""
    for result in response:
        # Each result is a dict; "body" holds the text snippet
        context += result["body"]
    return context


search_tool = FunctionTool.from_defaults(fn=search)
```

Step 4: LATS with the LlamaIndex Agent Runner

This is the final part of the agent definition. We need to define a LATSAgentWorker from the LlamaIndex agent package. Since this is a worker class, we can run it through AgentRunner and call its chat function directly.

Note: chat and other features can also be called directly on the AgentWorker, but it's better to use AgentRunner, as it has been updated with most of the latest changes in the framework.

Key hyperparameters:

- num_expansions: Number of child nodes to expand.

- max_rollouts: Maximum number of trajectories to sample.

```python
from llama_index.agent.lats import LATSAgentWorker
from llama_index.core.agent import AgentRunner

agent_worker = LATSAgentWorker(
    tools=[search_tool],
    llm=llm,
    num_expansions=2,  # child nodes generated per expansion
    verbose=True,      # print the search logs
    max_rollouts=3,    # maximum trajectories to sample
)

agent = AgentRunner(agent_worker)
```

Step 5: Execute Agent

Finally, it's time to execute the LATS agent: just ask for the recommendation you need. During execution, observe the verbose logs:

- The LATS agent splits the user task into num_expansions candidate branches.

- For each branch, it runs the thought process and then takes the relevant action to pick a tool. In our case, there's only one tool.

- Once it runs the rollout and gets the observation, it evaluates the results it generates.

- It repeats this process, building out tree nodes to reach the best possible observation.

```python
query = "Looking for a mirrorless camera under $2000 with good low-light performance"

response = agent.chat(query)

print(response.response)
```

Output:

Here are the top 5 mirrorless cameras under $2000 with good low-light performance:

1. Nikon Zf – Features a 240M full-frame BSI ONOS sensor, full-width 4K/30 video, cropped 4K/80, and stabilization rated to SEV.

2. Sony ZfC II – A compact, full-frame mirrorless camera with unlimited 4K recording, even in low-light conditions.

3. Fujijiita N-Yu – Offers an ABC-C format, 25.9M X-frame ONOS 4 sensor, and a wide native sensitivity range of ISO 160-12800 for better performance.

4. Panasonic Lunix OHS – A ten Megapixel camera with a four-thirds ONOS sensor, capable of unlimited 4K recording even in low light.

5. Canon EOS R6 – Equipped with a 280M full-frame ONOS sensor, 4K/60 video, stabilization rated to SEV, and improved low-light performance.

Note: The ranking may vary based on individual preferences and specific needs.

The above approach works well, but you should be prepared to handle edge cases. If the user's task query is highly complex or the agent uses many expansions or rollouts (large num_expansions or max_rollouts values), there's a high chance the output will be something like "I'm still thinking." Obviously, this response is not acceptable. In such cases, there's a hacky approach you can try.

Step 6: Error Handling and Hacky Approaches

Since the LATS agent creates nodes and each node generates a child tree, the agent retrieves observations for every child tree. To inspect them, check the list of tasks the agent is executing with agent.list_tasks(). Each returned task carries an extra_state dictionary holding the search state, from which you can identify the root_node and navigate to the last observation to analyze the reasoning done by the agent.
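The hack used in this step always follows the first branch of the tree, which may not be where the best-scored answer ended up. As a hedged alternative, here is a generic, hypothetical helper that walks every node and returns the highest-scoring leaf. It only assumes that nodes expose a list of children (the `children` attribute navigated in this step); the scoring function is up to you, because the name of the evaluation field on LlamaIndex's tree nodes may differ between versions, so inspect a node before relying on any attribute. It is demonstrated on a toy tree rather than a real LATS run.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Iterator, List


def iter_nodes(root: Any, children_attr: str = "children") -> Iterator[Any]:
    """Depth-first walk over every node reachable from root."""
    stack = [root]
    while stack:
        node = stack.pop()
        yield node
        stack.extend(getattr(node, children_attr, None) or [])


def find_best_leaf(root: Any, score: Callable[[Any], float],
                   children_attr: str = "children") -> Any:
    """Return the leaf (node without children) with the highest score."""
    leaves = [n for n in iter_nodes(root, children_attr)
              if not getattr(n, children_attr, None)]
    return max(leaves, key=score)


# Toy tree standing in for a LATS search tree (hypothetical fields)
@dataclass
class ToyNode:
    observation: str
    reward: float
    children: List["ToyNode"] = field(default_factory=list)


root = ToyNode("root", 0.0, [
    ToyNode("a", 0.2, [ToyNode("a1", 0.4)]),
    ToyNode("b", 0.5, [ToyNode("b1", 0.9), ToyNode("b2", 0.1)]),
])
best = find_best_leaf(root, score=lambda n: n.reward)
print(best.observation)  # b1
```

On a real run, you would pass `agent.list_tasks()[-1].extra_state["root_node"]` as the root, together with a score function written after inspecting one node, and then read the chosen leaf's last reasoning step the same way the hack in this step does.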

```python
# Inspect the first task and the keys stored in its extra_state
print(agent.list_tasks()[0])
print(agent.list_tasks()[0].extra_state.keys())

# Follow the first branch of the search tree down to its last observation
print(agent.list_tasks()[-1].extra_state["root_node"].children[0].children[0].current_reasoning[-1].observation)
```

Now, whenever you get "I'm still thinking," use this hacky approach to get the outcome.

```python
from llama_index.core.agent import AgentRunner


def process_recommendation(query: str, agent: AgentRunner) -> str:
    """Process the recommendation query with error handling"""
    try:
        response = agent.chat(query).response
        # Substring match catches the placeholder whether it reads "I'm" or "I am"
        if "still thinking" in response:
            # Fall back to the last observation on the first branch of the tree
            root = agent.list_tasks()[-1].extra_state["root_node"]
            return root.children[0].children[0].current_reasoning[-1].observation
        return response
    except Exception as e:
        return f"An error occurred while processing your request: {str(e)}"
```

Conclusion

Language Agent Tree Search (LATS) represents a significant advancement in AI agent architectures, combining the systematic exploration of Monte Carlo Tree Search with the reasoning capabilities of large language models. While LATS offers superior decision-making compared to simpler approaches like Chain-of-Thought (CoT) or basic ReAct agents, it comes with increased computational overhead and complexity.

Key Takeaways

- Understood the ReAct agent, the technique used in most agentic frameworks for task execution.

- Explored Language Agent Tree Search (LATS), an advancement on the ReAct agent that uses Monte Carlo Tree Search to further improve responses on complex tasks.

- The LATS agent is only ideal for complex, high-stakes scenarios that require accuracy and learning, where latency is not an issue.

- Implemented a LATS agent with a custom search tool to get real-world responses for the given user task.

- Due to the complexity of LATS, error handling and potentially "hacky" approaches may be needed to extract results in certain scenarios.

Frequently Asked Questions

A. LATS enhances ReAct by exploring multiple possible sequences of thoughts, actions, and observations simultaneously within a tree structure, using past experiences to guide the search and learning systematically from successes and failures through an evaluation step in which the LLM acts as a judge.

A. Standard language models primarily focus on generating text from a given prompt. Agents, like ReAct, go a step further: they can interact with their environment, take actions based on reasoning, and observe the outcomes to improve future actions.

A. When setting up a LATS agent in LlamaIndex, key hyperparameters to consider include num_expansions, which sets the breadth of the search by determining how many child nodes are explored from each point, and max_rollouts, which sets the depth of the search by limiting the number of simulated action sequences. Additionally, max_iterations is another optional parameter that limits the agent's overall reasoning cycles, preventing it from running indefinitely and managing computational resources effectively.

A. The official implementation of "Language Agent Tree Search Unifies Reasoning, Acting, and Planning in Language Models" is available on GitHub: https://github.com/lapisrocks/LanguageAgentTreeSearch

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.