Since 2015, Microsoft has recognized the very real reputational, emotional, and other devastating impacts that arise when intimate imagery of a person is shared online without their consent. However, this challenge has only become more serious and more complex over time, as technology has enabled the creation of increasingly realistic synthetic or “deepfake” imagery, including video.

The advent of generative AI has the potential to supercharge this harm – and there has already been a surge in abusive AI-generated content. Intimate image abuse overwhelmingly affects women and girls and is used to shame, harass, and extort not only political candidates or other women with a public profile, but also private individuals, including teens. Whether real or synthetic, the release of such imagery has serious, real-world consequences: both from the initial release and from the ongoing distribution across the online ecosystem. Our collective approach to this whole-of-society challenge must therefore be dynamic.

On July 30, Microsoft released a policy whitepaper outlining a set of suggestions for policymakers to help protect Americans from abusive AI deepfakes, with a focus on protecting women and children from online exploitation. Advocating for modernized laws to protect victims is one element of our comprehensive approach to addressing abusive AI-generated content – today we also provide an update on Microsoft’s global efforts to safeguard its services and individuals from non-consensual intimate imagery (NCII).

Announcing our partnership with StopNCII

We have heard concerns from victims, experts, and other stakeholders that user reporting alone may not scale effectively for impact or adequately address the risk that imagery can be accessed via search. As a result, today we are announcing that we are partnering with StopNCII to pilot a victim-centered approach to detection in Bing, our search engine.

StopNCII is a platform run by SWGfL that enables adults from around the world to protect themselves from having their intimate images shared online without their consent. StopNCII enables victims to create a “hash” or digital fingerprint of their images (including synthetic imagery), without those images ever leaving their device. Those hashes can then be used by a wide range of industry partners to detect that imagery on their services and take action in line with their policies. In March 2024, Microsoft donated a new PhotoDNA capability to support StopNCII’s efforts. We have been piloting use of the StopNCII database to prevent this content from being returned in image search results in Bing. We have taken action on 268,899 images up to the end of August. We will continue to evaluate efforts to expand this partnership. We encourage adults concerned about the release – or potential release – of their images to report to StopNCII.

Our approach to addressing non-consensual intimate imagery

At Microsoft, we recognize that we have a responsibility to protect our users from illegal and harmful online content while respecting fundamental rights. We strive to achieve this across our diverse services by taking a risk-proportionate approach: tailoring our safety measures to the risk and to the unique service. Across our consumer services, Microsoft does not allow the sharing or creation of sexually intimate images of someone without their permission. This includes photorealistic NCII content that was created or altered using technology. We do not allow NCII to be distributed on our services, nor do we allow any content that praises, supports, or requests NCII.

Additionally, Microsoft does not allow any threats to share or publish NCII, also called intimate extortion. This includes asking for or threatening a person to get money, images, or other valuable things in exchange for not making the NCII public. In addition to this comprehensive policy, we have tailored prohibitions in place where relevant, such as for the Microsoft Store. The Code of Conduct for Microsoft Generative AI Services also prohibits the creation of sexually explicit content.

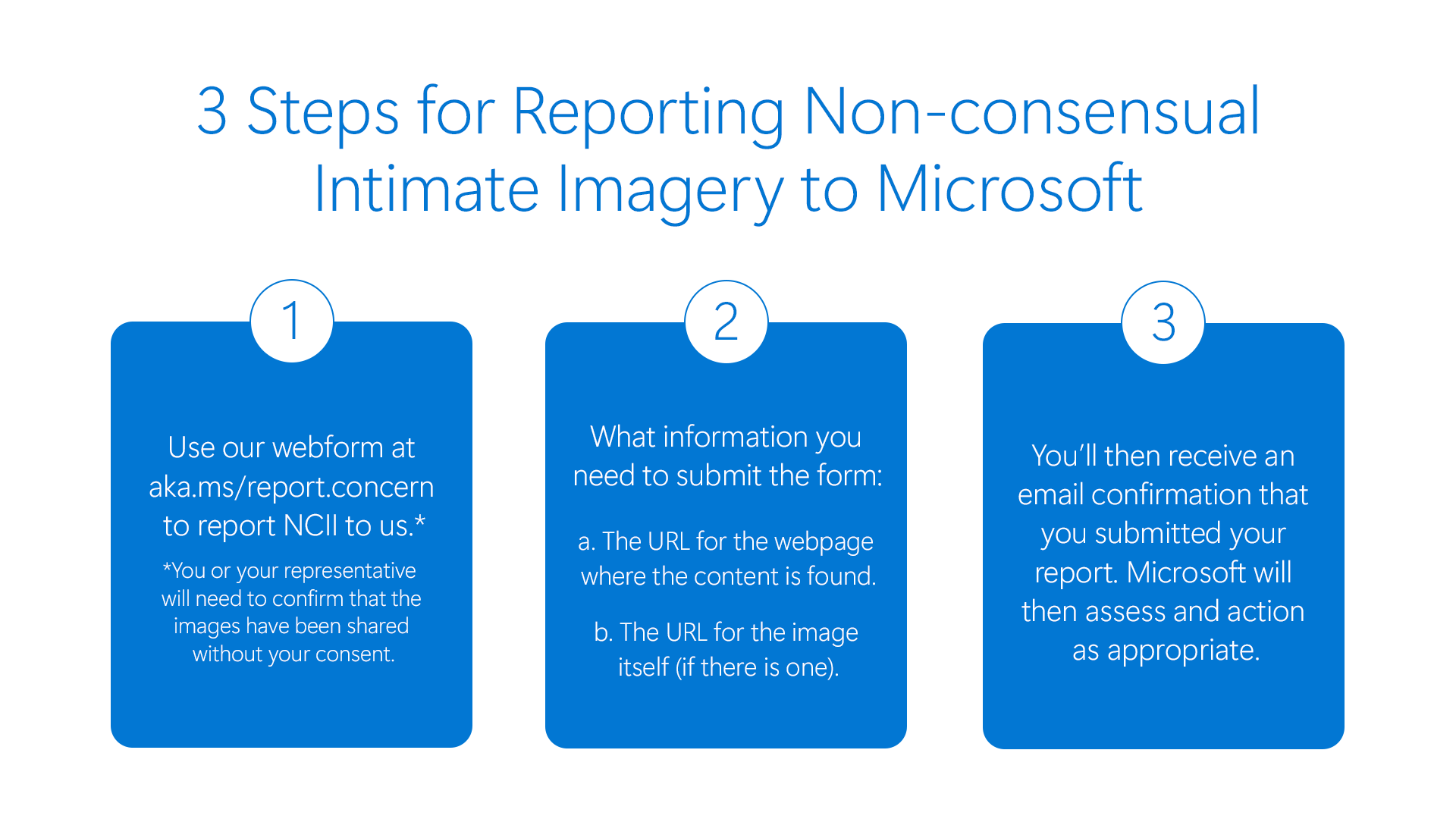

Reporting content directly to Microsoft

We will continue to remove content reported directly to us on a global basis, as well as where violative content is flagged to us by NGOs and other partners. In search, we also continue to take a range of measures to demote low-quality content and to elevate authoritative sources, while considering how we can further evolve our approach in response to expert and external feedback.

Anyone can request the removal of a nude or sexually explicit image or video of themselves that has been shared without their consent through Microsoft’s centralized reporting portal.

* NCII reporting is for users 18 years and over. For those under 18, please report as child sexual exploitation and abuse imagery.

Once that content has been reviewed by Microsoft and confirmed as violating our NCII policy, we remove reported links to photos and videos from search results in Bing globally and/or remove access to the content itself if it has been shared on one of Microsoft’s hosted consumer services. This approach applies to both real and synthetic imagery. Some services (such as gaming and Bing) also provide in-product reporting options. Finally, we provide transparency on our approach through our Digital Safety Content Report.

Continuing whole-of-society collaboration to meet the challenge

As we have seen illustrated vividly through recent, tragic stories, synthetic intimate image abuse also affects children and teens. In April, we outlined our commitment to new safety-by-design principles, led by NGOs Thorn and All Tech is Human, intended to reduce child sexual exploitation and abuse (CSEA) risks across the development, deployment, and maintenance of our AI services. As with synthetic NCII, we will take steps to address any apparent CSEA content on our services, including by reporting to the National Center for Missing and Exploited Children (NCMEC). Young people who are concerned about the release of their intimate imagery can also report to NCMEC’s Take It Down service.

Today’s update is but a point in time: these harms will continue to evolve, and so too must our approach. We remain committed to working with leaders and experts worldwide on this issue and to hearing perspectives directly from victims and survivors. Microsoft has joined a new multistakeholder working group, chaired by the Center for Democracy & Technology, Cyber Civil Rights Initiative, and National Network to End Domestic Violence, and we look forward to collaborating through this and other forums on evolving best practices. We also commend the focus on this harm through the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence and look forward to continuing to work with the U.S. Department of Commerce’s National Institute of Standards and Technology and the AI Safety Institute on best practices to reduce risks of synthetic NCII, including as a problem distinct from synthetic CSEA. And we will continue to advocate for policy and legislative changes to deter bad actors and ensure justice for victims, while raising awareness of the impact on women and girls.