Digital documents have long presented a dual challenge for both human readers and automated systems: preserving rich structural nuances while converting content into machine-processable formats. Traditional methods, whether relying on complex ensemble pipelines or massive foundation models, often struggle to balance accuracy with computational efficiency. SmolDocling addresses this trade-off with an ultra-compact 256M-parameter vision-language model that performs end-to-end document conversion with notable precision and speed.

The Challenge of Document Conversion

For decades, converting complex layouts, ranging from business documents to academic papers, into structured representations has been a difficult task. Common issues include:

- Layout Variability: Documents present a wide array of layouts and styles.

- Opaque Formats: Formats like PDF are optimized for printing rather than semantic parsing, obscuring the underlying structure.

- Resource Demands: Traditional large-scale models or ensemble solutions require extensive computational resources and complex tuning.

These challenges have prompted a great deal of research, but finding a solution that is both efficient and accurate remains difficult.

Introducing SmolDocling

SmolDocling addresses these hurdles head-on with a unified approach:

- End-to-End Conversion: Instead of piecing together multiple specialized models, SmolDocling processes entire document pages in a single pass.

- Compact yet Powerful: With just 256M parameters, it delivers performance comparable to models up to 27 times larger.

- Robust Multi-Modal Capabilities: Whether dealing with code listings, tables, equations, or complex charts, SmolDocling adapts across diverse document types.

At its core, the model introduces a novel markup format called DocTags, a universal standard that captures each element's content, structure, and spatial context.

DocTags change the way document elements are represented:

- Structured Vocabulary: Inspired by earlier work like OTSL, DocTags use XML-style tags to explicitly differentiate between text, images, tables, code, and more.

- Spatial Awareness: Each element is annotated with precise bounding box coordinates, ensuring that layout context is preserved.

- Unified Representation: Whether processing a full-page document or an isolated element (like a cropped table), the format remains consistent, boosting the model's ability to learn and generalize.

Tags that commonly appear in the model's output include:

- <image> – Represents an image or other visual content in the document.

- <flow_chart> – Likely represents a flow chart or similar structured diagram.

- <caption> – Provides a description or annotation for an image or diagram.

- <otsl> – Wraps a table whose structure is encoded in OTSL.

- <loc_XX> – Indicates the position of an element within the document.

- <ched> – A column header cell within a table.

- <fcel> – A "full cell" in OTSL, that is, a table cell that contains content.

- <nl> – Represents a new line; inside an OTSL table it ends a row.

- <section_header_level_1> – Marks a major section heading in the document.

- <text> – Defines general text content within the document.

- <unordered_list> – Represents a bulleted or unordered list.

- <list_item> – Specifies an individual item within a list.

- <code> – Contains programming or script-related content, with its formatting preserved.

This clear, structured format minimizes ambiguity, a common issue when converting directly to formats like HTML or Markdown.
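To make the tag-plus-location idea concrete, here is a minimal parsing sketch. It assumes that each element is written as an opening tag, four `<loc_XX>` tokens, the content, and a matching closing tag. The sample string is hand-written for illustration and is not real model output, and the names `Element` and `parse_doctags` are hypothetical helpers, not part of any library.

```python
import re
from dataclasses import dataclass

# Illustrative sample only: hand-written from the tags described in this article.
SAMPLE = (
    "<section_header_level_1><loc_20><loc_10><loc_200><loc_30>Invoice"
    "</section_header_level_1>"
    "<text><loc_20><loc_40><loc_300><loc_60>Thank you for your order.</text>"
)


@dataclass
class Element:
    tag: str
    bbox: tuple  # four integers, in the order the <loc_XX> tokens appear
    content: str


# Assumed element shape: <tag>, four <loc_N> tokens, content, then </tag>.
ELEMENT_RE = re.compile(
    r"<(?P<tag>[a-z_0-9]+)>"
    r"(?P<locs>(?:<loc_\d+>){4})"
    r"(?P<content>.*?)"
    r"</(?P=tag)>",
    re.DOTALL,
)


def parse_doctags(doctags: str) -> list:
    """Split a flat DocTags-like string into tagged elements with bounding boxes."""
    elements = []
    for match in ELEMENT_RE.finditer(doctags):
        bbox = tuple(int(n) for n in re.findall(r"<loc_(\d+)>", match.group("locs")))
        elements.append(Element(match.group("tag"), bbox, match.group("content")))
    return elements


for element in parse_doctags(SAMPLE):
    print(element.tag, element.bbox, element.content)
```

Nested structures such as tables and lists would need a real parser; for actual use, the docling_core classes shown later in this article handle the full format.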

Deep Dive: Dataset Training and Model Architecture

Dataset Training

A key pillar of SmolDocling's success is its rich, diverse training data:

- Pre-training Data:

  - DocLayNet-PT: A 1.4M page dataset extracted from unique PDF documents sourced from CommonCrawl, Wikipedia, and business documents. This dataset is enriched with weak annotations covering layout elements, table structures, language, topics, and figure classifications.

  - DocMatix: Adapted using the same weak annotation strategy as DocLayNet-PT, this dataset includes multi-task document conversion tasks.

- Task-Specific Data:

  - Layout & Structure: High-quality annotated pages from DocLayNet v2, WordScape, and synthetically generated pages from SynthDocNet ensure robust layout and table structure learning.

  - Charts, Code, and Equations: Custom-generated datasets provide extensive visual diversity. For instance, over 2.5 million charts are generated using three different visualization libraries, while 9.3M rendered code snippets and 5.5M formulas provide detailed coverage of technical document elements.

- Instruction Tuning: To reinforce the recognition of different page elements and introduce document-related features and no-code pipelines, rule-based techniques and the Granite-3.1-2b-instruct LLM were used. Using samples from DocLayNet-PT pages, one instruction was generated by randomly sampling layout elements from a page. These instructions included tasks such as:

  - “Perform OCR at bbox”

  - “Identify page element type at bbox”

  - “Extract all section headers from the page”

- Additionally, training with the Cauldron dataset helps avoid the catastrophic forgetting that the introduction of numerous conversation datasets could otherwise cause.
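The rule-based part of instruction generation can be pictured with a small sketch. It assumes a page is annotated as a list of elements with a type, a bounding box, and text. The `page_elements` data and the `make_instruction` helper are hypothetical and only illustrate random sampling of layout elements; they are not the authors' actual pipeline.

```python
import random

# Hypothetical page annotation: each layout element has a type, a bbox, and its text.
page_elements = [
    {"type": "section_header", "bbox": (40, 30, 460, 60), "text": "1. Introduction"},
    {"type": "text", "bbox": (40, 70, 460, 200), "text": "Documents come in many layouts."},
    {"type": "section_header", "bbox": (40, 220, 460, 250), "text": "2. Method"},
]


def make_instruction(elements, rng):
    """Build one (instruction, answer) pair from a randomly sampled element."""
    task = rng.choice(["ocr", "element_type", "section_headers"])
    element = rng.choice(elements)
    if task == "ocr":
        return f"Perform OCR at bbox {element['bbox']}", element["text"]
    if task == "element_type":
        return f"Identify page element type at bbox {element['bbox']}", element["type"]
    # The page-level task ignores the sampled element and lists every header.
    headers = [e["text"] for e in elements if e["type"] == "section_header"]
    return "Extract all section headers from the page", "\n".join(headers)


rng = random.Random(0)  # seeded so repeated runs produce the same sample
instruction, answer = make_instruction(page_elements, rng)
print(instruction)
print(answer)
```

Because the answers come straight from existing annotations, this kind of rule-based generation needs no additional labeling; an LLM would only be needed for more free-form instructions.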

Model Architecture of SmolDocling

SmolDocling builds upon the SmolVLM framework and incorporates several techniques to ensure efficiency and effectiveness:

- Vision Encoder with SigLIP Backbone: The model uses a SigLIP base 16/512 encoder (93M parameters) and applies an aggressive pixel shuffle strategy. This compresses each 512×512 image patch into 64 visual tokens, significantly reducing the number of image hidden states.

- Enhanced Tokenization: By increasing the pixel-to-token ratio (up to 4096 pixels per token) and introducing special tokens for sub-image separation, tokenization efficiency is markedly improved. This design ensures that both full-page documents and cropped elements are processed uniformly.

- Curriculum Learning Approach: Training begins with the vision encoder frozen, focusing on aligning the language model with the new DocTags format. Once the model is familiar with the output structure, the vision encoder is unfrozen and fine-tuned along with task-specific datasets, ensuring comprehensive learning.

- Efficient Inference: With a maximum sequence length of 8,192 tokens and the ability to process up to three pages at a time, SmolDocling achieves page conversion times of just 0.35 seconds using vLLM on an A100 GPU, while occupying only 0.489 GB of VRAM.
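The token figures above can be checked with a little arithmetic. The sketch below assumes a patch size of 16 (read from the "16/512" encoder name) and a pixel shuffle factor of 4; the factor is not stated in the text and is chosen here only because it reproduces the quoted 64 tokens.

```python
# Figures quoted in the text
tile_side = 512        # pixels per side of one image patch fed to the encoder
tokens_per_tile = 64   # visual tokens per 512x512 patch after pixel shuffle

# Assumptions (not stated in the text): 16-pixel encoder patches and a
# pixel shuffle factor of 4 along each spatial axis.
patch_size = 16
shuffle_factor = 4

patches_per_side = tile_side // patch_size                       # 512 / 16 = 32
patches_per_tile = patches_per_side ** 2                         # 32 * 32 = 1024
tokens_after_shuffle = patches_per_tile // shuffle_factor ** 2   # 1024 / 16 = 64
pixels_per_token = tile_side ** 2 // tokens_per_tile             # 262144 / 64 = 4096

print(patches_per_tile, tokens_after_shuffle, pixels_per_token)
```

Under these assumptions, pixel shuffle trades spatial resolution for channel depth: 16 neighboring encoder outputs are folded into one token, which is how 1024 hidden states shrink to 64 and each token ends up covering 4096 pixels.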

Comparative Analysis: SmolDocling Versus Other Models

A thorough evaluation of SmolDocling against leading vision-language models highlights its competitive edge:

Text Recognition (OCR) and Document Formatting

| Method | Model Size | Edit Distance ↓ | F1-score ↑ | Precision ↑ | Recall ↑ | BLEU ↑ | METEOR ↑ |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Qwen2.5 VL [9] | 7B | 0.56 | 0.72 | 0.80 | 0.70 | 0.46 | 0.57 |
| GOT [89] | 580M | 0.61 | 0.69 | 0.71 | 0.73 | 0.48 | 0.59 |
| Nougat (base) [12] | 350M | 0.62 | 0.66 | 0.72 | 0.67 | 0.44 | 0.54 |
| SmolDocling (Ours) | 256M | 0.48 | 0.80 | 0.89 | 0.79 | 0.58 | 0.67 |

Insights: SmolDocling outperforms the larger models on every metric in the table for full-page transcription. The gains in F1-score, precision, and recall reflect its stronger ability to reproduce textual elements accurately and preserve reading order.
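For readers unfamiliar with these metrics, the following sketch shows one common way to compute a normalized edit distance and token-level precision, recall, and F1 between a predicted and a reference transcription. It is an illustration under simple assumptions (whitespace tokenization, character-level Levenshtein distance); the exact definitions used in the evaluation above may differ.

```python
from collections import Counter


def edit_distance(a: str, b: str) -> int:
    """Character-level Levenshtein distance via a two-row dynamic program."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def normalized_edit_distance(pred: str, ref: str) -> float:
    """Edit distance divided by the longer length, so 0.0 means identical."""
    longest = max(len(pred), len(ref))
    return edit_distance(pred, ref) / longest if longest else 0.0


def token_prf(pred: str, ref: str):
    """Precision, recall, and F1 over whitespace tokens (multiset overlap)."""
    pred_tokens, ref_tokens = Counter(pred.split()), Counter(ref.split())
    overlap = sum((pred_tokens & ref_tokens).values())
    precision = overlap / sum(pred_tokens.values()) if pred_tokens else 0.0
    recall = overlap / sum(ref_tokens.values()) if ref_tokens else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


print(normalized_edit_distance("kitten", "sitting"))
print(token_prf("the cat sat", "the cat sat down"))
```

Lower is better for edit distance, while higher is better for the overlap-based scores, which matches the arrows in the table header.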

Specialized Tasks: Code Listings and Equations

- Code Listings: For tasks like code listing transcription, SmolDocling achieves an F1-score of 0.92 and precision of 0.94, highlighting its skill at handling indentation and syntax that carry semantic significance.

- Equations: In equation recognition, SmolDocling closely matches or exceeds the performance of models like Qwen2.5 VL and GOT, reaching an F1-score of 0.95 and precision of 0.96.

These results underscore SmolDocling's ability to not only match but often surpass the performance of significantly larger models, affirming that a compact model can be both efficient and effective when built with a focused architecture and optimized training strategies.

Code Demonstration and Output Visualization

To provide a practical glimpse into how SmolDocling operates, the following section includes a sample code snippet along with an illustration of the expected output. This example demonstrates how to convert a document image into the DocTags markup format.

Example 1: Sample Code Snippet

!pip set up docling_core

!pip set up flash-attn

import torch

from docling_core.varieties.doc import DoclingDocument

from docling_core.varieties.doc.doc import DocTagsDocument

from transformers import AutoProcessor, AutoModelForVision2Seq

from transformers.image_utils import load_image

DEVICE = "cuda" if torch.cuda.is_available() else "cpu"

# Load photos

# Initialize processor and mannequin

processor = AutoProcessor.from_pretrained("ds4sd/SmolDocling-256M-preview")

mannequin = AutoModelForVision2Seq.from_pretrained(

"ds4sd/SmolDocling-256M-preview",

torch_dtype=torch.bfloat16,

_attn_implementation="flash_attention_2"# if DEVICE == "cuda" else "keen",

).to(DEVICE)

mannequin.system

# Load photos

picture = load_image("https://user-images.githubusercontent.com/12294956/47312583-697cfe00-d65a-11e8-930a-e15fd67a5bb1.png")

# Create enter messages

messages = [

{

"role": "user",

"content": [

{"type": "image"},

{"type": "text", "text": "Convert this page to docling."}

]

},

]

# Put together inputs

immediate = processor.apply_chat_template(messages, add_generation_prompt=True)

inputs = processor(textual content=immediate, photos=[image], return_tensors="pt")

inputs = inputs.to(DEVICE)

# Generate outputs

generated_ids = mannequin.generate(**inputs, max_new_tokens=8192)

prompt_length = inputs.input_ids.form[1]

trimmed_generated_ids = generated_ids[:, prompt_length:]

doctags = processor.batch_decode(

trimmed_generated_ids,

skip_special_tokens=False,

)[0].lstrip()

# Populate doc

doctags_doc = DocTagsDocument.from_doctags_and_image_pairs([doctags], [image])

print(doctags)

# create a docling doc

doc = DoclingDocument(title="Doc")

doc.load_from_doctags(doctags_doc)

from IPython.show import show, Markdown

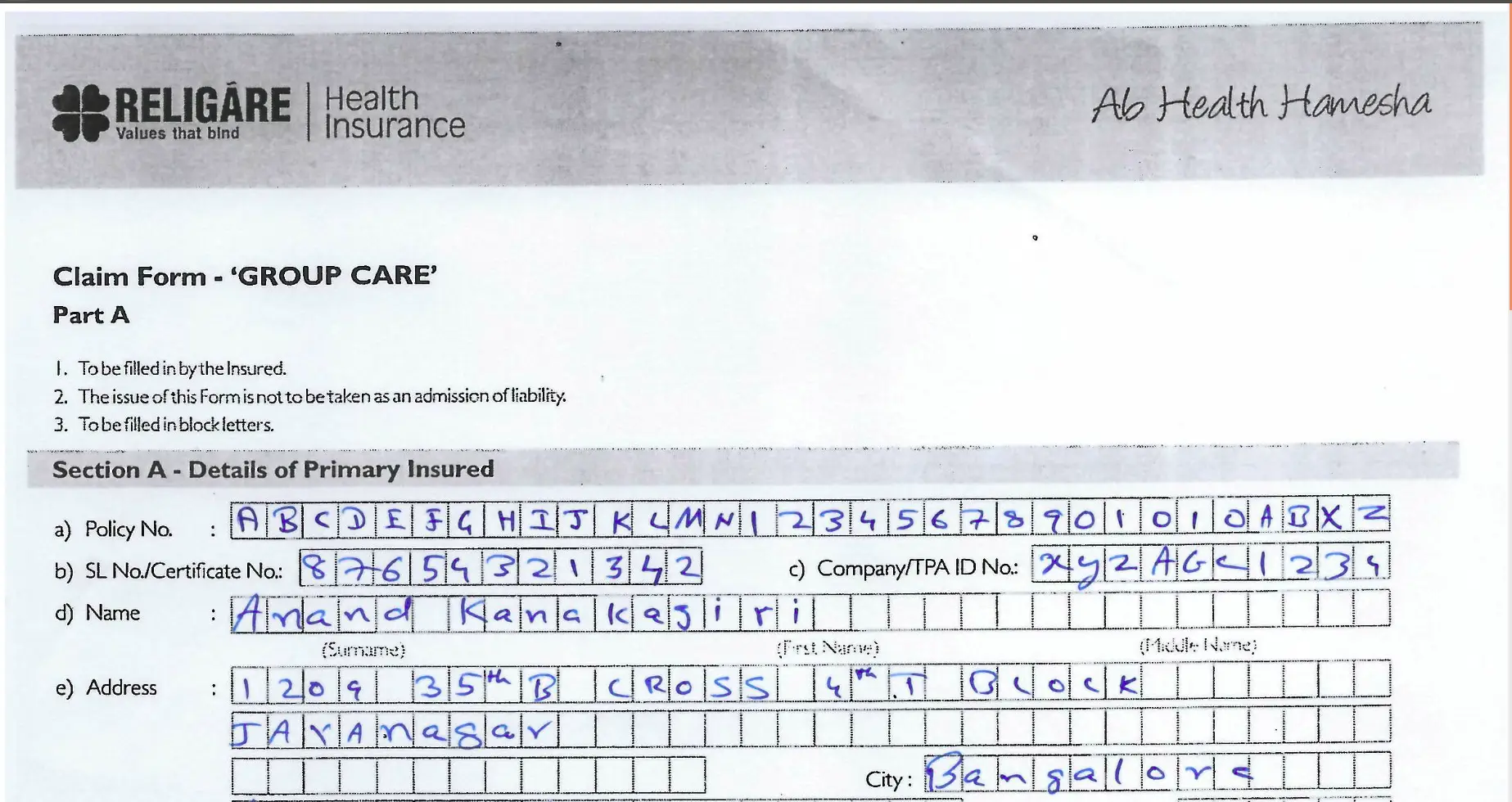

show(Markdown(doc.export_to_markdown()))Enter Picture

Output

This output illustrates how various document elements (text blocks, tables, and code listings) are precisely marked with their content and spatial information, making them ready for further processing or analysis. However, the model is unable to convert all of the text into the DocTags markup format. As you can see, the model did not read the handwritten text.

Example 2: Sample Code Snippet

!curl -L -o image2.png https://i.imgur.com/BFN038S.png

The input image is a receipt, and we now extract the text from it.

image = load_image("./image2.png")

# Create input messages
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image"},
            {"type": "text", "text": "Convert this page to docling."},
        ],
    },
]

# Prepare inputs
prompt1 = processor.apply_chat_template(messages, add_generation_prompt=True)
inputs1 = processor(text=prompt1, images=[image], return_tensors="pt")
inputs1 = inputs1.to(DEVICE)

# Generate outputs
generated_ids = model.generate(**inputs1, max_new_tokens=8192)
# Drop the prompt tokens so that only the newly generated tokens are decoded
prompt_length = inputs1.input_ids.shape[1]
trimmed_generated_ids = generated_ids[:, prompt_length:]
doctags = processor.batch_decode(
    trimmed_generated_ids,
    skip_special_tokens=False,
)[0].lstrip()

# Populate document
doctags_doc = DocTagsDocument.from_doctags_and_image_pairs([doctags], [image])
print(doctags)

# Create a Docling document
doc = DoclingDocument(name="Document")
doc.load_from_doctags(doctags_doc)

# Export as any format
# HTML
# doc.save_as_html(output_file)
# MD
print(doc.export_to_markdown())

from IPython.display import display, Markdown
display(Markdown(doc.export_to_markdown()))

Output

The result is quite impressive: the model extracted all the content from the receipt, a better outcome than in the example above.

Notebook with full code: Click Here

Conclusion and Future Directions

SmolDocling sets a new benchmark in document conversion by showing that smaller, more efficient models can rival and even surpass the capabilities of their larger counterparts. Its use of DocTags and an end-to-end conversion strategy provides a compelling blueprint for the next generation of vision-language models. It works well with receipts overall and performs acceptably with other documents, though not always perfectly, which is a consequence of its memory-saving model design.

Key Takeaways

- Efficiency: With a compact 256M parameter architecture, SmolDocling achieves rapid page conversion with minimal computational overhead.

- Robustness: Extensive pre-training and task-specific datasets, together with a curriculum learning approach, ensure that the model generalizes well across diverse document types.

- Comparative Superiority: Through rigorous evaluations, SmolDocling has demonstrated superior performance in OCR, code listing transcription, and equation recognition compared to larger models.

As the research community continues to refine techniques for element localization and multimodal understanding, SmolDocling provides a clear pathway toward more resource-efficient and versatile document processing solutions. With plans to release the accompanying datasets publicly, this work paves the way for further advancements and collaborations in the field.
