In today's fast-paced digital world, businesses are constantly looking for innovative ways to enhance customer engagement and streamline support services. One effective solution is the use of AI-powered customer support voice agents. These AI voice bots can understand and respond to voice-based customer support queries in real time. They use conversational AI to automate interactions, reduce wait times, and improve the efficiency of customer support. In this article, we'll learn all about AI-powered, speech-enabled customer service agents and how to build one using Deepgram, OpenAI, and the pygame library.

What Is a Voice Agent?

A voice agent is an AI-powered agent designed to interact with users through voice-based communication. It can understand spoken language, process requests, and generate human-like responses. It enables seamless voice-based interactions, reducing the need for manual input and improving the user experience. Unlike traditional chatbots that rely solely on text input, a voice agent enables hands-free, real-time conversations. This makes it a more natural and efficient way of interacting with technology.


Difference Between a Voice Agent and a Traditional Chatbot

| Feature | Voice Agent | Traditional Chatbot |
|---|---|---|
| Input Type | Voice | Text |
| Response Type | Voice | Text |
| Hands-Free Use | Yes | No |
| Response Time | Faster, real-time | Slight delay, depending on typing speed |
| Understanding Accents | Advanced (varies by model) | Not applicable |
| Multimodal Capabilities | Can integrate text and voice | Primarily text-based |
| Context Retention | Higher, remembers past interactions | Varies, often limited to text history |
| User Experience | More natural | Requires typing |

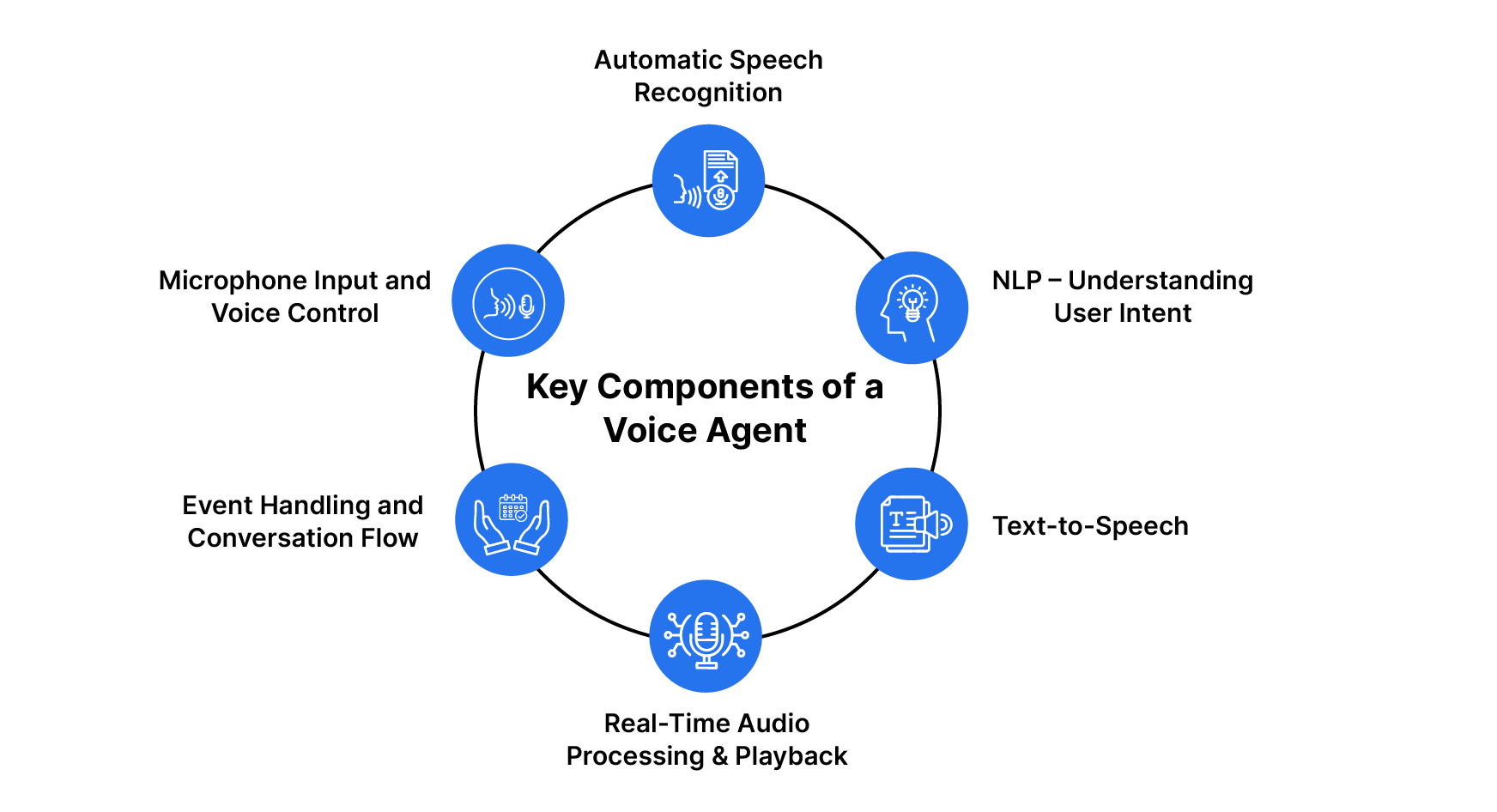

Key Components of a Voice Agent

A voice agent is an AI-driven system that handles voice-based interactions, commonly used in customer support, virtual assistants, and automated call centers. It uses speech recognition, natural language processing (NLP), and text-to-speech technologies to understand user queries and provide appropriate responses.

In this section, we'll explore the key components of a voice agent that enable seamless and efficient voice-based communication.
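The components described below form a single loop: speech is transcribed, the text is sent to a language model, the reply is synthesized, and the audio is played. The sketch below shows only that control flow. It assumes each stage is passed in as a plain Python function; “run_turn” and its parameters are hypothetical names for illustration, not Deepgram or OpenAI APIs.

```python
from typing import Callable, List


def run_turn(
    audio: bytes,
    transcribe: Callable[[bytes], str],
    respond: Callable[[str], str],
    synthesize: Callable[[str], bytes],
    play: Callable[[bytes], None],
) -> str:
    """Run one listen -> think -> speak turn and return the reply text."""
    text = transcribe(audio)        # ASR: speech to text
    if not text.strip():
        return ""                   # silence: skip the LLM and TTS stages
    reply = respond(text)           # NLP: generate a reply
    play(synthesize(reply))         # TTS, then playback
    return reply


# Example run with stand-in stages (no real services involved)
played: List[bytes] = []
reply = run_turn(
    b"raw audio",
    transcribe=lambda audio: "my tire is flat",
    respond=lambda text: f"You said: {text}",
    synthesize=lambda reply_text: reply_text.encode("utf-8"),
    play=played.append,
)
print(reply)  # You said: my tire is flat
```

Keeping each stage as a swappable function makes it possible to exercise the loop with stand-ins before wiring in real speech and language services.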

1. Automatic Speech Recognition (ASR) – Speech-to-Text Conversion

The first step in a voice agent's workflow is to convert spoken language into text. This is done using Automatic Speech Recognition (ASR).

Implementation in Code:

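- The Deepgram API is used for real-time speech transcription.
- A live transcription connection is opened with “deepgram_client.listen.websocket.v("1")” (exposed as “listen.live.v("1")” in older SDK releases); the microphone streams audio into it, and Deepgram transcribes it into text.
- The handler registered for the “LiveTranscriptionEvents.Transcript” event receives each transcript and extracts the spoken words.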
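Live transcription returns interim results while the user speaks, then finalized segments, and finally a “speech_final” flag when it detects a pause. The sketch below approximates the logic of the “on_message” handler in Step 4: it joins finalized segments into one utterance. “FakeResult” is a hypothetical stand-in for the SDK's result object (which exposes the text as “result.channel.alternatives[0].transcript”), so the logic can run without a Deepgram connection.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FakeResult:
    """Hypothetical stand-in for one live transcript message."""
    transcript: str
    is_final: bool
    speech_final: bool


class UtteranceAssembler:
    def __init__(self) -> None:
        self.is_finals: List[str] = []

    def handle(self, result: FakeResult) -> Optional[str]:
        """Return the full utterance once the speaker pauses, otherwise None."""
        if not result.transcript:
            return None
        if result.is_final:                 # interim results are ignored
            self.is_finals.append(result.transcript)
            if result.speech_final:         # end of the user's turn
                utterance = " ".join(self.is_finals)
                self.is_finals.clear()
                return utterance
        return None


assembler = UtteranceAssembler()
stream = [
    FakeResult("my tire", is_final=False, speech_final=False),   # interim
    FakeResult("my tire is", is_final=True, speech_final=False),
    FakeResult("flat", is_final=True, speech_final=True),
]
outputs = [assembler.handle(r) for r in stream]
print(outputs)  # [None, None, 'my tire is flat']
```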

2. Natural Language Processing (NLP) – Understanding User Intent

Once the speech is transcribed into text, the system needs to process and understand it. An OpenAI GPT model is used here for natural language understanding (NLU).

Implementation in Code:

- The transcribed text is appended to the “conversation” list.
- The GPT model (“gpt-4o-mini” in the code below) processes the messages to generate an intelligent response.
- The system message (“system_message”) defines the agent's persona and scope.
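Each request to the chat model is assembled from the system message followed by the stored conversation. Below is a minimal sketch of that assembly as a pure function. The optional “max_messages” cap is an assumption added here for illustration (the article's code sends the full history), showing one way to keep long conversations from growing the request without bound; “build_messages” is a hypothetical name.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_messages(system_message: str, conversation: List[Message],
                   max_messages: int = 0) -> List[Message]:
    """Return the system prompt followed by the (optionally trimmed) history."""
    # max_messages <= 0 means "send everything", as the article's code does
    history = conversation[-max_messages:] if max_messages > 0 else conversation
    return [{"role": "system", "content": system_message}] + list(history)


conversation = [
    {"role": "user", "content": "My tire is flat."},
    {"role": "assistant", "content": "Pull over safely first."},
    {"role": "user", "content": "Then what?"},
]
messages = build_messages("You are a vehicle support agent.", conversation, max_messages=2)
print([m["role"] for m in messages])  # ['system', 'assistant', 'user']
```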

3. Text-to-Speech (TTS) – Generating Audio Responses

Once the system generates a response, it needs to be converted back into speech for a natural conversational experience. Deepgram's Aura Helios TTS model is used to generate speech.

Implementation in Code:

- The “generate_audio()” function sends the generated text to Deepgram's TTS API (“DEEPGRAM_TTS_URL”).
- The response is audio data, which is saved to a file and then played using “pygame.mixer”.
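The TTS call is a plain HTTP POST. The sketch below only builds the request and does not send it. It assumes Deepgram's REST endpoint “https://api.deepgram.com/v1/speak”, the model id “aura-helios-en” for the Aura Helios voice, and token-based authorization; verify these against Deepgram's TTS documentation. The article's “DEEPGRAM_TTS_URL” and “DEEPGRAM_HEADERS” are assumed to hold values of this shape, and “build_tts_request” is a hypothetical helper.

```python
import json
from typing import Dict, Tuple

# Assumed endpoint; check Deepgram's TTS documentation
DEEPGRAM_SPEAK_ENDPOINT = "https://api.deepgram.com/v1/speak"


def build_tts_request(text: str, api_key: str,
                      model: str = "aura-helios-en") -> Tuple[str, Dict[str, str], bytes]:
    """Return (url, headers, body) for a Deepgram TTS request; nothing is sent."""
    url = f"{DEEPGRAM_SPEAK_ENDPOINT}?model={model}"
    headers = {
        "Authorization": f"Token {api_key}",
        "Content-Type": "application/json",
    }
    body = json.dumps({"text": text}).encode("utf-8")
    return url, headers, body


url, headers, body = build_tts_request("Pull over safely.", "YOUR_DEEPGRAM_KEY")
print(url)  # https://api.deepgram.com/v1/speak?model=aura-helios-en
```

With the “requests” library, these values could be sent as “requests.post(url, headers=headers, data=body)”, and the response body would be expected to contain the audio bytes.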

4. Real-Time Audio Processing & Playback

For a real-time voice agent, the generated speech must be played immediately after processing. Pygame's mixer module is used to handle audio playback.

Implementation in Code:

- The “play_audio()” function plays the generated audio using “pygame.mixer”.
- The microphone is muted while the response is playing, preventing unintended audio interference.

5. Event Handling and Conversation Flow

A real-time voice agent needs to handle multiple events, such as opening and closing connections, processing speech, and handling errors.

Implementation in Code:

- Event listeners are registered for handling ASR results (“on_message”), utterance detection (“on_utterance_end”), and errors (“on_error”).
- This keeps the handling of user input and server responses smooth.
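The registration pattern used in this project, “connection.on(event, handler)”, is a simple publish/subscribe mechanism. To show how it behaves, the sketch below implements a hypothetical minimal “EventEmitter” (not the Deepgram SDK's class) in which every handler receives the emitter as its first argument, mirroring the “on_*” callbacks in Step 4 that take “connection” first.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class EventEmitter:
    """Hypothetical minimal emitter mirroring the connection.on(event, handler) pattern."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Callable[..., Any]]] = defaultdict(list)

    def on(self, event: str, handler: Callable[..., Any]) -> None:
        """Register a handler; several handlers may share one event."""
        self._handlers[event].append(handler)

    def emit(self, event: str, *args: Any, **kwargs: Any) -> None:
        """Call every handler registered for the event, passing the emitter first."""
        for handler in self._handlers.get(event, []):
            handler(self, *args, **kwargs)


log: List[str] = []
conn = EventEmitter()
conn.on("Transcript", lambda connection, result, **kwargs: log.append(result))
conn.on("Error", lambda connection, error, **kwargs: log.append(f"error: {error}"))
conn.emit("Transcript", result="my tire is flat")
conn.emit("Error", error="timeout")
conn.emit("Close")  # no handler registered, so nothing happens
print(log)  # ['my tire is flat', 'error: timeout']
```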

6. Microphone Input and Voice Control

A key aspect of a voice agent is capturing live user input from a microphone. The Deepgram SDK's “Microphone” class is used for real-time audio streaming.

Implementation in Code:

- The microphone listens continuously and sends audio data for ASR processing.
- The system can mute and unmute the microphone while playing responses.
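Muting can be thought of as a gate between the audio source and the transcription connection. The sketch below is a hypothetical “MicGate” wrapper, not the Deepgram “Microphone” class. It forwards audio chunks to a send callback (the article passes “dg_connection.send” to “Microphone”) and drops them while muted, using a “threading.Event” as the mute flag so it can be toggled safely from another thread.

```python
import threading
from typing import Callable, List


class MicGate:
    """Hypothetical wrapper that forwards audio chunks unless muted."""

    def __init__(self, send: Callable[[bytes], None]) -> None:
        self._send = send
        self._muted = threading.Event()  # set = muted

    def mute(self) -> None:
        self._muted.set()

    def unmute(self) -> None:
        self._muted.clear()

    def feed(self, chunk: bytes) -> bool:
        """Forward one chunk; return False if it was dropped because of muting."""
        if self._muted.is_set():
            return False
        self._send(chunk)
        return True


sent: List[bytes] = []
gate = MicGate(sent.append)
gate.feed(b"user speech")
gate.mute()
gate.feed(b"assistant audio echo")  # dropped while the agent speaks
gate.unmute()
gate.feed(b"more user speech")
print(sent)  # [b'user speech', b'more user speech']
```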

Accessing the API Keys

Before we start with the steps to build the voice agent, let's first see how to generate the required API keys.

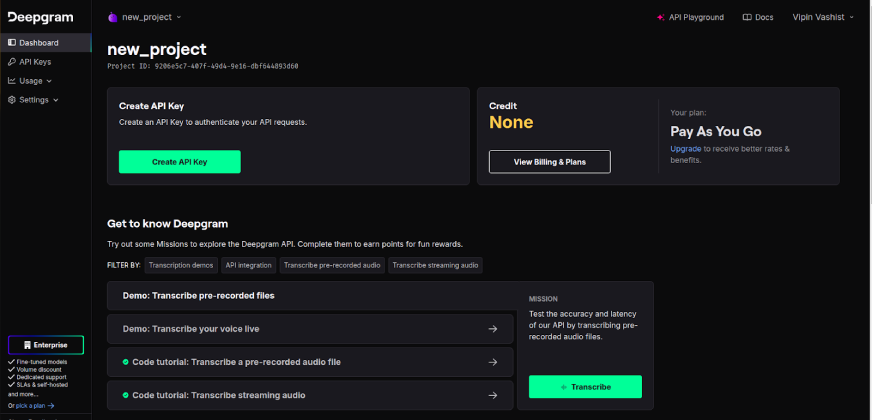

1. Deepgram API Key

To get a Deepgram API key, visit Deepgram and sign up for a Deepgram account. If you already have an account, simply log in.

After logging in, click “Create API Key” to generate a new key. Deepgram also provides $200 in free credits, allowing users to explore its services without an initial cost.

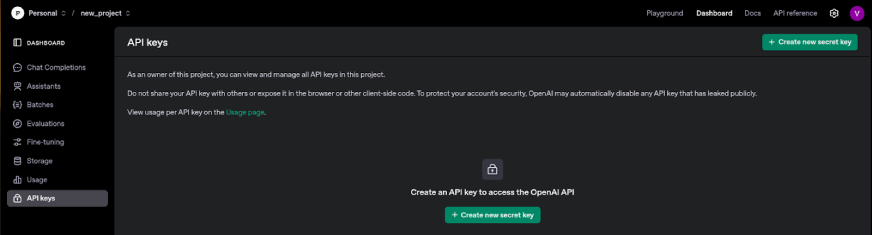

2. OpenAI API Key

To get an OpenAI API key, visit OpenAI and log in to your account. Sign up for one if you don't already have an OpenAI account.

After logging in, click “Create new secret key” to generate a new key.
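Once both keys are created, one option is to expose them as environment variables and check them at startup, so a missing key fails fast with a clear message instead of surfacing later as an authentication error. A minimal sketch follows. The variable names “DEEPGRAM_API_KEY” and “OPENAI_API_KEY” mirror the names used in the article's code, and “load_api_keys” is a hypothetical helper.

```python
import os
from typing import Dict, Iterable


def load_api_keys(names: Iterable[str] = ("DEEPGRAM_API_KEY", "OPENAI_API_KEY")) -> Dict[str, str]:
    """Read API keys from environment variables and fail early if any is missing."""
    keys = {name: os.environ.get(name, "").strip() for name in names}
    missing = [name for name, value in keys.items() if not value]
    if missing:
        raise RuntimeError(f"Missing API keys: {', '.join(missing)}")
    return keys
```

After setting both variables in your shell, “load_api_keys()” returns them as a dictionary keyed by variable name.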

Steps to Build a Voice Agent

Now we're ready to build a voice agent. In this guide, we'll build a customer support voice agent that assists users with their tasks, answers their queries, and provides personalized help in a natural, intuitive way. So let's begin.

Step 1: Set Up API Keys

APIs let us connect to external services, like speech recognition or text generation. To make sure only authorized users can use these services, we need to authenticate with API keys. To do this securely, it's best to store the keys in separate text files or environment variables. This allows the program to read and load the keys securely when needed.

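```python
import os
from dotenv import load_dotenv

# Read each API key from its own text file (replace the placeholder paths with your own)
with open("deepgram_apikey_path", "r") as f:
    DEEPGRAM_API_KEY = f.read().strip()  # name used by the Deepgram client in Step 4

with open("/openai_apikey_path", "r") as f:
    OPENAI_API_KEY = f.read().strip()

# Load any variables defined in a local .env file
load_dotenv()

# Expose the OpenAI key to code that reads it from the environment
os.environ["OPENAI_API_KEY"] = OPENAI_API_KEY
```

Step 2: Define System Instructions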

A voice assistant must follow clear guidelines to make sure it gives helpful and well-organized responses. These rules define the agent's role, such as whether it is acting as customer support or a personal assistant. They also set the tone and style of the responses, such as formal, casual, or professional. You can even set rules on how detailed or concise the responses should be.

In this step, you write a system message that explains the agent's purpose and include sample conversations to help it generate more accurate and relevant responses.

```python
system_message = """You are a customer support agent specializing in vehicle-related issues like flat tires, engine problems, and maintenance tips.

# Instructions:
- Provide clear, easy-to-follow advice.
- Keep responses between 3 and 7 sentences.
- Offer safety tips where necessary.
- If a problem is complex, suggest visiting a professional mechanic.

# Example:
User: "My tire is punctured, what should I do?"
Response: "First, pull over safely and turn on your hazard lights. If you have a spare tire, follow your vehicle manual to replace it. Otherwise, call for roadside assistance. Stay in a safe location while waiting."
"""
```

Step 3: Audio Text Processing

To create more natural-sounding speech, we've implemented a dedicated “AudioTextProcessor” class that handles the segmentation of text responses:

- The “segment_text” method splits long responses at natural sentence boundaries using regular expressions.
- This allows the TTS engine to process each sentence with appropriate pauses and intonation.
- The result is more human-like speech patterns that improve the user experience.

```python
import re


class AudioTextProcessor:
    @staticmethod
    def segment_text(text):
        """Split text into segments at sentence boundaries for better TTS."""
        # Find runs of whitespace that follow '.', '!' or '?'
        sentence_boundaries = re.finditer(r'(?<=[.!?])\s+', text)
        boundaries_indices = [boundary.start() for boundary in sentence_boundaries]
        segments = []
        start = 0
        for boundary_index in boundaries_indices:
            segments.append(text[start:boundary_index + 1].strip())
            start = boundary_index + 1
        # Add whatever follows the last boundary
        segments.append(text[start:].strip())
        return segments
```

Temporary File Management

To handle audio files cleanly and efficiently, our enhanced implementation uses Python's “tempfile” module:

- Temporary files are created to store audio data during playback.
- Each audio file is deleted after use.
- This prevents unused files from accumulating on the system and manages resources efficiently.
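The temporary-file pattern can be sketched on its own. This is a minimal version, assuming playback happens between writing and cleanup; “write_temp_audio” and “cleanup” are hypothetical helper names. “delete=False” keeps the file on disk after it is closed, so another library, such as pygame, can open it by path.

```python
import os
import tempfile


def write_temp_audio(audio_bytes: bytes, suffix: str = ".mp3") -> str:
    """Write audio bytes to a named temporary file and return its path."""
    # delete=False keeps the file after it is closed, so it can be reopened by path
    with tempfile.NamedTemporaryFile(delete=False, suffix=suffix) as f:
        f.write(audio_bytes)
        path = f.name
    return path


def cleanup(path: str) -> None:
    """Remove the temporary file if it still exists (safe to call twice)."""
    if os.path.exists(path):
        os.remove(path)


temp_path = write_temp_audio(b"placeholder bytes, not real MP3 data")
# ... play the file here, e.g. with pygame.mixer.music.load(temp_path) ...
cleanup(temp_path)
print(os.path.exists(temp_path))  # False
```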

Threading for Non-Blocking Audio Playback

A key enhancement in our new implementation is the use of threading for audio playback:

- Audio responses are played in a thread separate from the main application.
- This allows the voice agent to keep listening and processing while speaking.
- The microphone is muted during playback to prevent feedback loops.
- A “threading.Event” object (“mic_muted”) coordinates this behavior across threads.
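The coordination described above can be sketched with the standard library alone. In this minimal version, “fake_play” is a hypothetical stand-in for “play_audio()” that simply sleeps. The point is that “speak_async” returns immediately, the main thread keeps running, and the background thread clears the shared “mic_muted” flag in a “finally” block when playback ends.

```python
import threading
import time

# Shared flag: set while the assistant is speaking
mic_muted = threading.Event()


def fake_play(duration: float) -> None:
    """Hypothetical stand-in for play_audio(): 'plays' by sleeping."""
    try:
        time.sleep(duration)
    finally:
        mic_muted.clear()  # always release the mute, even if playback fails


def speak_async(duration: float) -> threading.Thread:
    """Set the mute flag, start playback in the background, and return at once."""
    mic_muted.set()
    thread = threading.Thread(target=fake_play, args=(duration,), daemon=True)
    thread.start()
    return thread


playback = speak_async(0.2)
# The main thread is free here, e.g. to keep the transcription connection alive
playback.join()  # wait only for this demo
print(mic_muted.is_set())  # False
```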

Step 4: Implement Speech-to-Text Processing

To understand user commands, the assistant needs to convert spoken words into text. This is done with Deepgram's speech-to-text API, which can transcribe speech in real time. It can process different languages and accents, and it distinguishes between interim (incomplete) and final (confirmed) transcriptions.

In this step, the process begins by recording audio from the microphone. The audio is then sent to Deepgram's API for processing, and the text output is received and stored for further use.

```python
import threading
from deepgram import DeepgramClient, LiveTranscriptionEvents, LiveOptions, Microphone

# Initialize clients
deepgram_client = DeepgramClient(api_key=DEEPGRAM_API_KEY)

# Shared state used by the handlers below
is_finals = []                 # finalized transcript pieces of the current utterance
mic_muted = threading.Event()  # set while the assistant's response is playing

# Set up Deepgram connection
dg_connection = deepgram_client.listen.websocket.v("1")

# Define event handler callbacks
def on_open(connection, event, **kwargs):
    print("Connection Open")

def on_message(connection, result, **kwargs):
    # Ignore messages while the microphone is muted for the assistant's response
    if mic_muted.is_set():
        return
    sentence = result.channel.alternatives[0].transcript
    if len(sentence) == 0:
        return
    if result.is_final:
        is_finals.append(sentence)
        # speech_final marks the end of the user's turn
        if result.speech_final:
            utterance = " ".join(is_finals)
            print(f"User said: {utterance}")
            is_finals.clear()
            # Process user input and generate response
            # [processing code here]

def on_speech_started(connection, speech_started, **kwargs):
    print("Speech Started")

def on_utterance_end(connection, utterance_end, **kwargs):
    # Flush finalized text that was not followed by speech_final
    if len(is_finals) > 0:
        utterance = " ".join(is_finals)
        print(f"Utterance End: {utterance}")
        is_finals.clear()

def on_close(connection, close, **kwargs):
    print("Connection Closed")

def on_error(connection, error, **kwargs):
    print(f"Handled Error: {error}")

# Register event handlers
dg_connection.on(LiveTranscriptionEvents.Open, on_open)
dg_connection.on(LiveTranscriptionEvents.Transcript, on_message)
dg_connection.on(LiveTranscriptionEvents.SpeechStarted, on_speech_started)
dg_connection.on(LiveTranscriptionEvents.UtteranceEnd, on_utterance_end)
dg_connection.on(LiveTranscriptionEvents.Close, on_close)
dg_connection.on(LiveTranscriptionEvents.Error, on_error)

# Configure live transcription options with advanced features
options = LiveOptions(
    model="nova-2",
    language="en-US",
    smart_format=True,
    encoding="linear16",
    channels=1,
    sample_rate=16000,
    interim_results=True,
    utterance_end_ms="1000",
    vad_events=True,
    endpointing=500,
)

# Extra parameters passed to Deepgram when the connection starts
addons = {
    "no_delay": "true"
}
```

Step 5: Handle Conversations

Once the assistant has transcribed the user's speech into text, it needs to analyze the text and generate an appropriate response. To do this, we use OpenAI's gpt-4o-mini model, which can understand the context of previous messages and generate human-like responses. Because the conversation history is sent along with each request, the agent can maintain continuity across turns.

In this step, the assistant stores the user's queries and its own responses in a “conversation” list. Then gpt-4o-mini is used to generate a response, which is returned as the assistant's reply.

```python
from openai import OpenAI

# Initialize OpenAI client
openai_client = OpenAI(api_key=OPENAI_API_KEY)

# Running chat history (user and assistant turns)
conversation = []

def get_ai_response(user_input):
    """Get response from OpenAI API."""
    try:
        # Add user message to conversation
        conversation.append({"role": "user", "content": user_input.strip()})

        # Prepare messages for API: system prompt first, then the history
        messages = [{"role": "system", "content": system_message}]
        messages.extend(conversation)

        # Get response from OpenAI
        chat_completion = openai_client.chat.completions.create(
            model="gpt-4o-mini",
            messages=messages,
            temperature=0.7,
            max_tokens=150,
        )

        # Extract and save assistant's response
        response_text = chat_completion.choices[0].message.content.strip()
        conversation.append({"role": "assistant", "content": response_text})
        return response_text
    except Exception as e:
        print(f"Error getting AI response: {e}")
        return "I'm having trouble processing your request. Please try again."
```

Step 6: Convert Text to Speech

The assistant should speak its response aloud instead of just displaying text. To do this, Deepgram's text-to-speech API is used to convert the text into natural-sounding speech.

In this step, the agent's text response is sent to Deepgram's API, which processes it and returns the speech as audio. Finally, the audio file is played using Python's pygame library, allowing the assistant to speak its response to the user.

```python
import re
import requests
import pygame

# DEEPGRAM_TTS_URL and mic_muted (Step 4) are assumed to be defined elsewhere in the script


class AudioTextProcessor:
    @staticmethod
    def segment_text(text):
        """Split text into segments at sentence boundaries for better TTS."""
        sentence_boundaries = re.finditer(r'(?<=[.!?])\s+', text)
        boundaries_indices = [boundary.start() for boundary in sentence_boundaries]
        segments = []
        start = 0
        for boundary_index in boundaries_indices:
            segments.append(text[start:boundary_index + 1].strip())
            start = boundary_index + 1
        segments.append(text[start:].strip())
        return segments

    @staticmethod
    def generate_audio(text, headers):
        """Generate audio using Deepgram TTS API."""
        payload = {"text": text}
        try:
            with requests.post(DEEPGRAM_TTS_URL, stream=True, headers=headers, json=payload) as r:
                r.raise_for_status()
                return r.content
        except requests.exceptions.RequestException as e:
            print(f"Error generating audio: {e}")
            return None


# Shared instance used in the following steps
audio_processor = AudioTextProcessor()


def play_audio(file_path):
    """Play audio file using pygame."""
    try:
        pygame.mixer.init()
        pygame.mixer.music.load(file_path)
        pygame.mixer.music.play()
        # Block this (playback) thread until the clip finishes
        while pygame.mixer.music.get_busy():
            pygame.time.Clock().tick(10)
        # Stop the mixer and release resources
        pygame.mixer.music.stop()
        pygame.mixer.quit()
    except Exception as e:
        print(f"Error playing audio: {e}")
    finally:
        # Signal that playback is done so transcripts are processed again
        mic_muted.clear()
```

Step 7: Welcome and Farewell Messages

A well-designed voice agent creates a more engaging and interactive experience by greeting users at startup and providing a farewell message on exit. This helps establish a friendly tone and ensures a smooth end to the interaction.

```python
import tempfile
import threading

def generate_welcome_message():
    """Generate and play the welcome message audio; return its file path."""
    welcome_msg = "Hello, I'm Eric, your vehicle support assistant. How can I help with your vehicle today?"

    # Create temporary file for welcome message
    with tempfile.NamedTemporaryFile(delete=False, suffix='.mp3') as welcome_file:
        welcome_path = welcome_file.name

    # Generate audio for welcome message
    welcome_audio = audio_processor.generate_audio(welcome_msg, DEEPGRAM_HEADERS)
    if welcome_audio:
        with open(welcome_path, "wb") as f:
            f.write(welcome_audio)
        # Play welcome message in the background; transcripts are ignored meanwhile
        mic_muted.set()
        threading.Thread(target=play_audio, args=(welcome_path,)).start()

    return welcome_path
```

Microphone Management

One key enhancement is proper management of the microphone during conversations:

- The microphone is automatically muted while the agent is speaking.
- This prevents the agent from "hearing" its own voice.
- A threading event object coordinates this behavior between threads.

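```python
# Mute microphone and play response
mic_muted.set()  # on_message() ignores transcripts until play_audio() clears this flag
microphone.mute()
threading.Thread(target=play_audio, args=(temp_path,)).start()
time.sleep(0.2)
# Audio streaming resumes, but transcripts stay ignored while mic_muted is set
microphone.unmute()
```

Step 8: Exit Commands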

To ensure a smooth and intuitive user interaction, the voice agent listens for common exit commands such as "exit," "quit," "goodbye," or "bye." When an exit command is detected, the system acknowledges it and shuts down safely.
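Matching exit words as substrings, as the check in this step does, can misfire: “quit” is contained in “quite”, and “exit” in “exiting”. Below is a minimal sketch of a stricter alternative that compares whole words instead. “is_exit_command” and “EXIT_WORDS” are hypothetical names; the word list is the same one used in this step.

```python
import re

EXIT_WORDS = {"exit", "quit", "goodbye", "bye"}


def is_exit_command(utterance: str) -> bool:
    """True if any exit word appears as a whole word (case-insensitive)."""
    words = re.findall(r"[a-z']+", utterance.lower())
    return any(word in EXIT_WORDS for word in words)


print(is_exit_command("Okay, goodbye!"))      # True
print(is_exit_command("I'm quite worried."))  # False: 'quite' is not 'quit'
```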

```python
# Check for exit commands (runs inside on_message() once a full utterance is available)
if any(exit_cmd in utterance.lower() for exit_cmd in ["exit", "quit", "goodbye", "bye"]):
    print("Exit command detected. Shutting down...")
    farewell_text = "Thank you for using the vehicle support assistant. Goodbye!"

    with tempfile.NamedTemporaryFile(delete=False, suffix='.mp3') as temp_file:
        temp_path = temp_file.name

    farewell_audio = audio_processor.generate_audio(farewell_text, DEEPGRAM_HEADERS)
    if farewell_audio:
        with open(temp_path, "wb") as f:
            f.write(farewell_audio)
        # Mute microphone and play farewell (blocking, so it finishes before exit)
        mic_muted.set()
        microphone.mute()
        play_audio(temp_path)
        time.sleep(0.2)

    # Clean up and exit
    if os.path.exists(temp_path):
        os.remove(temp_path)

    # End the program immediately (os._exit skips normal interpreter cleanup)
    os._exit(0)
```

Step 9: Error Handling and Robustness

To ensure a seamless and resilient user experience, the voice agent must handle errors gracefully. Unexpected issues such as network failures, missing audio responses, or invalid user input can disrupt interactions if not properly managed.

Exception Handling

Try-except blocks are used throughout the code to catch and handle errors gracefully:

- In the audio generation and playback functions.
- During API interactions with OpenAI and Deepgram.
- In the main event handling loop.

```python
try:
    # Generate audio for each segment and append it to a single output file
    with open(temp_path, "wb") as output_file:
        for segment_text in text_segments:
            audio_data = audio_processor.generate_audio(segment_text, DEEPGRAM_HEADERS)
            if audio_data:
                output_file.write(audio_data)
except Exception as e:
    print(f"Error generating or playing audio: {e}")
```

Resource Cleanup

Proper resource management is essential for a reliable application:

- Temporary files are deleted after use.
- Pygame audio resources are properly released.
- Microphone and connection objects are closed on exit.
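In the “main()” function shown in Step 10, the cleanup calls sit inside the “try” block, so an exception raised earlier would skip them. A common way to guarantee cleanup is a “finally” clause. The sketch below is hypothetical: “FakeResource” stands in for the microphone and the Deepgram connection, and “run_session” raises an exception on demand to show that the cleanup runs either way.

```python
import os
import tempfile
from typing import List


class FakeResource:
    """Hypothetical stand-in for the microphone or Deepgram connection."""

    def __init__(self, name: str, log: List[str]) -> None:
        self.name = name
        self.log = log

    def finish(self) -> None:
        self.log.append(f"{self.name} finished")


def run_session(fail: bool, log: List[str]) -> None:
    mic = FakeResource("microphone", log)
    conn = FakeResource("connection", log)
    with tempfile.NamedTemporaryFile(delete=False, suffix=".mp3") as f:
        welcome_path = f.name
    try:
        if fail:
            raise RuntimeError("simulated network failure")
        log.append("session ran")
    except RuntimeError as e:
        log.append(f"error: {e}")
    finally:
        # Runs on success and on failure, so nothing is left behind
        mic.finish()
        conn.finish()
        if os.path.exists(welcome_path):
            os.remove(welcome_path)
        log.append(f"temp file removed: {not os.path.exists(welcome_path)}")


events: List[str] = []
run_session(fail=True, log=events)
print(events)
```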

```python
# Clean up
microphone.finish()
dg_connection.finish()

# Clean up welcome file
if os.path.exists(welcome_file):
    os.remove(welcome_file)
```

Step 10: Final Steps to Run the Voice Agent

We need a main function to tie everything together and make sure the assistant works smoothly. The main function will:

- Listen to the user's speech.
- Convert it to text and generate a response using AI.
- Convert the response into speech, and then play it back to the user.

This process ensures that the assistant can interact with the user in a complete, seamless flow.

```python
def main():
    """Main function to run the voice assistant."""
    print("Starting Vehicle Support Voice Assistant 'Eric'...")
    print("Speak after the welcome message.")
    print("\nPress Enter to stop the assistant...\n")

    # Generate and play welcome message
    welcome_file = generate_welcome_message()
    time.sleep(0.5)  # Give the welcome message time to start

    try:
        # Initialize is_finals list to store transcription segments
        is_finals = []

        # Set up Deepgram connection
        dg_connection = deepgram_client.listen.websocket.v("1")

        # Register event handlers
        # [event registration code here]

        # Configure and start Deepgram connection
        if not dg_connection.start(options, addons=addons):
            print("Failed to connect to Deepgram")
            return

        # Start microphone; captured audio is streamed to Deepgram via dg_connection.send
        microphone = Microphone(dg_connection.send)
        microphone.start()

        # Wait for user to press Enter to stop
        input("")

        # Clean up
        microphone.finish()
        dg_connection.finish()

        # Clean up welcome file
        if os.path.exists(welcome_file):
            os.remove(welcome_file)

        print("Assistant stopped.")
    except Exception as e:
        print(f"Error: {e}")


if __name__ == "__main__":
    main()
```

For the complete version of the code, please refer here.

Note: Since we are using the free version of Deepgram, the agent's response time tends to be slower due to the limitations of the free plan.

Use Cases of Voice Agents

1. Customer Support Automation

Examples:

- Banking & Finance: Answering queries about account balances, transactions, or credit card bills.
- E-commerce: Providing order status, return policies, or product recommendations.
- Airlines & Travel: Assisting with flight bookings, cancellations, and baggage policies.

Example Conversation:

User: "Where is my order?"

Agent: "Your order was shipped on February 17 and is expected to arrive by February 20."

2. Healthcare Virtual Assistants

Examples:

- Hospitals & Clinics: Booking appointments with doctors.
- Home Care: Reminding elderly patients to take their medications.
- Telemedicine: Providing basic symptom analysis before connecting to a doctor.

Example Conversation:

User: "I have a headache and fever. What should I do?"

Agent: "Based on your symptoms, you may have a mild fever. Stay hydrated and rest. If symptoms persist, consult a doctor."

3. Voice Assistants for Vehicles

Examples:

- Navigation: "Find the nearest gas station."
- Music Control: "Play my road trip playlist."
- Emergency Help: "Call roadside assistance."

Example Conversation:

User: "How's the traffic on my route?"

Agent: "There's moderate traffic. Estimated arrival time is 45 minutes."


Conclusion

Voice agents are revolutionizing communication by making interactions natural, efficient, and accessible. They have diverse use cases across industries like customer support, smart homes, healthcare, and finance.

By combining speech-to-text, text-to-speech, and NLP, they understand context, provide intelligent responses, and handle complex tasks seamlessly. As AI advances, these systems will become more personalized and human-like. Their ability to learn from interactions will allow them to offer increasingly tailored and intuitive experiences, making them indispensable companions in both personal and professional settings.

Frequently Asked Questions

Q1. What is a voice agent?

A. A voice agent is an AI-powered system that can process speech, understand context, and respond intelligently using speech-to-text, NLP, and text-to-speech technologies.

Q2. What are the main components of a voice agent?

A. The main components include:

– Speech-to-Text (STT): Converts spoken words into text.
– Natural Language Processing (NLP): Understands and processes the input.
– Text-to-Speech (TTS): Converts text responses into human-like speech.
– AI Model: Generates meaningful and context-aware replies.

Q3. Where are voice agents commonly used?

A. Voice agents are widely used in customer service, healthcare, virtual assistants, smart homes, banking, automotive support, and accessibility solutions.

Q4. Can voice agents understand different languages and accents?

A. Yes, many advanced voice agents support multiple languages and accents, improving accessibility and user experience worldwide.

Q5. Can voice agents replace human support agents?

A. No, they are designed to assist and enhance human agents by handling repetitive tasks, allowing human agents to focus on complex issues.