Within the quickly evolving panorama of software program improvement, the intersection of synthetic intelligence, knowledge validation, and database administration has opened up unprecedented potentialities. This weblog put up explores an revolutionary strategy to SQL-code era and SQL code clarification utilizing the Newest PydanticAI Framework and Google’s Gemini-1.5 mannequin, demonstrating how cutting-edge AI applied sciences can streamline and improve database question improvement.

For builders, knowledge scientists, and knowledge analysts, this exploration gives a glimpse into the way forward for clever code era from pure language processing, the place complicated database queries will be created with ease and accuracy.

Studying Targets

- Perceive the basics of Pydantic and PydanticAI.

- Learn to implement an AI-powered SQL code era system.

- Discover the capabilities of Gemini-1.5-Flash in pure language for SQL translation.

- Achieve perception into constructing clever AI brokers for database interactions.

This text was revealed as part of the Information Science Blogathon.

What’s PydanticAI?

PydanticAI is a robust Python library that revolutionizes knowledge validation and kind checking. It supplies a declarative strategy to defining knowledge fashions, making it straightforward to create and validate complicated knowledge buildings.

Vital options of Pydantic embrace:

Customization

- Validates a variety of knowledge varieties, together with primitive varieties and sophisticated nested buildings.

- Helps almost any Python object for validation and serialization

Flexibility

Permits management over knowledge validation strictness:

- Coerce knowledge to the anticipated kind

- Implement strict type-checking when wanted

Serialization

- Help seamless conversion between Pydantic object, dictionary, and JSON.

- Permits self-documenting APIs and compatibility with instruments that use JSON schema.

Efficiency

- Core validation logic is written in Rust for distinctive pace and effectivity.

- Excellent for high-throughput functions like scalable REST APIs.

Ecosystem

- Extensively utilized in common Python libraries akin to FastAPI, Langchain, LlamaIndex, and plenty of extra.

- Fashionable Agentic LLM can’t be carried out with out Pydantic.

Examples of PydanticAI in Motion

PydanticAI simplifies knowledge validation and type-checking in Python, making it a robust device for creating strong knowledge fashions. Let’s discover some sensible examples that showcase its capabilities.

Fundamental Information Validation

from pydantic import BaseModel

class Person(BaseModel):

identify: str

age: int

# Legitimate knowledge

consumer = Person(identify="Alice", age=30)

print(consumer)

print("=====================================")

# Invalid knowledge (age is a string)

attempt:

consumer = Person(identify="Alice", age="thirty")

besides Exception as e:

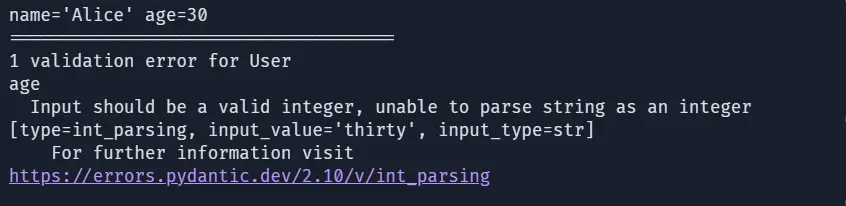

print(e)The above code defines a Person mannequin utilizing Pydantic’s BaseModel, implementing identify as a string and age as an integer. It validates appropriate knowledge however raises a validation error when invalid knowledge(a string for age) is supplied.

Output:

Auto Sort Coercion

from pydantic import BaseModel

class Product(BaseModel):

value: float

amount: int

# Information with mismatched varieties

product = Product(value="19.99", amount="5")

print(product)

print(kind(product.value))

print(kind(product.amount))Right here, the Product mannequin with value as float and amount as an integer. Pydantic robotically coerces string inputs (“19.99” and “5”) into the proper varieties (float and int), demonstrating its kind conversion characteristic.

Output:

Nested Mannequin

from pydantic import BaseModel

class Tackle(BaseModel):

avenue: str

metropolis: str

class Person(BaseModel):

identify: str

tackle: Tackle

# Legitimate knowledge

consumer = Person(identify="Bob", tackle={"avenue": "123 Foremost St", "metropolis": "Wonderland"})

print(consumer)

# Entry nested attributes

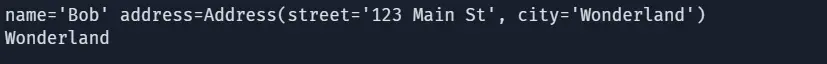

print(consumer.tackle.metropolis)Right here, We outline a nested Person mannequin containing an Tackle mannequin. Pydantic permits nested validation and auto-converts dictionaries into fashions. Legitimate knowledge initializes a Person object, and you may entry nested attributes like ‘consumer.tackle.metropolis’ immediately.

Output:

Validation with Customized Rule

from pydantic import BaseModel, Subject, field_validator

class Person(BaseModel):

identify: str

age: int = Subject(..., gt=0, description="Age should be better than zero")

@field_validator("identify")

def name_must_be_non_empty(cls, worth):

if not worth.strip():

elevate ValueError("Title can't be empty")

return worth

# Legitimate knowledge

consumer = Person(identify="Charlie", age=25)

print(consumer)

# invalid knowledge

attempt:

consumer = Person(identify=" ", age=-5)

besides Exception as e:

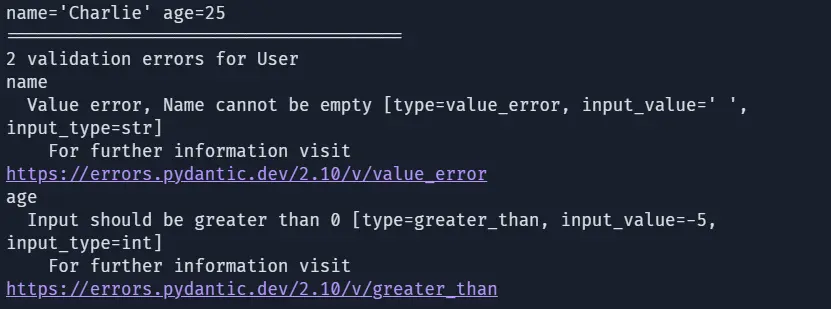

print(e)Right here, We outline a Person mannequin with a validation rule, age should be better than 0, and the identify can’t be empty (validated through the name_must_be_non_empty methodology). Legitimate knowledge creates a Person occasion, whereas invalid knowledge (empty identify or unfavorable age) raises detailed validation errors, demonstrating Pydantic’s validation capabilities.

Output:

These are a few of the core examples of Pydantic I hope they provide help to to know the essential precept of Information Validation.

What’s an AI Agent?

AI brokers are clever techniques designed to autonomously carry out duties, make choices, and work together with their surroundings to attain particular aims. These brokers are usually not new however latest fast improvement in generative AI and mixing it with brokers makes Agentic software program improvement on new period. Now, brokers can course of inputs, execute actions, and adapt dynamically. Their conduct mimics human-like problem-solving, enabling them to perform in varied domains with minimal human intervention.

What’s Agentic Workflow?

An agentic workflow refers back to the buildings, goal-driven sequence of duties managed and executed by one or a number of AI brokers. Unline inflexible conventional workflow, agentic workflow displays adaptability, autonomy, and context-awareness. AI brokers inside these workflows can independently make choices, delegate subtasks, and be taught from suggestions, resulting in environment friendly and optimized outcomes.

Fashionable Utilization of AI Brokers and Agentic Workflows

The mixing of AI brokers and agentic workflows has revolutionized industries by automating complicated duties, enhancing decision-making, and driving effectivity. These clever techniques adapt dynamically, enabling smarter options throughout numerous domains.

Enterprise Automation

AI brokers automate repetitive duties like buyer assist by way of chatbots, e mail administration, and gross sales pipeline optimization. They improve productiveness by liberating up human sources from higher-value duties.

Software program Improvement

AI-powered brokers speed up software program lifecycles by producing, testing, and debugging code, thereby decreasing improvement time and human error.

Healthcare

AI brokers help in medical prognosis, affected person monitoring, and therapy personalization, enhancing healthcare supply and operational effectivity.

Finance

Agentic workflows in monetary techniques automate fraud detection, danger assessments, and funding evaluation, enabling sooner and extra dependable decision-making.

E-Commerce

Intelligence businesses improve personalization in buying experiences, optimizing product suggestions and customer support.

The rise of AI brokers and agentic workflows signifies a shift towards extremely autonomous techniques able to managing complicated processes. Their adaptability and studying capabilities make them indispensable for contemporary industries, driving innovation, scalability, and effectivity throughout domains. As AI continues to evolve, AI brokers will additional combine into our each day workflows, remodeling how duties are managed and executed.

What’s the PydanticAI Framework?

PydanticAI is a Python Agent framework developed by the creator of Pydantic, FastAPI to streamline the development of production-grade functions using Generative AI, It emphasizes kind security, model-agnostic design, and seamless integration with massive language fashions (LLMs).

Key options PydanticAI consists of:

- Mannequin-Agnostic Help: PydanticAI is suitable with varied fashions, together with OpenAI, Antropic, Gemini, Groq, Mistral, and Ollama, with an easy interface to include further fashions.

- Sort-safety: Leveraging Python’s kind techniques and Pydantic’s validations, PydanticAI ensures strong and scalable agent improvement.

- Dependency Injection System: It introduces a novel, ty-safe dependency injection mechanism, enhancing testing and evaluation-driven improvement.

- Structured Response Validation: Using Pydantic’s validation capabilities, ensures correct and dependable construction responses.

- Logfire Integration: Presents integration with Pydantic Logfire for enhanced debugging and monitoring of LLm-powered functions.

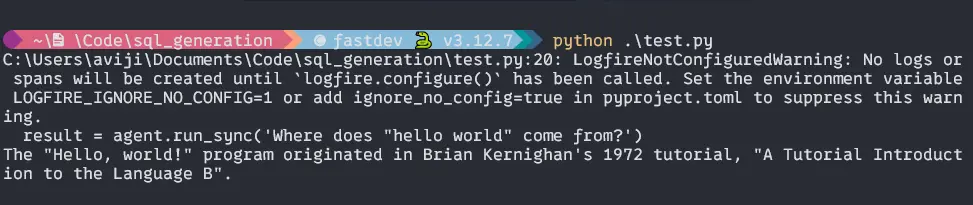

Here’s a minimal instance of PydanticAI:

import os

from pydantic_ai import Agent

from pydantic_ai.fashions.gemini import GeminiModel

from dotenv import load_dotenv

load_dotenv()

gemini_api_key = os.getenv("<GOOGLE_API_KEY>")

mannequin = GeminiModel(

"gemini-1.5-flash",

api_key=gemini_api_key,

)

agent = Agent(

mannequin=mannequin,

system_prompt="Be concise, reply with one sentence.",

)

consequence = agent.run_sync('The place does "hiya world" come from?')

print(consequence.knowledge)Output:

Now it’s time to do some actual stuff. We are going to construct a Postgres SQL Question Era utilizing the PydanticAI Agent Framework.

Getting Began with Your Challenge

Lay the inspiration in your undertaking with a step-by-step information to organising the important instruments and surroundings.

Setting Setting

We are going to create a conda surroundings for the undertaking.

#create an env

$ conda create --name sql_gen python=3.12

# activate the env

$ conda activate sql_genNow, create a undertaking folder

# create a folder

$ mkdir sql_code_gen

# become the folder

$ cd sql_code_genSet up Postgres and Load Database

To put in the Postgres, psql-command-tools, and pgadmin-4, Simply go to EDB obtain your installer in your techniques, and set up all of the instruments in a single go.

Now obtain the dvdrental database from right here and to load it to Postgres comply with these steps

Step1: Open your terminal

psql -U postgres

# It's going to ask for a password put it Step2: Create a database

# Within the postgres=#

CREATE DATABASE dvdrental;Step3: Command for Terminal

Now, exit the psql command after which kind within the terminal

pg_restore -U postgres -d dvdrental D:/sampledb/postgres/dvdrental.tarStep4: Connecing to psql

Now, Hook up with the psql and examine in case your database is loaded or not.

psql -U postgres

# Join with dvdrental

c dvdrental

# let's have a look at the tables

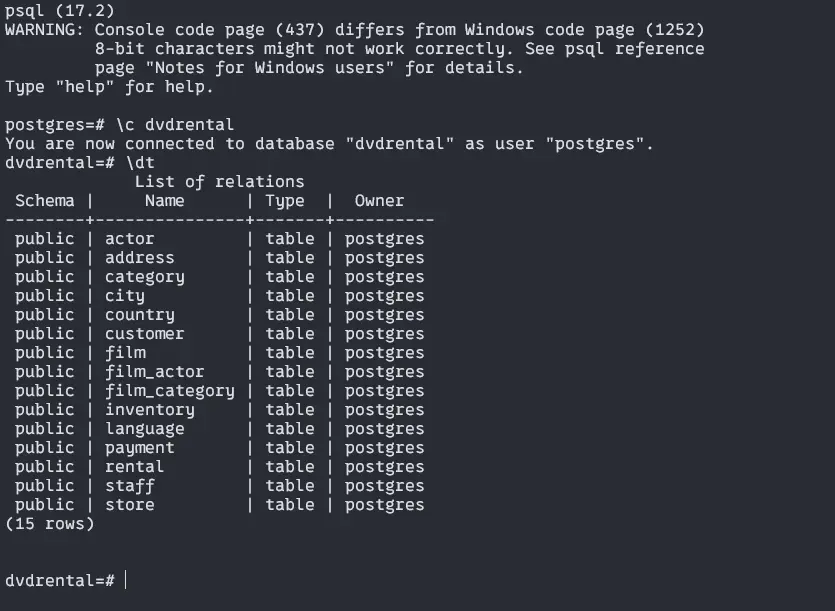

dtOutput:

In case you see the above tables then you’re okay. We’re all set to begin our essential undertaking.

Now Set up the mandatory Python libraries into the sql_gen conda env.

conda activate sql_gen

# set up libraries

pip set up pydantic asyncpg asyncio pydantic-ai

pip set up python-dotenv fastapi google-generativeai

pip set up devtools annotated-types type-extensionsChallenge Construction

Our undertaking has 4 information specifically essential, fashions, service, and schema.

sql_query_gen/

|

|--main.py

|--models.py

|--schema.py

|--service.py

|--.env

|--__init__.py

|--.gitignoreStep-by-Step Information to Implementing Your Challenge

Dive into the detailed steps and sensible strategies to deliver your undertaking from idea to actuality with this complete implementation information.

Pydantic Fashions

We are going to begin by creating knowledge fashions within the fashions.py file

from dataclasses import dataclass

from typing import Annotated

import asyncpg

from annotated_types import MinLen

from pydantic import BaseModel, Subject

@dataclass

class Deps:

conn: asyncpg.Connection

class Success(BaseModel):

sql_query: Annotated[str, MinLen(1)]

clarification: str = Subject("", description="Clarification of the SQL question, as markdown")

class InvalidRequest(BaseModel):

error_message: strWithin the above code,

- The Deps class manages database connection dependencies. @dataclass robotically generates particular strategies like __init__ and __repr__. Conn is typed as `asyncpg.Connection` and represents an lively PostgreSQL connection. This design follows dependency injection patterns, making the code extra testable and maintainable.

- The Success Class represents a profitable SQL-query era, sql_query should be a non-empty string (MinLen(1)) and use Annotated so as to add validation constraints. The clarification is an Elective discipline with a default empty string.

- The InvalidRequest class is the Error Response Mannequin, representing failed SQL-query era makes an attempt.

This code established the inspiration for Database connectivity administration, enter validation, Structured response dealing with, and Error dealing with.

Service module

Now, we are going to implement the PydanticAI companies for SQL era within the service module.

Import library and Configuration

import os

from typing import Union

from dotenv import load_dotenv

import asyncpg

from typing_extensions import TypeAlias

from pydantic_ai import Agent, ModelRetry, RunContext

from pydantic_ai.fashions.gemini import GeminiModel

from schema import DB_SCHEMA

from fashions import Deps, Success, InvalidRequestTo configure, create a .env file within the undertaking root and put your Gemini API key there

# .env

GEMINI_API_KEY="asgfhkdhjy457gthjhajbsd"Then within the service.py file:

load_dotenv()

gemini_api_key = os.getenv("GOOGLE_API_KEY")It’s going to load the Google API key from the `.env` file.

Creating mannequin and Agent

Response: TypeAlias = Union[Success, InvalidRequest]

mannequin = GeminiModel(

"gemini-1.5-flash",

api_key=gemini_api_key,

)

agent = Agent(

mannequin,

result_type=Response, # kind: ignore

deps_type=Deps,

)- First Outline a Response kind that may be both Success or InvalidRequest

- Initializes the Gemini 1.5 Flash mannequin with API key

- Create a PydanticAI agent with the desired response and dependency varieties

System Immediate Definition

Now we are going to outline the system immediate for our SQL question era.

@agent.system_prompt

async def system_prompt() -> str:

return f"""

Given the next PostgreSQL desk of data, your job is to

write a SQL question that fits the consumer's request.

Database schema:

{DB_SCHEMA}

Instance

request: Discover all movies with a rental fee better than $4.00 and a score of 'PG'

response: SELECT title, rental_rate

FROM movie

WHERE rental_rate > 4.00 AND score = 'PG';

Instance

request: Discover the movie(s) with the longest size

response: SELECT title, size

FROM movie

WHERE size = (SELECT MAX(size) FROM movie);

Instance

request: Discover the common rental period for movies in every class

response: SELECT c.identify, AVG(f.rental_duration) AS average_rental_duration

FROM class c

JOIN film_category fc ON c.category_id = fc.category_id

JOIN movie f ON fc.film_id = f.film_id

GROUP BY c.identify

ORDER BY average_rental_duration DESC;

"""Right here, we outline the bottom context for the AI mannequin and supply instance queries to information the mannequin’s responses. We additionally embrace the database schema data within the mannequin in order that the mannequin can analyze the schema and generate a greater response.

Response Validation

To make the response from the AI mannequin error-free and as much as the tasks necessities, we simply validate the responses.

@agent.result_validator

async def validate_result(ctx: RunContext[Deps], consequence: Response) -> Response:

if isinstance(consequence, InvalidRequest):

return consequence

# gemini usually provides extraneos backlashes to SQL

consequence.sql_query = consequence.sql_query.exchange("", " ")

if not consequence.sql_query.higher().startswith("SELECT"):

elevate ModelRetry("Please create a SELECT question")

attempt:

await ctx.deps.conn.execute(f"EXPLAIN {consequence.sql_query}")

besides asyncpg.exceptions.PostgresError as e:

elevate ModelRetry(f"Invalid SQL: {e}") from e

else:

return consequenceRight here, we are going to validate and course of the generated SQL queries

Key validation steps:

- Returns instantly if the result’s an InvalidRequeste, clear up the additional backslashes

- Make sure the question is a SELECT assertion

- Validates SQL syntax utilizing PostgreSQL EXPLAIN

- Increase ModelRetry for invalid queries

Database Schema

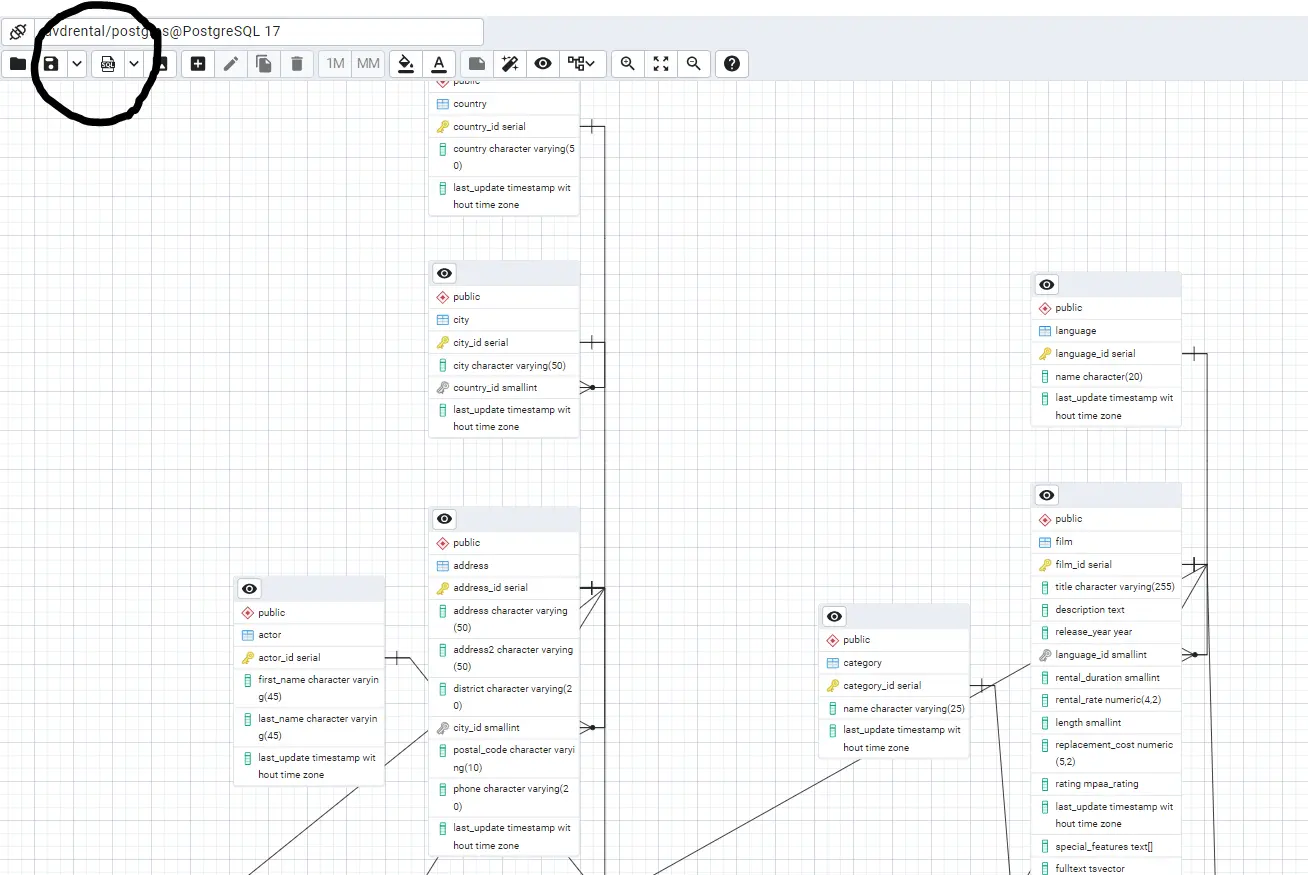

To get your database schema, Open the pgadmin4 you’ve got put in throughout Postgres setup, Go to the `dvdrental` database, right-click on it, and click on `ERD for Database`.

You’ll get the beneath ERD diagram, now generate SQL from the ERD (see the spherical black marking on the picture).

Copy the Schema to the Schema.py module:

# schema.py

DB_SCHEMA = """

BEGIN;

CREATE TABLE IF NOT EXISTS public.actor

(

actor_id serial NOT NULL,

first_name character various(45) COLLATE pg_catalog."default" NOT NULL,

last_name character various(45) COLLATE pg_catalog."default" NOT NULL,

last_update timestamp with out time zone NOT NULL DEFAULT now(),

CONSTRAINT actor_pkey PRIMARY KEY (actor_id)

);

.

.

.

.

.

.

"""The above code block is Closely truncated, to get full code please go to the Challenge Repo.

Now, that each one crucial modules have been accomplished, time to implement the principle methodology and check.

Implementing Foremost

We are going to do the Foremost perform definition and immediate dealing with.

import asyncio

import os

import sys

from typing import Union

from dotenv import load_dotenv

import asyncpg

from devtools import debug

from typing_extensions import TypeAlias

from pydantic_ai import Agent

from pydantic_ai.fashions.gemini import GeminiModel

from fashions import Deps, Success, InvalidRequest

load_dotenv()

gemini_api_key = os.getenv("GOOGLE_API_KEY")

Response: TypeAlias = Union[Success, InvalidRequest]

mannequin = GeminiModel(

"gemini-1.5-flash",

api_key=gemini_api_key,

)

agent = Agent(

mannequin,

result_type=Response, # kind: ignore

deps_type=Deps,

)

async def essential():

if len(sys.argv) == 1:

immediate = "Please create a SELECT question"

else:

immediate = sys.argv[1]

# connection to database

conn = await asyncpg.join(

consumer="postgres",

password="avizyt",

host="localhost",

port=5432,

database="dvdrental",

)

attempt:

deps = Deps(conn)

consequence = await agent.run(immediate, deps=deps)

consequence = debug(consequence.knowledge)

print("=========Your Question=========")

print(debug(consequence.sql_query))

print("=========Clarification=========")

print(debug(consequence.clarification))

lastly:

await conn.shut()

if __name__ == "__main__":

asyncio.run(essential())Right here, first, outline an asynchronous essential perform, and examine the command-line argument for shopper question. If no args are supplied, use the default immediate.

Then we set the Postgres connection parameters to attach with dvdrental database service.

Within the attempt block, create a Deps occasion with a database connection, run the AI brokers with the immediate, Processes the outcomes utilizing the debug perform (pip set up devtools). Then prints the formatted output together with the Generated SQL question and clarification of the question. after that, we lastly closed the database connection.

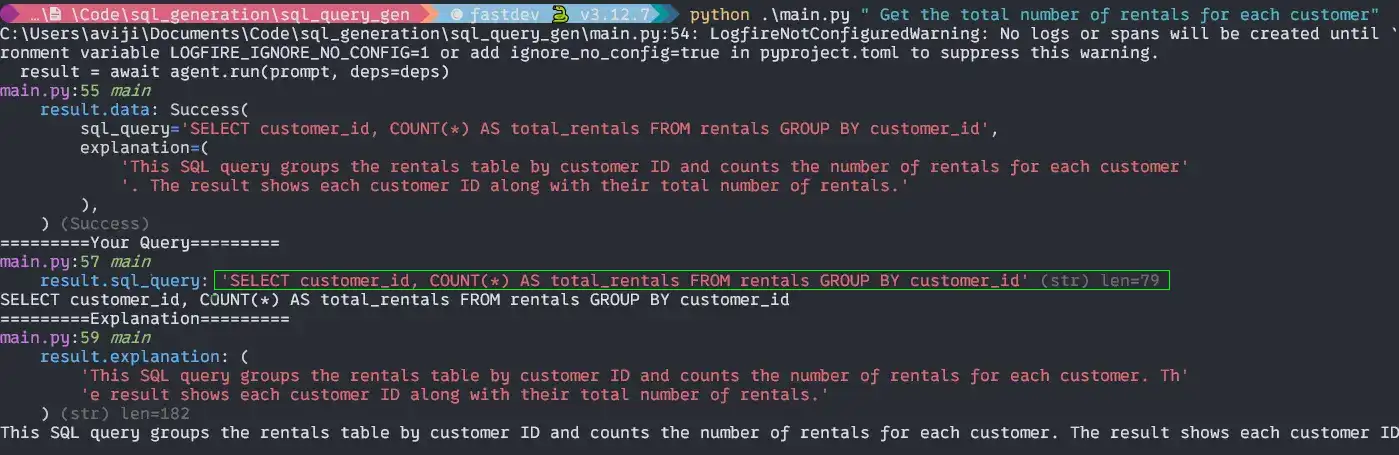

Now run the principle module like beneath:

# within the terminal

python essential.py " Get the overall variety of leases for every buyer"Output:

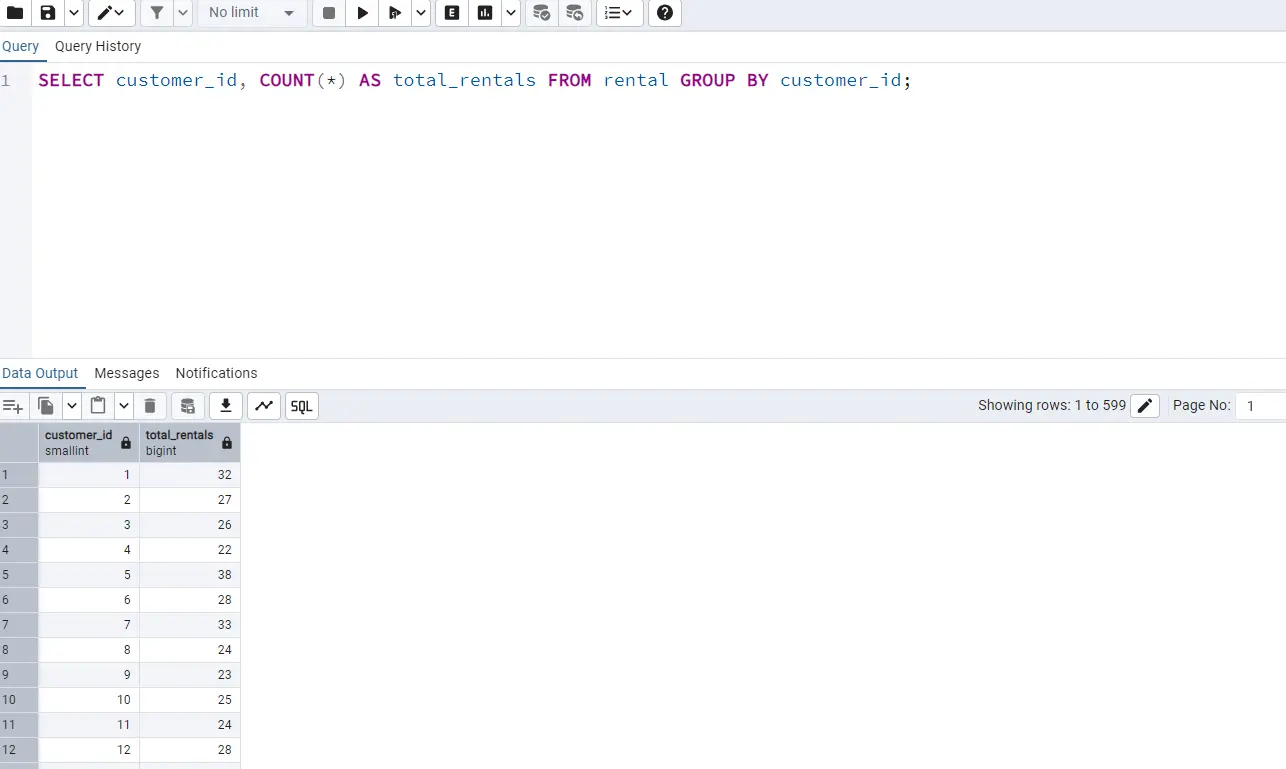

After testing the SQL question within the pgadmin4:

Wow! It’s working like we would like. Take a look at extra queries like this and benefit from the studying.

Conclusion

This undertaking represents a major step ahead in making database interactions extra intuitive and accessible. By combining the ability of AI with strong software program engineering rules, we’ve created a device that not solely generates SQL queries however does so in a approach that’s safe, instructional, and sensible for real-world use.

The success of this implementation demonstrates the potential for AI to boost fairly than exchange conventional database operations, offering a helpful device for each studying and productiveness.

Challenge Repo – All of the code used on this undertaking is out there right here.

Key Takeaways

- PydanticAI permits clever, context-aware code era.

- Gemini-1.5-Flash supplies superior pure language understanding for technical duties.

- AI brokers can rework how we work together with databases and generate code.

- Strong validation is essential in AI-generated code techniques.

Steadily Requested Questions

A. PydanticAI gives type-safe, validated code era with built-in error checking and contextual understanding.

A. Gemini mannequin supplies superior pure language processing, translating complicated human queries into exact SQL statements.

A. Completely! The structure will be tailored for code era, knowledge transformation, and clever automation throughout varied domains.

The media proven on this article will not be owned by Analytics Vidhya and is used on the Writer’s discretion.