Generative AI and foundation models let autonomous machines generalize beyond the operational design domains on which they've been trained. Using new AI techniques such as tokenization and large language and diffusion models, developers and researchers can now address longstanding hurdles to autonomy.

These larger models require massive amounts of diverse data for training, fine-tuning and validation. But collecting such data can be incredibly difficult and resource-intensive, especially for rare edge cases and potentially hazardous scenarios, like a pedestrian crossing in front of an autonomous vehicle (AV) at night or a human entering a welding robot work cell.

To help developers fill this gap, NVIDIA Omniverse Cloud Sensor RTX APIs enable physically accurate sensor simulation for generating datasets at scale. The application programming interfaces (APIs) are designed to support sensors commonly used for autonomy, including cameras, radar and lidar, and can integrate seamlessly into existing workflows to accelerate the development of autonomous vehicles and robots of every kind.

Omniverse Sensor RTX APIs are now available to select developers in early access. Organizations such as Accenture, Foretellix, MITRE and Mcity are integrating these APIs via domain-specific blueprints to provide end customers with the tools they need to deploy the next generation of industrial manufacturing robots and self-driving cars.

Powering Industrial AI With Omniverse Blueprints

In complex environments like factories and warehouses, robots must be orchestrated to work safely and efficiently alongside machinery and human workers. All these moving parts present a huge challenge when designing, testing or validating operations without causing disruptions.

Mega is an Omniverse Blueprint that offers enterprises a reference architecture of NVIDIA accelerated computing, AI, NVIDIA Isaac and NVIDIA Omniverse technologies. Enterprises can use it to develop digital twins and test AI-powered robot brains that drive robots, cameras, equipment and more to handle enormous complexity and scale.

By integrating Omniverse Sensor RTX, the blueprint lets robotics developers simultaneously render sensor data from any type of intelligent machine in a factory for high-fidelity, large-scale sensor simulation.

With the ability to test operations and workflows in simulation, manufacturers can save considerable time and investment, and improve efficiency in entirely new ways.

Global supply chain solutions company KION Group and Accenture are using the Mega blueprint to build Omniverse digital twins that serve as virtual training and testing environments for industrial AI's robot brains, tapping into data from smart cameras, forklifts, robotic equipment and digital humans.

The robot brains perceive the simulated environment through physically accurate sensor data rendered by the Omniverse Sensor RTX APIs. They use this data to plan and act, with every action precisely tracked in Mega alongside the state and position of all the assets in the digital twin. With these capabilities, developers can continuously build and test new layouts before they're implemented in the physical world.

Driving AV Development and Validation

Autonomous vehicles have been under development for over a decade, but difficulty acquiring the right training and validation data, together with slow iteration cycles, has hindered large-scale deployment.

To address this need for sensor data, companies are harnessing the NVIDIA Omniverse Blueprint for AV simulation, a reference workflow that enables physically accurate sensor simulation. The workflow uses Omniverse Sensor RTX APIs to render the camera, radar and lidar data necessary for AV development and validation.

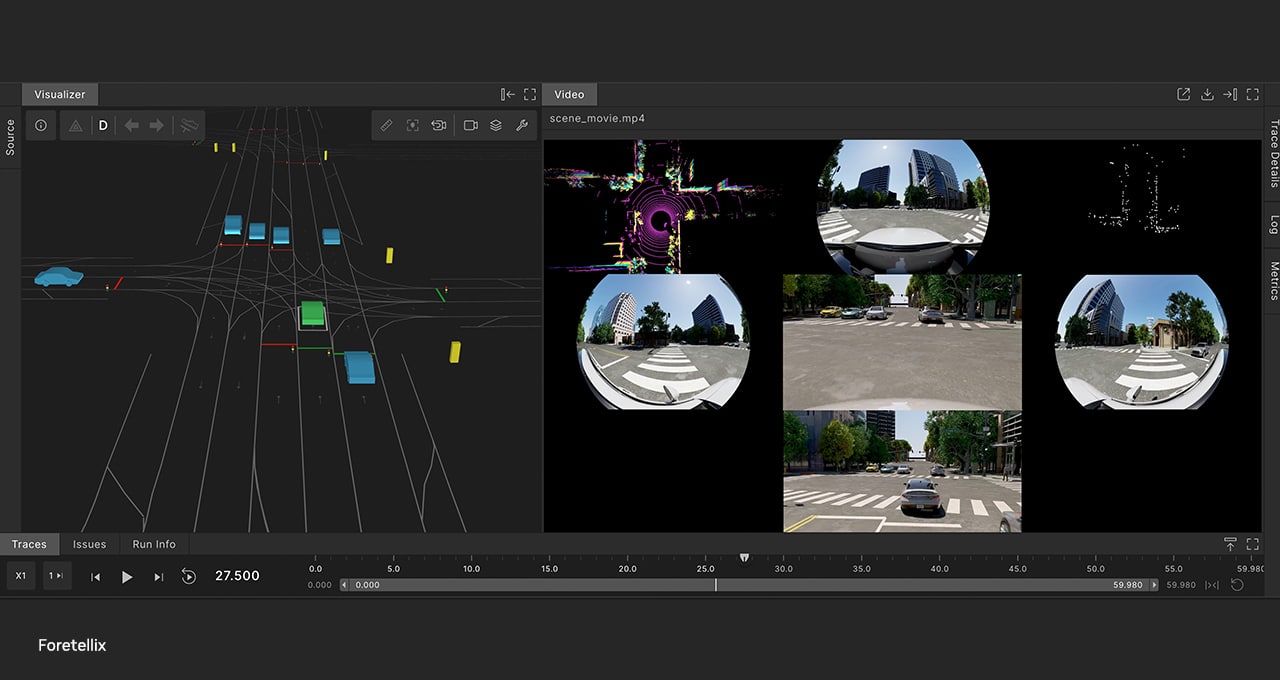

AV toolchain provider Foretellix has integrated the blueprint into its Foretify AV development toolchain to transform object-level simulation into physically accurate sensor simulation.

The Foretify toolchain can generate any number of testing scenarios simultaneously. By adding sensor simulation capabilities to these scenarios, Foretify now enables developers to evaluate the completeness of their AV development, as well as to train and test at the levels of fidelity and scale needed to achieve large-scale, safe deployment. In addition, Foretellix will use the newly announced NVIDIA Cosmos platform to generate an even greater number of scenarios for verification and validation.

Nuro, an autonomous driving technology provider with one of the largest level 4 deployments in the U.S., is using the Foretify toolchain to train, test and validate its self-driving vehicles before deployment.

In addition, research organization MITRE is collaborating with the University of Michigan's Mcity testing facility to build a virtual AV validation framework for regulatory use, including a digital twin of Mcity's 32-acre proving ground for autonomous vehicles. The project uses the AV simulation blueprint to render physically accurate sensor data at scale in the virtual environment, boosting training effectiveness.

The future of robotics and autonomy is coming into sharp focus, thanks to the power of high-fidelity sensor simulation. Learn more about these solutions at CES by visiting Accenture at Ballroom F at the Venetian and Foretellix booth 4016 in the West Hall of the Las Vegas Convention Center.

Learn more about the latest in automotive and generative AI technologies by joining NVIDIA at CES.

See notice regarding software product information.