DeepSeek-R1 is an open model with state-of-the-art reasoning capabilities. Instead of offering direct responses, reasoning models like DeepSeek-R1 perform multiple inference passes over a query, using chain-of-thought, consensus and search methods to generate the best answer.

Performing this sequence of inference passes, using reasoning to arrive at the best answer, is known as test-time scaling. DeepSeek-R1 is a perfect example of this scaling law, demonstrating why accelerated computing is critical for the demands of agentic AI inference.

As models are allowed to iteratively "think" through the problem, they create more output tokens and longer generation cycles, so model quality continues to scale. Significant test-time compute is critical to enable both real-time inference and higher-quality responses from reasoning models like DeepSeek-R1, requiring larger inference deployments.
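One common way to realize the consensus strategy mentioned above is self-consistency: run several independent reasoning passes and keep the final answer that appears most often. The Python sketch below shows only that voting step. `sample_fn` is a hypothetical stand-in for a single model call that returns a final answer; this is an illustration of the general technique, not a description of how DeepSeek-R1 itself aggregates its reasoning.

```python
from collections import Counter
from typing import Callable, List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer; on a tie, the one that appeared first wins."""
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(answers)
    best = max(counts.values())
    for answer in answers:  # scan in arrival order so ties break deterministically
        if counts[answer] == best:
            return answer
    raise AssertionError("unreachable")


def self_consistency(sample_fn: Callable[[str], str], prompt: str, n: int = 8) -> str:
    """Spend extra test-time compute: sample n answers, then vote.

    sample_fn is hypothetical: any function that runs one reasoning pass on
    the prompt and returns only its final answer string.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    answers = [sample_fn(prompt) for _ in range(n)]
    return majority_vote(answers)
```

Raising `n` is the test-time scaling knob here: each extra sample costs another full generation, which is why more output tokens translate directly into more inference compute.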

R1 delivers leading accuracy for tasks demanding logical inference, reasoning, math, coding and language understanding while also delivering high inference efficiency.

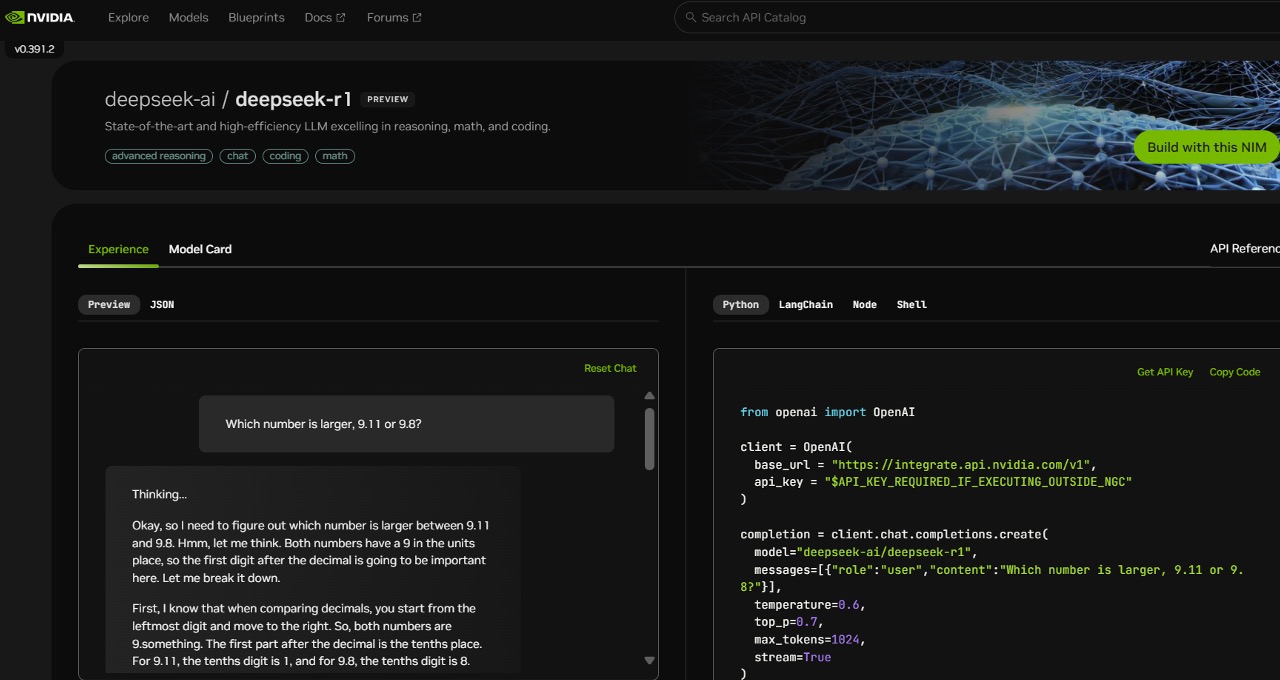

To help developers securely experiment with these capabilities and build their own specialized agents, the 671-billion-parameter DeepSeek-R1 model is now available as an NVIDIA NIM microservice preview on build.nvidia.com. The DeepSeek-R1 NIM microservice can deliver up to 3,872 tokens per second on a single NVIDIA HGX H200 system.

Developers can test and experiment with the application programming interface (API), which is expected to be available soon as a downloadable NIM microservice, part of the NVIDIA AI Enterprise software platform.

The DeepSeek-R1 NIM microservice simplifies deployments with support for industry-standard APIs. Enterprises can maximize security and data privacy by running the NIM microservice on their preferred accelerated computing infrastructure. Using NVIDIA AI Foundry with NVIDIA NeMo software, enterprises will also be able to create customized DeepSeek-R1 NIM microservices for specialized AI agents.

DeepSeek-R1: A Perfect Example of Test-Time Scaling

DeepSeek-R1 is a large mixture-of-experts (MoE) model. It incorporates an impressive 671 billion parameters, 10x more than many other popular open-source LLMs, and supports a large input context length of 128,000 tokens. The model also uses an extreme number of experts per layer: each layer of R1 has 256 experts, and each token is routed to eight separate experts in parallel for evaluation.
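The routing step described above can be sketched as generic top-k gating: a router scores all 256 experts for a token, the 8 highest-scoring experts are selected, and their outputs are combined with normalized weights. This is a minimal sketch assuming plain softmax gating over the selected scores and scalar "tokens" for readability; DeepSeek-R1's actual gating function, any shared experts, and its load-balancing scheme are not modeled here.

```python
import math
from typing import Callable, List, Tuple

NUM_EXPERTS = 256  # routed experts per MoE layer, per the text
TOP_K = 8          # experts each token is sent to, per the text
ACTIVE_FRACTION = TOP_K / NUM_EXPERTS  # share of routed experts active per token


def route_token(router_logits: List[float], k: int = TOP_K) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and softmax-normalize their weights."""
    if len(router_logits) < k:
        raise ValueError("need at least k expert scores")
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only; subtract the max for numerical stability.
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_output(token: float, experts: List[Callable[[float], float]],
               router_logits: List[float], k: int = TOP_K) -> float:
    """Weighted sum of the selected experts' outputs; the other experts are never run."""
    return sum(w * experts[i](token) for i, w in route_token(router_logits, k))
```

Only 8 of 256 routed experts (3.125%) run for any given token, which keeps per-token compute far below the full parameter count. The catch is that tokens in a batch fan out to different experts, and in a multi-GPU deployment those experts can live on different GPUs, so routing turns into heavy all-to-all communication between them.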

Delivering real-time answers for R1 requires many GPUs with high compute performance, connected with high-bandwidth, low-latency communication to route prompt tokens to all the experts for inference. Combined with the software optimizations available in the NVIDIA NIM microservice, a single server with eight H200 GPUs connected using NVLink and NVLink Switch can run the full, 671-billion-parameter DeepSeek-R1 model at up to 3,872 tokens per second. This throughput is made possible by using the NVIDIA Hopper architecture's FP8 Transformer Engine at every layer, along with the 900 GB/s of NVLink bandwidth for MoE expert communication.
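A quick back-of-envelope calculation shows why FP8 and an eight-GPU server fit together. The sketch below assumes 141 GB of HBM3e per H200 GPU and counts model weights only; KV cache, activations and runtime buffers are ignored, so real memory use is higher. The per-GPU figure is simply the quoted aggregate peak divided by eight, not the speed of an individual request.

```python
PARAMS = 671e9              # total parameters, per the text
BYTES_PER_PARAM_FP8 = 1     # FP8 stores each weight in one byte
GPUS = 8                    # H200 GPUs in one HGX server, per the text
HBM_PER_GPU_GB = 141        # assumption: H200 HBM3e capacity per GPU
SERVER_TOKENS_PER_S = 3872  # peak server throughput quoted in the text


def weight_footprint_gb(params: float, bytes_per_param: float) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


weights_gb = weight_footprint_gb(PARAMS, BYTES_PER_PARAM_FP8)  # 671.0
fits_on_one_gpu = weights_gb <= HBM_PER_GPU_GB                 # False
total_hbm_gb = GPUS * HBM_PER_GPU_GB                           # 1128
headroom_gb = total_hbm_gb - weights_gb                        # 457.0 left for KV cache etc.
per_gpu_tokens_per_s = SERVER_TOKENS_PER_S / GPUS              # 484.0 on average
```

Because the FP8 weights alone are several times larger than one GPU's memory, they must be sharded across all eight GPUs, and expert traffic between shards then rides on NVLink rather than slower interconnects.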

Getting every floating point operation per second (FLOPS) of performance out of a GPU is critical for real-time inference. The next-generation NVIDIA Blackwell architecture will give test-time scaling on reasoning models like DeepSeek-R1 a major boost with fifth-generation Tensor Cores that can deliver up to 20 petaflops of peak FP4 compute performance, and a 72-GPU NVLink domain specifically optimized for inference.
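To make "FP4" concrete, the sketch below quantizes values to the 4-bit E2M1 format (1 sign bit, 2 exponent bits, 1 mantissa bit), whose non-negative values are 0, 0.5, 1, 1.5, 2, 3, 4 and 6. It assumes a single per-tensor scale chosen so the largest magnitude maps to 6; production FP4 formats on Blackwell use finer-grained block scaling, which this sketch does not model.

```python
from typing import List, Tuple

# Non-negative magnitudes representable in FP4 E2M1.
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0


def quantize_fp4(values: List[float]) -> Tuple[List[float], float]:
    """Scale so max |value| maps to 6.0, then round each value to the nearest E2M1 point.

    Returns the E2M1 values (stored here as floats for readability) and the scale.
    Exact ties round toward the smaller magnitude, because min() keeps the first minimum.
    """
    absmax = max((abs(v) for v in values), default=0.0)
    scale = absmax / E2M1_MAX if absmax > 0 else 1.0
    codes = []
    for v in values:
        mag = min(E2M1_MAGNITUDES, key=lambda g: abs(abs(v) / scale - g))
        codes.append(mag if v >= 0 else -mag)
    return codes, scale


def dequantize_fp4(codes: List[float], scale: float) -> List[float]:
    """Map E2M1 values back to approximate original values."""
    return [c * scale for c in codes]
```

Each weight occupies half the bits of FP8, so the same memory and bandwidth move twice as many weights, at the cost of a much coarser value grid; that trade-off is why the scaling scheme matters so much in practice.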

Get Started Now With the DeepSeek-R1 NIM Microservice

Developers can try the DeepSeek-R1 NIM microservice, now available on build.nvidia.com.
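Because the microservice supports industry-standard APIs, a request can be built with nothing but the Python standard library. This is a hedged sketch that assumes an OpenAI-style chat completions interface. The endpoint URL, the model ID `deepseek-ai/deepseek-r1`, the sampling temperature and the response shape are all assumptions to verify against the model page on build.nvidia.com before use. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumptions: confirm both values on the DeepSeek-R1 page at build.nvidia.com.
API_URL = "https://integrate.api.nvidia.com/v1/chat/completions"
MODEL_ID = "deepseek-ai/deepseek-r1"


def build_chat_request(prompt: str, api_key: str, max_tokens: int = 4096) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions POST request."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # reasoning models emit long outputs, so leave headroom
        "temperature": 0.6,        # assumption: a moderate sampling temperature
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 300.0) -> dict:
    """Send the request and parse the JSON response (needs network access and a valid key)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response (assumed shape)."""
    return response["choices"][0]["message"]["content"]
```

A typical call would be `extract_reply(send_chat_request(build_chat_request("How many primes are below 30?", key)))`, where `key` is an API key generated on build.nvidia.com. The generous `max_tokens` and long timeout reflect the test-time scaling behavior described earlier: the model may produce many reasoning tokens before its final answer.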

With NVIDIA NIM, enterprises can deploy DeepSeek-R1 with ease and ensure they get the high efficiency needed for agentic AI systems.

See notice regarding software product information.