In today's digital world, businesses and individuals aim to give website visitors instant and accurate answers. As demand for seamless communication grows, AI-driven chatbots have become an essential tool for interacting with users and delivering useful information in a split second. Chatbots can search, understand, and use website data efficiently, which keeps customers satisfied and improves the customer experience. In this article, we will explain how to build a chatbot that fetches information from a website, processes it efficiently, and holds meaningful conversations with the help of Qwen-2.5, LangChain, and FAISS. We will cover the main components and the integration process.

Learning Objectives

- Understand the importance of AI-powered chatbots for businesses.

- Learn how to extract and process website data for chatbot use.

- Gain insights into using FAISS for efficient text retrieval.

- Explore the role of Hugging Face embeddings in chatbot intelligence.

- Discover how to integrate Qwen-2.5-32b for generating responses.

- Build an interactive chatbot interface using Streamlit.

This article was published as a part of the Data Science Blogathon.

Why Use a Website Chatbot?

Many businesses struggle to handle large volumes of customer queries efficiently. Traditional customer support teams often face delays, which leads to frustrated users and higher operational costs. Moreover, hiring and training support agents can be expensive, making it difficult for companies to scale effectively.

A chatbot helps by giving instant, automated responses to user questions without human involvement. Businesses can cut support costs considerably, improve customer interaction, and give users immediate answers to their questions. AI-based chatbots can process large volumes of data, find the right information within seconds, and respond appropriately based on context, which makes them very useful for businesses today.

Website chatbots are mainly used on e-learning platforms, e-commerce websites, customer support platforms, and news websites.


Key Components of the Chatbot

- Unstructured URL Loader: Extracts content from the website.

- Text Splitter: Breaks large documents into manageable chunks.

- FAISS (Facebook AI Similarity Search): Stores and retrieves document embeddings efficiently.

- Qwen-2.5-32b: A powerful language model that understands queries and generates responses.

- Streamlit: A framework for building an interactive chatbot interface.

How Does This Chatbot Work?

Here's a flowchart explaining how our chatbot works.

Building a Custom Chatbot Using Qwen-2.5-32b and LangChain

Now let's see how to build a custom website chatbot using Qwen-2.5-32b, LangChain, and FAISS.

Step 1: Setting Up the Foundation

Let's begin by setting up the prerequisites.

1. Environment Setup

```bash
# Create an environment
python -m venv env

# Activate it on Windows
.\env\Scripts\activate

# Activate it on macOS/Linux
source env/bin/activate
```

2. Install the Requirements

```bash
pip install -r https://raw.githubusercontent.com/Gouravlohar/Chatbot/refs/heads/main/requirements.txt
```

3. API Key Setup

Paste your Groq API key into a .env file.

```
API_KEY="PASTE_YOUR_API_KEY_HERE"
```

Now let's get into the actual coding part.

Step 2: Handling the Windows Event Loop (for Compatibility)

```python
import sys
import asyncio

# On Windows, switch asyncio to the selector-based event loop
if sys.platform.startswith("win"):
    asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy())
```

This ensures compatibility with Windows by setting the asyncio event loop policy explicitly. Since Python 3.8, Windows defaults to the ProactorEventLoop, which some asynchronous libraries do not fully support, so the selector-based loop is used instead.

Step 3: Importing Required Libraries

```python
import streamlit as st
import os
from dotenv import load_dotenv
```

- Streamlit is used to create the chatbot UI.

- os is used to set environment variables.

- dotenv helps load API keys from a .env file.

```python
os.environ["STREAMLIT_SERVER_FILEWATCHER_TYPE"] = "none"
```

This line is intended to turn off Streamlit's file watcher, improving performance by avoiding unnecessary file system monitoring.

Step 4: Importing LangChain Modules

```python
from langchain_huggingface import HuggingFaceEmbeddings
from langchain_community.vectorstores import FAISS
from langchain_community.document_loaders import UnstructuredURLLoader
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_groq import ChatGroq
from langchain.chains import create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain_core.prompts import ChatPromptTemplate
```

- HuggingFaceEmbeddings: Converts text into vector embeddings.

- FAISS: Stores and retrieves relevant document chunks based on queries.

- UnstructuredURLLoader: Loads text content from web URLs.

- RecursiveCharacterTextSplitter: Splits large text into smaller chunks for processing.

- ChatGroq: Uses the Groq API for AI-powered responses.

- create_retrieval_chain: Constructs a pipeline that retrieves relevant documents before passing them to the LLM.

- create_stuff_documents_chain: Combines retrieved documents into a format suitable for LLM processing.

- ChatPromptTemplate: Defines the structure of the messages sent to the LLM.

Step 5: Loading Environment Variables

```python
load_dotenv()
groq_api_key = os.getenv("API_KEY")

# Stop the app early if the key is missing
if not groq_api_key:
    st.error("Groq API Key not found in .env file")
    st.stop()
```

- Loads the Groq API key from the .env file.

- If the key is missing, the app shows an error and stops execution.
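For readers curious about what load_dotenv() does behind the scenes, the sketch below is a simplified, standard-library-only approximation: each KEY=value line of the .env file becomes an environment variable that os.getenv can read. The function names parse_dotenv and load_env_text are hypothetical, and the real python-dotenv package handles more syntax (export prefixes, escapes, variable expansion, multiline values). Like load_dotenv's default behavior, the sketch leaves variables that are already set untouched unless override=True is passed.

```python
import os


def parse_dotenv(text: str) -> dict:
    """Parse simple KEY=value lines (a simplified stand-in for python-dotenv)."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        value = value.strip()
        # Remove one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def load_env_text(text: str, override: bool = False) -> None:
    """Copy parsed values into os.environ, keeping existing ones unless override=True."""
    for key, value in parse_dotenv(text).items():
        if override or key not in os.environ:
            os.environ[key] = value


# Hypothetical .env contents; the value is a placeholder, not a real key.
sample = '# Groq credentials\nAPI_KEY="YOUR_GROQ_API_KEY"\n'
os.environ.pop("API_KEY", None)  # start from a clean state for the demo
load_env_text(sample)
print(os.getenv("API_KEY"))  # YOUR_GROQ_API_KEY
```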

1. Function to Load Website Data

```python
def load_website_data(urls):
    # Fetch each URL and return its text as LangChain Document objects
    loader = UnstructuredURLLoader(urls=urls)
    return loader.load()
```

- Uses UnstructuredURLLoader to fetch content from a list of URLs.
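UnstructuredURLLoader relies on the unstructured package to fetch and parse each page. As a rough illustration of the idea only (not that library's actual algorithm), the standard-library sketch below turns HTML into plain text by keeping visible text and skipping script and style content. It parses an inline HTML string instead of fetching a URL so it runs offline; the class VisibleTextExtractor, the function html_to_text, and the sample page are all hypothetical.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect visible text from HTML, skipping <script> and <style> blocks."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a script/style element
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        # Keep non-empty text that is not inside script/style.
        if text and self._skip_depth == 0:
            self.parts.append(text)


def html_to_text(html: str) -> str:
    """Return the page's visible text, one fragment per line."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)


# Hypothetical page content standing in for a fetched website.
page = """
<html><head><title>Courses</title><style>body {color: red;}</style></head>
<body><h1>Learn Data Science</h1><script>track();</script>
<p>Free and paid programs.</p></body></html>
"""
print(html_to_text(page))  # Courses / Learn Data Science / Free and paid programs. (one per line)
```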

2. Function to Chunk Documents

```python
def chunk_documents(docs):
    # Chunks of up to 500 characters, with 50 characters shared between neighbors
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50)
    return text_splitter.split_documents(docs)
```

- Splits large text into chunks of up to 500 characters with a 50-character overlap for better context retention.
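To show what chunk_size=500 and chunk_overlap=50 mean in practice, the sketch below uses a plain fixed-size sliding window over characters. This is a deliberate simplification: RecursiveCharacterTextSplitter first tries to split on separators such as paragraph breaks, newlines, and spaces, so its chunks usually end at natural boundaries and are often shorter than the limit. The function name sliding_window_chunks is hypothetical.

```python
def sliding_window_chunks(text: str, chunk_size: int = 500, chunk_overlap: int = 50) -> list:
    """Split text into windows of at most chunk_size characters.

    Consecutive windows share chunk_overlap characters, so text near a
    boundary appears at the end of one chunk and the start of the next.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window moves forward
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


text = "".join(str(i % 10) for i in range(1200))  # 1,200 characters of dummy text
chunks = sliding_window_chunks(text)
print([len(c) for c in chunks])  # [500, 500, 300]
```

With a 450-character step, the windows start at positions 0, 450, and 900, which is why the last chunk holds only the remaining 300 characters.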

3. Function to Build the FAISS Vector Store

```python
def build_vectorstore(text_chunks):
    # Embed every chunk with a small sentence-transformers model
    embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")
    return FAISS.from_documents(text_chunks, embeddings)
```

- Converts text chunks into vector embeddings using all-MiniLM-L6-v2.

- Stores the embeddings in a FAISS vector database for efficient retrieval.
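Conceptually, the vector store keeps one embedding per chunk and answers a query by returning the chunks whose embeddings lie closest to the query's embedding. The sketch below does this with exact, brute-force Euclidean (L2) distance over tiny hand-made 3-dimensional vectors. It illustrates the idea behind an exact (flat) FAISS index but is not FAISS itself, which is optimized native code and also offers approximate indexes; real all-MiniLM-L6-v2 embeddings have 384 dimensions. The class TinyVectorStore and the sample vectors are hypothetical, and the default k=4 mirrors LangChain's default number of results.

```python
import math


class TinyVectorStore:
    """Exact nearest-neighbour search over stored (text, vector) pairs."""

    def __init__(self) -> None:
        self.texts = []
        self.vectors = []

    def add(self, text: str, vector: list) -> None:
        self.texts.append(text)
        self.vectors.append(vector)

    def similarity_search(self, query_vector: list, k: int = 4) -> list:
        """Return the k stored texts whose vectors are closest in L2 distance."""
        scored = [
            (math.dist(query_vector, vec), text)
            for text, vec in zip(self.texts, self.vectors)
        ]
        scored.sort(key=lambda pair: pair[0])  # smaller distance = more similar
        return [text for _, text in scored[:k]]


store = TinyVectorStore()
# Hypothetical toy "embeddings"; a real model would compute these from the text.
store.add("Courses on machine learning", [0.9, 0.1, 0.0])
store.add("Community events and meetups", [0.1, 0.9, 0.1])
store.add("Blog posts about deep learning", [0.8, 0.2, 0.1])

query = [1.0, 0.0, 0.0]  # pretend embedding of "What can I study?"
print(store.similarity_search(query, k=2))
# ['Courses on machine learning', 'Blog posts about deep learning']
```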

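4. Function to Load Qwen-2.5-32b

```python
def load_llm(api_key):
    # Qwen-2.5-32b served through Groq's API
    return ChatGroq(groq_api_key=api_key, model_name="qwen-2.5-32b", streaming=True)
```

- Loads the Qwen-2.5-32b model served through Groq for generating responses.

- Enables streaming for a real-time response experience. Note that the app later calls qa_chain.invoke, which returns the complete answer at once, so tokens are not displayed as they arrive.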

5. Streamlit UI Setup

```python
st.title("Custom Website Chatbot (Analytics Vidhya)")
```

6. Conversation History Setup

```python
# Create the chat history only on the first run of the session
if "dialog" not in st.session_state:
    st.session_state.dialog = []
```

- Stores chat history in st.session_state so messages persist when Streamlit reruns the script after each interaction.
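Streamlit reruns the whole script from top to bottom every time the user interacts with the app, so ordinary variables are rebuilt on each run while st.session_state survives. The plain-Python model below imitates that behavior with a dictionary that outlives each simulated run. The function run_script is a hypothetical stand-in for one execution of the app script, not part of Streamlit's API.

```python
from typing import Optional


def run_script(session_state: dict, user_input: Optional[str]) -> list:
    """Simulate one top-to-bottom run of the chat app script."""
    # Mirrors the setup step: create the history list only on the first run.
    if "dialog" not in session_state:
        session_state["dialog"] = []

    # Ordinary local variables like this one are rebuilt from scratch on every run.
    new_messages = []
    if user_input:
        new_messages.append({"role": "user", "message": user_input})

    # Only what is stored in session_state survives into the next run.
    session_state["dialog"].extend(new_messages)
    return session_state["dialog"]


state: dict = {}  # plays the role of st.session_state across reruns
run_script(state, "Hi")
run_script(state, None)  # a rerun with no new chat input
history = run_script(state, "What courses are offered?")
print([m["message"] for m in history])  # ['Hi', 'What courses are offered?']
```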

Step 6: Fetching and Processing Website Data

```python
urls = ["https://www.analyticsvidhya.com/"]
docs = load_website_data(urls)
text_chunks = chunk_documents(docs)
```

- Loads content from the Analytics Vidhya website.

- Splits the content into small chunks.

Step 7: Building the FAISS Vector Store

```python
vectorstore = build_vectorstore(text_chunks)
retriever = vectorstore.as_retriever()
```

This stores the processed text chunks in FAISS, then converts the FAISS vector store into a retriever that fetches the chunks most relevant to each user query.

Step 8: Loading the Groq LLM

```python
llm = load_llm(groq_api_key)
```

Step 9: Setting Up the Retrieval Chain

```python
system_prompt = (
    "Use the given context to answer the question. "
    "If you don't know the answer, say you don't know. "
    "Answer in detailed sentences and keep the answer accurate. "
    "Context: {context}"
)

prompt = ChatPromptTemplate.from_messages([
    ("system", system_prompt),
    ("human", "{input}"),
])

# Stuff the retrieved documents into {context} and send the prompt to the LLM
combine_docs_chain = create_stuff_documents_chain(llm, prompt)

# Retrieve relevant chunks for {input}, then run the combine-documents chain
qa_chain = create_retrieval_chain(
    retriever=retriever,
    combine_docs_chain=combine_docs_chain
)
```

- Defines a system prompt to ensure accurate, context-based answers.

- Uses ChatPromptTemplate to format the chatbot's interactions.

- Combines the retrieved documents (combine_docs_chain) to provide context to the LLM.

This step creates qa_chain by linking the retriever (FAISS) with the LLM, ensuring that responses are based on the retrieved website content.
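The sketch below spells out, in plain Python, what the retrieval chain does on each call: retrieve documents for the input, "stuff" their text into the {context} slot of the system prompt, send the messages to the model, and return a dictionary. The input, context, and answer keys match the output of create_retrieval_chain, which is why the app reads the reply from the "answer" key; in LangChain, context holds Document objects rather than the plain strings used here. The retriever and model are hypothetical fakes so the example runs offline, and joining chunks with a blank line is an assumption meant to mirror the stuff chain's default separator.

```python
from typing import Callable

# Same shape as the article's system prompt, shortened for the example.
SYSTEM_PROMPT = (
    "Use the given context to answer the question. "
    "If you don't know the answer, say you don't know. "
    "Context: {context}"
)


def run_retrieval_chain(
    user_input: str,
    retrieve: Callable[[str], list],
    llm: Callable[[list], str],
) -> dict:
    """Retrieve documents, stuff them into the prompt, then call the model."""
    docs = retrieve(user_input)
    # "Stuff" step: concatenate every retrieved chunk into one context string.
    context = "\n\n".join(docs)
    messages = [
        ("system", SYSTEM_PROMPT.format(context=context)),
        ("human", user_input),
    ]
    return {"input": user_input, "context": docs, "answer": llm(messages)}


def fake_retriever(query: str) -> list:
    """Hypothetical retriever returning placeholder chunks instead of querying FAISS."""
    return ["Chunk 1: community forum details.", "Chunk 2: upcoming events page."]


sent_messages: list = []


def fake_llm(messages: list) -> str:
    """Hypothetical model that records the prompt instead of calling Groq."""
    sent_messages.extend(messages)
    return "Placeholder answer."


response = run_retrieval_chain("How can I engage with the community?", fake_retriever, fake_llm)
print(response["answer"])   # Placeholder answer.
print(sent_messages[0][1])  # system prompt with both chunks inserted as context
```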

Step 10: Displaying Chat History

```python
# Redraw the stored conversation on every rerun
for msg in st.session_state.dialog:
    if msg["role"] == "user":
        st.chat_message("user").write(msg["message"])
    else:
        st.chat_message("assistant").write(msg["message"])
```

This displays the previous conversation messages.

Step 11: Accepting User Input

```python
user_input = st.chat_input("Type your message here") if hasattr(st, "chat_input") else st.text_input("Your message:")
```

- Uses st.chat_input (if available) for a better chat UI.

- Falls back to st.text_input for compatibility with older Streamlit versions.

Step 12: Processing User Queries

```python
if user_input:
    # Save and show the user's message
    st.session_state.dialog.append({"role": "user", "message": user_input})
    if hasattr(st, "chat_message"):
        st.chat_message("user").write(user_input)
    else:
        st.markdown(f"**User:** {user_input}")

    # Retrieve context and generate an answer
    with st.spinner("Processing..."):
        response = qa_chain.invoke({"input": user_input})
        assistant_response = response.get("answer", "I'm not sure, please try again.")

    # Save and show the assistant's reply
    st.session_state.dialog.append({"role": "assistant", "message": assistant_response})
    if hasattr(st, "chat_message"):
        st.chat_message("assistant").write(assistant_response)
    else:
        st.markdown(f"**Assistant:** {assistant_response}")
```

This code handles user input, retrieval, and response generation. When a user enters a message, it is saved in st.session_state.dialog and displayed in the chat interface. A loading spinner appears while the chatbot processes the query with qa_chain.invoke({"input": user_input}), which retrieves relevant information and generates a response. The assistant's reply is read from the "answer" key of the response dictionary, with a fallback message if no answer is found. Finally, the assistant's response is saved and displayed, keeping the chat experience smooth and interactive.

Get the full code on GitHub here.

Final Output

Testing the Chatbot

Now let's try a few prompts on the chatbot we just built.

Prompt: “Can you list some ways to engage with the Analytics Vidhya community?”

Response:

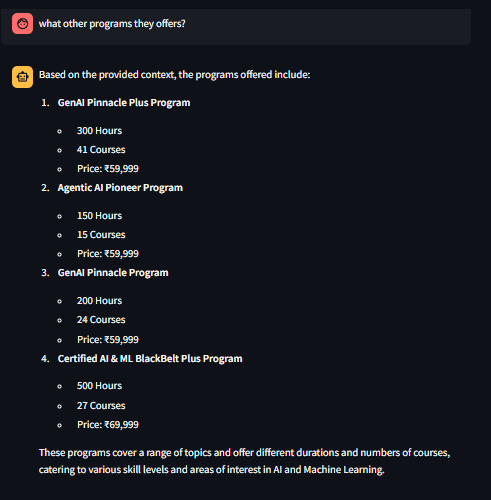

Prompt: “What other programs do they offer?”

Response:

Conclusion

AI chatbots have transformed the way people communicate on the internet. With advanced models like Qwen-2.5-32b, businesses and individuals can make sure their chatbots respond accurately and appropriately. As technology continues to advance, AI chatbots on websites will become the norm, making information easier for people to access.

In the future, capabilities such as longer conversations, voice queries, and access to larger knowledge bases could make chatbots even more useful.

Key Takeaways

- The chatbot fetches content from the Analytics Vidhya website, processes it, and stores it in a FAISS vector database for fast retrieval.

- It splits website content into chunks of up to 500 characters with a 50-character overlap, which helps preserve context when retrieving relevant information.

- The chatbot uses Qwen-2.5-32b to generate responses, drawing on the retrieved document chunks to give accurate, context-aware answers.

- Users interact with the chatbot through a chat interface in Streamlit, and conversation history is stored for seamless interactions.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.

Frequently Asked Questions

A. It uses UnstructuredURLLoader from LangChain to extract content from the specified URLs.

A. FAISS (Facebook AI Similarity Search) stores text chunks as embeddings and efficiently retrieves the ones most relevant to each user query.

A. The chatbot uses Qwen-2.5-32B, a powerful LLM served through Groq, to generate answers based on the retrieved website content.

A. Yes! Simply add more websites to the urls list, and the chatbot will fetch, process, and retrieve information from them.

A. It follows a Retrieval-Augmented Generation (RAG) approach, meaning it first retrieves relevant website data and then generates an answer with the LLM.
