The LangGraph Reflection Framework is a kind of agentic framework which presents a robust approach to enhance language mannequin outputs via an iterative critique course of utilizing Generative AI. This text breaks down how one can implement a mirrored image agent that validates Python code utilizing Pyright and improves its high quality utilizing GPT-4o mini. AI brokers play an important function on this framework, automating decision-making processes by combining reasoning, reflection, and suggestions mechanisms to reinforce mannequin efficiency.

Studying Aims

- Perceive how the LangGraph Reflection Framework works.

- Discover ways to implement the framework to enhance the standard of Python code.

- Expertise how nicely the framework works via a hands-on trial.

This text was revealed as part of the Knowledge Science Blogathon.

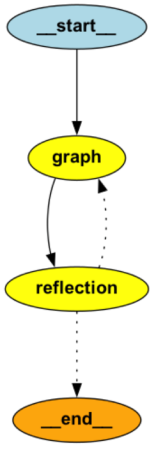

LangGraph Reflection Framework Structure

The LangGraph Reflection Framework follows a easy but efficient agentic structure:

- Fundamental Agent: Generates preliminary code based mostly on the consumer’s request.

- Critique Agent: Validates the generated code utilizing Pyright.

- Reflection Course of: If errors are detected, the principle agent known as once more to refine the code till no points stay.

Additionally Learn: Agentic Frameworks for Generative AI Purposes

Learn how to Implement the LangGraph Reflection Framework

Here’s a Step-by-Step Information for an Illustrative Implementation and Utilization:

Step 1: Atmosphere Setup

First, set up the required dependencies:

pip set up langgraph-reflection langchain pyrightStep 2: Code Evaluation with Pyright

We’ll use Pyright to investigate generated code and supply error particulars.

Pyright Evaluation Operate

from typing import TypedDict, Annotated, Literal

import json

import os

import subprocess

import tempfile

from langchain.chat_models import init_chat_model

from langgraph.graph import StateGraph, MessagesState, START, END

from langgraph_reflection import create_reflection_graph

os.environ["OPENAI_API_KEY"] = "your_openai_api_key"

def analyze_with_pyright(code_string: str) -> dict:

"""Analyze Python code utilizing Pyright for static kind checking and errors.

Args:

code_string: The Python code to investigate as a string

Returns:

dict: The Pyright evaluation outcomes

"""

with tempfile.NamedTemporaryFile(suffix=".py", mode="w", delete=False) as temp:

temp.write(code_string)

temp_path = temp.title

strive:

consequence = subprocess.run(

[

"pyright",

"--outputjson",

"--level",

"error", # Only report errors, not warnings

temp_path,

],

capture_output=True,

textual content=True,

)

strive:

return json.masses(consequence.stdout)

besides json.JSONDecodeError:

return {

"error": "Didn't parse Pyright output",

"raw_output": consequence.stdout,

}

lastly:

os.unlink(temp_path)Step 3: Fundamental Assistant Mannequin for Code Technology

GPT-4o Mini Mannequin Setup

def call_model(state: dict) -> dict:

"""Course of the consumer question with the GPT-4o mini mannequin.

Args:

state: The present dialog state

Returns:

dict: Up to date state with the mannequin response

"""

mannequin = init_chat_model(mannequin="gpt-4o-mini", openai_api_key = 'your_openai_api_key')

return {"messages": mannequin.invoke(state["messages"])}Observe: Use os.environ[“OPENAI_API_KEY”] = “YOUR_API_KEY” securely, and by no means hardcode the important thing in your code.

Step 4: Code Extraction and Validation

Code Extraction Sorts

# Outline kind lessons for code extraction

class ExtractPythonCode(TypedDict):

"""Sort class for extracting Python code. The python_code discipline is the code to be extracted."""

python_code: str

class NoCode(TypedDict):

"""Sort class for indicating no code was discovered."""

no_code: boolSystem Immediate for GPT-4o Mini

# System immediate for the mannequin

SYSTEM_PROMPT = """The under dialog is you conversing with a consumer to write down some python code. Your last response is the final message within the listing.

Generally you'll reply with code, othertimes with a query.

If there's code - extract it right into a single python script utilizing ExtractPythonCode.

If there is no such thing as a code to extract - name NoCode."""Pyright Code Validation Operate

def try_running(state: dict) -> dict | None:

"""Try to run and analyze the extracted Python code.

Args:

state: The present dialog state

Returns:

dict | None: Up to date state with evaluation outcomes if code was discovered

"""

mannequin = init_chat_model(mannequin="gpt-4o-mini")

extraction = mannequin.bind_tools([ExtractPythonCode, NoCode])

er = extraction.invoke(

[{"role": "system", "content": SYSTEM_PROMPT}] + state["messages"]

)

if len(er.tool_calls) == 0:

return None

tc = er.tool_calls[0]

if tc["name"] != "ExtractPythonCode":

return None

consequence = analyze_with_pyright(tc["args"]["python_code"])

print(consequence)

clarification = consequence["generalDiagnostics"]

if consequence["summary"]["errorCount"]:

return {

"messages": [

{

"role": "user",

"content": f"I ran pyright and found this: {explanation}nn"

"Try to fix it. Make sure to regenerate the entire code snippet. "

"If you are not sure what is wrong, or think there is a mistake, "

"you can ask me a question rather than generating code",

}

]

}Step 5: Creating the Reflection Graph

Constructing the Fundamental and Choose Graphs

def create_graphs():

"""Create and configure the assistant and decide graphs."""

# Outline the principle assistant graph

assistant_graph = (

StateGraph(MessagesState)

.add_node(call_model)

.add_edge(START, "call_model")

.add_edge("call_model", END)

.compile()

)

# Outline the decide graph for code evaluation

judge_graph = (

StateGraph(MessagesState)

.add_node(try_running)

.add_edge(START, "try_running")

.add_edge("try_running", END)

.compile()

)

# Create the whole reflection graph

return create_reflection_graph(assistant_graph, judge_graph).compile()

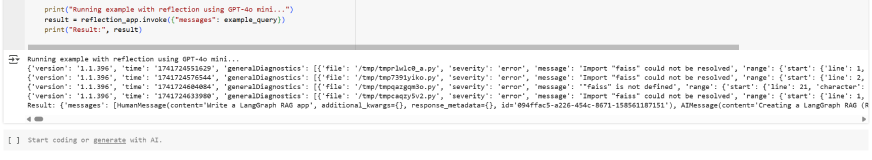

reflection_app = create_graphs()Step 6: Working the Software

Instance Execution

if __name__ == "__main__":

"""Run an instance question via the reflection system."""

example_query = [

{

"role": "user",

"content": "Write a LangGraph RAG app",

}

]

print("Working instance with reflection utilizing GPT-4o mini...")

consequence = reflection_app.invoke({"messages": example_query})

print("Outcome:", consequence)Output Evaluation

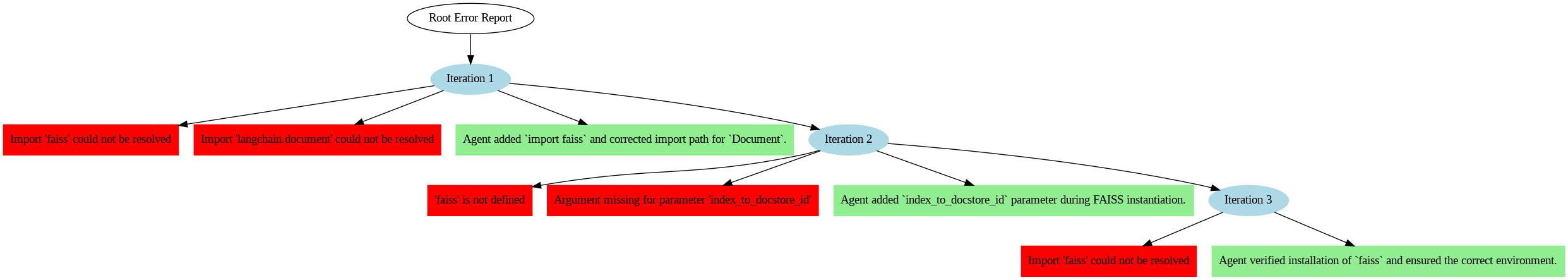

What Occurred within the Instance?

Our LangGraph Reflection system was designed to do the next:

- Take an preliminary code snippet.

- Run Pyright (a static kind checker for Python) to detect errors.

- Use the GPT-4o mini mannequin to investigate the errors, perceive them, and generate improved code strategies

Iteration 1 – Recognized Errors

1. Import “faiss” couldn’t be resolved.

- Clarification: This error happens when the faiss library isn’t put in or the Python setting doesn’t acknowledge the import.

- Resolution: The agent advisable working:

pip set up faiss-cpu2. Can’t entry attribute “embed” for sophistication “OpenAIEmbeddings”.

- Clarification: The code referenced .embed, however in newer variations of langchain, embedding strategies are .embed_documents() or .embed_query().

- Resolution: The agent appropriately changed .embed with .embed_query.

3. Arguments lacking for parameters “docstore”, “index_to_docstore_id”.

- Clarification: The FAISS vector retailer now requires a docstore object and an index_to_docstore_id mapping.

- Resolution: The agent added each parameters by creating an InMemoryDocstore and a dictionary mapping.

Iteration 2 – Development

Within the second iteration, the system improved the code however nonetheless recognized:

1. Import “langchain.doc” couldn’t be resolved.

- Clarification: The code tried to import Doc from the incorrect module.

- Resolution: The agent up to date the import to from langchain.docstore import Doc.

2. “InMemoryDocstore” shouldn’t be outlined.

- Clarification: The lacking import for InMemoryDocstore was recognized.

- Resolution: The agent appropriately added:

from langchain.docstore import InMemoryDocstoreIteration 3 – Last Resolution

Within the last iteration, the reflection agent efficiently addressed all points by:

- Importing faiss appropriately.

- Switching .embed to .embed_query for embedding features.

- Including a sound InMemoryDocstore for doc administration.

- Creating a correct index_to_docstore_id mapping.

- Accurately accessing doc content material utilizing .page_content as an alternative of treating paperwork as easy strings.

The improved code then efficiently ran with out errors.

Why This Issues

- Automated Error Detection: The LangGraph Reflection framework simplifies the debugging course of by analyzing code errors utilizing Pyright and producing actionable insights.

- Iterative Enchancment: The framework constantly refines the code till errors are resolved, mimicking how a developer may manually debug and enhance their code.

- Adaptive Studying: The system adapts to altering code buildings, equivalent to up to date library syntax or model variations.

Conclusion

The LangGraph Reflection Framework demonstrates the facility of mixing AI critique brokers with strong static evaluation instruments. This clever suggestions loop permits sooner code correction, improved coding practices, and higher general growth effectivity. Whether or not for learners or skilled builders, LangGraph Reflection presents a robust software for bettering code high quality.

Key Takeaways

- By combining LangChain, Pyright, and GPT-4o mini throughout the LangGraph Reflection Framework, this answer offers an efficient solution to robotically validate code.

- The framework helps LLMs generate improved options iteratively and likewise ensures higher-quality outputs via reflection and critique cycles.

- This method enhances the robustness of AI-generated code and improves efficiency in real-world situations.

The media proven on this article shouldn’t be owned by Analytics Vidhya and is used on the Creator’s discretion.

Regularly Requested Questions

A. LangGraph Reflection is a robust framework that mixes a main AI agent (for code era or activity execution) with a critique agent (to establish points and recommend enhancements). This iterative loop improves the ultimate output by leveraging suggestions and reflection.

A. The reflection mechanism follows this workflow:

– Fundamental Agent: Generates the preliminary output.

– Critique Agent: Analyzes the generated output for errors or enhancements.

– Enchancment Loop: If points are discovered, the principle agent is re-invoked with suggestions for refinement. This loop continues till the output meets high quality requirements.

A. You’ll want the next dependencies:

– langgraph-reflection

– langchain

– pyright (for code evaluation)

– faiss (for vector search)

– openai (for GPT-based fashions)

To put in them, run: pip set up langgraph-reflection langchain pyright faiss openai

A. LangGraph Reflection excels at duties like:

– Python code validation and enchancment.

– Pure language responses requiring fact-checking.

– Doc summarization with readability and completeness.

– Guaranteeing AI-generated content material adheres to security tips.

A. No, whereas now we have proven Pyright in code correction examples, the framework may even assist in bettering textual content summarization, information validation, and chatbot response refinement.

Login to proceed studying and revel in expert-curated content material.