Sequence models are deep learning models designed to process sequential data. In such data, the context provided by the earlier elements is important for prediction, unlike plain CNNs, which process data organized into a grid-like structure (images).

Applications of sequence modeling are seen in various fields. For example, it is used in Natural Language Processing (NLP) for language translation, text generation, and sentiment classification. It is also widely used in speech recognition, where spoken language is converted into text, as well as in music generation and stock forecasting.

In this blog, we’ll delve into various types of sequential architectures, how they work and differ from each other, and look into their applications.

About Us: At Viso.ai, we power Viso Suite, the most complete end-to-end computer vision platform. We provide all the computer vision services and AI vision experience you’ll need. Get in touch with our team of AI experts and schedule a demo to see the key features.

History of Sequence Models

The evolution of sequence models mirrors the overall progress in deep learning, marked by gradual improvements and significant breakthroughs in overcoming the hurdles of processing sequential data. Sequence models have enabled machines to handle and generate intricate data sequences with ever-growing accuracy and efficiency. We’ll discuss the following sequence models in this blog:

- Recurrent Neural Networks (RNNs): The concept of RNNs was introduced by John Hopfield and others in the 1980s.

- Long Short-Term Memory (LSTM): In 1997, Sepp Hochreiter and Jürgen Schmidhuber proposed LSTM network models.

- Gated Recurrent Unit (GRU): Kyunghyun Cho and his colleagues introduced GRUs in 2014, a simplified variation of LSTM.

- Transformers: The Transformer model was introduced by Vaswani et al. in 2017, creating a major shift in sequence modeling.

Sequence Model 1: Recurrent Neural Networks (RNN)

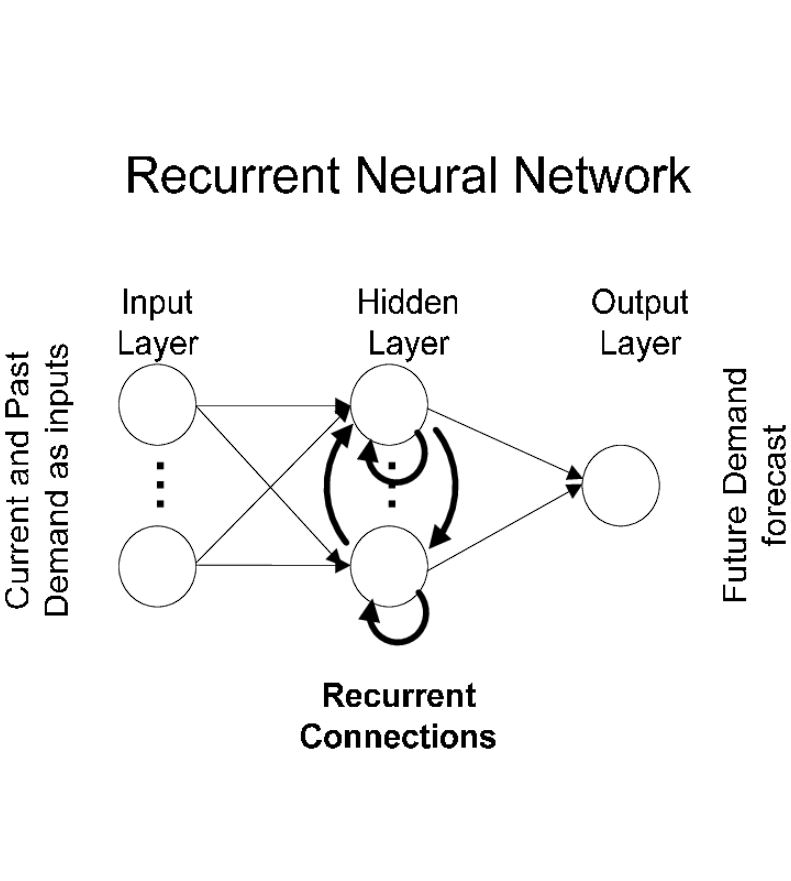

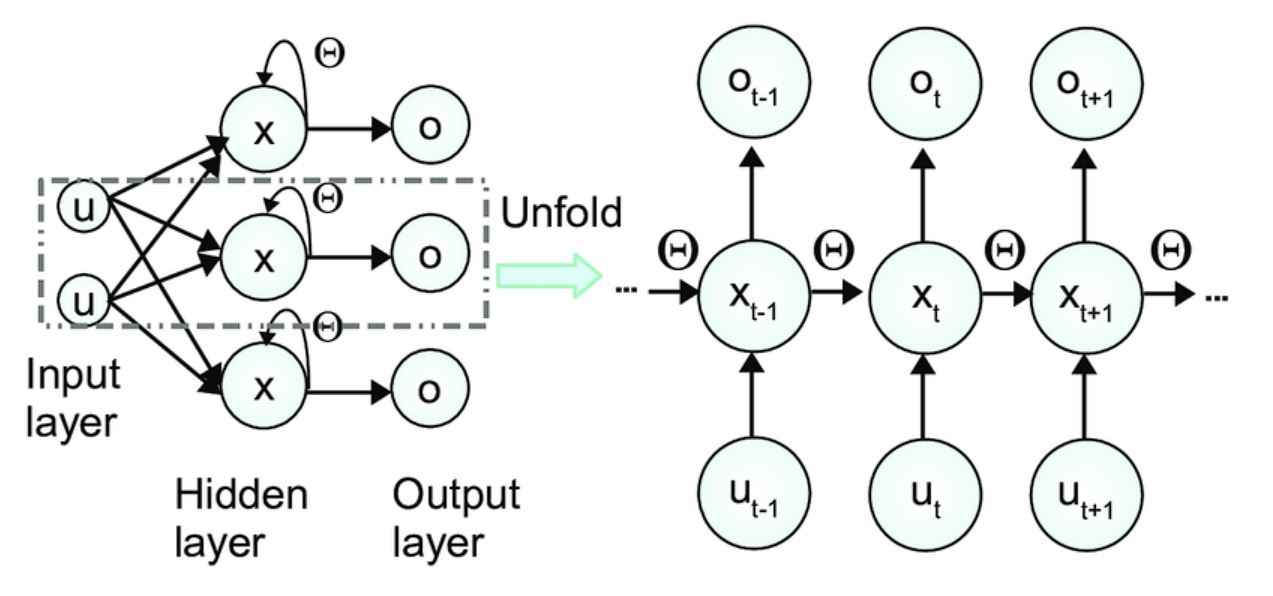

RNNs are essentially feed-forward networks with an internal memory that helps in predicting the next element in a sequence. This memory comes from the recurrent nature of RNNs: they use a hidden state to gather context about the sequence given as input.

Unlike feed-forward networks, which simply perform transformations on the input provided, RNNs use their internal memory to process inputs. Therefore, whatever the model has learned in the previous time steps influences its prediction.

This property of RNNs is what makes them useful for applications such as predicting the next word (Google autocomplete) and speech recognition, because in order to predict the next word, it is crucial to know what the previous words were.

Let us now look at the architecture of RNNs.

Input

The input given to the model at time step t is usually denoted as x_t.

For example, if we take the word “kittens”, each letter is considered a separate time step.

Hidden State

This is the important part of an RNN that allows it to handle sequential data. The hidden state at time t is represented as h_t, which acts as a memory. Therefore, while making predictions, the model considers what it has learned over time (the hidden state) and combines it with the current input.

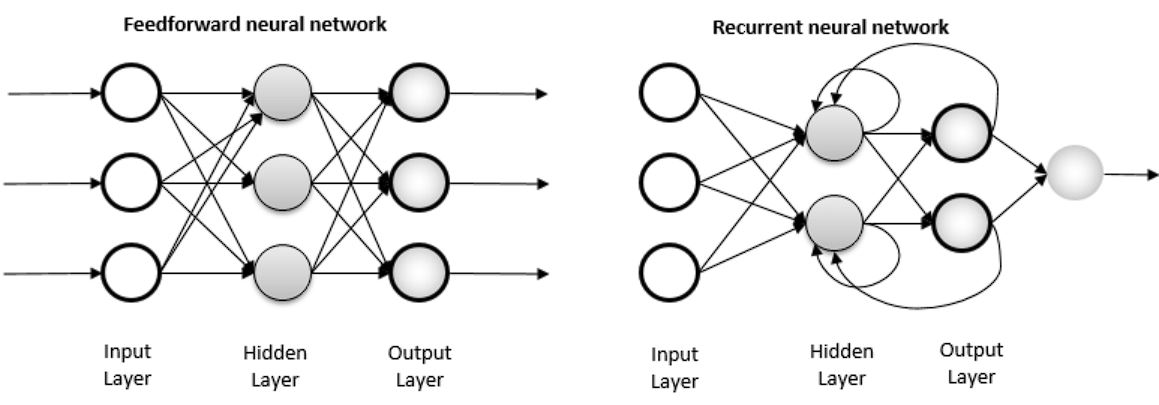

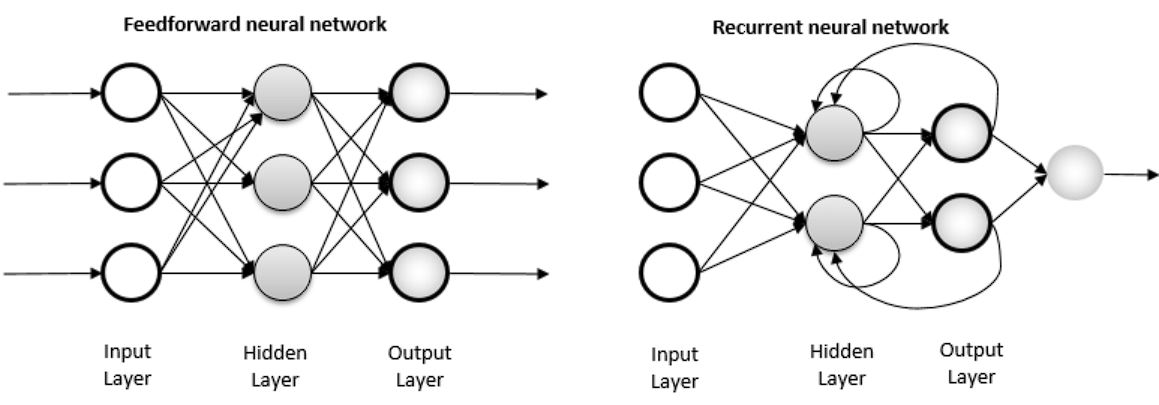

RNNs vs Feed-Forward Networks

In a standard feed-forward neural network or Multi-Layer Perceptron, the data flows only in one direction: from the input layer, through the hidden layers, to the output layer. There are no loops in the network, and the output of any layer does not affect that same layer in the future. Each input is independent and does not affect the next input; in other words, there are no long-term dependencies.

In contrast, in an RNN model, the information cycles through a loop. When the model makes a prediction, it considers the current input and what it has learned from the previous inputs.

Weights

There are three different weight matrices used in RNNs, all of which are learned by the network:

- Input-to-Hidden Weights (W_xh): These weights connect the input to the hidden state.

- Hidden-to-Hidden Weights (W_hh): These weights connect the previous hidden state to the current hidden state.

- Hidden-to-Output Weights (W_hy): These weights connect the hidden state to the output.

Bias Vectors

Two bias vectors are used, one for the hidden state and the other for the output.

Activation Functions

The two functions commonly used are tanh and ReLU, where tanh is used for the hidden state.

A single pass through the network looks like this:

At time step t, given input x_t and previous hidden state h_{t-1}:

- The network computes the intermediate value z_t using the input, the previous hidden state, the weights, and the biases.

- It then applies the activation function tanh to z_t to get the new hidden state h_t.

- The network then computes the output y_t using the new hidden state, the output weights, and the output bias.

This process is repeated for each time step in the sequence, and the next letter or word in the sequence is predicted.
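The three steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the layer sizes, the weight values, and the helper names `matvec` and `rnn_step` are assumptions made for the example, and the output y_t is left as raw scores without an output activation.

```python
import math

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_step(x_t, h_prev, W_xh, W_hh, W_hy, b_h, b_y):
    """One forward step of a vanilla RNN; returns (h_t, y_t)."""
    # z_t combines the current input with the previous hidden state.
    z_t = [a + b + c for a, b, c in
           zip(matvec(W_xh, x_t), matvec(W_hh, h_prev), b_h)]
    # tanh squashes z_t into the new hidden state h_t.
    h_t = [math.tanh(z) for z in z_t]
    # The output is computed from the new hidden state.
    y_t = [a + b for a, b in zip(matvec(W_hy, h_t), b_y)]
    return h_t, y_t

# Toy sizes (assumed): 2 input features, 3 hidden units, 2 outputs.
W_xh = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]]
W_hh = [[0.2, 0.0, -0.1], [0.1, 0.3, 0.0], [0.0, -0.2, 0.1]]
W_hy = [[0.3, -0.1, 0.2], [-0.4, 0.5, 0.1]]
b_h = [0.0, 0.0, 0.0]
b_y = [0.0, 0.0]

sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
h = [0.0, 0.0, 0.0]  # the hidden state starts empty
outputs = []
for x_t in sequence:
    # The same weights are reused at every time step.
    h, y = rnn_step(x_t, h, W_xh, W_hh, W_hy, b_h, b_y)
    outputs.append(y)
```

Note that the loop carries `h` from one iteration to the next; that carried value is the memory that a feed-forward network lacks.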

Backpropagation Through Time

A backward pass in a neural network is used to update the weights to minimize the loss. However, in RNNs it is a bit more complex than in a standard feed-forward network, so the standard backpropagation algorithm is customized to incorporate the recurrent nature of RNNs.

In a feed-forward network, backpropagation looks like this:

- Forward Pass: The model computes the activations and outputs of each layer, one by one.

- Backward Pass: It then computes the gradients of the loss with respect to the weights, working backward from the output and repeating the process for all the layers.

- Parameter Update: Update the weights and biases using the gradient descent algorithm.
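As a rough sketch of these three phases, here is a tiny two-layer network with a single weight per layer. The network shape, the squared-error loss, the learning rate, and the function name `train_step` are all assumptions chosen to keep the example short.

```python
import math

def train_step(w1, w2, x, target, lr=0.1):
    """One forward pass, backward pass, and parameter update."""
    # Forward pass: compute each layer's output in order.
    h = math.tanh(w1 * x)   # hidden layer
    y = w2 * h              # output layer
    loss = 0.5 * (y - target) ** 2

    # Backward pass: apply the chain rule from the output back to the input.
    dy = y - target               # dLoss/dy
    dw2 = dy * h                  # gradient for the output-layer weight
    dh = dy * w2                  # error passed back to the hidden layer
    dw1 = dh * (1.0 - h * h) * x  # tanh'(z) = 1 - tanh(z)^2

    # Parameter update: one gradient descent step.
    return w1 - lr * dw1, w2 - lr * dw2, loss

w1, w2 = 0.5, 0.5
losses = []
for _ in range(200):
    w1, w2, loss = train_step(w1, w2, x=1.0, target=0.8)
    losses.append(loss)
```

After the loop, `losses` should shrink toward zero, which is the sign that the updates are moving the weights in the right direction.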

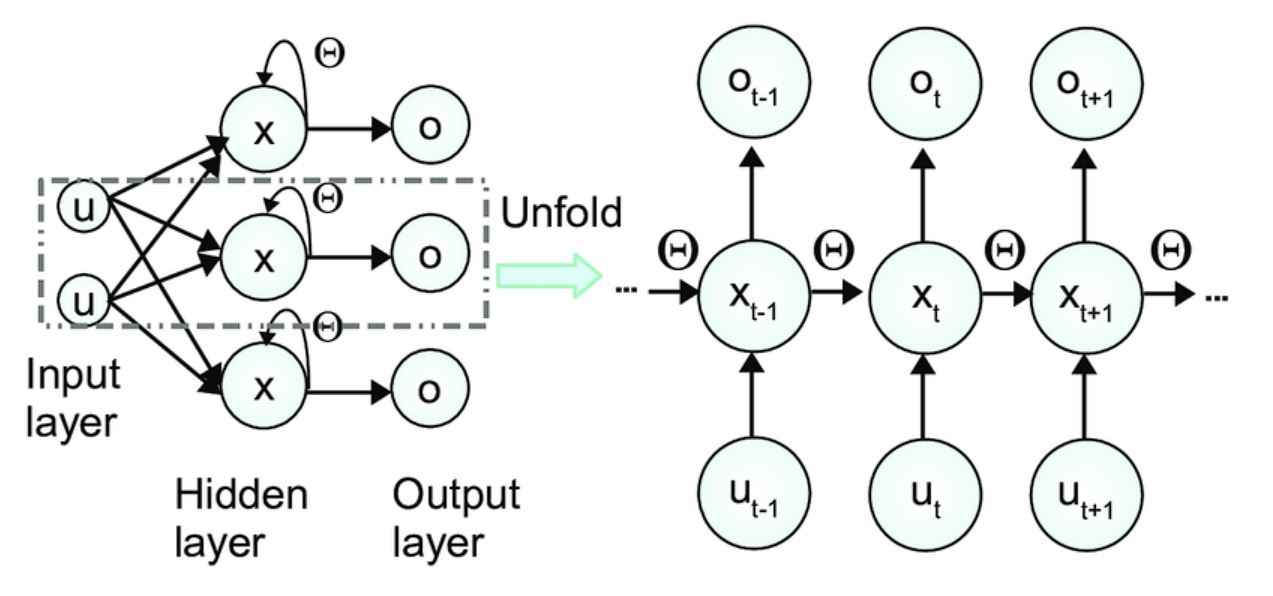

However, in RNNs, this process is adjusted to incorporate the sequential data. To learn to predict the next word correctly, the model needs to learn which weights in the previous time steps led to the correct or incorrect prediction.

Therefore, an unrolling process is performed. Unrolling an RNN means laying out one copy of the network for each time step, with every copy sharing the same weights. For example, if we have t time steps, then there will be t unrolled copies.

Once this is done, the losses are calculated for each time step, and then the model computes the gradients of the loss with respect to the hidden states, weights, and biases, backpropagating the error through the unrolled network.
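To make the unrolling concrete, here is a sketch of backpropagation through time for a scalar RNN with one input weight and one recurrent weight. The simplifications are assumptions for illustration: there are no biases, the hidden state doubles as the output, and the loss is a squared error summed over time steps. The names `forward`, `loss_fn`, and `bptt` are hypothetical.

```python
import math

def forward(w_x, w_h, xs):
    """Unroll the RNN over the sequence; hs[0] is the initial state."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1]))
    return hs

def loss_fn(hs, targets):
    """Sum of per-time-step squared errors."""
    return sum(0.5 * (h - t) ** 2 for h, t in zip(hs[1:], targets))

def bptt(w_x, w_h, xs, targets):
    """Gradients of the total loss with respect to the shared weights."""
    hs = forward(w_x, w_h, xs)
    dw_x = dw_h = 0.0
    dh_next = 0.0  # error flowing back from step t+1 into h_t
    for t in range(len(xs), 0, -1):  # walk the unrolled copies backward
        dh = (hs[t] - targets[t - 1]) + dh_next
        dz = dh * (1.0 - hs[t] ** 2)  # back through tanh
        # The weights are shared, so each step adds to the same gradient.
        dw_x += dz * xs[t - 1]
        dw_h += dz * hs[t - 1]
        dh_next = dz * w_h  # pass the error to the previous time step
    return dw_x, dw_h

xs = [1.0, -0.5, 0.25]
targets = [0.5, 0.0, -0.5]
w_x, w_h = 0.7, -0.3
dw_x, dw_h = bptt(w_x, w_h, xs, targets)
```

The key detail is `dh_next`: the error at a given step includes whatever later steps send back, which is how early time steps get credit or blame for later predictions.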

This pretty much explains the working of RNNs.

RNNs face serious limitations, such as the exploding and vanishing gradient problems and limited memory. Together, these limitations make training RNNs difficult. As a result, LSTMs were developed, which build on the foundation of RNNs with a few modifications.
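A deliberately simplified way to see the vanishing and exploding gradient problems: when the error travels back through many time steps, it is multiplied by the recurrent weight again and again. The sketch below assumes a single scalar recurrent weight and ignores the activation function, so it only shows the trend, not the exact gradient of a real RNN. The function name `gradient_scale` is hypothetical.

```python
def gradient_scale(w_hh, steps):
    """Factor applied to an error signal after `steps` steps back in time."""
    scale = 1.0
    for _ in range(steps):
        scale *= w_hh  # one multiplication per time step
    return scale

vanishing = gradient_scale(0.5, 50)  # weight below 1: the signal dies out
exploding = gradient_scale(1.5, 50)  # weight above 1: the signal blows up
```

With a weight below 1 the factor becomes vanishingly small after 50 steps, and with a weight above 1 it becomes enormous, which is why plain RNNs struggle with long sequences.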

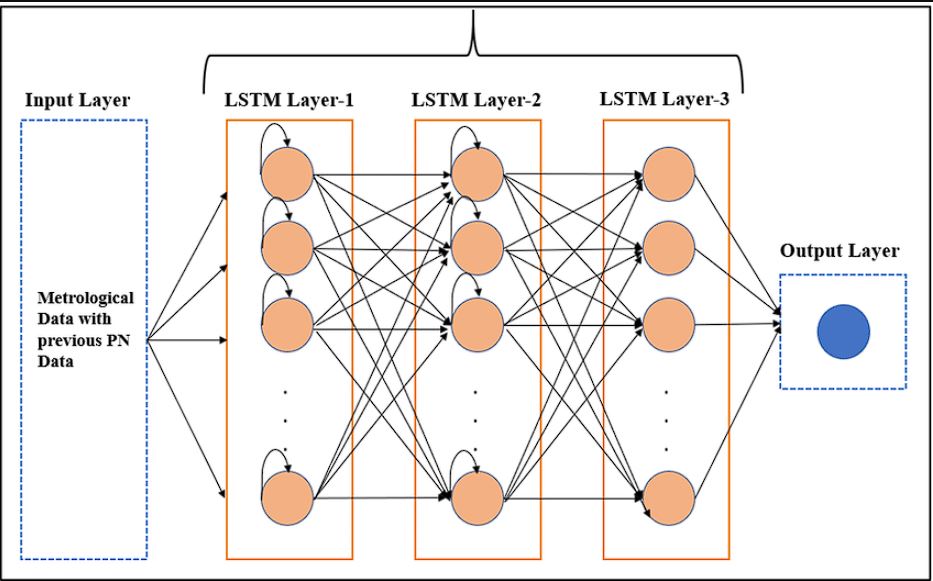

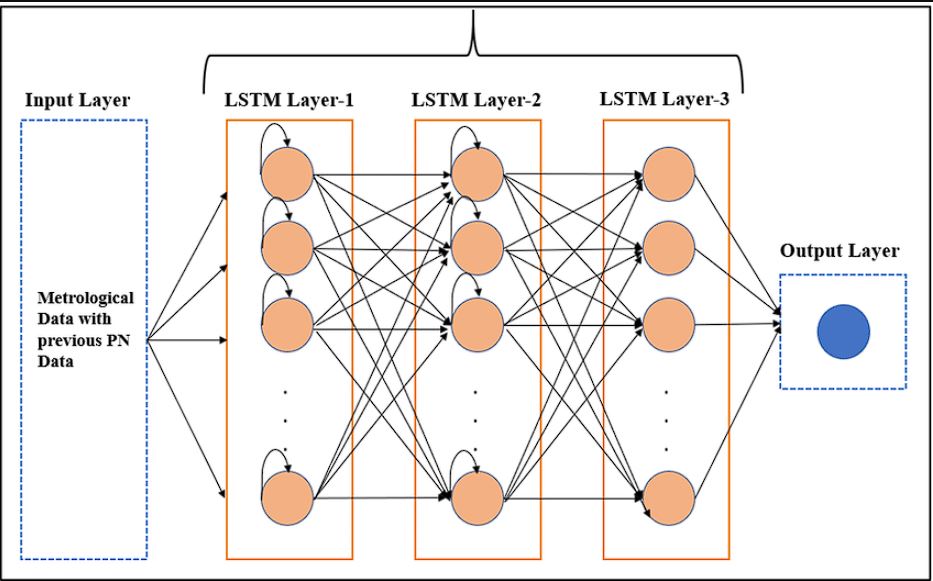

Sequence Model 2: Long Short-Term Memory Networks (LSTM)

LSTM networks are a special kind of RNN-based sequence model designed to mitigate the vanishing and exploding gradient problems, and they are used in applications such as sentiment analysis. As we discussed above, LSTM builds on the foundation of RNNs and hence is similar to them, but it introduces a gating mechanism that allows it to hold memory over a longer period.

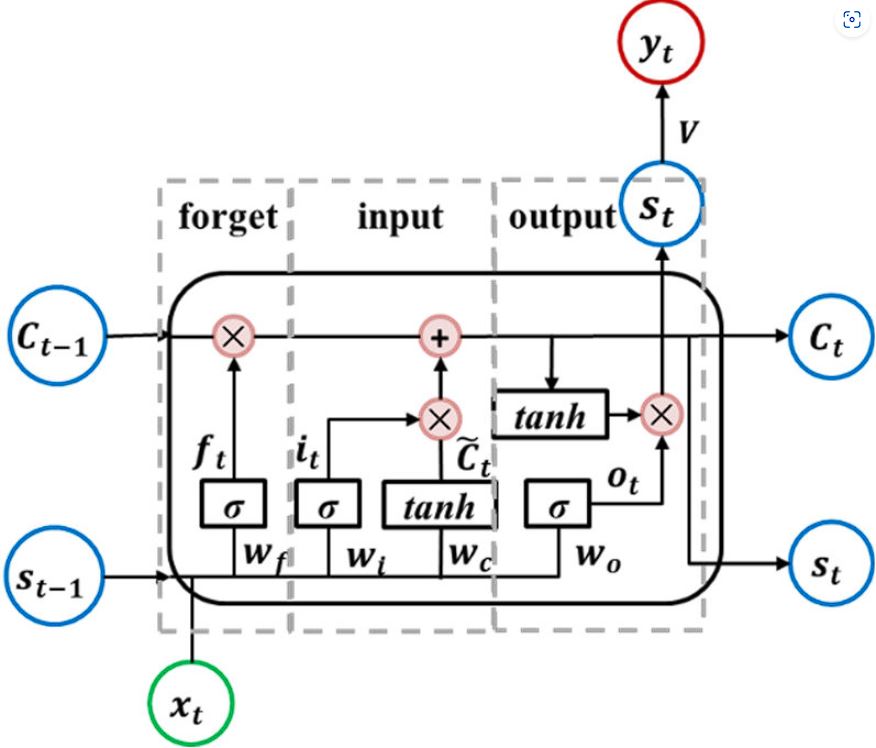

An LSTM network consists of the following components.

Cell State

The cell state in an LSTM network is a vector that functions as the memory of the network by carrying information across different time steps. It runs down the entire sequence chain with only a few linear interactions, controlled by the forget gate, input gate, and output gate.

Hidden State

The hidden state is the short-term memory, in contrast to the cell state, which stores memory for a longer period. The hidden state serves as a message carrier, carrying information from the previous time step to the next, just like in RNNs. It is updated based on the previous hidden state, the current input, and the current cell state.

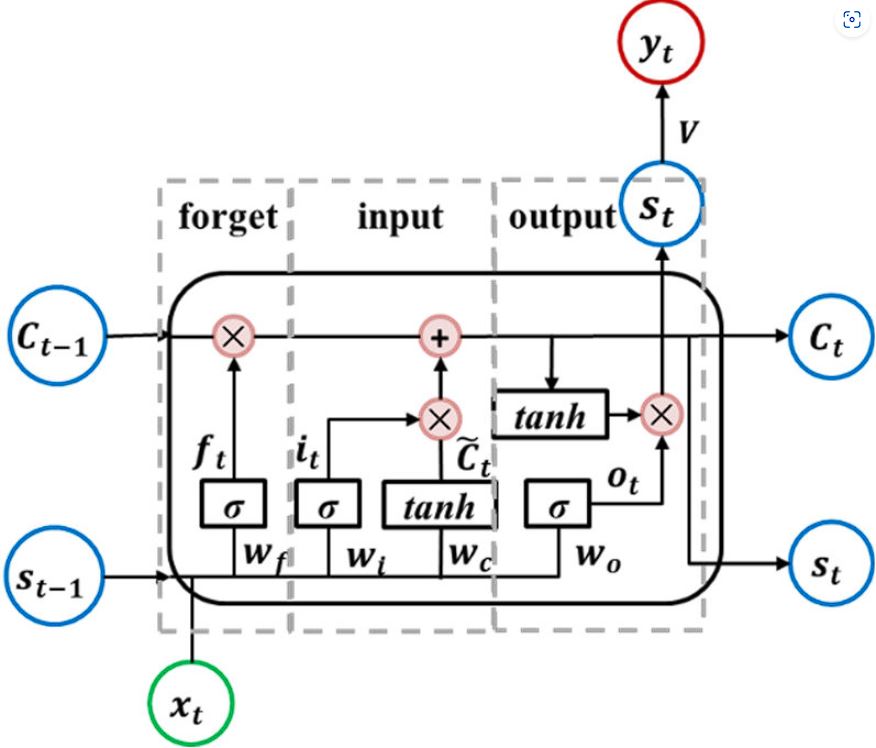

LSTMs use three different gates to control the information stored in the cell state.

Forget Gate Operation

The forget gate decides which information from the previous cell state should be carried forward and which should be forgotten. It gives an output value between 0 and 1 for each element in the cell state. A value of 0 means that the information is completely forgotten, while a value of 1 means that the information is completely retained.

This is applied through element-wise multiplication of the forget gate output with the previous cell state.
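Here is a small sketch of that element-wise multiplication. The cell state values and the gate pre-activations in `forget_logits` are made-up numbers; in a real LSTM the pre-activations are computed from the current input and the previous hidden state.

```python
import math

def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

c_prev = [2.0, -1.0, 0.5]            # previous cell state (assumed values)
forget_logits = [10.0, -10.0, 0.0]   # hypothetical gate pre-activations

# The forget gate outputs one value between 0 and 1 per cell-state element.
f_t = [sigmoid(z) for z in forget_logits]

# Element-wise multiplication: keep, drop, or partially keep each element.
kept = [f * c for f, c in zip(f_t, c_prev)]
```

The first element is kept almost entirely, the second is almost entirely forgotten, and the third is halved because its gate value is exactly 0.5.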

Input Gate Operation

The input gate controls which new information is added to the cell state. It consists of two parts: the input gate layer and the cell candidate. The input gate layer uses a sigmoid function to output values between 0 and 1, deciding the importance of new information, while the cell candidate proposes the new values to be added.

The values output by the gates are not discrete; they lie on a continuous spectrum between 0 and 1. This is due to the sigmoid activation function, which squashes any number into the range between 0 and 1.

Output Gate Operation

The output gate decides what the next hidden state should be, by deciding how much of the cell state is exposed to the hidden state.

Let us now look at how all these components work together to make predictions.

- At each time step t, the network receives an input x_t.

- For each input, the LSTM calculates the values of the different gates. Note that the gates are computed using learnable weights, so over time the model gets better at deciding the values of all three gates.

- The model computes the Forget Gate.

- The model then computes the Input Gate.

- It updates the Cell State by combining the previous cell state with the new information, as determined by the values of the gates.

- Then it computes the Output Gate, which decides how much information from the cell state needs to be exposed to the hidden state.

- The hidden state is passed to a fully connected layer to produce the final output.
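The steps above can be sketched as a single `lstm_step` function. This follows the commonly used LSTM formulation in which each gate reads the previous hidden state concatenated with the current input; the parameter names (`W_f`, `W_i`, `W_c`, `W_o`), the sizes, and the random initial weights are assumptions for illustration, and the final fully connected layer is left out.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W v + b for a matrix W (list of rows) and vector v."""
    return [sum(w * x for w, x in zip(row, v)) + bias
            for row, bias in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step; returns the new hidden and cell states."""
    v = h_prev + x_t  # concatenate previous hidden state and input
    f_t = [sigmoid(z) for z in affine(p["W_f"], p["b_f"], v)]      # forget gate
    i_t = [sigmoid(z) for z in affine(p["W_i"], p["b_i"], v)]      # input gate
    c_hat = [math.tanh(z) for z in affine(p["W_c"], p["b_c"], v)]  # candidate
    # Cell state: forget part of the old memory, add part of the candidate.
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_hat)]
    o_t = [sigmoid(z) for z in affine(p["W_o"], p["b_o"], v)]      # output gate
    # Hidden state: the part of the cell state the output gate exposes.
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t

random.seed(0)
hidden, n_in = 2, 3  # toy sizes (assumed)
params = {}
for gate in "fico":
    params["W_" + gate] = [[random.uniform(-0.5, 0.5)
                            for _ in range(hidden + n_in)]
                           for _ in range(hidden)]
    params["b_" + gate] = [0.0] * hidden

h, c = [0.0] * hidden, [0.0] * hidden
for x_t in [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]:
    h, c = lstm_step(x_t, h, c, params)
```

Compared with the plain RNN step, the only new state is `c`, and the only new operations are the three gates that decide what enters, stays in, and leaves it.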

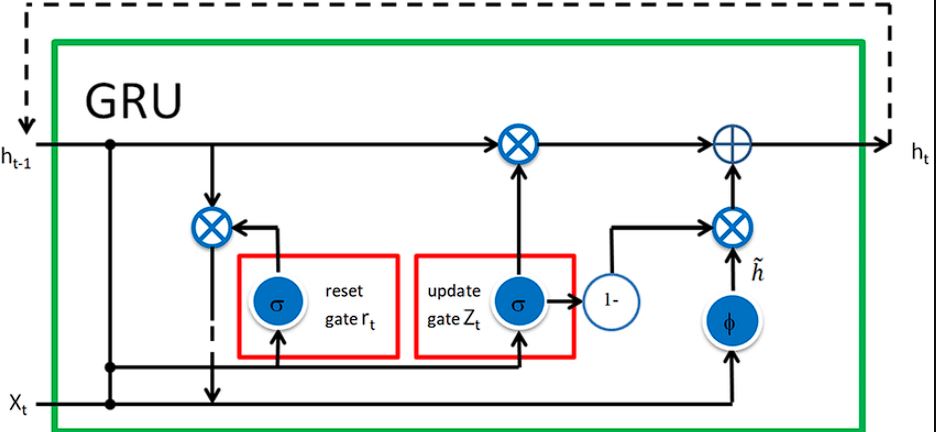

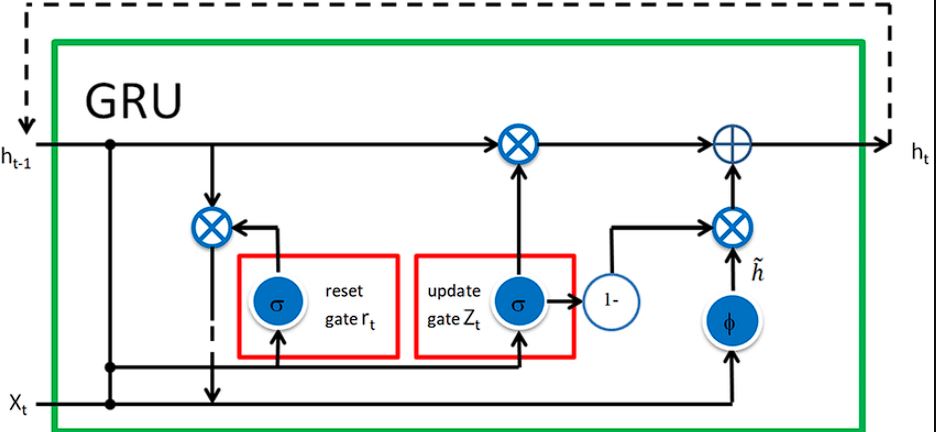

Sequence Model 3: Gated Recurrent Unit (GRU)

LSTM and Gated Recurrent Unit are both types of recurrent networks. However, GRUs differ from LSTMs in the number of gates they use. A GRU is simpler than an LSTM and uses only two gates instead of the three gates found in an LSTM.

Moreover, a GRU is also simpler than an LSTM in terms of memory, as it only uses the hidden state for memory. Here are the components of a GRU:

- The update gate controls how much past information needs to be carried forward.

- The reset gate controls how much of the information in memory to forget.

- The hidden state stores information from the previous time step.
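A minimal sketch of one GRU step, using the convention in which an update gate value close to 1 carries the old hidden state forward. The parameter names and the hand-picked weights are assumptions; they are chosen so that the effect of the update gate is easy to see.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W v + b for a matrix W (list of rows) and vector v."""
    return [sum(w * x for w, x in zip(row, v)) + bias
            for row, bias in zip(W, b)]

def gru_step(x_t, h_prev, p):
    """One GRU time step; returns the new hidden state."""
    v = h_prev + x_t  # concatenate previous hidden state and input
    z_t = [sigmoid(a) for a in affine(p["W_z"], p["b_z"], v)]  # update gate
    r_t = [sigmoid(a) for a in affine(p["W_r"], p["b_r"], v)]  # reset gate
    # The reset gate scales the old state before it feeds the candidate.
    v_reset = [r * h for r, h in zip(r_t, h_prev)] + x_t
    h_hat = [math.tanh(a) for a in affine(p["W_h"], p["b_h"], v_reset)]
    # Blend the old state and the candidate according to the update gate.
    return [z * h + (1.0 - z) * g for z, h, g in zip(z_t, h_prev, h_hat)]

def make_params(b_z):
    """Hypothetical parameters: 2 hidden units, 1 input feature."""
    zeros = [[0.0] * 3 for _ in range(2)]
    return {
        "W_z": zeros, "b_z": [b_z, b_z],
        "W_r": zeros, "b_r": [0.0, 0.0],
        "W_h": [[0.0, 0.0, 1.0], [0.0, 0.0, -1.0]], "b_h": [0.0, 0.0],
    }

h_prev = [0.4, -0.8]
kept = gru_step([1.0], h_prev, make_params(b_z=20.0))       # gate near 1
replaced = gru_step([1.0], h_prev, make_params(b_z=-20.0))  # gate near 0
```

With the update gate pushed toward 1, `kept` stays very close to `h_prev`; pushed toward 0, `replaced` is almost entirely the new candidate. There is no separate cell state, which is what makes the GRU lighter than the LSTM.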

Sequence Model 4: Transformer Models

The Transformer model has been quite a breakthrough in the world of deep learning and has drawn the world’s attention. Various LLMs, such as ChatGPT and Google’s Gemini, use the Transformer architecture in their models.

The Transformer architecture differs from the previous models we have discussed in its ability to give varying importance to different parts of the sequence of words it has been provided. This is known as the self-attention mechanism and has proven useful for long-range dependencies in text.

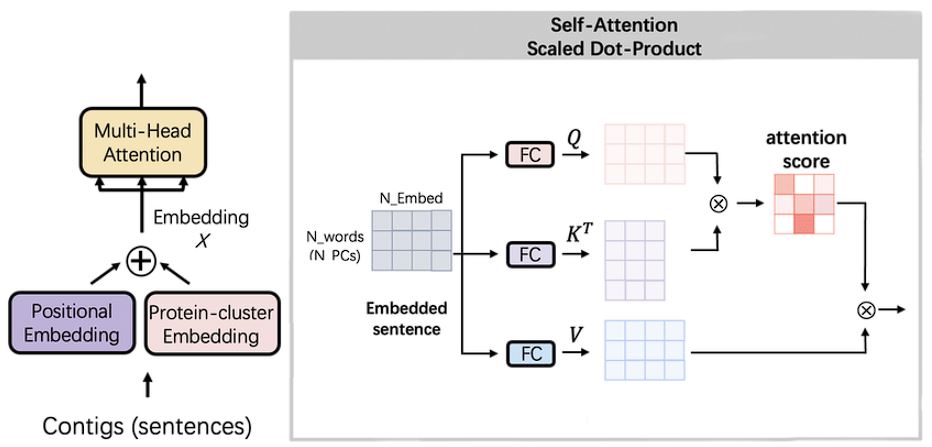

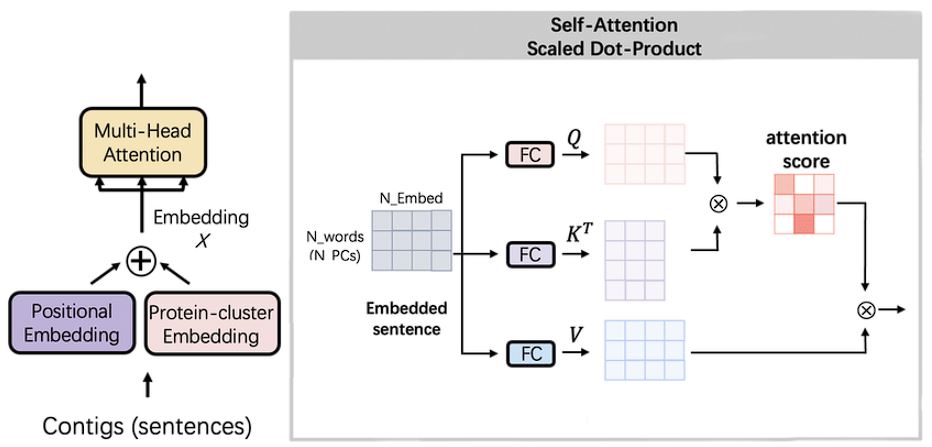

Self-Attention Model

As we discussed above, self-attention is a mechanism that allows the model to give varying importance to different parts of the input data and to extract its important features.

It works by first computing an attention score for each word in the sequence and deriving each word’s relative importance. This process allows the model to focus on the relevant parts of the input and helps it capture the meaning of natural language more effectively than the earlier sequence models.
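The scoring step can be sketched with scaled dot-product attention, the form used in the original Transformer. To keep the example short, it assumes that queries, keys, and values are all the raw input vectors; a real Transformer first applies learned linear projections to obtain them. The toy embeddings in `X` are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X):
    """Scaled dot-product self-attention with Q = K = V = X (simplified)."""
    d = len(X[0])
    weights = []
    for q in X:
        # Score this word against every word in the sequence.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in X]
        weights.append(softmax(scores))
    # Each output is a weighted average of all the word vectors.
    outputs = [[sum(w * v[j] for w, v in zip(row, X)) for j in range(d)]
               for row in weights]
    return outputs, weights

# Three toy word embeddings; the first and third are identical.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(X)
```

Each row of `weights` sums to 1, and the first word gives more weight to itself and to the identical third word than to the second, which is the "varying importance" described above.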

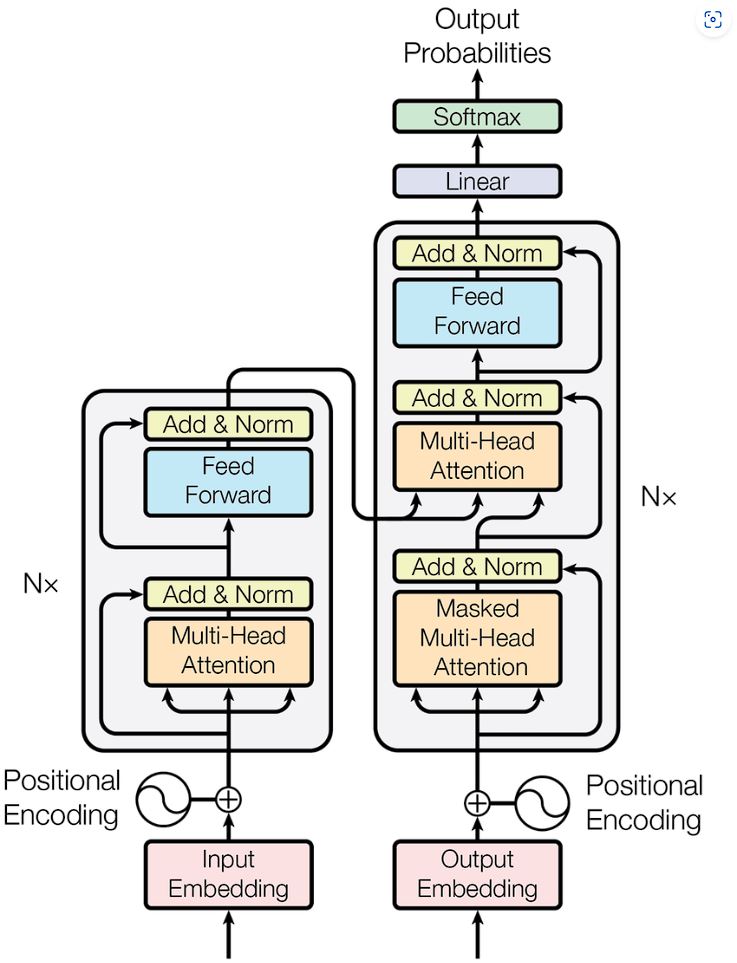

Architecture of the Transformer Model

The key feature of the Transformer model is its self-attention mechanism, which allows it to process data in parallel rather than sequentially as in Recurrent Neural Networks (RNNs) or Long Short-Term Memory networks (LSTMs).

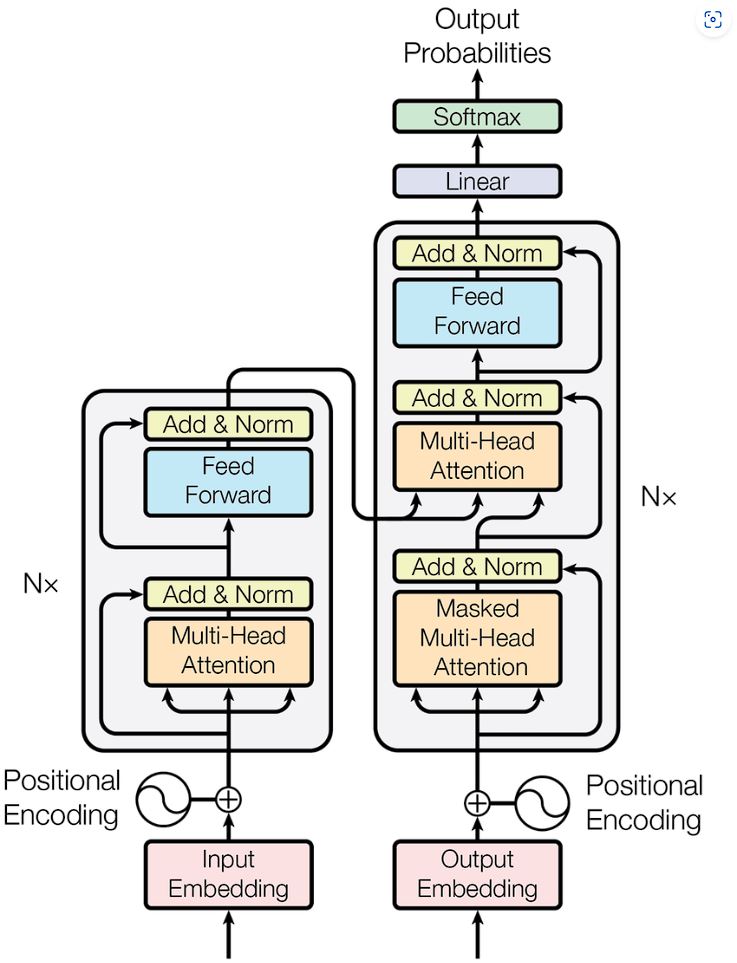

The Transformer architecture consists of an encoder and a decoder.

Encoder

The encoder consists of a stack of identical layers. Each layer has two sub-layers:

- Multi-head self-attention mechanism.

- Fully connected feed-forward network.

The output of each sub-layer passes through a residual connection and layer normalization before it is fed into the next sub-layer.
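The residual connection and layer normalization step can be sketched as follows. This version omits the learned scale and shift parameters that layer normalization usually carries, and the `sublayer` passed in is a stand-in for the attention or feed-forward sub-layer; both are simplifications made for the example.

```python
import math

def layer_norm(v, eps=1e-5):
    """Normalize a vector to roughly zero mean and unit variance."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]

def add_and_norm(x, sublayer):
    """Residual connection followed by layer normalization."""
    # Residual: add the sub-layer's output back onto its own input.
    summed = [a + b for a, b in zip(x, sublayer(x))]
    return layer_norm(summed)

x = [1.0, 2.0, 3.0, 4.0]
# Hypothetical sub-layer that simply halves its input.
normalized = add_and_norm(x, lambda v: [0.5 * t for t in v])
```

The residual path lets the original input pass through unchanged alongside the sub-layer output, and the normalization keeps the values in a stable range from layer to layer.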

“Multi-head” here means that the model has multiple sets (or “heads”) of learned linear transformations that it applies to the input. This is important because it enhances the modeling capabilities of the network.

For example, take the sentence: “The cat, which already ate, was full.” With multi-head attention, different heads can focus on different relationships:

- Head 1 may focus on the relationship between “cat” and “ate”, helping the model understand who did the eating.

- Head 2 may focus on the relationship between “ate” and “full”, helping the model understand why the cat is full.

As a result, the model can process the input in parallel and extract the context better.

Decoder

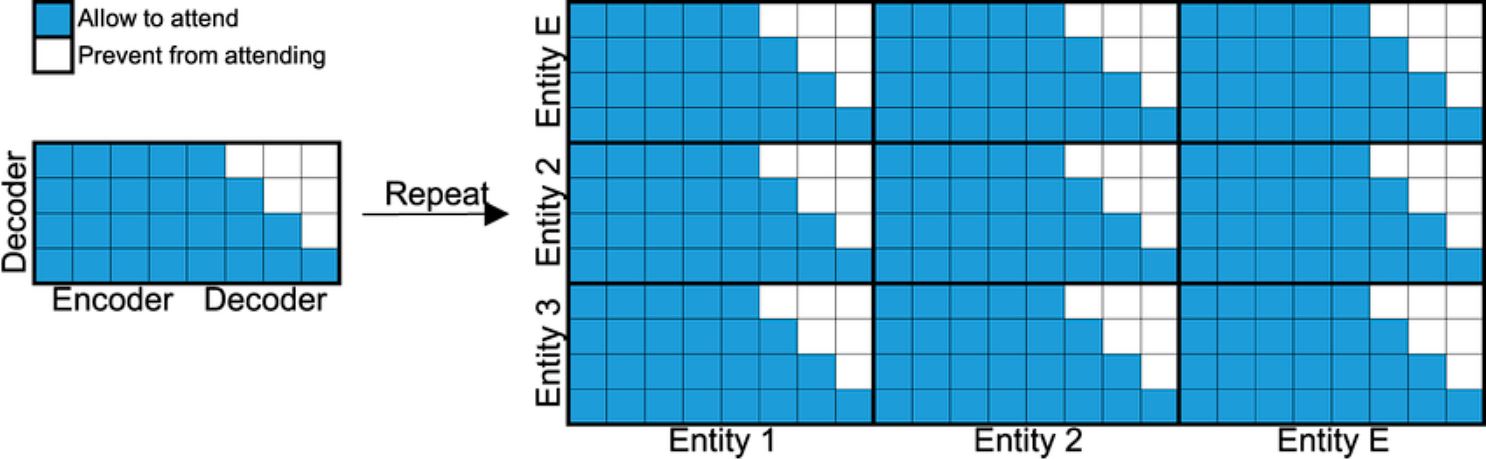

The decoder has a similar structure to the encoder, with one difference: it uses masked multi-head attention. Its main components are:

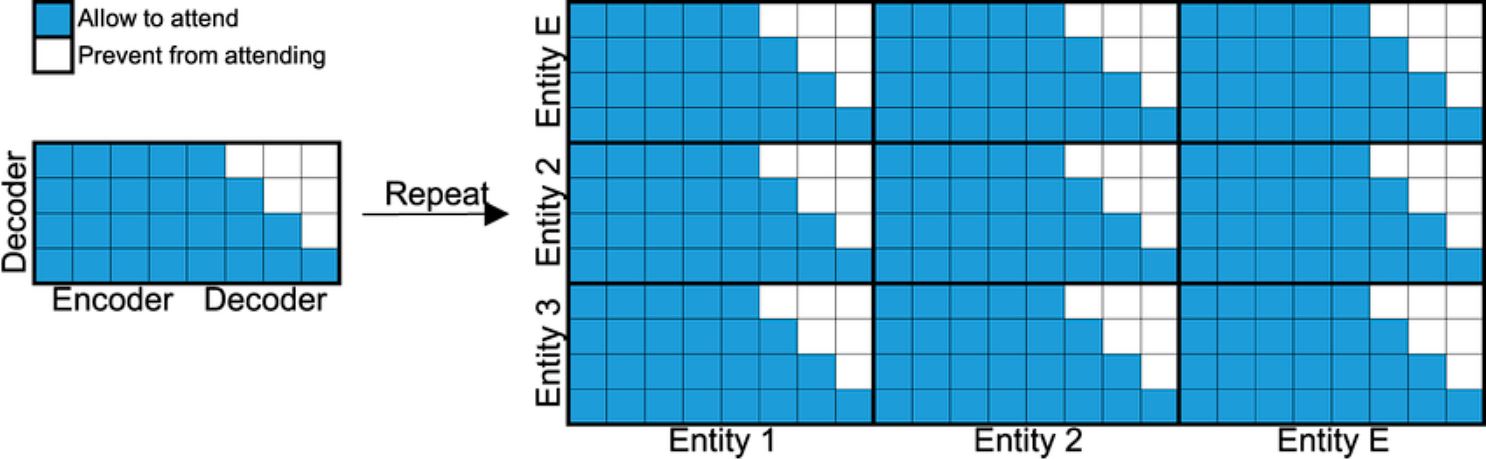

- Masked Self-Attention Layer: Similar to the self-attention layer in the encoder, but involves masking.

- Encoder-Decoder Attention Layer: Attends over the output of the encoder.

- Feed-Forward Neural Network.

The “masked” part of the term refers to a technique used during training where future tokens are hidden from the model.

The reason for this is that during training, the whole sequence (sentence) is fed into the model at once. This poses a problem: the model would already know what the next word is, so there would be no learning involved in its prediction. Masking hides the upcoming words in the training sequence, which forces the model to make its own prediction.

For example, let’s consider a machine translation task, where we want to translate the English sentence “I am a student” to French: “Je suis un étudiant”.

[START] Je suis un étudiant [END]

Here’s how the masked layer helps with prediction:

- When predicting the first word “Je”, we mask out (ignore) all the other words. So, the model doesn’t know the next words (it just sees [START]).

- When predicting the next word “suis”, we mask out “suis” and the words to its right. This means the model can’t see “suis un étudiant [END]” when making its prediction. It only sees [START] Je.
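The masking described above can be sketched as a lower-triangular mask applied before the softmax. The uniform `raw_scores` are placeholder values standing in for real attention scores, and the function names `causal_mask` and `masked_softmax` are hypothetical.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i is allowed to see position j."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, allowed):
    """Softmax that gives zero weight to masked-out positions."""
    masked = [s if ok else float("-inf") for s, ok in zip(scores, allowed)]
    m = max(masked)
    exps = [math.exp(s - m) for s in masked]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

# Decoder input during training: position i is used to predict token i + 1.
tokens = ["[START]", "Je", "suis", "un", "étudiant"]
mask = causal_mask(len(tokens))

raw_scores = [1.0] * len(tokens)  # placeholder attention scores
weights = [masked_softmax(raw_scores, row) for row in mask]

# Which tokens each position can actually attend to.
visible = [[tok for tok, ok in zip(tokens, row) if ok] for row in mask]
```

Here `visible[0]` contains only `[START]` (used to predict “Je”), and `visible[1]` contains `[START]` and `Je` (used to predict “suis”), matching the two cases in the list above.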

Summary

In this blog, we looked into the different neural network architectures that are used for sequence modeling. We started with RNNs, which serve as a foundational model for LSTM and GRU. RNNs differ from standard feed-forward networks because of the memory that their recurrent nature provides: the network stores the hidden state from one time step and uses it as input at the next time step. However, training RNNs turned out to be difficult. As a result, we saw the introduction of LSTM and GRU, which use gating mechanisms to store information for an extended time.

Finally, we looked at the Transformer model, an architecture that is used in notable LLMs such as ChatGPT and Gemini. Transformers differ from other sequence models because of their self-attention mechanism, which allows the model to give varying importance to different parts of the sequence, resulting in human-like comprehension of text.
