The world of AI and Large Language Models (LLMs) moves quickly. Integrating external tools and real-time data is vital for building truly powerful applications. The Model Context Protocol (MCP) offers a standard way to bridge this gap. This guide provides a clear, beginner-friendly walkthrough for creating an MCP client and server using LangChain. Understanding the MCP client-server architecture helps you build robust AI agents. We’ll cover the essentials, including what an MCP server does, and provide a practical MCP client-server example using LangChain.

Understanding the Model Context Protocol (MCP)

So, what is MCP client and server interaction all about? The Model Context Protocol (MCP) is an open standard. Anthropic developed it to connect LLMs with external tools and data sources effectively, using a structured and reusable approach. MCP helps AI models talk to different systems. This allows them to access current information and perform tasks beyond their initial training. Think of it as a universal translator between the AI and the outside world, forming the core of the MCP client-server architecture.

Key Features of MCP

MCP stands out due to several important features:

- Standardized Integration: MCP provides a single, consistent way to connect LLMs to many tools and data sources. This removes the need for custom code for every connection and simplifies the LangChain-based MCP client-server setup.

- Context Management: The protocol ensures the AI model keeps track of the conversation context across multiple steps. This prevents losing important information when tasks require several interactions.

- Security and Isolation: MCP includes strong security measures. It controls access strictly and keeps server connections separate using permission boundaries. This ensures safe communication between the client and server.
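To make the idea of one consistent interface more concrete, here is a minimal sketch of what a tool invocation can look like on the wire. MCP messages follow JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The helper name `build_tool_call` is hypothetical and only for illustration; in practice the MCP SDK builds and sends these messages for you.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking a server to run one tool.

    Illustrative helper (not part of any SDK): it only shows the message shape.
    """
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Every tool, whatever it does, is called with the same envelope.
message = build_tool_call(1, "add", {"a": 7, "b": 9})
print(message)
```

Because every tool shares this envelope, a client needs no tool-specific glue code: only the `name` and `arguments` change from call to call.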

Role of MCP in LLM-Based Applications

LLM applications often need outside data. They may need to query databases, fetch documents, or use web APIs. MCP acts as a crucial middle layer. It lets models interact with these external resources smoothly, without manual steps. Building an MCP client and server with LangChain lets developers build smarter AI agents. These agents become more capable, work faster, and operate securely within a well-defined MCP client-server architecture. This setup is fundamental for advanced AI assistants. Now let’s look at the implementation.

Setting Up the Environment

Before building our MCP client and server using LangChain, let’s prepare the environment. You need the following:

- Python version 3.11 or newer.

- A new virtual environment (optional).

- An API key (e.g., OpenAI or Groq, depending on the model you choose).

- Specific Python libraries: langchain-mcp-adapters, langgraph, and an LLM library of your choice (like langchain-openai or langchain-groq).

Install the needed libraries using pip. Open your terminal or command prompt and run:

    pip install langchain-mcp-adapters langgraph langchain-groq  # or langchain-openai

Make sure you have the correct Python version and the necessary keys ready.
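If you want to confirm the setup before moving on, a small check like the following can help. This is a sketch using only the standard library; the function name `check_environment` is made up for this example, and the package list assumes you chose langchain-groq.

```python
import sys
from importlib import metadata

# Assumed package list; swap in langchain-openai if that is your LLM library.
REQUIRED = ["langchain-mcp-adapters", "langgraph", "langchain-groq"]


def check_environment(packages=REQUIRED, min_python=(3, 11)):
    """Report whether Python is new enough and which packages are installed."""
    report = {"python_ok": sys.version_info >= min_python}
    for name in packages:
        try:
            report[name] = metadata.version(name)  # installed version string
        except metadata.PackageNotFoundError:
            report[name] = None  # not installed in this environment
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

A value of `None` next to a package name means it is missing from the active environment.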

Building the MCP Server

The MCP server’s job is to provide tools the client can use. In our example, we will build a simple server. It handles basic math operations as well as a more involved weather API call that fetches weather details for a city. Understanding what an MCP server does starts here.

Create a Python file named mcp_server.py:

1. Import the required libraries

    import math
    import sys

    import requests
    from mcp.server.fastmcp import FastMCP

2. Initialize the FastMCP object

    mcp = FastMCP("Math")

3. Define the math tools

    # With the stdio transport, stdout carries the protocol messages,
    # so log to stderr instead of printing to stdout.
    @mcp.tool()
    def add(a: int, b: int) -> int:
        """Add two integers."""
        print(f"Server received add request: {a}, {b}", file=sys.stderr)
        return a + b

    @mcp.tool()
    def multiply(a: int, b: int) -> int:
        """Multiply two integers."""
        print(f"Server received multiply request: {a}, {b}", file=sys.stderr)
        return a * b

    @mcp.tool()
    def sine(a: int) -> float:
        """Return the sine of a (in radians)."""
        print(f"Server received sine request: {a}", file=sys.stderr)
        return math.sin(a)

4. Now define a weather tool. Make sure you have an API key from WeatherAPI.com.

    WEATHER_API_KEY = "YOUR_API_KEY"

    @mcp.tool()
    def get_weather(city: str) -> dict:
        """
        Fetch current weather for a given city using WeatherAPI.com.
        Returns a dictionary with city, temperature (C), and condition.
        """
        print(f"Server received weather request: {city}", file=sys.stderr)
        url = "http://api.weatherapi.com/v1/current.json"
        # Passing params lets requests URL-encode the city name.
        response = requests.get(
            url, params={"key": WEATHER_API_KEY, "q": city}, timeout=10
        )
        if response.status_code != 200:
            return {"error": f"Failed to fetch weather for {city}."}
        data = response.json()
        return {
            "city": data["location"]["name"],
            "region": data["location"]["region"],
            "country": data["location"]["country"],
            "temperature_C": data["current"]["temp_c"],
            "condition": data["current"]["condition"]["text"],
        }

5. Now start the MCP server

    if __name__ == "__main__":
        print("Starting MCP Server....", file=sys.stderr)
        mcp.run(transport="stdio")

Explanation:

This script sets up a simple MCP server named “Math”. It uses FastMCP to define four tools (add, multiply, sine, and get_weather), each marked by the @mcp.tool() decorator. Type hints tell MCP about the expected inputs and outputs. The server communicates over standard input/output (stdio) when executed directly. This demonstrates what an MCP server is in a basic setup.
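To illustrate how type hints can describe a tool, the sketch below derives a simple description from a function’s signature using only the standard library. This is not FastMCP’s actual implementation (the SDK produces a fuller JSON Schema); `describe_tool` and `JSON_TYPES` are hypothetical names used only to show the idea.

```python
import inspect
from typing import get_type_hints

# Rough mapping from Python types to JSON-style type names (illustrative only).
JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean", dict: "object"}


def describe_tool(func):
    """Build a small tool description from a function's hints and docstring."""
    hints = get_type_hints(func)
    params = {
        name: JSON_TYPES.get(hints.get(name), "any")
        for name in inspect.signature(func).parameters
    }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": params,
        "returns": JSON_TYPES.get(hints.get("return"), "any"),
    }


def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b


print(describe_tool(add))
```

The description an agent sees is only as good as the hints and docstring it is built from, which is why annotating every tool matters.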

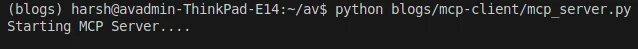

Run the server: Open your terminal and navigate to the directory containing mcp_server.py. Then run:

    python mcp_server.py

The server should start without any warnings. It keeps running so the client can access the tools.


Building the MCP Client

The client connects to the server, sends requests (like asking the agent to perform a calculation and fetch the live weather), and handles the responses. This demonstrates the client side of the MCP client-server setup using LangChain.

Create a Python file named client.py:

- Import the required libraries first

    # client.py
    import asyncio
    import os

    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client
    from langchain_mcp_adapters.tools import load_mcp_tools
    from langgraph.prebuilt import create_react_agent
    from langchain_groq import ChatGroq
    # from langchain_openai import ChatOpenAI  # Uncomment if you use OpenAI instead

- Set up the API key for the LLM (Groq or OpenAI) and initialize the LLM model

    # Set your API key (replace with your actual key or use environment variables)
    GROQ_API_KEY = "YOUR_GROQ_API_KEY"  # Replace with your key
    os.environ["GROQ_API_KEY"] = GROQ_API_KEY

    # OPENAI_API_KEY = "YOUR_OPENAI_API_KEY"
    # os.environ["OPENAI_API_KEY"] = OPENAI_API_KEY

    # Initialize the LLM model
    model = ChatGroq(model="llama3-8b-8192", temperature=0)
    # model = ChatOpenAI(model="gpt-4o-mini", temperature=0)

- Now, define the parameters to start the MCP server process.

    server_params = StdioServerParameters(
        command="python",  # Command to execute
        args=["mcp_server.py"],  # Arguments for the command (our server script)
    )

- Define the asynchronous function that runs the agent interaction

    async def run_agent():
        async with stdio_client(server_params) as (read, write):
            async with ClientSession(read, write) as session:
                await session.initialize()
                print("MCP Session Initialized.")

                tools = await load_mcp_tools(session)
                print(f"Loaded Tools: {[tool.name for tool in tools]}")

                agent = create_react_agent(model, tools)
                print("ReAct Agent Created.")

                print("Invoking agent with query")
                response = await agent.ainvoke({
                    "messages": [("user", "What is (7+9)x17, then give me sine of the output received and then tell me what's the weather in Toronto, Canada?")]
                })
                print("Agent invocation complete.")

                # Return the content of the last message (usually the agent's final answer)
                return response["messages"][-1].content

- Now, run this function and wait for the results in the terminal

    # Standard Python entry point check
    if __name__ == "__main__":
        # Run the asynchronous run_agent function and wait for the result
        print("Starting MCP Client...")
        result = asyncio.run(run_agent())
        print("\nAgent Final Response:")
        print(result)

Explanation:

This client script configures an LLM (using ChatGroq here; remember to set your API key). It defines how to start the server using StdioServerParameters. The run_agent function connects to the server via stdio_client, creates a ClientSession, and initializes it. load_mcp_tools fetches the server’s tools for LangChain. create_react_agent combines the LLM and the tools into an agent that processes a user query. Finally, agent.ainvoke sends the query, letting the agent use the server’s tools as needed to find the answer. This shows a complete MCP client-server example using LangChain.
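The script above returns only the final message. When debugging, it can help to look at the whole message list and see which tools the agent called. The sketch below assumes each message exposes `type`, `content`, and (for AI messages) `tool_calls` entries with `name` and `args` keys, as LangChain message objects do; the helper name `summarize_messages` and the stand-in trace are made up so the example runs without an LLM.

```python
from types import SimpleNamespace


def summarize_messages(messages):
    """Return one readable line per tool call and per non-empty message."""
    lines = []
    for msg in messages:
        role = getattr(msg, "type", "unknown")
        # AI messages may carry tool calls instead of (or before) text content.
        for call in getattr(msg, "tool_calls", None) or []:
            lines.append(f"{role} -> tool call: {call['name']}({call['args']})")
        content = getattr(msg, "content", "")
        if content:
            lines.append(f"{role}: {content}")
    return lines


# Stand-in objects that mimic the shape of a short agent trace.
fake_trace = [
    SimpleNamespace(type="human", content="What is 7 + 9?"),
    SimpleNamespace(type="ai", content="", tool_calls=[{"name": "add", "args": {"a": 7, "b": 9}}]),
    SimpleNamespace(type="tool", content="16"),
    SimpleNamespace(type="ai", content="7 + 9 = 16"),
]

for line in summarize_messages(fake_trace):
    print(line)
```

In the real client you could pass `response["messages"]` to the same helper before returning the final answer.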

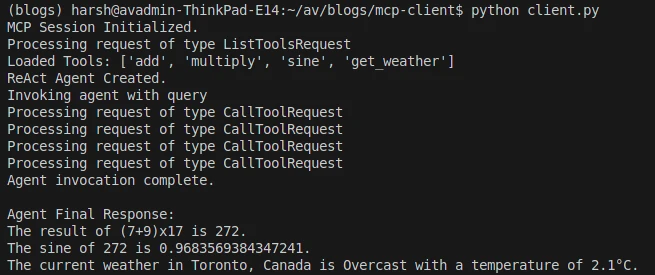

Run the client:

    python client.py

Output:

We can see that the client starts the server process, initializes the connection, loads the tools, invokes the agent, and prints the final answer. The agent calculated that answer by calling the server’s add tool; it also called the weather API to retrieve the live weather data.
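Since LLMs can misreport numbers, it is worth checking the arithmetic part of the answer independently. The short sketch below recomputes the expression from the example query with plain Python; note that `math.sin` interprets its argument in radians, which is also what the server’s sine tool does.

```python
import math

# Recompute the math portion of the example query: (7 + 9) x 17, then its sine.
product = (7 + 9) * 17
sine_value = math.sin(product)  # argument is in radians, matching the server tool

print(f"(7 + 9) x 17 = {product}")
print(f"sin({product}) = {sine_value:.4f}")
```

If the agent’s reply disagrees with these values, the model most likely skipped a tool call or passed the wrong argument.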

Real-World Applications

Building an MCP client and server with LangChain opens up many possibilities for creating sophisticated AI agents. Some practical applications include:

- LLM Independence: By using LangChain, we can now integrate any LLM with MCP. Previously we were

- Data Retrieval: Agents can connect to database servers via MCP to fetch real-time customer data or query internal knowledge bases.

- Document Processing: An agent could use MCP tools to interact with a document management system, allowing it to summarize, extract information, or update documents based on user requests.

- Task Automation: Integrate with various business systems (like CRMs, calendars, or project management tools) via MCP servers to automate routine tasks like scheduling meetings or updating sales records. The MCP client-server architecture supports these complex workflows.
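As a rough illustration of the data retrieval case, the sketch below shows the kind of function that could sit behind an MCP tool. It uses an in-memory SQLite database so it runs on its own; the `customers` table, its columns, and the function names are all hypothetical.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with a tiny, made-up customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, plan TEXT)")
    conn.executemany(
        "INSERT INTO customers (name, plan) VALUES (?, ?)",
        [("Ada", "pro"), ("Linus", "free")],
    )
    conn.commit()
    return conn


def find_customers_by_plan(conn, plan: str) -> list[dict]:
    """Return customers on the given plan as a list of dictionaries."""
    # A parameterized query keeps agent-supplied text out of the SQL string.
    rows = conn.execute(
        "SELECT id, name, plan FROM customers WHERE plan = ? ORDER BY id", (plan,)
    ).fetchall()
    return [{"id": r[0], "name": r[1], "plan": r[2]} for r in rows]


conn = make_demo_db()
print(find_customers_by_plan(conn, "pro"))
```

On a server like the one in this guide, a function of this shape could be registered with the same decorator used for the math tools, with the connection managed by the server rather than passed in by the caller.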

Best Practices

When building your MCP client and server with LangChain, follow good practices for better results:

- Adopt a modular design by creating specific tools for distinct tasks and keeping server logic separate from client logic.

- Implement robust error handling in both server tools and the client agent so the system can handle failures gracefully.

- Prioritize security, especially if the server handles sensitive data, by using MCP’s features like access controls and permission boundaries.

- Provide clear descriptions and docstrings for your MCP tools; this helps the agent understand their purpose and usage.
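One way to approach the error-handling advice is a small decorator that turns exceptions into structured results, so the agent receives a readable error instead of a crashed call. This is a sketch under that assumption, not a feature of MCP or LangChain; `safe_tool` and `divide` are hypothetical names.

```python
import functools


def safe_tool(func):
    """Wrap a tool so failures come back as data instead of raising."""

    @functools.wraps(func)  # keeps the original name and docstring
    def wrapper(*args, **kwargs):
        try:
            return {"ok": True, "result": func(*args, **kwargs)}
        except Exception as exc:
            # Report the error type and message in a form the agent can read.
            return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}

    return wrapper


@safe_tool
def divide(a: float, b: float) -> float:
    """Divide a by b."""
    return a / b


print(divide(6, 3))
print(divide(1, 0))
```

Using `functools.wraps` preserves the docstring, which ties in with the last practice above: the description stays available even after wrapping.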

Common Pitfalls

Be mindful of potential issues when developing your system. Context loss can occur in complex conversations if the agent framework doesn’t manage state properly, leading to errors. Poor resource management in long-running MCP servers might cause memory leaks or performance degradation, so handle connections and file handles carefully. Ensure compatibility between the client and server transport mechanisms, as mismatches (like one using stdio and the other expecting HTTP) will prevent communication. Finally, watch for tool schema mismatches where the server tool’s definition doesn’t align with the client’s expectation, which can block tool execution. Addressing these points strengthens your LangChain-based MCP client-server implementation.
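To catch schema mismatches early, arguments can be checked against a tool’s input schema before the call is made. The sketch below handles only a small subset of JSON Schema (required keys and a few primitive types), and both `validate_arguments` and `ADD_SCHEMA` are hypothetical names; a full validator library would be the better choice in production.

```python
# Maps JSON Schema type names to Python types (small illustrative subset).
PY_TYPES = {"integer": int, "number": (int, float), "string": str, "boolean": bool}


def validate_arguments(schema: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the arguments look fine."""
    problems = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in arguments:
            problems.append(f"missing required argument: {name}")
    for name, value in arguments.items():
        if name not in properties:
            problems.append(f"unexpected argument: {name}")
            continue
        type_name = properties[name].get("type")
        expected = PY_TYPES.get(type_name)
        if expected is None:
            continue  # type not covered by this sketch
        # bool is a subclass of int in Python, so reject it explicitly.
        is_bool_mismatch = isinstance(value, bool) and type_name != "boolean"
        if is_bool_mismatch or not isinstance(value, expected):
            problems.append(
                f"argument {name} should be {type_name}, got {type(value).__name__}"
            )
    return problems


# Assumed schema for the add tool from this guide.
ADD_SCHEMA = {
    "type": "object",
    "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
    "required": ["a", "b"],
}

print(validate_arguments(ADD_SCHEMA, {"a": 7, "b": 9}))
print(validate_arguments(ADD_SCHEMA, {"a": "7"}))
```

Running a check like this on the client side turns a confusing failed tool call into a clear message about which argument is wrong.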

Conclusion

Leveraging the Model Context Protocol with LangChain provides a powerful and standardized way to build advanced AI agents. By creating an MCP client and server with LangChain, you enable your LLMs to interact securely and effectively with external tools and data sources. This guide demonstrated a basic MCP client-server example using LangChain, outlining the core MCP client-server architecture and what MCP server functionality entails. This approach simplifies integration, boosts agent capabilities, and supports reliable operation, paving the way for more intelligent and useful AI applications.

Frequently Asked Questions

A. MCP is an open standard designed by Anthropic. It provides a structured way for Large Language Models (LLMs) to interact with external tools and data sources securely.

A. LangChain provides the framework for building agents, while MCP offers a standardized protocol for tool communication. Combining them simplifies building agents that can reliably use external capabilities.

A. MCP is designed to be transport-agnostic. Common implementations use standard input/output (stdio) for local processes or HTTP-based Server-Sent Events (SSE) for network communication.

A. Yes, MCP is designed with security in mind. It includes features like permission boundaries and connection isolation to ensure secure interactions between clients and servers.

A. Absolutely. LangChain supports many LLM providers. As long as the chosen LLM works with LangChain/LangGraph agent frameworks, it can interact with tools loaded via an MCP client.
