Lately, we've witnessed an exciting shift in how AI systems interact with users: not just answering questions, but reasoning, planning, and taking actions. This transformation is driven by the rise of agentic frameworks like Autogen, LangGraph, and CrewAI. These frameworks enable large language models (LLMs) to act more like autonomous agents, capable of making decisions, calling functions, and collaborating across tasks. Among these, one particularly powerful yet developer-friendly option comes from Microsoft: Semantic Kernel. In this tutorial, we'll explore what makes Semantic Kernel stand out, how it compares to other approaches, and how you can start using it to build your own AI agents.

Learning Objectives

- Understand the core architecture and purpose of Semantic Kernel.

- Learn how to integrate plugins and AI services into the Kernel.

- Explore single-agent and multi-agent system setups using Semantic Kernel.

- Discover how function calling and orchestration work within the framework.

- Gain practical insights into building intelligent agents with Semantic Kernel and Azure OpenAI.

This article was published as a part of the Data Science Blogathon.

What is Semantic Kernel?

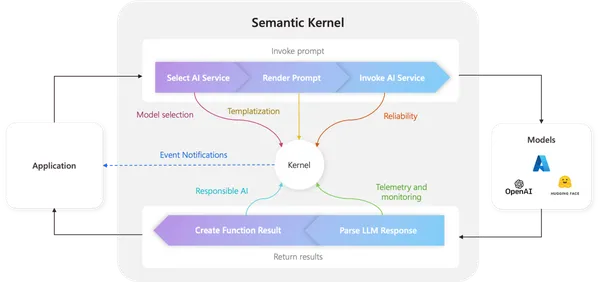

Before we start our journey, let's first understand what the name Semantic Kernel means. Let's break it down:

- Semantic: Refers to the ability to understand and process meaning from natural language.

- Kernel: Refers to the core engine that powers the framework, managing tasks, functions, and interactions between AI models and external tools.

Why is it Called Semantic Kernel?

Microsoft's Semantic Kernel is designed to bridge the gap between LLMs (like GPT) and traditional programming by allowing developers to define functions, plugins, and agents that can work together in a structured way.

It provides a framework where:

- Natural language prompts and AI functions (semantic functions) operate together with traditional code functions.

- AI can reason, plan, and execute tasks using these combined functions.

- Multi-agent collaboration is possible, with different agents performing specific roles.
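
To make the idea of mixing the two kinds of functions concrete, here is a minimal, library-free sketch. It does not use the Semantic Kernel API; `count_words`, `SUMMARY_PROMPT`, and `build_prompt` are hypothetical names chosen for illustration. A native function computes a fact in ordinary code, and a prompt template (the "semantic" part) carries that fact to the model.

```python
from string import Template


# Native function: ordinary, deterministic Python code.
def count_words(text: str) -> int:
    return len(text.split())


# Semantic function: a natural-language prompt with placeholders.
SUMMARY_PROMPT = Template(
    "Summarize the following text in one sentence.\n"
    "The text has $word_count words.\n"
    "Text: $text"
)


def build_prompt(text: str) -> str:
    """Combine the native function's result with the prompt template."""
    return SUMMARY_PROMPT.substitute(word_count=count_words(text), text=text)


prompt = build_prompt("Semantic Kernel connects LLMs with regular code.")
print(prompt)
```

In a real application the rendered prompt would be sent to an LLM; here it is only printed so the hand-off between code and prompt is visible.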

Agentic Framework vs Traditional API Calling

When working with an agentic framework, a common question arises: Can't we achieve the same results using the OpenAI API alone? 🤔 I had the same doubt when I first started exploring this.

Let's take an example: Suppose you're building a Q&A system for company policies, covering HR policy and IT policy. With a traditional API call, you can get good results, but sometimes the responses may lack accuracy or consistency.

An agentic framework, on the other hand, is more robust because it allows you to create specialized agents: one focused on HR policies and another on IT policies. Each agent is optimized for its domain, leading to more reliable answers.

With this example, I hope you now have a clearer understanding of the key difference between an agentic framework and traditional API calling!
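
The HR/IT example can be sketched without any framework. The snippet below is an illustration under stated assumptions: the instruction strings and the `build_messages` helper are hypothetical, and the message layout simply follows the common system/user chat convention. The point is that each specialized agent starts from its own narrow instructions instead of one generic prompt.

```python
# Hypothetical instructions for two specialized agents.
AGENT_INSTRUCTIONS = {
    "hr": "You answer questions about HR policy only (leave, payroll, benefits).",
    "it": "You answer questions about IT policy only (devices, passwords, access).",
}


def build_messages(domain: str, question: str) -> list[dict[str, str]]:
    """Return a chat-style message list for the agent that owns `domain`."""
    if domain not in AGENT_INSTRUCTIONS:
        raise ValueError(f"No agent registered for domain: {domain!r}")
    return [
        {"role": "system", "content": AGENT_INSTRUCTIONS[domain]},
        {"role": "user", "content": question},
    ]


messages = build_messages("it", "How often must I change my password?")
print(messages[0]["content"])
```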

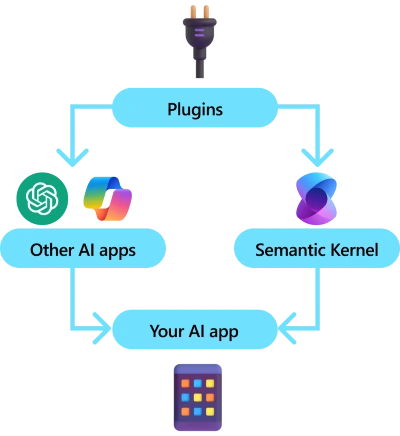

What are Plugins in Semantic Kernel?

Plugins are a key part of Semantic Kernel. If you've used plugins in ChatGPT or Copilot extensions in Microsoft 365, you already have an idea of how they work. Simply put, plugins let you package your existing APIs into reusable tools that an AI can use. This means the AI can go beyond its built-in capabilities.

Behind the scenes, Semantic Kernel uses function calling, a built-in feature of most modern LLMs, to enable planning and API execution. With function calling, an LLM can request a specific function, and Semantic Kernel redirects that request to your code. The results are then returned to the LLM, allowing it to generate a final response.
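
The round trip described above can be simulated with a stubbed model. This is a sketch of the general function-calling pattern, not of Semantic Kernel internals: `fake_model`, `TOOLS`, and the message layout are assumptions made for illustration, and no real LLM is contacted.

```python
import json


def get_weather(location: str) -> str:
    """A plain Python function exposed to the model as a tool."""
    return {"London": "Cloudy, 18C"}.get(location, "Weather data not available.")


TOOLS = {"get_weather": get_weather}


def fake_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM: requests a tool first, then answers with its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"The weather is: {last['content']}"}
    call = {"name": "get_weather", "arguments": json.dumps({"location": "London"})}
    return {"role": "assistant", "content": None, "tool_call": call}


def run(user_message: str) -> str:
    messages = [{"role": "user", "content": user_message}]
    while True:
        reply = fake_model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]  # no tool requested: this is the final answer
        # Redirect the model's request to real code and feed the result back.
        result = TOOLS[call["name"]](**json.loads(call["arguments"]))
        messages.append({"role": "tool", "content": result})


answer = run("What's the weather in London?")
print(answer)
```

The loop is the important part: the framework keeps calling the model until it stops asking for tools and returns plain text.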

Code Implementation

Before running the code, install Semantic Kernel and the other required packages using the following commands:

pip install semantic-kernel
pip install openai
pip install pydantic

Here's a simple Python example using Semantic Kernel to demonstrate how a plugin works. This example defines a plugin that an AI assistant can use to fetch weather updates.

import asyncio

from semantic_kernel import Kernel
from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion
from semantic_kernel.functions import kernel_function


# Step 1: Define a Simple Plugin (Function) for Weather Updates
class WeatherPlugin:
    @kernel_function(name="weather_plugin", description="Get the weather for a location.")
    def weather_plugin(self, location: str) -> str:
        # Simulating a weather API response
        weather_data = {
            "New York": "Sunny, 25°C",
            "London": "Cloudy, 18°C",
            "Tokyo": "Rainy, 22°C",
        }
        return weather_data.get(location, "Weather data not available.")


# Step 2: Initialize Semantic Kernel with Azure OpenAI
kernel = Kernel()
kernel.add_service(
    AzureChatCompletion(
        service_id="azure-openai-chat",
        api_key="your-azure-api-key",
        endpoint="https://your-resource.openai.azure.com/",  # Replace with your Azure OpenAI endpoint
        deployment_name="your-deployment-name",  # Replace with your Azure OpenAI deployment
    )
)

# Step 3: Register the Plugin (Function) in Semantic Kernel
kernel.add_plugin(WeatherPlugin(), plugin_name="WeatherPlugin")


# Step 4: Calling the Plugin through Semantic Kernel
async def main() -> None:
    location = "New York"
    # kernel.invoke is a coroutine, so it has to be awaited
    response = await kernel.invoke(
        plugin_name="WeatherPlugin", function_name="weather_plugin", location=location
    )
    print(f"Weather in {location}: {response}")


asyncio.run(main())

How This Demonstrates a Plugin in Semantic Kernel

- Defines a Plugin – The weather_plugin function simulates fetching weather data.

- Integrates with Semantic Kernel – The function is added as a plugin using kernel.add_plugin().

- Allows AI to Use It – The AI can now call this function dynamically.

This shows how plugins extend an AI's abilities, enabling it to perform tasks beyond standard text generation.
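
For a model to call a function dynamically, it first needs a description of that function. The sketch below shows one way such a description could be derived from a Python function using only the standard library. The schema layout and the `describe_function` helper are assumptions for illustration, not Semantic Kernel's internal format.

```python
import inspect


def get_weather(location: str, unit: str = "celsius") -> str:
    """Return the current weather for a location."""
    return f"Sunny in {location} ({unit})"


def describe_function(func) -> dict:
    """Build a simple tool description from a function's signature and docstring."""
    signature = inspect.signature(func)
    parameters = {}
    required = []
    for name, param in signature.parameters.items():
        annotation = param.annotation
        has_type = annotation is not inspect.Parameter.empty
        parameters[name] = annotation.__name__ if has_type else "any"
        # Parameters without a default value must be supplied by the caller.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": parameters,
        "required": required,
    }


schema = describe_function(get_weather)
print(schema)
```

A description like this is what lets the model decide when a tool is relevant and which arguments to pass.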

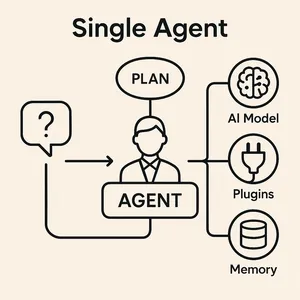

What is a Single-Agent System?

In this section, we'll understand what a single agent is and also look at its code.

A single agent is basically an entity that handles user queries on its own. It takes care of everything without needing multiple agents or an orchestrator (we'll cover that in the multi-agent section). The single agent is responsible for processing requests, fetching relevant information, and generating responses, all in one place.

import asyncio

from semantic_kernel import Kernel
from semantic_kernel.agents import ChatCompletionAgent
from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion
from semantic_kernel.contents import ChatHistory

# Initialize Kernel
kernel = Kernel()
kernel.add_service(AzureChatCompletion(service_id="agent1", api_key="YOUR_API_KEY", endpoint="", deployment_name="MODEL_NAME"))

# Define the Agent
AGENT_NAME = "Agent1"
AGENT_INSTRUCTIONS = (
    "You are a highly capable AI agent operating solo, much like J.A.R.V.I.S. from Iron Man. "
    "Your task is to repeat the user's message while introducing yourself as J.A.R.V.I.S. in a confident and professional manner. "
    "Always maintain a composed and intelligent tone in your responses."
)
agent = ChatCompletionAgent(service_id="agent1", kernel=kernel, name=AGENT_NAME, instructions=AGENT_INSTRUCTIONS)


# `async for` must run inside a coroutine when executed as a script
async def main() -> dict:
    chat_history = ChatHistory()
    chat_history.add_user_message("How are you doing?")
    response_text = ""
    async for content in agent.invoke(chat_history):
        chat_history.add_message(content)
        response_text = content.content  # Store the last response
    return {"user_input": "How are you doing?", "agent_response": response_text}


print(asyncio.run(main()))

Output:

{'user_input': 'How are you doing?',

'agent_response': 'Greetings, I am J.A.R.V.I.S. I am here to replicate your message: "How are you doing?" Please feel free to ask anything else you might need.'}

Things to Know

- kernel = Kernel() -> Creates the Semantic Kernel object.

- kernel.add_service() -> Adds and configures an existing model (such as OpenAI, Azure OpenAI, or a local model) in the kernel. I'm using Azure OpenAI with the GPT-4o model. You need to provide your own endpoint information.

- agent = ChatCompletionAgent(service_id="agent1", kernel=kernel, name=AGENT_NAME, instructions=AGENT_INSTRUCTIONS) -> Declares that we are going to use ChatCompletionAgent, which works well for Q&A.

- chat_history = ChatHistory() -> Creates a new chat history object to store the conversation. This keeps track of past messages between the user and the agent.

- chat_history.add_user_message("How are you doing?") -> Adds a user message ("How are you doing?") to the chat history. The agent will use this history to generate a relevant response.

- agent.invoke(chat_history) -> Passes the history to the agent. The agent processes the conversation and generates the response.
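
Conceptually, a chat history is an ordered list of role-tagged messages that is handed to the agent on every turn. Here is a library-free sketch of that idea; `SimpleChatHistory` and `echo_agent` are hypothetical stand-ins and not the Semantic Kernel `ChatHistory` class or a real agent.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleChatHistory:
    messages: list[dict[str, str]] = field(default_factory=list)

    def add_user_message(self, content: str) -> None:
        self.messages.append({"role": "user", "content": content})

    def add_assistant_message(self, content: str) -> None:
        self.messages.append({"role": "assistant", "content": content})


def echo_agent(history: SimpleChatHistory) -> str:
    """Toy agent: replies using the most recent user message in the history."""
    last_user = next(m for m in reversed(history.messages) if m["role"] == "user")
    return f"You said: {last_user['content']}"


history = SimpleChatHistory()
history.add_user_message("How are you doing?")
history.add_assistant_message(echo_agent(history))
history.add_user_message("And what can you do?")
history.add_assistant_message(echo_agent(history))

for message in history.messages:
    print(f"{message['role']}: {message['content']}")
```

Because every reply is appended back to the same object, later turns can see everything that was said earlier.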

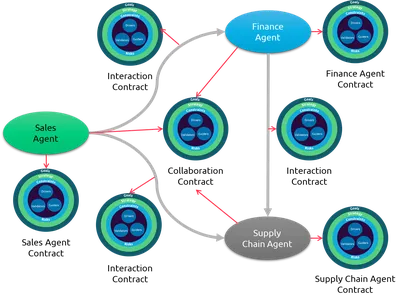

What is a Multi-Agent System?

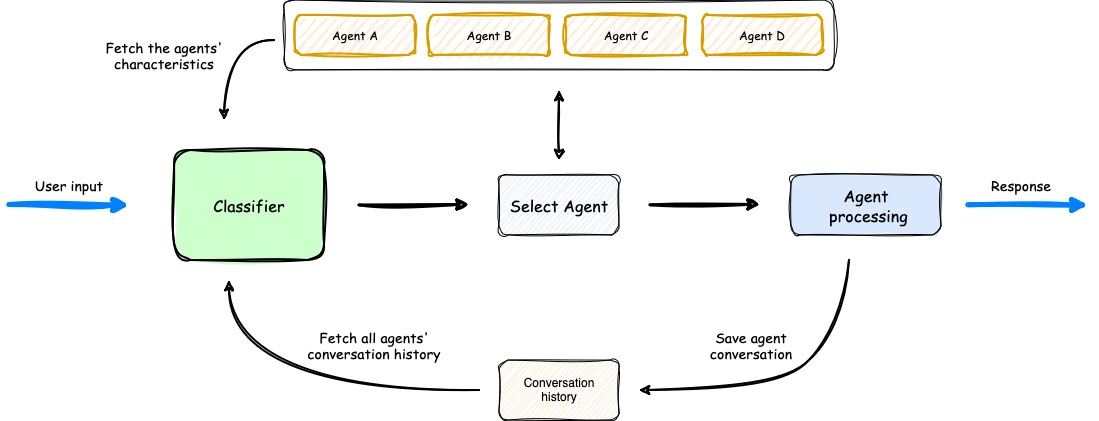

In multi-agent systems, there is more than one agent, often more than two. Here, we usually use an orchestrator agent whose responsibility is to decide which available agent should handle a given request. The need for an orchestrator depends on your use case. First, let me explain where an orchestrator would be used.

Suppose one agent answers user queries related to bank data while another agent handles medical data. In this case, you've created two agents, but to determine which one should be invoked, the orchestrator comes into play. The orchestrator decides which agent should handle a given request or query. We provide a set of instructions to the orchestrator, defining its responsibilities and decision-making process.

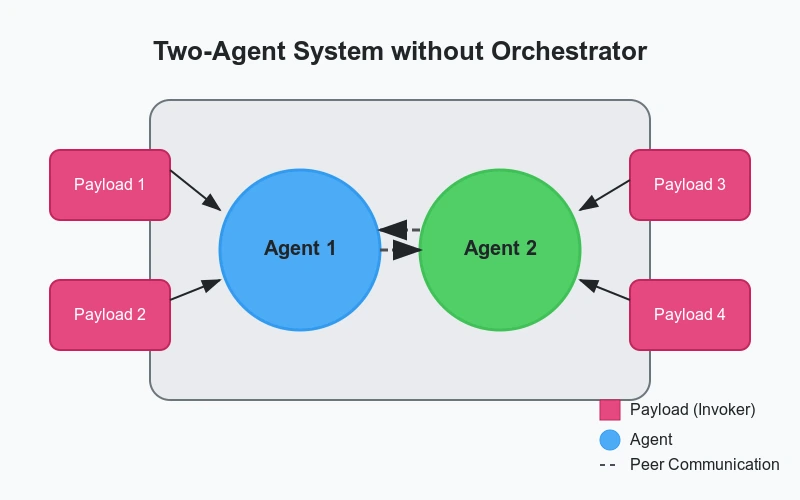

Now, let's look at a case where an orchestrator isn't needed. Suppose you've created an API that performs different operations based on the payload data. For example, if the payload contains "Health", you can directly invoke the Health Agent, and similarly, for "Bank", you invoke the Bank Agent.

import asyncio

from semantic_kernel import Kernel
from semantic_kernel.agents import ChatCompletionAgent
from semantic_kernel.connectors.ai.open_ai import AzureChatCompletion
from semantic_kernel.contents import ChatHistory

# Initialize Kernel
kernel = Kernel()

# Add multiple services for different agents
kernel.add_service(AzureChatCompletion(service_id="banking_agent", api_key="YOUR_API_KEY", endpoint="", deployment_name="MODEL_NAME"))
kernel.add_service(AzureChatCompletion(service_id="healthcare_agent", api_key="YOUR_API_KEY", endpoint="", deployment_name="MODEL_NAME"))
kernel.add_service(AzureChatCompletion(service_id="classifier_agent", api_key="YOUR_API_KEY", endpoint="", deployment_name="MODEL_NAME"))

# Define Orchestrator Agent
# The service_id must match a service registered above ("classifier_agent")
CLASSIFIER_AGENT = ChatCompletionAgent(
    service_id="classifier_agent", kernel=kernel, name="OrchestratorAgent",
    instructions="You are an AI responsible for classifying user queries. Identify whether the query belongs to banking or healthcare. Respond with either 'banking' or 'healthcare'."
)

# Define Domain-Specific Agents
BANKING_AGENT = ChatCompletionAgent(
    service_id="banking_agent", kernel=kernel, name="BankingAgent",
    instructions="You are an AI specializing in banking queries. Answer user queries related to finance and banking."
)

HEALTHCARE_AGENT = ChatCompletionAgent(
    service_id="healthcare_agent", kernel=kernel, name="HealthcareAgent",
    instructions="You are an AI specializing in healthcare queries. Answer user queries related to medical and health topics."
)


# Function to Determine the Appropriate Agent
async def identify_agent(user_input: str):
    chat_history = ChatHistory()
    chat_history.add_user_message(user_input)
    async for content in CLASSIFIER_AGENT.invoke(chat_history):
        classification = content.content.lower()
        if "banking" in classification:
            return BANKING_AGENT
        elif "healthcare" in classification:
            return HEALTHCARE_AGENT
    return None


# Function to Handle User Query
async def handle_query(user_input: str):
    selected_agent = await identify_agent(user_input)
    if not selected_agent:
        return {"error": "No suitable agent found for the query."}
    chat_history = ChatHistory()
    chat_history.add_user_message(user_input)
    response_text = ""
    async for content in selected_agent.invoke(chat_history):
        chat_history.add_message(content)
        response_text = content.content
    return {"user_input": user_input, "agent_response": response_text}


# Example Usage
user_query = "What are the best practices for securing a bank account?"
response = asyncio.run(handle_query(user_query))
print(response)

Here, the flow starts when the user submits a query, which goes to the orchestrator responsible for classifying the query and finding the appropriate agent. Once the agent is identified, that agent is invoked, the query is processed, and the response is generated.
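
Because the orchestrator replies in free text, the routing step benefits from a little defensive parsing. Below is a minimal sketch that assumes the two labels from the orchestrator's instructions; `parse_label` is a hypothetical helper, and treating a reply that mentions both labels as ambiguous is a design choice rather than something the framework prescribes.

```python
import re
from typing import Optional

KNOWN_LABELS = ("banking", "healthcare")


def parse_label(reply: str) -> Optional[str]:
    """Return the single known label found in the reply, or None if unclear."""
    words = set(re.findall(r"[a-z]+", reply.lower()))
    matches = [label for label in KNOWN_LABELS if label in words]
    # Exactly one label must be present; zero or several means "ambiguous".
    return matches[0] if len(matches) == 1 else None


print(parse_label("Banking."))
print(parse_label("This is about banking and healthcare."))
print(parse_label("I am not sure."))
```

Returning `None` for unclear replies lets the caller fall back to an error message or a clarifying question instead of guessing an agent.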

Output when the query is related to banking:

{
    "user_input": "What are the best practices for securing a bank account?",
    "agent_response": "To secure your bank account, use strong passwords, enable two-factor authentication, regularly monitor transactions, and avoid sharing sensitive information online."
}

Output when the query is related to health:

{
    "user_input": "What are the best ways to maintain a healthy lifestyle?",
    "agent_response": "To maintain a healthy lifestyle, eat a balanced diet, exercise regularly, get enough sleep, stay hydrated, and manage stress effectively."
}
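
The orchestrator-free case mentioned earlier, where the payload itself names the domain, could be sketched as a plain lookup table. The `domain` and `query` payload keys and the two stub agents are assumptions for illustration; in a real system each handler would invoke the corresponding agent.

```python
from typing import Callable


def health_agent(query: str) -> str:
    return f"[health agent] handling: {query}"


def bank_agent(query: str) -> str:
    return f"[bank agent] handling: {query}"


# The payload's "domain" field selects the agent; no classifier call is needed.
ROUTES: dict[str, Callable[[str], str]] = {
    "health": health_agent,
    "bank": bank_agent,
}


def dispatch(payload: dict) -> str:
    domain = str(payload.get("domain", "")).strip().lower()
    handler = ROUTES.get(domain)
    if handler is None:
        return f"No agent registered for domain {domain!r}"
    return handler(payload.get("query", ""))


print(dispatch({"domain": "Health", "query": "How much sleep do I need?"}))
print(dispatch({"domain": "Bank", "query": "How do I block a lost card?"}))
```

This saves one LLM call per request, at the cost of requiring the caller to know the domain up front.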

Conclusion

In this article, we explored how Semantic Kernel enhances AI capabilities through an agentic framework. We discussed the role of plugins, compared the agentic framework with traditional API calling, outlined the differences between single-agent and multi-agent systems, and examined how they streamline complex decision-making. As AI continues to evolve, leveraging Semantic Kernel's agentic approach can lead to more efficient and context-aware applications.

Key Takeaways

- Semantic Kernel – It's an agentic framework that enhances AI models by enabling them to plan, reason, and make decisions more effectively.

- Agentic Framework vs Traditional API – The agentic framework is more suitable when working across multiple domains, such as healthcare and banking, whereas a traditional API is preferable when handling a single domain without the need for multi-agent interactions.

- Plugins – They allow LLMs to execute specific tasks by integrating external code, such as calling an API, retrieving data from a database like CosmosDB, or performing other predefined actions.

- Single-Agent vs Multi-Agent – A single-agent system operates without an orchestrator, handling tasks independently. In a multi-agent system, either an orchestrator manages multiple agents, or the agents collaborate on tasks without an orchestrator.

You can find the code on my GitHub.

If you have any doubts, feel free to ask in the comments. Connect with me on LinkedIn.

Frequently Asked Questions

A1. Semantic Kernel is a Microsoft framework that combines natural language understanding with traditional programming to build intelligent AI agents.

A2. "Semantic" refers to language understanding, and "Kernel" represents the core engine that manages AI functions and tasks.

A3. Unlike plain API calls, Semantic Kernel supports agent-based reasoning, task planning, and multi-function execution using LLMs.

A4. Plugins are reusable tools that wrap existing APIs, allowing the AI to extend its abilities and interact with external systems.

A5. It's a setup where one AI agent handles all tasks, without requiring orchestration or multiple agents.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.
