LangChain and LlamaIndex are robust frameworks built for creating applications with large language models. While both excel in their own right, each offers distinct strengths and focuses, making them suitable for different NLP application needs. In this blog we compare LangChain and LlamaIndex to understand when to use which framework.

Learning Objectives

- Differentiate between LangChain and LlamaIndex in terms of their design, functionality, and application focus.

- Recognize the appropriate use cases for each framework (e.g., LangChain for chatbots, LlamaIndex for data retrieval).

- Gain an understanding of the key components of both frameworks, including indexing, retrieval algorithms, workflows, and context retention.

- Assess the performance and lifecycle management tools available in each framework, such as LangSmith and debugging in LlamaIndex.

- Select the right framework or combination of frameworks for specific project requirements.

This article was published as a part of the Data Science Blogathon.

What is LangChain?

You can think of LangChain as a framework rather than just a tool. It provides a wide range of tools out of the box for interacting with large language models (LLMs). A key feature of LangChain is the use of chains, which link components together so that the output of one becomes the input of the next. For example, you could use a PromptTemplate and an LLMChain to create a prompt and query an LLM. This modular structure makes it easy to combine components flexibly for complex tasks.
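To make the idea of chaining concrete, here is a minimal sketch in plain Python. It is not LangChain's API: `Chain`, `format_prompt`, `fake_llm`, and `parse_output` are hypothetical names, and the "model" is a stub that only echoes its prompt. It shows the pattern only, where each step's output becomes the next step's input.

```python
from typing import Any, Callable


class Chain:
    """Hypothetical helper: runs steps in order, feeding each output to the next."""

    def __init__(self, *steps: Callable[[Any], Any]) -> None:
        self.steps = steps

    def invoke(self, value: Any) -> Any:
        for step in self.steps:
            value = step(value)
        return value


def format_prompt(inputs: dict) -> str:
    # Plays the role of a prompt template: fills variables into a fixed string.
    return "Translate the following from English into {language}: {text}".format(**inputs)


def fake_llm(prompt: str) -> dict:
    # Stand-in for a real model call (assumption); it only echoes the prompt.
    return {"content": f"[model reply to: {prompt}]"}


def parse_output(message: dict) -> str:
    # Plays the role of an output parser: pulls the text out of the reply.
    return message["content"]


chain = Chain(format_prompt, fake_llm, parse_output)
result = chain.invoke({"language": "Italian", "text": "hi!"})
print(result)
```

Swapping any single step (a different template, a real model client, a stricter parser) leaves the other steps untouched, which is the practical benefit of the modular structure described above.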

LangChain simplifies every stage of the LLM application lifecycle:

- Development: Build your applications using LangChain’s open-source building blocks, components, and third-party integrations. Use LangGraph to build stateful agents with first-class streaming and human-in-the-loop support.

- Productionization: Use LangSmith to inspect, monitor, and evaluate your chains, so that you can continuously optimize and deploy with confidence.

- Deployment: Turn your LangGraph applications into production-ready APIs and Assistants with LangGraph Cloud.

LangChain Ecosystem

- langchain-core: Base abstractions and LangChain Expression Language.

- Integration packages (e.g. langchain-openai, langchain-anthropic, etc.): Important integrations have been split into lightweight packages that are co-maintained by the LangChain team and the integration developers.

- langchain: Chains, agents, and retrieval strategies that make up an application’s cognitive architecture.

- langchain-community: Third-party integrations that are community maintained.

- LangGraph: Build robust and stateful multi-actor applications with LLMs by modeling steps as edges and nodes in a graph. Integrates smoothly with LangChain, but can be used without it.

- LangGraph Platform: Deploy LLM applications built with LangGraph to production.

- LangSmith: A developer platform that lets you debug, test, evaluate, and monitor LLM applications.
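The LangGraph entry above describes modeling steps as nodes and edges in a graph. The sketch below illustrates that idea in plain Python under stated assumptions: `TinyGraph` and its methods are hypothetical and are not LangGraph's actual API. Nodes are functions that return state updates, and each edge is a small router that picks the next node (or stops).

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]


class TinyGraph:
    """Hypothetical mini state graph; an illustration, not LangGraph's API."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.edges: Dict[str, Callable[[State], Optional[str]]] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_edge(self, source: str, router: Callable[[State], Optional[str]]) -> None:
        # The router inspects the state and returns the next node name, or None to stop.
        self.edges[source] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current: Optional[str] = start
        for _ in range(max_steps):
            if current is None:
                break
            # Merge the node's updates into the shared state.
            state = {**state, **self.nodes[current](state)}
            router = self.edges.get(current)
            current = router(state) if router else None
        return state


def draft(state: State) -> State:
    attempts = int(state["attempts"]) + 1
    return {"attempts": attempts, "text": f"draft v{attempts}"}


def review(state: State) -> State:
    # Toy rule (assumption): approve once there have been two drafts.
    return {"approved": int(state["attempts"]) >= 2}


graph = TinyGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", lambda s: "review")
graph.add_edge("review", lambda s: None if s["approved"] else "draft")

final_state = graph.run("draft", {"attempts": 0, "approved": False})
print(final_state)
```

The loop from `review` back to `draft` is the part a linear chain cannot express, which is why a graph is a natural fit for stateful, multi-step agents.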

Building Your First LLM Application with LangChain and OpenAI

Let’s build a simple LLM application using LangChain and OpenAI and learn how it works.

Let’s start by installing the packages:

!pip install langchain-core "langgraph>0.2.27"

!pip install -qU langchain-openai

Set up OpenAI as the LLM:

import getpass

import os

from langchain_openai import ChatOpenAI

os.environ["OPENAI_API_KEY"] = getpass.getpass()

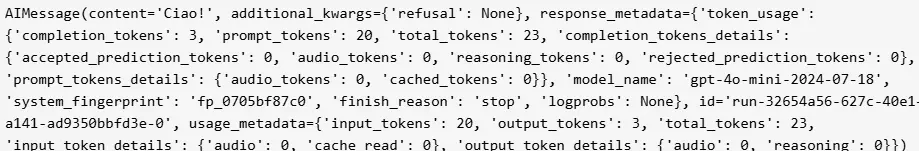

model = ChatOpenAI(model="gpt-4o-mini")

To simply call the model, we can pass a list of messages to the .invoke method.

from langchain_core.messages import HumanMessage, SystemMessage

messages = [

SystemMessage("Translate the following from English into Italian"),

HumanMessage("hi!"),

]

model.invoke(messages)

Now let’s create a prompt template. Prompt templates are a LangChain concept designed to turn raw user input into model-ready messages: they take in raw user input and return data (a prompt) that is ready to pass into a language model.

from langchain_core.prompts import ChatPromptTemplate

system_template = "Translate the next from English into {language}"

prompt_template = ChatPromptTemplate.from_messages(

[("system", system_template), ("user", "{text}")]

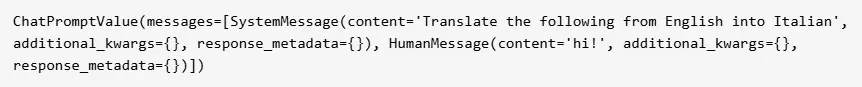

)Right here you possibly can see that it takes two variables, language and textual content. We format the language parameter into the system message, and the person textual content right into a person message. The enter to this immediate template is a dictionary. We are able to mess around with this immediate template by itself.

immediate = prompt_template.invoke({"language": "Italian", "textual content": "hello!"})

immediate

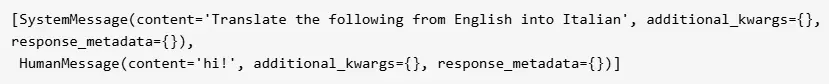

We can see that it returns a ChatPromptValue that consists of two messages. If we want to access the messages directly, we do:

prompt.to_messages()
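To see what the template step amounts to conceptually, here is a small plain-Python sketch. It does not reproduce LangChain's implementation: `template_variables` and `render_messages` are hypothetical helpers that take (role, template) pairs plus a dictionary of values and return filled-in (role, content) messages.

```python
import string
from typing import Dict, List, Tuple

Message = Tuple[str, str]  # (role, content)


def template_variables(templates: List[Message]) -> List[str]:
    """Collect the {placeholders} used across all message templates, in order."""
    found: List[str] = []
    for _, template in templates:
        for _, field, _, _ in string.Formatter().parse(template):
            if field and field not in found:
                found.append(field)
    return found


def render_messages(templates: List[Message], values: Dict[str, str]) -> List[Message]:
    """Fill every template with the given values, failing early on missing ones."""
    missing = [name for name in template_variables(templates) if name not in values]
    if missing:
        raise KeyError(f"missing template variables: {missing}")
    return [(role, template.format(**values)) for role, template in templates]


templates = [
    ("system", "Translate the following from English into {language}"),
    ("user", "{text}"),
]

print(template_variables(templates))
messages = render_messages(templates, {"language": "Italian", "text": "hi!"})
for role, content in messages:
    print(f"{role}: {content}")
```

Checking for missing variables before formatting gives a clearer error than a failure deep inside string formatting, which matters once templates have many placeholders.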

Finally, we can invoke the chat model on the formatted prompt:

response = model.invoke(prompt)

print(response.content)

LangChain is highly versatile and adaptable, offering a wide variety of tools for different NLP applications, from simple queries to complex workflows. You can read more about LangChain components in the official LangChain documentation.

What is LlamaIndex?

LlamaIndex (formerly known as GPT Index) is a framework for building context-augmented generative AI applications with LLMs, including agents and workflows. Its primary focus is on ingesting, structuring, and accessing private or domain-specific data. LlamaIndex excels at managing large datasets, enabling fast and precise information retrieval, making it ideal for search and retrieval tasks. It offers a set of tools that make it easy to integrate custom data into LLMs, especially for projects requiring advanced search capabilities.

LlamaIndex is highly effective for data indexing and querying. Based on my experience with LlamaIndex, it is an ideal solution for working with vector embeddings and RAG.

LlamaIndex imposes no restriction on how you use LLMs. You can use LLMs as auto-complete, chatbots, agents, and more. It just makes using them easier.

It provides tools like:

- Data connectors ingest your existing data from its native source and format. These could be APIs, PDFs, SQL, and (much) more.

- Data indexes structure your data in intermediate representations that are easy and performant for LLMs to consume.

- Engines provide natural language access to your data. For example:

  - Query engines are powerful interfaces for question-answering (e.g. a RAG flow).

  - Chat engines are conversational interfaces for multi-message, “back and forth” interactions with your data.

- Agents are LLM-powered knowledge workers augmented by tools, from simple helper functions to API integrations and more.

- Observability/Evaluation integrations that enable you to rigorously experiment, evaluate, and monitor your app in a virtuous cycle.

- Workflows allow you to combine all of the above into an event-driven system far more flexible than other, graph-based approaches.
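The last item describes workflows as event-driven. As a rough illustration of that style (and only that: `Event` and `TinyWorkflow` are hypothetical names, not LlamaIndex's Workflow API), the sketch below registers one handler per event kind. Each handler may emit a follow-up event, and the run ends when an event has no handler.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, Optional


@dataclass
class Event:
    kind: str
    payload: str


class TinyWorkflow:
    """Hypothetical event loop; an illustration, not LlamaIndex's API."""

    def __init__(self) -> None:
        self.handlers: Dict[str, Callable[[Event], Optional[Event]]] = {}

    def on(self, kind: str, handler: Callable[[Event], Optional[Event]]) -> None:
        self.handlers[kind] = handler

    def run(self, first: Event) -> Event:
        queue: Deque[Event] = deque([first])
        last = first
        while queue:
            event = queue.popleft()
            last = event
            handler = self.handlers.get(event.kind)
            if handler is None:
                continue  # No handler registered: treat the event as terminal.
            follow_up = handler(event)
            if follow_up is not None:
                queue.append(follow_up)
        return last


def on_query(event: Event) -> Event:
    # Stand-in for a retrieval step (assumption): wraps the question as "context".
    return Event("retrieved", f"context for: {event.payload}")


def on_retrieved(event: Event) -> Event:
    # Stand-in for a generation step (assumption): no real LLM is called.
    return Event("answer", f"answer based on {event.payload}")


workflow = TinyWorkflow()
workflow.on("query", on_query)
workflow.on("retrieved", on_retrieved)

final_event = workflow.run(Event("query", "What is RAG?"))
print(final_event)
```

Because steps are wired by event kind rather than by explicit edges, adding a new step means registering one more handler instead of rewiring a graph.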

LlamaIndex Ecosystem

Just like LangChain, LlamaIndex has its own ecosystem.

- llama_deploy: Deploy your agentic workflows as production microservices

- LlamaHub: A large (and growing!) collection of custom data connectors

- SEC Insights: A LlamaIndex-powered application for financial research

- create-llama: A CLI tool to quickly scaffold LlamaIndex projects

Building Your First LLM Application with LlamaIndex and OpenAI

Let’s build a simple LLM application using LlamaIndex and OpenAI and learn how it works.

Let’s install the library:

!pip install llama-index

Set up the OpenAI key:

LlamaIndex uses OpenAI’s gpt-3.5-turbo by default. Make sure your API key is available to your code by setting it as an environment variable. On macOS and Linux, this is the command:

export OPENAI_API_KEY=XXXXX

and on Windows it is:

set OPENAI_API_KEY=XXXXX

This example uses the text of Paul Graham’s essay, “What I Worked On”.

Download the essay text and save it in a folder called data.
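Before running the example, it can help to confirm that the key set in the step above is actually visible to Python. The helper below is a small sketch: `get_openai_key` is a hypothetical name, and the optional `env` argument exists only so the check can be tried without touching the real environment.

```python
import os
from typing import Mapping, Optional


def get_openai_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the OPENAI_API_KEY value, or raise a clear error if it is absent."""
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; export it before running the example."
        )
    return key


# Demonstration with a fake environment so nothing depends on the real one.
print(get_openai_key({"OPENAI_API_KEY": "XXXXX"}))
```

Calling `get_openai_key()` with no argument reads the real environment, so a missing key fails right away with a readable message instead of later inside a request.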

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

documents = SimpleDirectoryReader("data").load_data()

index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()

response = query_engine.query("What is this essay all about?")

print(response)

LlamaIndex abstracts the query process, but in essence it compares the query against the vectorized data (the index), retrieves the most relevant pieces of information, and provides them as context to the LLM.
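The retrieval step described above can be sketched in plain Python. This is a toy under stated assumptions, not LlamaIndex's implementation: the "embedding" is just a word-count vector (real systems use a learned embedding model), the three chunks are made-up sample sentences, and `embed`, `cosine`, `build_index`, `retrieve`, and `build_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (assumption, for illustration)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_index(chunks: List[str]) -> List[Tuple[str, Counter]]:
    """Pair each text chunk with its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index: List[Tuple[str, Counter]], query: str, top_k: int = 2) -> List[str]:
    """Return the top_k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


def build_prompt(query: str, context: List[str]) -> str:
    """Assemble the text that would be sent to the LLM."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


# Made-up sample chunks, standing in for pieces of a loaded document.
chunks = [
    "The author wrote short stories and programmed on an IBM 1401 in school.",
    "Later the author studied painting at an art school in Florence.",
    "The author co-founded a startup that built online stores.",
]
index = build_index(chunks)
query = "What did the author study in Florence?"
top_chunks = retrieve(index, query, top_k=1)
print(build_prompt(query, top_chunks))
```

Only the retrieved chunks reach the model, which is how a large corpus can be queried while the prompt stays small.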

Comparative Analysis: LangChain vs LlamaIndex

LangChain and LlamaIndex cater to different strengths and use cases in the domain of NLP applications powered by large language models (LLMs). Here’s a detailed comparison:

| Feature | LlamaIndex | LangChain |
|---|---|---|
| Data Indexing | Converts diverse data types (e.g., unstructured text, database records) into semantic embeddings. Optimized for creating searchable vector indexes. | Enables modular and customizable data indexing. Uses chains for complex operations, integrating multiple tools and LLM calls. |
| Retrieval Algorithms | Specializes in ranking documents based on semantic similarity. Excels in efficient and accurate query performance. | Combines retrieval algorithms with LLMs to generate context-aware responses. Ideal for interactive applications requiring dynamic information retrieval. |
| Customization | Limited customization, tailored to indexing and retrieval tasks. Focused on speed and accuracy within its specialized domain. | Highly customizable for diverse applications, from chatbots to workflow automation. Supports intricate workflows and tailored outputs. |
| Context Retention | Basic capabilities for retaining query context. Suitable for straightforward search and retrieval tasks. | Advanced context retention for maintaining coherent, long-term interactions. Essential for chatbots and customer support applications. |
| Use Cases | Best for internal search systems, knowledge management, and enterprise solutions needing precise information retrieval. | Ideal for interactive applications like customer support, content generation, and complex NLP tasks. |
| Performance | Optimized for quick and accurate data retrieval. Handles large datasets efficiently. | Handles complex workflows and integrates diverse tools seamlessly. Balances performance with sophisticated task requirements. |
| Lifecycle Management | Offers debugging and monitoring tools for tracking performance and reliability. Ensures smooth application lifecycle management. | Provides the LangSmith evaluation suite for testing, debugging, and optimization. Ensures robust performance under real-world conditions. |

Both frameworks offer powerful capabilities, and choosing between them should depend on your project’s specific needs and goals. In some cases, combining the strengths of both LlamaIndex and LangChain might provide the best results.
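As a rough picture of such a combination, the sketch below pairs a retrieval step (the part LlamaIndex specializes in) with bounded conversation history (the kind of context retention attributed to LangChain in the table). Everything here is hypothetical glue code in plain Python: `ChatSession`, `keyword_retriever`, and `fake_llm` are illustrative names, the documents are made up, and no real model is called.

```python
import re
from collections import deque
from typing import Callable, Deque, List, Tuple

DOCS = [
    "LlamaIndex builds vector indexes over documents.",
    "LangChain composes prompts, models, and memory.",
]


def keyword_retriever(question: str) -> List[str]:
    # Stand-in retriever (assumption): returns documents sharing a word with the question.
    words = set(re.findall(r"[a-z]+", question.lower()))
    return [doc for doc in DOCS if words & set(re.findall(r"[a-z]+", doc.lower()))]


def fake_llm(prompt: str) -> str:
    # Stand-in model (assumption): echoes the last line of the prompt.
    return f"(stub answer to: {prompt.splitlines()[-1]})"


class ChatSession:
    """Hypothetical glue: a retriever callable plus a bounded chat history."""

    def __init__(
        self,
        retriever: Callable[[str], List[str]],
        llm: Callable[[str], str],
        max_turns: int = 3,
    ) -> None:
        self.retriever = retriever
        self.llm = llm
        # Only the most recent max_turns exchanges are kept.
        self.history: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def build_prompt(self, question: str) -> str:
        context = "\n".join(self.retriever(question))
        past = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.history)
        return f"Context:\n{context}\n\nHistory:\n{past}\n\nUser: {question}"

    def ask(self, question: str) -> str:
        answer = self.llm(self.build_prompt(question))
        self.history.append((question, answer))
        return answer


session = ChatSession(keyword_retriever, fake_llm, max_turns=2)
print(session.ask("What does LlamaIndex do?"))
print(session.build_prompt("How does LangChain help?"))
```

Keeping the retriever and the model as plain callables means either side can be replaced by a real framework component without changing the session logic.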

Conclusion

LangChain and LlamaIndex are both powerful frameworks but cater to different needs. LangChain is highly modular, designed to handle complex workflows involving chains, prompts, models, memory, and agents. It excels in applications that require intricate context retention and interaction management, such as chatbots, customer support systems, and content generation tools. Its integration with tools like LangSmith for evaluation and LangServe for deployment enhances the development and optimization lifecycle, making it ideal for dynamic, long-term applications.

LlamaIndex, on the other hand, specializes in data retrieval and search tasks. It efficiently converts large datasets into semantic embeddings for quick and accurate retrieval, making it an excellent choice for RAG-based applications, knowledge management, and enterprise solutions. LlamaHub further extends its functionality by offering data loaders for integrating diverse data sources.

Ultimately, choose LangChain if you need a flexible, context-aware framework for complex workflows and interaction-heavy applications, while LlamaIndex is best suited for systems focused on fast, precise information retrieval from large datasets.

Key Takeaways

- LangChain excels at creating modular and context-aware workflows for interactive applications like chatbots and customer support systems.

- LlamaIndex specializes in efficient data indexing and retrieval, ideal for RAG-based systems and large dataset management.

- LangChain’s ecosystem supports advanced lifecycle management with tools like LangSmith and LangGraph for debugging and deployment.

- LlamaIndex offers robust tools like vector embeddings and LlamaHub for semantic search and diverse data integration.

- Both frameworks can be combined for applications requiring seamless data retrieval and complex workflow integration.

- Choose LangChain for dynamic, long-term applications and LlamaIndex for precise, large-scale information retrieval tasks.

Frequently Asked Questions

A. LangChain focuses on building complex workflows and interactive applications (e.g., chatbots, task automation), while LlamaIndex specializes in efficient search and retrieval from large datasets using vectorized embeddings.

A. Yes, LangChain and LlamaIndex can be integrated to combine their strengths. For example, you can use LlamaIndex for efficient data retrieval and then feed the retrieved information into LangChain workflows for further processing or interaction.

A. LangChain is better suited for conversational AI because it offers advanced context retention, memory management, and modular chains that support dynamic, context-aware interactions.

A. LlamaIndex uses vector embeddings to represent data semantically. It enables efficient top-k similarity searches, making it highly optimized for fast and accurate query responses, even with large datasets.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.