Large Language Model agents are powerful tools for automating tasks like search, content generation, and quality review. However, a single agent often can't do everything efficiently, especially when you need to integrate external resources (like web searches) and multiple specialized steps (e.g., drafting vs. reviewing). Multi-agent workflows let you split these tasks among different agents, each with its own tools, constraints, and responsibilities. In this article, we'll look at how to build a three-agent system (ResearchAgent, WriteAgent, and ReviewAgent) in which each agent handles a specific part of creating a concise historical report on the internet. We'll also make sure the system won't get stuck in a search loop, which can waste time and credits.

Learning Objectives

- Understand how multi-agent workflows enhance task automation with LLMs.

- Learn to build a three-agent system for research, writing, and review tasks.

- Implement safeguards to prevent infinite search loops in automated workflows.

- Explore the integration of external tools like DuckDuckGo for efficient information retrieval.

- Develop an LLM-powered workflow that ensures structured, high-quality content generation.

This article was published as a part of the Data Science Blogathon.

Language Model (LLM) – OpenAI GPT-4

We'll use OpenAI(model="gpt-4") from llama-index. You can swap this out for another LLM if you prefer, but GPT-4 is usually a strong choice for multi-step reasoning tasks.

```python
###############################################################################
# 1. INSTALLATION
###############################################################################
# Make sure you have the following installed (this line is for a notebook cell;
# in a terminal, drop the leading %):
%pip install llama-index langchain langchain-community duckduckgo-search

###############################################################################
# 2. IMPORTS
###############################################################################
from llama_index.llms.openai import OpenAI

# For DuckDuckGo search via LangChain
from langchain_community.utilities import DuckDuckGoSearchAPIWrapper

# llama-index workflow classes
from llama_index.core.workflow import Context
from llama_index.core.agent.workflow import (
    FunctionAgent,
    AgentWorkflow,
    AgentInput,
    AgentOutput,
    ToolCall,
    ToolCallResult,
    AgentStream,
)

import asyncio

###############################################################################
# 3. CREATE LLM
###############################################################################
# Replace "sk-..." with your actual OpenAI API key
# (if api_key is omitted, the OPENAI_API_KEY environment variable is used)
llm = OpenAI(model="gpt-4", api_key="sk-...")
```

Tools are functions that agents can call to perform actions beyond language modeling. Typical tools include:

- Web Search

- Reading/Writing Files

- Math Calculators

- APIs for external services

In our example, the key tool is safe_duckduckgo_search, which uses LangChain's DuckDuckGoSearchAPIWrapper under the hood. We also have helper tools to record notes, write a report, and review it.

```python
###############################################################################
# 4. DEFINE DUCKDUCKGO SEARCH TOOL WITH SAFEGUARDS
###############################################################################
# We wrap LangChain's DuckDuckGoSearchAPIWrapper with our own logic
# to prevent repeated or excessive searches.
duckduckgo = DuckDuckGoSearchAPIWrapper()

MAX_SEARCH_CALLS = 2
search_call_count = 0
past_queries = set()


async def safe_duckduckgo_search(query: str) -> str:
    """
    A DuckDuckGo-based search function that:
    1) Prevents more than MAX_SEARCH_CALLS total searches.
    2) Skips duplicate queries.
    """
    global search_call_count, past_queries

    # Check for duplicate queries
    if query in past_queries:
        return f"Already searched for '{query}'. Avoiding duplicate search."

    # Check if we've reached the max search calls
    if search_call_count >= MAX_SEARCH_CALLS:
        return "Search limit reached, no more searches allowed."

    # Otherwise, perform the search
    search_call_count += 1
    past_queries.add(query)

    # DuckDuckGoSearchAPIWrapper.run(...) is synchronous, so run it in a worker
    # thread to avoid blocking the event loop inside this async tool
    result = await asyncio.to_thread(duckduckgo.run, query)
    return str(result)
```

```python
###############################################################################
# 5. OTHER TOOL FUNCTIONS: record_notes, write_report, review_report
###############################################################################
async def record_notes(ctx: Context, notes: str, notes_title: str) -> str:
    """Store research notes under a given title in the shared context."""
    current_state = await ctx.get("state")
    if "research_notes" not in current_state:
        current_state["research_notes"] = {}
    current_state["research_notes"][notes_title] = notes
    await ctx.set("state", current_state)
    return "Notes recorded."


async def write_report(ctx: Context, report_content: str) -> str:
    """Write a report in markdown, storing it in the shared context."""
    current_state = await ctx.get("state")
    current_state["report_content"] = report_content
    await ctx.set("state", current_state)
    return "Report written."


async def review_report(ctx: Context, review: str) -> str:
    """Review the report and store feedback in the shared context."""
    current_state = await ctx.get("state")
    current_state["review"] = review
    await ctx.set("state", current_state)
    return "Report reviewed."
```

Defining AI Agents for Task Execution

Each agent is an instance of FunctionAgent. Key fields include:

- name and description

- system_prompt: Instructs the agent about its role and constraints

- llm: The language model used

- tools: Which functions the agent can call

- can_handoff_to: Which agent(s) this agent can hand control to

ResearchAgent

- Searches the web (up to a specified limit of queries)

- Saves relevant findings as “notes”

- Hands off to the next agent once enough information is collected

WriteAgent

- Composes the report in Markdown, using whatever notes the ResearchAgent collected

- Hands off to the ReviewAgent for feedback

ReviewAgent

- Reviews the draft content for correctness and completeness

- If changes are needed, hands control back to the WriteAgent

- Otherwise, gives final approval
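Handoffs only work if every name in can_handoff_to matches an agent's name exactly. A typo such as "WriterAgent" is easy to miss, so a quick sanity check of the handoff graph can help. The following is a minimal, framework-independent sketch. HANDOFFS, unknown_targets, and reachable_from are hypothetical helpers, not part of llama-index; HANDOFFS simply mirrors the can_handoff_to settings used in the agent definitions.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

# Hypothetical mirror of the can_handoff_to settings used in this article
HANDOFFS: Dict[str, List[str]] = {
    "ResearchAgent": ["WriteAgent"],
    "WriteAgent": ["ReviewAgent", "ResearchAgent"],
    "ReviewAgent": ["WriteAgent"],
}


def unknown_targets(handoffs: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Return (agent, target) pairs whose target is not a defined agent name."""
    return [
        (agent, target)
        for agent, targets in handoffs.items()
        for target in targets
        if target not in handoffs
    ]


def reachable_from(root: str, handoffs: Dict[str, List[str]]) -> Set[str]:
    """Breadth-first search over handoff edges, starting at the root agent."""
    seen = {root}
    queue = deque([root])
    while queue:
        current = queue.popleft()
        for target in handoffs.get(current, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


print(unknown_targets(HANDOFFS))  # []
print(sorted(reachable_from("ResearchAgent", HANDOFFS)))
# ['ResearchAgent', 'ReviewAgent', 'WriteAgent']
```

An empty list from unknown_targets and a reachable set containing all three agents suggest that every agent can actually be reached from the root.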

```python
###############################################################################
# 6. DEFINE AGENTS
###############################################################################
# We have three agents with distinct responsibilities:
# 1. ResearchAgent - uses DuckDuckGo to gather info (max 2 searches).
# 2. WriteAgent - composes the final report.
# 3. ReviewAgent - reviews the final report.

research_agent = FunctionAgent(
    name="ResearchAgent",
    description=(
        "A research agent that searches the web using DuckDuckGo. "
        "It must not exceed 2 searches total, and must avoid repeating the same query. "
        "Once sufficient information is collected, it should hand off to the WriteAgent."
    ),
    system_prompt=(
        "You are the ResearchAgent. Your goal is to gather sufficient information on the topic. "
        "Only perform at most 2 distinct searches. If you have enough info or have reached 2 searches, "
        "hand off to the next agent. Avoid infinite loops!"
    ),
    llm=llm,
    tools=[
        safe_duckduckgo_search,  # Our DuckDuckGo-based search function
        record_notes,
    ],
    can_handoff_to=["WriteAgent"],
)

write_agent = FunctionAgent(
    name="WriteAgent",
    description=(
        "Writes a markdown report based on the research notes. "
        "Then hands off to the ReviewAgent for feedback."
    ),
    system_prompt=(
        "You are the WriteAgent. Draft a structured markdown report based on the notes. "
        "After writing, hand off to the ReviewAgent."
    ),
    llm=llm,
    tools=[write_report],
    can_handoff_to=["ReviewAgent", "ResearchAgent"],
)

review_agent = FunctionAgent(
    name="ReviewAgent",
    description=(
        "Reviews the final report for correctness. Approves or requests changes."
    ),
    system_prompt=(
        "You are the ReviewAgent. Read the report, provide feedback, and either approve "
        "or request revisions. If revisions are needed, hand off to the WriteAgent."
    ),
    llm=llm,
    tools=[review_report],
    can_handoff_to=["WriteAgent"],
)
```

Agent Workflow – Coordinating Task Execution

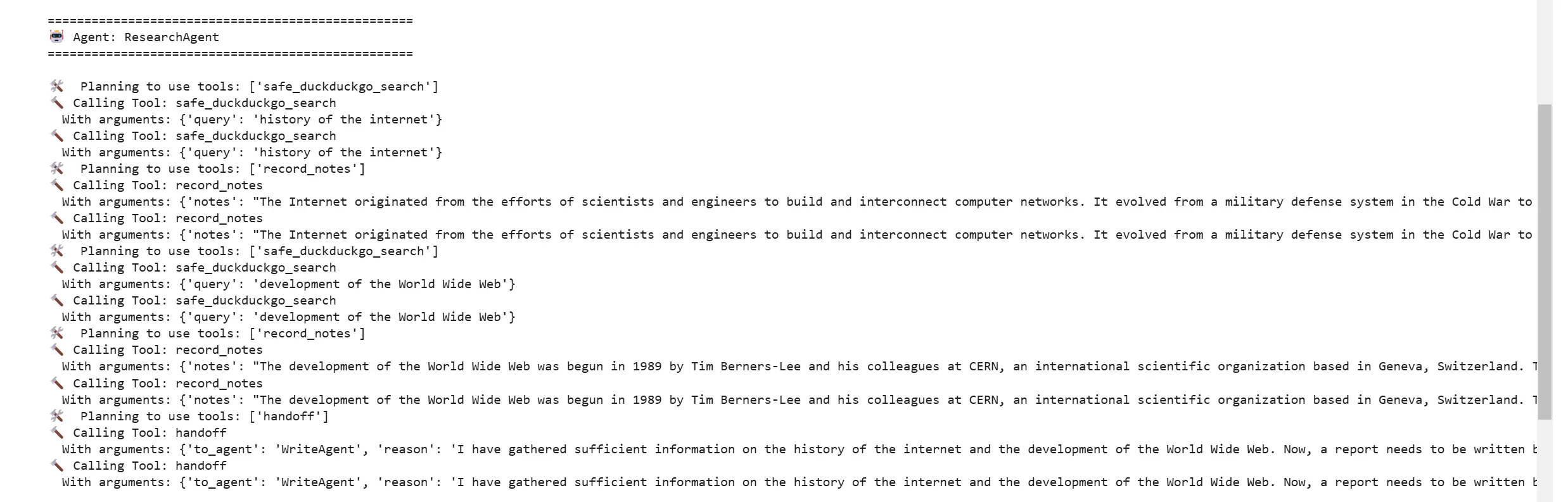

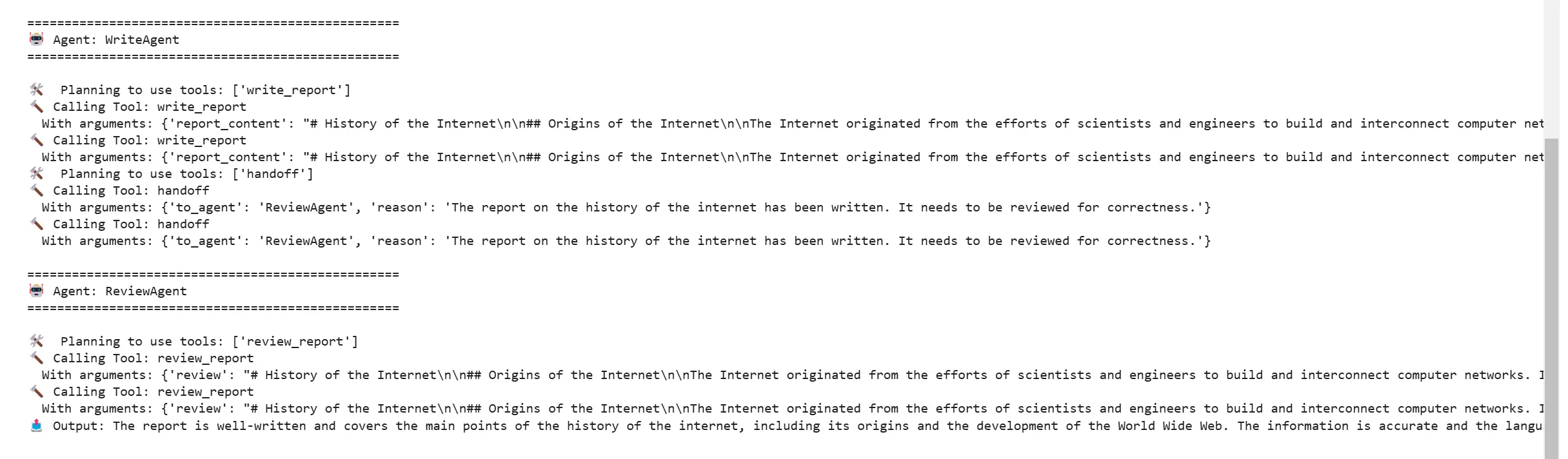

An AgentWorkflow coordinates how messages and state move between agents. When the user initiates a request (e.g., “Write me a concise report on the history of the internet…”), the workflow proceeds as follows:

- ResearchAgent receives the user prompt and decides whether to perform a web search or record some notes.

- WriteAgent uses the notes to create a structured or styled output (like a Markdown document).

- ReviewAgent checks the final output and either sends it back for revision or approves it.

The workflow ends once the content is approved and no further changes are requested.
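The coordination described above can be pictured with a toy, LLM-free simulation. This is not how AgentWorkflow is implemented internally. The scripted agents, run_toy_workflow, and the max_turns cap are all hypothetical. They only show why a whitelist of allowed handoffs plus a turn limit keeps a WriteAgent/ReviewAgent revision cycle from running forever.

```python
from typing import Callable, Dict, List, Optional

# Each hypothetical agent reads/updates shared state and returns the name of the
# agent to hand off to, or None when the workflow should end.
AgentFn = Callable[[dict], Optional[str]]

# Mirrors the can_handoff_to settings used in this article
HANDOFFS: Dict[str, List[str]] = {
    "ResearchAgent": ["WriteAgent"],
    "WriteAgent": ["ReviewAgent", "ResearchAgent"],
    "ReviewAgent": ["WriteAgent"],
}


def run_toy_workflow(agents: Dict[str, AgentFn], root: str, max_turns: int = 6) -> List[str]:
    """Run agents in turn, rejecting disallowed handoffs and capping total turns."""
    state: dict = {"review_rounds": 0}
    trace: List[str] = []
    current = root
    for _ in range(max_turns):
        trace.append(current)
        next_agent = agents[current](state)
        if next_agent is None:  # the current agent ended the workflow
            return trace
        if next_agent not in HANDOFFS[current]:
            raise ValueError(f"{current} may not hand off to {next_agent}")
        current = next_agent
    raise RuntimeError(f"Stopped after {max_turns} turns: {trace}")


def research(state: dict) -> Optional[str]:
    return "WriteAgent"


def write(state: dict) -> Optional[str]:
    return "ReviewAgent"


def review(state: dict) -> Optional[str]:
    # Request one revision, then approve on the second review
    state["review_rounds"] += 1
    return "WriteAgent" if state["review_rounds"] < 2 else None


agents = {"ResearchAgent": research, "WriteAgent": write, "ReviewAgent": review}
trace = run_toy_workflow(agents, root="ResearchAgent")
print(" -> ".join(trace))
# ResearchAgent -> WriteAgent -> ReviewAgent -> WriteAgent -> ReviewAgent
```

With a reviewer that never approves, run_toy_workflow raises RuntimeError after max_turns instead of looping indefinitely. In the real workflow, the system prompts and the search-tool safeguards play a comparable, softer role.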

Build the Workflow

In this step, we define the agent workflow, which includes the research, writing, and reviewing agents. The root_agent is set to research_agent, so the process begins with gathering research. The initial state contains placeholders for research notes, report content, and review status.
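You can trace how the three helper tools fill in these placeholders without calling an LLM. This sketch assumes a hypothetical DictContext class that mimics only the async ctx.get/ctx.set calls the tools use. It is not llama-index's Context. The tool bodies are copied under new names (record_notes_demo and so on) so the block runs on its own and does not shadow the real tools if pasted into the same notebook.

```python
import asyncio


class DictContext:
    """Hypothetical stand-in for llama-index's Context: only async get/set."""

    def __init__(self, initial_state: dict):
        self._store = {"state": dict(initial_state)}

    async def get(self, key: str):
        return self._store[key]

    async def set(self, key: str, value) -> None:
        self._store[key] = value


# Same logic as the article's tools, copied under new names
async def record_notes_demo(ctx, notes: str, notes_title: str) -> str:
    current_state = await ctx.get("state")
    current_state.setdefault("research_notes", {})[notes_title] = notes
    await ctx.set("state", current_state)
    return "Notes recorded."


async def write_report_demo(ctx, report_content: str) -> str:
    current_state = await ctx.get("state")
    current_state["report_content"] = report_content
    await ctx.set("state", current_state)
    return "Report written."


async def review_report_demo(ctx, review: str) -> str:
    current_state = await ctx.get("state")
    current_state["review"] = review
    await ctx.set("state", current_state)
    return "Report reviewed."


async def demo() -> dict:
    # Same placeholders as the workflow's initial_state
    ctx = DictContext({
        "research_notes": {},
        "report_content": "Not written yet.",
        "review": "Review required.",
    })
    # Simulate the order in which the three agents would call their tools
    await record_notes_demo(ctx, "Example notes about the internet's origins.", "Origins")
    await write_report_demo(ctx, "# History of the Internet\n\n(draft text)")
    await review_report_demo(ctx, "Approved.")
    return await ctx.get("state")


final = asyncio.run(demo())
for key, value in final.items():
    print(f"{key}: {value!r}")
```

Each tool reads the whole state dictionary, updates a single key, and writes the dictionary back. Because of this, later agents see everything earlier agents recorded.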

```python
agent_workflow = AgentWorkflow(
    agents=[research_agent, write_agent, review_agent],
    root_agent=research_agent.name,  # Start with the ResearchAgent
    initial_state={
        "research_notes": {},
        "report_content": "Not written yet.",
        "review": "Review required.",
    },
)
```

Run the Workflow

The workflow is executed with a user request that specifies the topic and the key points to cover in the report. In this example, the request asks for a concise report on the history of the internet, including its origins, the development of the World Wide Web, and its modern evolution. The workflow processes this request by coordinating the agents.

```python
# Example user request: "Write me a report on the history of the internet..."
handler = agent_workflow.run(
    user_msg=(
        "Write me a concise report on the history of the internet. "
        "Include its origins, the development of the World Wide Web, and its 21st-century evolution."
    )
)
```

Stream Events for Debugging or Observation

To monitor the workflow's execution, we stream events and print details about agent actions. This lets us track which agent is currently running, view intermediate outputs, and inspect the tool calls each agent makes. Debugging information such as tool arguments and results is printed for better visibility.
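If you want a compact summary instead of a printed log, you can feed the same pattern into a counter: track the current agent name, then match on event type. The sketch below runs offline on hypothetical stand-in event classes (AgentEvent, ToolCallEvent) and a fake stream, not llama-index's event types. With the real handler, you would pass handler.stream_events() and match on ToolCall instead. The sketch also shows the asyncio.run() wrapper you need outside a notebook, where top-level async for is not allowed.

```python
import asyncio
from collections import Counter
from dataclasses import dataclass


@dataclass
class AgentEvent:
    """Hypothetical stand-in for events that carry current_agent_name."""
    current_agent_name: str


@dataclass
class ToolCallEvent:
    """Hypothetical stand-in for a tool-call event."""
    tool_name: str


async def fake_stream():
    """Hypothetical event stream; real events come from handler.stream_events()."""
    events = [
        AgentEvent("ResearchAgent"),
        ToolCallEvent("safe_duckduckgo_search"),
        ToolCallEvent("safe_duckduckgo_search"),
        ToolCallEvent("record_notes"),
        AgentEvent("WriteAgent"),
        ToolCallEvent("write_report"),
        AgentEvent("ReviewAgent"),
        ToolCallEvent("review_report"),
    ]
    for event in events:
        await asyncio.sleep(0)  # yield control, as a real stream would
        yield event


async def summarize(stream) -> Counter:
    """Count tool calls per (agent, tool) pair while consuming the stream."""
    counts: Counter = Counter()
    current_agent = None
    async for event in stream:
        if hasattr(event, "current_agent_name"):
            current_agent = event.current_agent_name
        if isinstance(event, ToolCallEvent):
            counts[(current_agent, event.tool_name)] += 1
    return counts


# In a plain script, start the coroutine with asyncio.run()
counts = asyncio.run(summarize(fake_stream()))
for (agent, tool), n in counts.items():
    print(f"{agent}: {tool} x{n}")
```

A summary like this makes it easy to spot a ResearchAgent that called the search tool more often than expected.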

current_agent = None

async for occasion in handler.stream_events():

if hasattr(occasion, "current_agent_name") and occasion.current_agent_name != current_agent:

current_agent = occasion.current_agent_name

print(f"n{'='*50}")

print(f"🤖 Agent: {current_agent}")

print(f"{'='*50}n")

# Print outputs or software calls

if isinstance(occasion, AgentOutput):

if occasion.response.content material:

print("📤 Output:", occasion.response.content material)

if occasion.tool_calls:

print("🛠️ Planning to make use of instruments:", [call.tool_name for call in event.tool_calls])

elif isinstance(occasion, ToolCall):

print(f"🔨 Calling Software: {occasion.tool_name}")

print(f" With arguments: {occasion.tool_kwargs}")

elif isinstance(occasion, ToolCallResult):

print(f"🔧 Software End result ({occasion.tool_name}):")

print(f" Arguments: {occasion.tool_kwargs}")

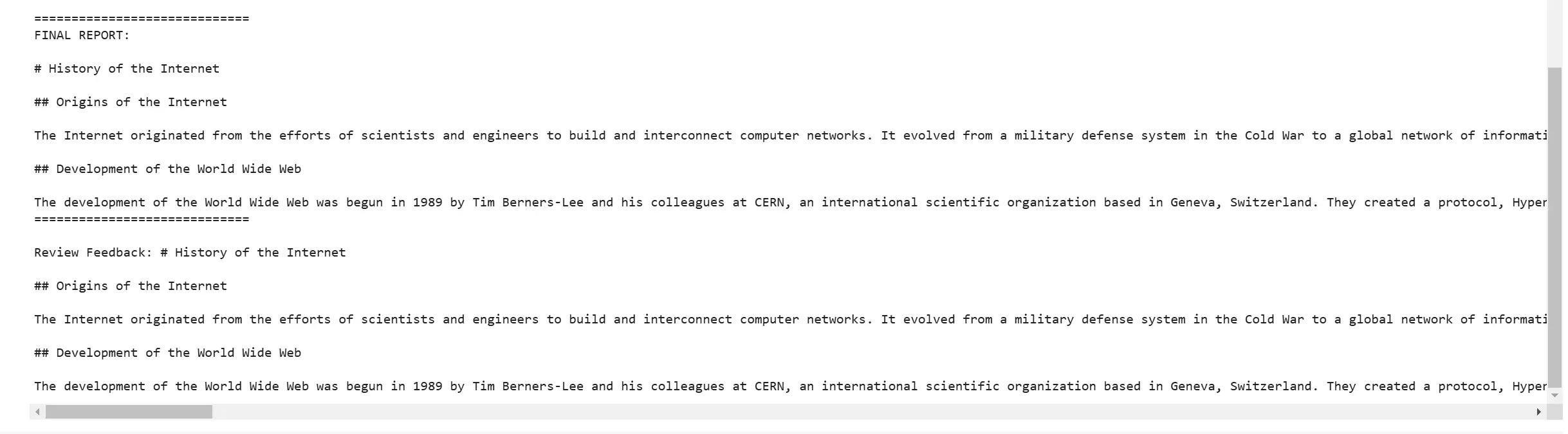

print(f" Output: {occasion.tool_output}")Retrieve and Print the Remaining Report

Once the workflow completes, we extract the final state, which contains the generated report. We print the report content, followed by any feedback from the ReviewAgent. Printing both lets you confirm the output is complete and decide whether it needs further refinement.

```python
final_state = await handler.ctx.get("state")

print("\n\n=============================")
print("FINAL REPORT:\n")
print(final_state["report_content"])
print("=============================\n")

# Review feedback (if any)
if "review" in final_state:
    print("Review Feedback:", final_state["review"])
```
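Because the workflow starts from placeholder values, a small check can flag a run in which an agent never called its tool, for example when the ReviewAgent finished without calling review_report. The following is a hypothetical helper. It assumes the same initial_state placeholders used when building the workflow. In the notebook, you would call it as unfinished_fields(final_state).

```python
# Placeholder values from the workflow's initial_state
PLACEHOLDERS = {
    "report_content": "Not written yet.",
    "review": "Review required.",
}


def unfinished_fields(state: dict) -> list:
    """Return keys still at their initial placeholder (or missing), plus
    research_notes if no notes were recorded."""
    unfinished = [
        key for key, placeholder in PLACEHOLDERS.items()
        if state.get(key, placeholder) == placeholder
    ]
    if not state.get("research_notes"):
        unfinished.append("research_notes")
    return unfinished


# Example: no notes were recorded and review_report was never called
example_state = {
    "research_notes": {},
    "report_content": "# History of the Internet\n...",
    "review": "Review required.",
}
print(unfinished_fields(example_state))  # ['review', 'research_notes']
```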

Avoiding an Infinite Search Loop

When using a web search tool, the LLM can get “confused” and call the search function repeatedly. This wastes money and time. To prevent that, we use two mechanisms:

- Hard Limit: We set MAX_SEARCH_CALLS = 2, so the search tool performs at most two actual searches.

- Duplicate Detection: We store past queries in a set (past_queries) to avoid running the exact same search more than once.

If either condition is met (the search limit is reached or the query is a duplicate), our safe_duckduckgo_search function returns a canned message instead of performing a new search.
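The two checks are easy to verify in isolation. The sketch below assumes a hypothetical SearchGuard class with a stub backend in place of DuckDuckGo, so no network access is needed. It applies the same duplicate check and call limit as safe_duckduckgo_search. However, it keeps the counters on an instance with a reset() method. This matters because module-level globals persist for the whole notebook session, so a second workflow run would otherwise hit the limit immediately.

```python
from typing import Callable, List, Set


class SearchGuard:
    """Hypothetical reusable wrapper applying the same two safeguards as
    safe_duckduckgo_search."""

    def __init__(self, backend: Callable[[str], str], max_calls: int = 2):
        self.backend = backend
        self.max_calls = max_calls
        self.reset()

    def reset(self) -> None:
        """Clear the counter and query history, e.g. before a new workflow run."""
        self.call_count = 0
        self.past_queries: Set[str] = set()

    def search(self, query: str) -> str:
        # Duplicate detection first, then the hard limit (same order as the article)
        if query in self.past_queries:
            return f"Already searched for '{query}'. Avoiding duplicate search."
        if self.call_count >= self.max_calls:
            return "Search limit reached, no more searches allowed."
        self.call_count += 1
        self.past_queries.add(query)
        return self.backend(query)


# Stub backend that records real "searches" instead of calling DuckDuckGo
backend_calls: List[str] = []


def fake_backend(query: str) -> str:
    backend_calls.append(query)
    return f"results for {query}"


guard = SearchGuard(fake_backend)
queries = [
    "history of the internet",
    "history of the internet",  # duplicate
    "World Wide Web Tim Berners-Lee",
    "ARPANET",  # exceeds the limit of 2
]
results = [guard.search(q) for q in queries]
for q, r in zip(queries, results):
    print(f"{q!r} -> {r}")
```

Only two queries reach the backend here. Note that duplicate detection uses exact string matching, so a trivially reworded query still counts as a new search. Normalizing queries (for example, lowercasing) before the check is one possible tweak.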

What to Expect

ResearchAgent

- Receives the user request to write a concise report on the history of the internet.

- Possibly performs up to two distinct DuckDuckGo searches (e.g., “history of the internet” and “World Wide Web Tim Berners-Lee”), then calls record_notes to store a summary.

WriteAgent

- Reads the “research_notes” from the shared context.

- Drafts a short Markdown report.

- Hands off to the ReviewAgent.

ReviewAgent

- Evaluates the content.

- If changes are needed, it can pass control back to the WriteAgent. Otherwise, it approves the report.

Workflow Ends

The final output is stored in final_state["report_content"].

Conclusion

By splitting your workflow into distinct agents for search, writing, and review, you can create a powerful, modular system that:

- Gathers relevant information (in a controlled way, preventing excessive searches)

- Produces structured, high-quality outputs

- Self-checks for accuracy and completeness

The DuckDuckGo integration via LangChain offers a plug-and-play web search solution for multi-agent workflows without requiring specialized API keys or credentials. Combined with built-in safeguards (search call limits, duplicate detection), this system is robust, efficient, and suitable for a wide range of research and content-generation tasks.

Key Takeaways

- Multi-agent workflows improve efficiency by assigning specialized roles to LLM agents.

- External tools like DuckDuckGo enhance the research capabilities of LLM agents.

- Constraints such as search limits prevent unnecessary resource consumption.

- Coordinated agent workflows ensure structured, high-quality content generation.

- A well-designed handoff mechanism helps avoid redundant tasks and infinite loops.

Frequently Asked Questions

A. Splitting responsibilities across agents (research, writing, reviewing) makes each step clearly defined and easier to manage. It also reduces confusion in the model's decision-making and leads to more accurate, structured outputs.

A. In the code, we use a global counter (search_call_count) and a constant (MAX_SEARCH_CALLS = 2). Each time the ResearchAgent calls safe_duckduckgo_search, the function checks whether the counter has reached the limit. If so, it returns a message instead of performing another search.

A. We maintain a Python set called past_queries to detect repeated queries. If the query is already in that set, the tool skips the actual search and returns a short message, so duplicate queries never run.

A. Absolutely. You can edit each agent's system_prompt to tailor its instructions to your desired domain or writing style. For instance, you could instruct the WriteAgent to produce a bullet-point list, a narrative essay, or a technical summary.

A. You can swap out OpenAI(model="gpt-4") for another model supported by llama-index (e.g., GPT-3.5, or even a local model). The architecture stays the same, though some models may produce outputs of different quality, and FunctionAgent needs a model that supports function (tool) calling.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.