In lots of real-world purposes, information will not be purely textual—it could embody photographs, tables, and charts that assist reinforce the narrative. A multimodal report generator lets you incorporate each textual content and pictures right into a ultimate output, making your experiences extra dynamic and visually wealthy.

This text outlines tips on how to construct such a pipeline utilizing:

- LlamaIndex for orchestrating doc parsing and question engines,

- OpenAI language fashions for textual evaluation,

- LlamaParse to extract each textual content and pictures from PDF paperwork,

- An observability setup utilizing Arize Phoenix (through LlamaTrace) for logging and debugging.

The tip result’s a pipeline that may course of a complete PDF slide deck—each textual content and visuals—and generate a structured report containing each textual content and pictures.

Studying Goals

- Perceive tips on how to combine textual content and visuals for efficient monetary report technology utilizing multimodal pipelines.

- Study to make the most of LlamaIndex and LlamaParse for enhanced monetary report technology with structured outputs.

- Discover LlamaParse for extracting each textual content and pictures from PDF paperwork successfully.

- Arrange observability utilizing Arize Phoenix (through LlamaTrace) for logging and debugging complicated pipelines.

- Create a structured question engine to generate experiences that interleave textual content summaries with visible components.

This text was revealed as part of the Knowledge Science Blogathon.

Overview of the Course of

Constructing a multimodal report generator includes making a pipeline that seamlessly integrates textual and visible components from complicated paperwork like PDFs. The method begins with putting in the mandatory libraries, comparable to LlamaIndex for doc parsing and question orchestration, and LlamaParse for extracting each textual content and pictures. Observability is established utilizing Arize Phoenix (through LlamaTrace) to watch and debug the pipeline.

As soon as the setup is full, the pipeline processes a PDF doc, parsing its content material into structured textual content and rendering visible components like tables and charts. These parsed components are then related, making a unified dataset. A SummaryIndex is constructed to allow high-level insights, and a structured question engine is developed to generate experiences that mix textual evaluation with related visuals. The result’s a dynamic and interactive report generator that transforms static paperwork into wealthy, multimodal outputs tailor-made for person queries.

Step-by-Step Implementation

Comply with this detailed information to construct a multimodal report generator, from establishing dependencies to producing structured outputs with built-in textual content and pictures. Every step ensures a seamless integration of LlamaIndex, LlamaParse, and Arize Phoenix for an environment friendly and dynamic pipeline.

Step 1: Set up and Import Dependencies

You’ll want the next libraries working on Python 3.9.9 :

- llama-index

- llama-parse (for textual content + picture parsing)

- llama-index-callbacks-arize-phoenix (for observability/logging)

- nest_asyncio (to deal with async occasion loops in notebooks)

!pip set up -U llama-index-callbacks-arize-phoenix

import nest_asyncio

nest_asyncio.apply()Step 2: Set Up Observability

We combine with LlamaTrace – LlamaCloud API (Arize Phoenix). First, acquire an API key from llamatrace.com, then arrange atmosphere variables to ship traces to Phoenix.

Phoenix API key may be obtained by signing up for LlamaTrace right here , then navigate to the underside left panel and click on on ‘Keys’ the place it is best to discover your API key.

For instance:

PHOENIX_API_KEY = "<PHOENIX_API_KEY>"

os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = f"api_key={PHOENIX_API_KEY}"

llama_index.core.set_global_handler(

"arize_phoenix", endpoint="https://llamatrace.com/v1/traces"

)Step 3: Load the information – Receive Your Slide Deck

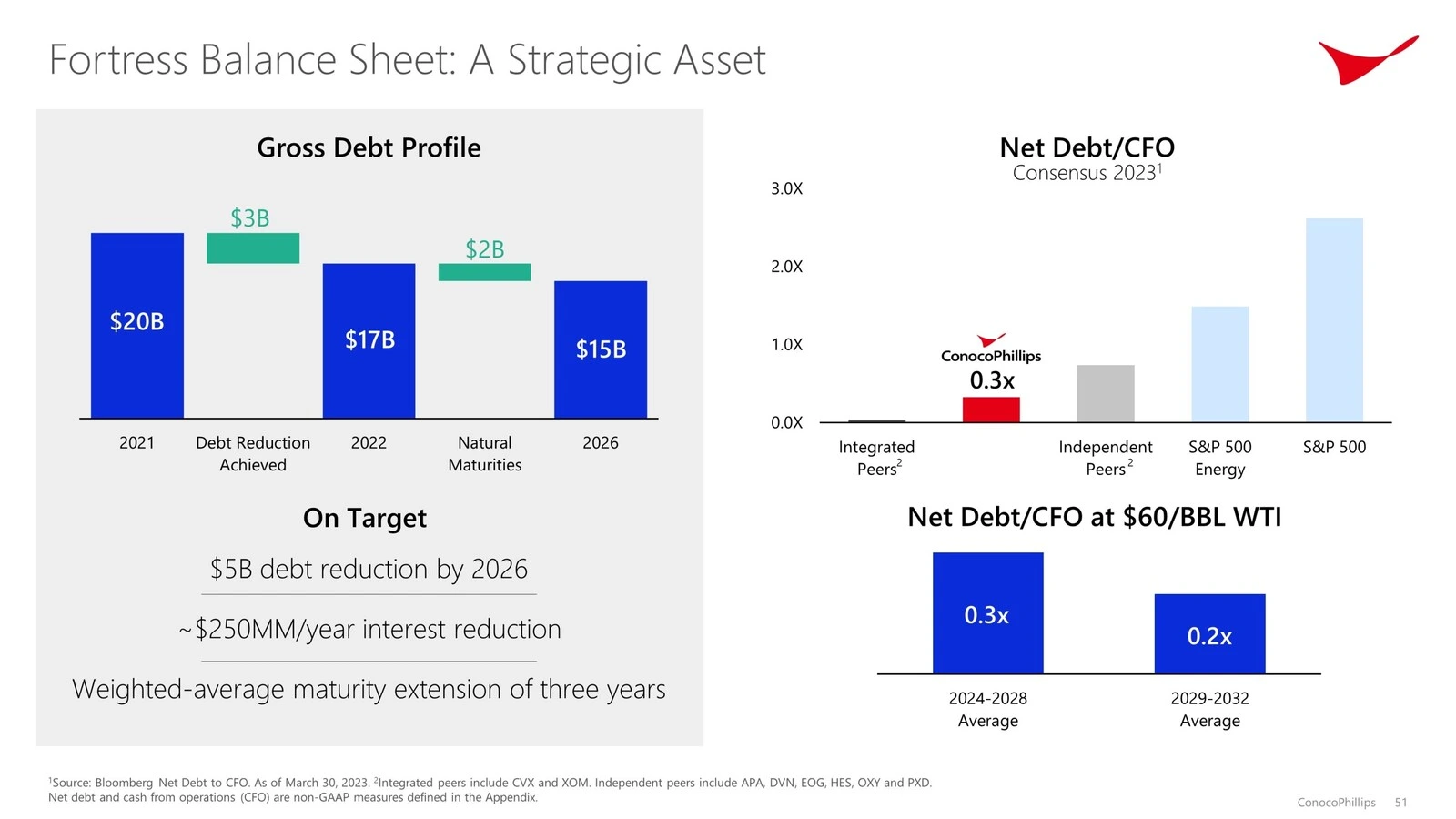

For demonstration, we use ConocoPhillips’ 2023 investor assembly slide deck. We obtain the PDF:

import os

import requests

# Create the directories (ignore errors in the event that they exist already)

os.makedirs("information", exist_ok=True)

os.makedirs("data_images", exist_ok=True)

# URL of the PDF

url = "https://static.conocophillips.com/information/2023-conocophillips-aim-presentation.pdf"

# Obtain and save to information/conocophillips.pdf

response = requests.get(url)

with open("information/conocophillips.pdf", "wb") as f:

f.write(response.content material)

print("PDF downloaded to information/conocophillips.pdf")Test if the pdf slide deck is within the information folder, if not place it within the information folder and title it as you need.

Step 4: Set Up Fashions

You want an embedding mannequin and an LLM. On this instance:

from llama_index.llms.openai import OpenAI

from llama_index.embeddings.openai import OpenAIEmbedding

embed_model = OpenAIEmbedding(mannequin="text-embedding-3-large")

llm = OpenAI(mannequin="gpt-4o")Subsequent, you register these because the default for LlamaIndex:

from llama_index.core import Settings

Settings.embed_model = embed_model

Settings.llm = llmStep 5: Parse the Doc with LlamaParse

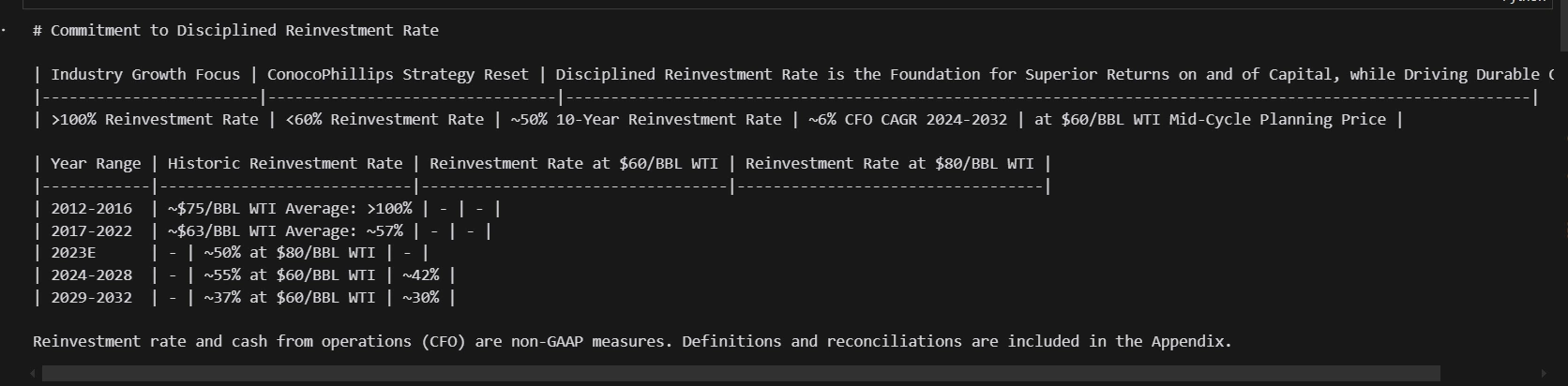

LlamaParse can extract textual content and pictures (through a multimodal giant mannequin). For every PDF web page, it returns:

- Markdown textual content (with tables, headings, bullet factors, and many others.)

- A rendered picture (saved domestically)

print(f"Parsing slide deck...")

md_json_objs = parser.get_json_result("information/conocophillips.pdf")

md_json_list = md_json_objs[0]["pages"]

print(md_json_list[10]["md"])

print(md_json_list[1].keys())

image_dicts = parser.get_images(md_json_objs, download_path="data_images")

Step 6: Affiliate Textual content and Photos

We create a listing of TextNode objects (LlamaIndex’s information construction) for every web page. Every node has metadata in regards to the web page quantity and the corresponding picture file path:

from llama_index.core.schema import TextNode

from typing import Non-compulsory

# get pages loaded by means of llamaparse

import re

def get_page_number(file_name):

match = re.search(r"-page-(d+).jpg$", str(file_name))

if match:

return int(match.group(1))

return 0

def _get_sorted_image_files(image_dir):

"""Get picture information sorted by web page."""

raw_files = [f for f in list(Path(image_dir).iterdir()) if f.is_file()]

sorted_files = sorted(raw_files, key=get_page_number)

return sorted_files

from copy import deepcopy

from pathlib import Path

# connect picture metadata to the textual content nodes

def get_text_nodes(json_dicts, image_dir=None):

"""Cut up docs into nodes, by separator."""

nodes = []

image_files = _get_sorted_image_files(image_dir) if image_dir will not be None else None

md_texts = [d["md"] for d in json_dicts]

for idx, md_text in enumerate(md_texts):

chunk_metadata = {"page_num": idx + 1}

if image_files will not be None:

image_file = image_files[idx]

chunk_metadata["image_path"] = str(image_file)

chunk_metadata["parsed_text_markdown"] = md_text

node = TextNode(

textual content="",

metadata=chunk_metadata,

)

nodes.append(node)

return nodes

# this may break up into pages

text_nodes = get_text_nodes(md_json_list, image_dir="data_images")

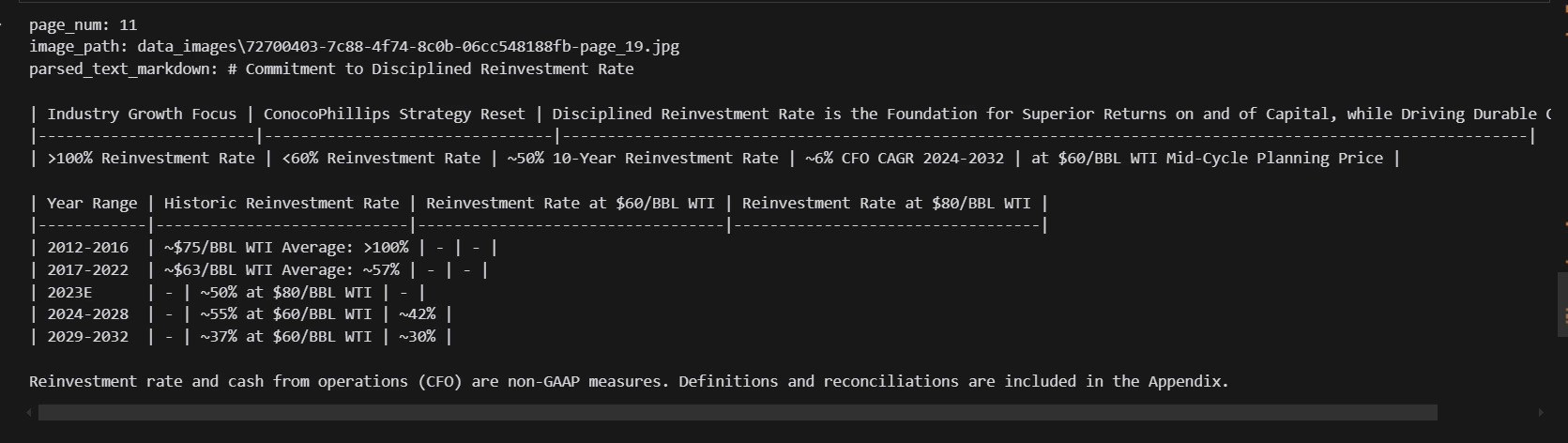

print(text_nodes[10].get_content(metadata_mode="all"))

Step 7: Construct a Abstract Index

With these textual content nodes in hand, you may create a SummaryIndex:

import os

from llama_index.core import (

StorageContext,

SummaryIndex,

load_index_from_storage,

)

if not os.path.exists("storage_nodes_summary"):

index = SummaryIndex(text_nodes)

# save index to disk

index.set_index_id("summary_index")

index.storage_context.persist("./storage_nodes_summary")

else:

# rebuild storage context

storage_context = StorageContext.from_defaults(persist_dir="storage_nodes_summary")

# load index

index = load_index_from_storage(storage_context, index_id="summary_index")The SummaryIndex ensures you may simply retrieve or generate high-level summaries over the whole doc.

Step 8: Outline a Structured Output Schema

Our pipeline goals to supply a ultimate output with interleaved textual content blocks and picture blocks. For that, we create a customized Pydantic mannequin (utilizing Pydantic v2 or guaranteeing compatibility) with two block sorts—TextBlock and ImageBlock—and a mother or father mannequin ReportOutput:

from llama_index.llms.openai import OpenAI

from pydantic import BaseModel, Area

from typing import Listing

from IPython.show import show, Markdown, Picture

from typing import Union

class TextBlock(BaseModel):

"""Textual content block."""

textual content: str = Area(..., description="The textual content for this block.")

class ImageBlock(BaseModel):

"""Picture block."""

file_path: str = Area(..., description="File path to the picture.")

class ReportOutput(BaseModel):

"""Knowledge mannequin for a report.

Can comprise a mixture of textual content and picture blocks. MUST comprise not less than one picture block.

"""

blocks: Listing[Union[TextBlock, ImageBlock]] = Area(

..., description="A listing of textual content and picture blocks."

)

def render(self) -> None:

"""Render as HTML on the web page."""

for b in self.blocks:

if isinstance(b, TextBlock):

show(Markdown(b.textual content))

else:

show(Picture(filename=b.file_path))

system_prompt = """

You're a report technology assistant tasked with producing a well-formatted context given parsed context.

You'll be given context from a number of experiences that take the type of parsed textual content.

You might be accountable for producing a report with interleaving textual content and pictures - within the format of interleaving textual content and "picture" blocks.

Since you can not straight produce a picture, the picture block takes in a file path - it is best to write within the file path of the picture as an alternative.

How are you aware which picture to generate? Every context chunk will comprise metadata together with a picture render of the supply chunk, given as a file path.

Embody ONLY the photographs from the chunks which have heavy visible components (you may get a touch of this if the parsed textual content incorporates quite a lot of tables).

You MUST embody not less than one picture block within the output.

You MUST output your response as a software name with a purpose to adhere to the required output format. Do NOT give again regular textual content.

"""

llm = OpenAI(mannequin="gpt-4o", api_key="OpenAI_API_KEY", system_prompt=system_prompt)

sllm = llm.as_structured_llm(output_cls=ReportOutput)The important thing level: ReportOutput requires not less than one picture block, guaranteeing the ultimate reply is multimodal.

Step 9: Create a Structured Question Engine

LlamaIndex lets you use a “structured LLM” (i.e., an LLM whose output is mechanically parsed into a selected schema). Right here’s how:

query_engine = index.as_query_engine(

similarity_top_k=10,

llm=sllm,

# response_mode="tree_summarize"

response_mode="compact",

)

response = query_engine.question(

"Give me a abstract of the monetary efficiency of the Alaska/Worldwide phase vs. the decrease 48 phase"

)

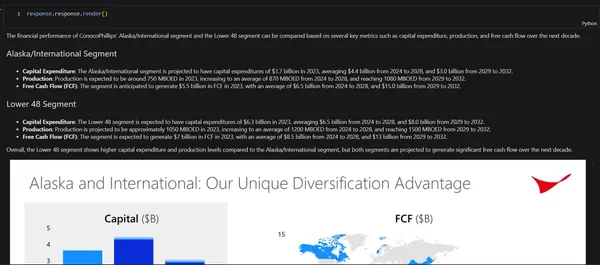

response.response.render()

# Output

The monetary efficiency of ConocoPhillips' Alaska/Worldwide phase and the Decrease 48 phase may be in contrast based mostly on a number of key metrics comparable to capital expenditure, manufacturing, and free money movement over the subsequent decade.

Alaska/Worldwide Phase

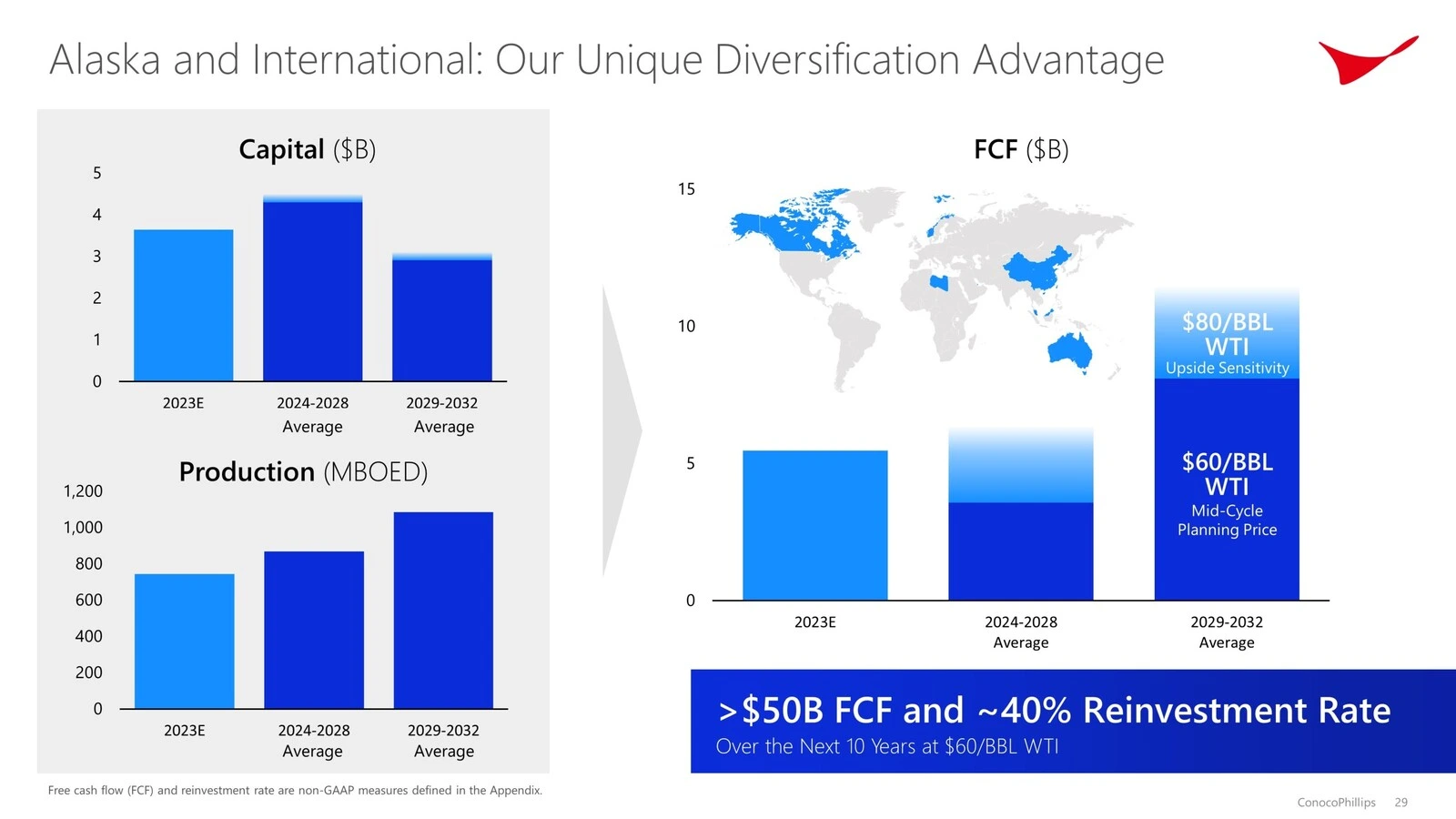

Capital Expenditure: The Alaska/Worldwide phase is projected to have capital expenditures of $3.7 billion in 2023, averaging $4.4 billion from 2024 to 2028, and $3.0 billion from 2029 to 2032.

Manufacturing: Manufacturing is predicted to be round 750 MBOED in 2023, growing to a median of 870 MBOED from 2024 to 2028, and reaching 1080 MBOED from 2029 to 2032.

Free Money Circulation (FCF): The phase is anticipated to generate $5.5 billion in FCF in 2023, with a median of $6.5 billion from 2024 to 2028, and $15.0 billion from 2029 to 2032.

Decrease 48 Phase

Capital Expenditure: The Decrease 48 phase is predicted to have capital expenditures of $6.3 billion in 2023, averaging $6.5 billion from 2024 to 2028, and $8.0 billion from 2029 to 2032.

Manufacturing: Manufacturing is projected to be roughly 1050 MBOED in 2023, growing to a median of 1200 MBOED from 2024 to 2028, and reaching 1500 MBOED from 2029 to 2032.

Free Money Circulation (FCF): The phase is predicted to generate $7 billion in FCF in 2023, with a median of $8.5 billion from 2024 to 2028, and $13 billion from 2029 to 2032.

General, the Decrease 48 phase exhibits increased capital expenditure and manufacturing ranges in comparison with the Alaska/Worldwide phase, however each segments are projected to generate vital free money movement over the subsequent decade.

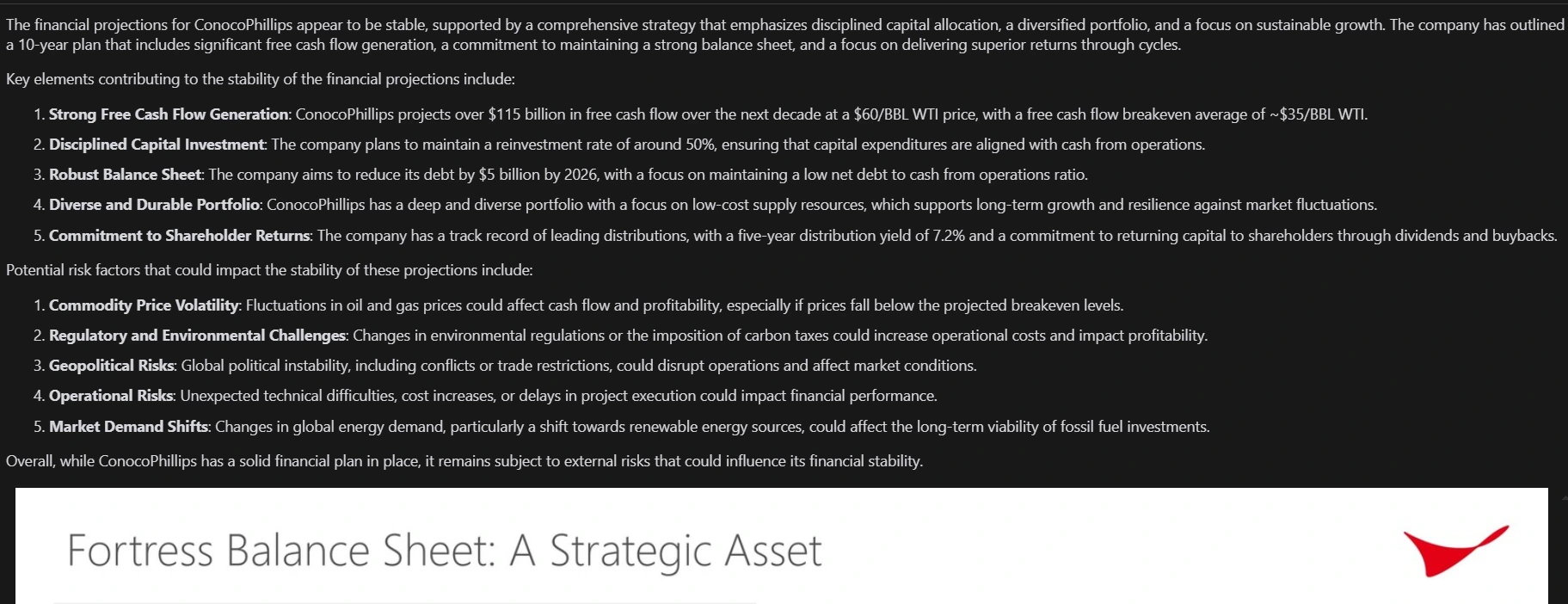

# Attempting one other question

response = query_engine.question(

"Give me a abstract of whether or not you assume the monetary projections are secure, and if not, what are the potential threat elements. "

"Assist your analysis with sources."

)

response.response.render()

Conclusion

By combining LlamaIndex, LlamaParse, and OpenAI, you may construct a multimodal report generator that processes a complete PDF (with textual content, tables, and pictures) right into a structured output. This strategy delivers richer, extra visually informative outcomes—precisely what stakeholders have to glean essential insights from complicated company or technical paperwork.

Be happy to adapt this pipeline to your individual paperwork, add a retrieval step for giant archives, or combine domain-specific fashions for analyzing the underlying photographs. With the foundations laid out right here, you may create dynamic, interactive, and visually wealthy experiences that go far past easy text-based queries.

A giant because of Jerry Liu from LlamaIndex for creating this superb pipeline.

Key Takeaways

- Remodel PDFs with textual content and visuals into structured codecs whereas preserving the integrity of unique content material utilizing LlamaParse and LlamaIndex.

- Generate visually enriched experiences that interweave textual summaries and pictures for higher contextual understanding.

- Monetary report technology may be enhanced by integrating each textual content and visible components for extra insightful and dynamic outputs.

- Leveraging LlamaIndex and LlamaParse streamlines the method of monetary report technology, guaranteeing correct and structured outcomes.

- Retrieve related paperwork earlier than processing to optimize report technology for giant archives.

- Enhance visible parsing, incorporate chart-specific analytics, and mix fashions for textual content and picture processing for deeper insights.

Regularly Requested Questions

A. A multimodal report generator is a system that produces experiences containing a number of varieties of content material—primarily textual content and pictures—in a single cohesive output. On this pipeline, you parse a PDF into each textual and visible components, then mix them right into a single ultimate report.

A. Observability instruments like Arize Phoenix (through LlamaTrace) allow you to monitor and debug mannequin conduct, monitor queries and responses, and establish points in actual time. It’s particularly helpful when coping with giant or complicated paperwork and a number of LLM-based steps.

A. Most PDF textual content extractors solely deal with uncooked textual content, typically shedding formatting, photographs, and tables. LlamaParse is able to extracting each textual content and pictures (rendered web page photographs), which is essential for constructing multimodal pipelines the place it is advisable refer again to tables, charts, or different visuals.

A. SummaryIndex is a LlamaIndex abstraction that organizes your content material (e.g., pages of a PDF) so it might shortly generate complete summaries. It helps collect high-level insights from lengthy paperwork with out having to chunk them manually or run a retrieval question for each bit of information.

A. Within the ReportOutput Pydantic mannequin, implement that the blocks checklist requires not less than one ImageBlock. That is said in your system immediate and schema. The LLM should comply with these guidelines, or it is not going to produce legitimate structured output.

The media proven on this article will not be owned by Analytics Vidhya and is used on the Creator’s discretion.