Generative AI is unlocking new capabilities for PCs and workstations, including game assistants, enhanced content-creation and productivity tools, and more.

NVIDIA NIM microservices, available now, and AI Blueprints, coming in the next few weeks, accelerate AI development and make it more accessible. Announced at the CES trade show in January, NVIDIA NIM provides prepackaged, state-of-the-art AI models optimized for the NVIDIA RTX platform, including the NVIDIA GeForce RTX 50 Series and, now, the new NVIDIA Blackwell RTX PRO GPUs. The microservices are easy to download and run. They span the top modalities for PC development and are compatible with top ecosystem applications and tools.

The experimental System Assistant feature of Project G-Assist was also released today. Project G-Assist showcases how AI assistants can enhance apps and games. The System Assistant lets users run real-time diagnostics, get recommendations on performance optimizations, or control system software and peripherals, all through simple voice or text commands. Developers and enthusiasts can extend its capabilities with a simple plug-in architecture and a new plug-in builder.

At a pivotal moment in computing, when groundbreaking AI models and a global developer community are driving an explosion in AI-powered tools and workflows, NIM microservices, AI Blueprints and G-Assist are helping bring key innovations to PCs. This RTX AI Garage blog series will continue to deliver updates, insights and resources to help developers and enthusiasts build the next wave of AI on RTX AI PCs and workstations.

Ready, Set, NIM!

Though the pace of AI innovation is incredible, it can still be difficult for the PC developer community to get started with the technology.

Bringing AI models from research to the PC requires curation of model variants, adaptation to manage all of the input and output data, and quantization to optimize resource usage. In addition, models must be converted to work with optimized inference backend software and connected to new AI application programming interfaces (APIs). This takes substantial effort, which can slow AI adoption.

NVIDIA NIM microservices help solve this issue by providing prepackaged, optimized, easily downloadable AI models that connect to industry-standard APIs. They’re optimized for performance on RTX AI PCs and workstations, and include the top AI models from the community, as well as models developed by NVIDIA.
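As an illustration of what connecting to an industry-standard API can look like, here is a minimal sketch of calling a locally running LLM microservice through an OpenAI-style chat completions endpoint. The base URL, port, model identifier and helper names below are assumptions made for the example, not values taken from this post; check the documentation of the specific microservice for the real ones.

```python
import json
import urllib.request

# Assumed values for illustration only; not taken from the article.
BASE_URL = "http://localhost:8000/v1"  # assumed local endpoint
MODEL = "example/llm-model"            # hypothetical model identifier


def build_chat_request(prompt, model=MODEL, max_tokens=128):
    """Build an OpenAI-style chat completions payload."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response dict."""
    return response_body["choices"][0]["message"]["content"]


def chat(prompt, base_url=BASE_URL):
    """POST the prompt to the endpoint and return the reply text."""
    data = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(json.load(response))
```

With a microservice running, `chat("Summarize what a NIM microservice is.")` would return the model's reply as a string. Because the request shape is a common one, the same client code can in principle be pointed at a different host by changing only `base_url`.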

NIM microservices support a range of AI applications, including large language models (LLMs), vision language models, image generation, speech processing, retrieval-augmented generation (RAG)-based search, PDF extraction and computer vision. Ten NIM microservices for RTX are available today, covering language and image generation, computer vision, speech AI and more.

NIM microservices are also available through top AI ecosystem tools and frameworks.

For AI enthusiasts, AnythingLLM and ChatRTX now support NIM, making it easy to chat with LLMs and AI agents through a simple, user-friendly interface. With these tools, users can create personalized AI assistants and integrate their own documents and data, helping automate tasks and enhance productivity.

For developers looking to build, test and integrate AI into their applications, FlowiseAI and Langflow now support NIM and offer low- and no-code solutions with visual interfaces for designing AI workflows with minimal coding expertise. Support for ComfyUI is coming soon. With these tools, developers can easily create complex AI applications like chatbots, image generators and data analysis systems.

In addition, Microsoft VS Code AI Toolkit, CrewAI and LangChain now support NIM and provide advanced capabilities for integrating the microservices into application code, helping ensure seamless integration and optimization.

Visit the NVIDIA technical blog and build.nvidia.com to get started.

NVIDIA AI Blueprints Will Offer Pre-Built Workflows

NVIDIA AI Blueprints give AI developers a head start in building generative AI workflows with NVIDIA NIM microservices.

Blueprints are ready-to-use, extensible reference samples that bundle everything needed (source code, sample data, documentation and a demo app) to create and customize advanced AI workflows that run locally. Developers can modify and extend AI Blueprints to tweak their behavior, use different models or implement completely new functionality.

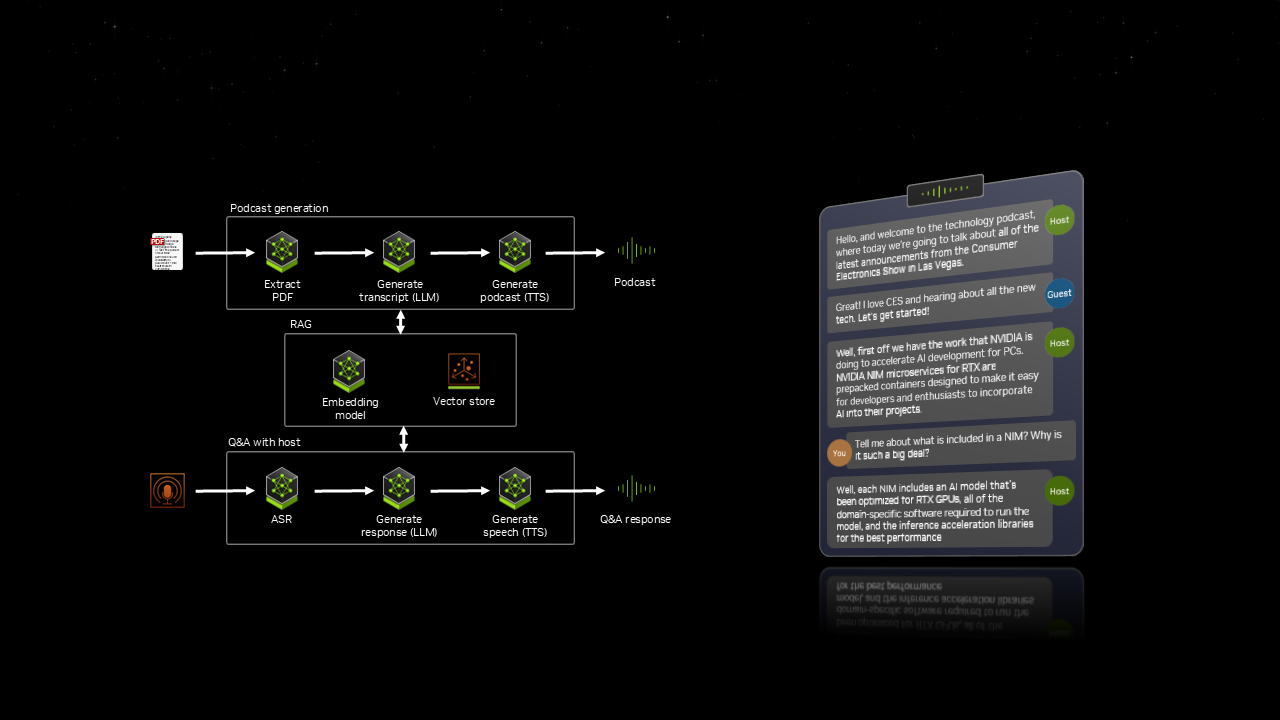

The PDF to podcast AI Blueprint will transform documents into audio content so users can learn on the go. By extracting text, images and tables from a PDF, the workflow uses AI to generate an informative podcast. For deeper dives into topics, users can then have an interactive discussion with the AI-powered podcast hosts.

The AI Blueprint for 3D-guided generative AI will give artists finer control over image generation. While AI can generate amazing images from simple text prompts, controlling image composition using only words can be challenging. With this blueprint, creators can use simple 3D objects laid out in a 3D renderer like Blender to guide AI image generation. The artist can create 3D assets by hand or generate them using AI, place them in the scene and set the 3D viewport camera. Then, a prepackaged workflow powered by the FLUX NIM microservice will use the current composition to generate high-quality images that match the 3D scene.

NVIDIA NIM on RTX With Windows Subsystem for Linux

One of the key technologies that enables NIM microservices to run on PCs is Windows Subsystem for Linux (WSL).

Microsoft and NVIDIA collaborated to bring CUDA and RTX acceleration to WSL, making it possible to run optimized, containerized microservices on Windows. This allows the same NIM microservice to run anywhere, from PCs and workstations to the data center and cloud.

Get started with NVIDIA NIM on RTX AI PCs at build.nvidia.com.

Project G-Assist Expands PC AI Features With Custom Plug-Ins

As part of Project G-Assist, an experimental version of the System Assistant feature for GeForce RTX desktop users is now available via the NVIDIA App, with laptop support coming soon.

G-Assist helps users control a broad range of PC settings through basic voice or text commands. This includes optimizing game and system settings, charting frame rates and other key performance statistics, and controlling select peripheral settings such as lighting.

G-Assist is built on NVIDIA ACE, the same AI technology suite game developers use to breathe life into non-player characters. Unlike AI tools that rely on massive cloud-hosted AI models requiring online access and paid subscriptions, G-Assist runs locally on a GeForce RTX GPU. This means it’s responsive, free and can run without an internet connection. Manufacturers and software providers are already using ACE to create custom AI assistants like G-Assist, including MSI’s AI Robot engine, the Streamlabs Intelligent AI Assistant and upcoming capabilities in HP’s Omen Gaming hub.

G-Assist was built for community-driven expansion. Get started with the NVIDIA GitHub repository, which includes samples and instructions for creating plug-ins that add new functionality. Developers can define functions in simple JSON formats and drop configuration files into a designated directory, allowing G-Assist to automatically load and interpret them. Developers can even submit plug-ins to NVIDIA for review and potential inclusion.
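The general pattern described here, JSON function definitions dropped into a directory and discovered automatically, can be sketched as follows. This is not the real G-Assist manifest schema or loader: the `functions`, `name`, `description` and `parameters` fields and the `load_plugin_functions` helper are hypothetical, chosen only to show the idea. The actual format is documented in the NVIDIA GitHub repository.

```python
import json
from pathlib import Path


def load_plugin_functions(plugin_dir):
    """Scan a directory for *.json manifests and index functions by name.

    Hypothetical manifest shape (NOT the real G-Assist schema):
    {"functions": [{"name": ..., "description": ..., "parameters": {...}}]}
    """
    registry = {}
    for manifest_path in sorted(Path(plugin_dir).glob("*.json")):
        try:
            manifest = json.loads(manifest_path.read_text(encoding="utf-8"))
        except json.JSONDecodeError:
            continue  # skip malformed files rather than abort the scan
        if not isinstance(manifest, dict):
            continue  # a manifest must be a JSON object in this sketch
        for fn in manifest.get("functions", []):
            if isinstance(fn, dict) and "name" in fn:
                registry[fn["name"]] = {
                    "description": fn.get("description", ""),
                    "parameters": fn.get("parameters", {}),
                    "source": manifest_path.name,
                }
    return registry
```

A host application following this pattern could call `load_plugin_functions` at startup and hand the resulting descriptions to its language model, so that adding a capability only requires adding a file.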

Currently available sample plug-ins include Spotify, which enables hands-free music and volume control, and Google Gemini, which lets G-Assist invoke a much larger cloud-based AI for more complex conversations, brainstorming sessions and web searches using a free Google AI Studio API key.

In a demo clip, G-Assist asks Gemini which Legend to pick in Apex Legends when solo queueing, and whether it’s wise to jump into Nightmare mode at level 25 in Diablo IV.

For even more customization, follow the instructions in the GitHub repository to generate G-Assist plug-ins using a ChatGPT-based “Plug-in Builder.” With this tool, users can write and export code, then integrate it into G-Assist, enabling quick, AI-assisted functionality that responds to text and voice commands.

In one demo, a developer used the Plug-in Builder to create a Twitch plug-in for G-Assist that checks whether a streamer is live.

More details on how to build, share and load plug-ins are available in the NVIDIA GitHub repository.

Check out the G-Assist article for system requirements and additional information.

Build, Create, Innovate

NVIDIA NIM microservices for RTX are available at build.nvidia.com, providing developers and AI enthusiasts with powerful, ready-to-use tools for building AI applications.

Download Project G-Assist through the NVIDIA App’s “Home” tab, in the “Discovery” section. G-Assist currently supports GeForce RTX desktop GPUs, as well as a variety of voice and text commands in English. Future updates will add support for GeForce RTX Laptop GPUs, new and enhanced G-Assist capabilities, and additional languages. Press “Alt+G” after installation to activate G-Assist.

Each week, RTX AI Garage features community-driven AI innovations and content for those looking to learn more about NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, digital humans, productivity apps and more on AI PCs and workstations.

Plug in to NVIDIA AI PC on Facebook, Instagram, TikTok and X, and stay informed by subscribing to the RTX AI PC newsletter.

Follow NVIDIA Workstation on LinkedIn and X.
