NVIDIA is taking an array of advancements in rendering, simulation and generative AI to SIGGRAPH 2024, the premier computer graphics conference, which takes place July 28 – Aug. 1 in Denver.

More than 20 papers from NVIDIA Research introduce innovations advancing synthetic data generators and inverse rendering tools that can help train next-generation models. NVIDIA’s AI research is making simulation better by boosting image quality and unlocking new ways to create 3D representations of real or imagined worlds.

The papers focus on diffusion models for visual generative AI, physics-based simulation and increasingly realistic AI-powered rendering. They include two technical Best Paper Award winners and collaborations with universities across the U.S., Canada, China, Israel and Japan, as well as researchers at companies including Adobe and Roblox.

These initiatives will help create tools that developers and businesses can use to generate complex virtual objects, characters and environments. Synthetic data generation can then be harnessed to tell powerful visual stories, aid scientists’ understanding of natural phenomena or assist in simulation-based training of robots and autonomous vehicles.

Diffusion Models Improve Texture Painting, Text-to-Image Generation

Diffusion models, a popular tool for transforming text prompts into images, can help artists, designers and other creators rapidly generate visuals for storyboards or production, reducing the time it takes to bring ideas to life.

Two NVIDIA-authored papers are advancing the capabilities of these generative AI models.

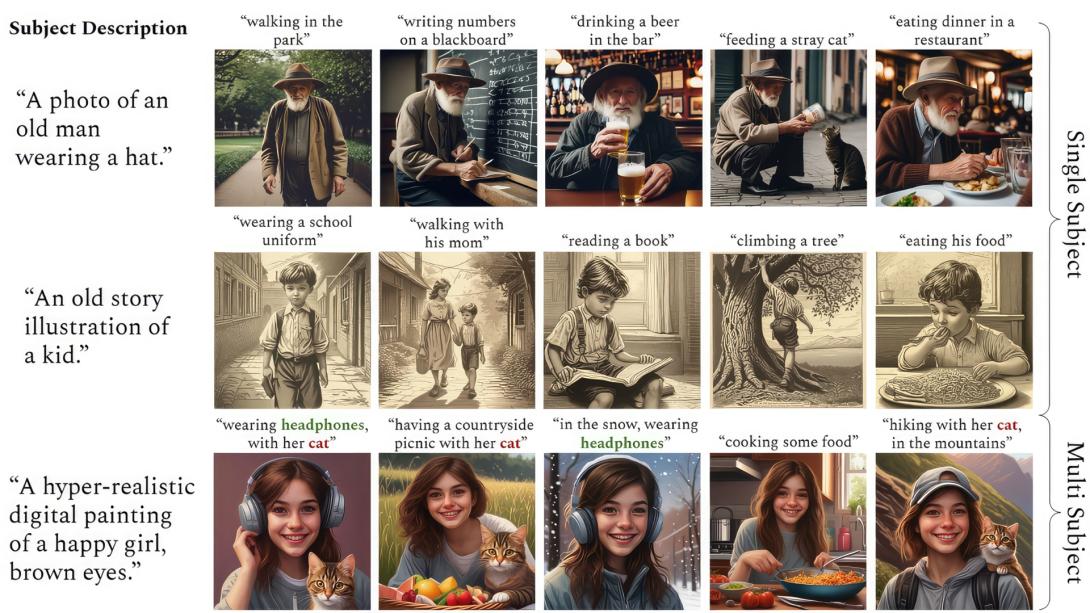

ConsiStory, a collaboration between researchers at NVIDIA and Tel Aviv University, makes it easier to generate multiple images with a consistent main character, an essential capability for storytelling use cases such as illustrating a comic strip or developing a storyboard. The researchers’ approach introduces a technique called subject-driven shared attention, which reduces the time it takes to generate consistent imagery from 13 minutes to around 30 seconds.

NVIDIA researchers last year won the Best in Show award at SIGGRAPH’s Real-Time Live event for AI models that turn text or image prompts into custom textured materials. This year, they’re presenting a paper that applies 2D generative diffusion models to interactive texture painting on 3D meshes, enabling artists to paint in real time with complex textures based on any reference image.

Kick-Starting Developments in Physics-Based Simulation

Graphics researchers are narrowing the gap between physical objects and their virtual representations with physics-based simulation, a range of techniques that make digital objects and characters move the same way they would in the real world.

Several NVIDIA Research papers feature breakthroughs in the field, including SuperPADL, a project that tackles the challenge of simulating complex human motions based on text prompts (see video at top).

Using a combination of reinforcement learning and supervised learning, the researchers demonstrated how the SuperPADL framework can be trained to reproduce the motion of more than 5,000 skills and can run in real time on a consumer-grade NVIDIA GPU.

Another NVIDIA paper features a neural physics method that applies AI to learn how objects (whether represented as a 3D mesh, a NeRF or a solid object generated by a text-to-3D model) would behave as they are moved in an environment.

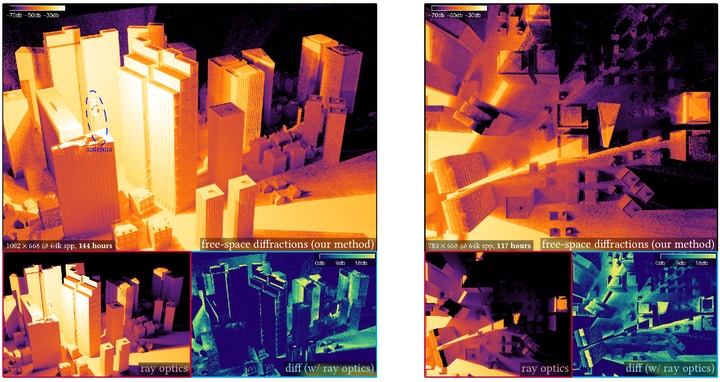

A paper written in collaboration with Carnegie Mellon University researchers develops a new kind of renderer: one that, instead of modeling physical light, can perform thermal analysis, electrostatics and fluid mechanics. Named one of five best papers at SIGGRAPH, the method is easy to parallelize and doesn’t require cumbersome model cleanup, offering new opportunities for speeding up engineering design cycles.

In the example above, the renderer performs a thermal analysis of the Mars Curiosity rover, where keeping temperatures within a specific range is critical to mission success.

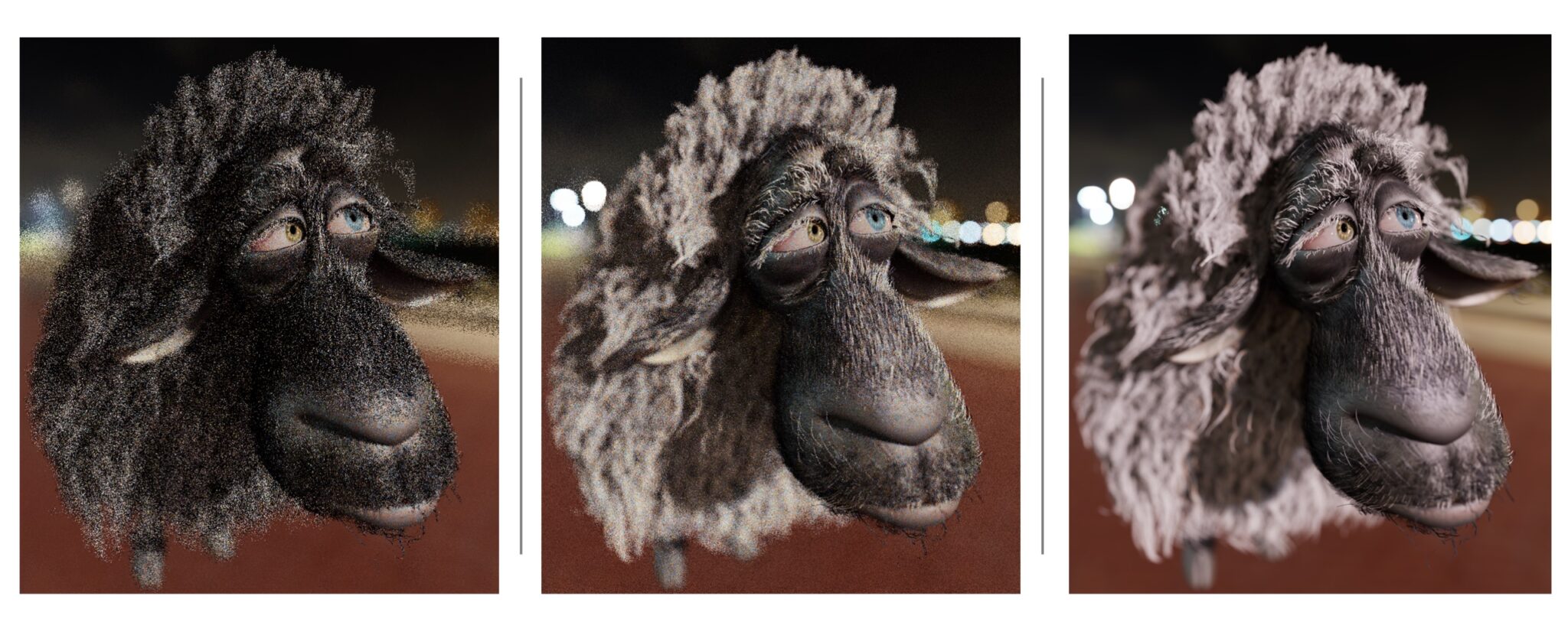

Additional simulation papers introduce a more efficient technique for modeling hair strands and a pipeline that accelerates fluid simulation by 10x.

Raising the Bar for Rendering Realism, Diffraction Simulation

Another set of NVIDIA-authored papers presents new techniques to model visible light up to 25x faster and simulate diffraction effects, such as those used in radar simulation for training self-driving cars, up to 1,000x faster.

A paper by NVIDIA and University of Waterloo researchers tackles free-space diffraction, an optical phenomenon where light spreads out or bends around the edges of objects. The team’s method can integrate with path-tracing workflows to increase the efficiency of simulating diffraction in complex scenes, offering up to 1,000x acceleration. Beyond rendering visible light, the model could also be used to simulate the longer wavelengths of radar, sound or radio waves.

Path tracing samples numerous paths (multi-bounce light rays traveling through a scene) to create a photorealistic picture. Two SIGGRAPH papers improve sampling quality for ReSTIR, a path-tracing algorithm first introduced by NVIDIA and Dartmouth College researchers at SIGGRAPH 2020 that has been key to bringing path tracing to games and other real-time rendering products.

One of these papers, a collaboration with the University of Utah, shares a new way to reuse calculated paths that increases effective sample count by up to 25x, significantly boosting image quality. The other improves sample quality by randomly mutating a subset of the light’s path. This helps denoising algorithms perform better, producing fewer visual artifacts in the final render.
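For readers new to ReSTIR, the name is short for reservoir-based spatiotemporal importance resampling, and its basic building block is weighted reservoir sampling: streaming through candidate samples and keeping one with probability proportional to its weight. The Python sketch below illustrates only that generic building block. It is not the method from either SIGGRAPH paper, and the names `Reservoir` and `pick` are hypothetical, chosen for this example.

```python
import random


class Reservoir:
    """Single-sample weighted reservoir (illustrative; not from the papers)."""

    def __init__(self):
        self.sample = None      # candidate currently held
        self.weight_sum = 0.0   # running sum of all weights seen
        self.count = 0          # number of candidates streamed

    def update(self, candidate, weight, rng):
        """Stream one candidate; keep it with probability weight / weight_sum."""
        self.count += 1
        if weight <= 0.0:
            return  # zero-weight candidates can never be selected
        self.weight_sum += weight
        if rng.random() < weight / self.weight_sum:
            self.sample = candidate


def pick(candidates, weights, rng):
    """Select one candidate with probability proportional to its weight."""
    reservoir = Reservoir()
    for candidate, weight in zip(candidates, weights):
        reservoir.update(candidate, weight, rng)
    return reservoir


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical light-path candidates with made-up contribution weights.
    result = pick(["path_a", "path_b", "path_c"], [0.2, 1.5, 0.3], rng)
    print(result.sample, result.weight_sum, result.count)
```

Because the reservoir stores only one sample and a running weight sum, it needs constant memory regardless of how many candidates are streamed, which is part of why the idea suits real-time rendering.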

Teaching AI to Think in 3D

NVIDIA researchers are also showcasing multipurpose AI tools for 3D representations and design at SIGGRAPH.

One paper introduces fVDB, a GPU-optimized framework for 3D deep learning that matches the scale of the real world. The fVDB framework provides AI infrastructure for the large spatial scale and high resolution of city-scale 3D models and NeRFs, and for segmentation and reconstruction of large-scale point clouds.

A Best Technical Paper award winner written in collaboration with Dartmouth College researchers introduces a theory for representing how 3D objects interact with light. The theory unifies a diverse spectrum of appearances into a single model.

And a collaboration with University of Tokyo, University of Toronto and Adobe Research introduces an algorithm that generates smooth, space-filling curves on 3D meshes in real time. While previous methods took hours, this framework runs in seconds and offers users a high degree of control over the output to enable interactive design.

NVIDIA at SIGGRAPH

Learn more about NVIDIA at SIGGRAPH. Special events include a fireside chat between NVIDIA founder and CEO Jensen Huang and Meta founder and CEO Mark Zuckerberg, as well as a fireside chat with Huang and Lauren Goode, senior writer at WIRED, on the impact of robotics and AI in industrial digitalization.

NVIDIA researchers will also present OpenUSD Day by NVIDIA, a full-day event showcasing how developers and industry leaders are adopting and evolving OpenUSD to build AI-enabled 3D pipelines.

NVIDIA Research has hundreds of scientists and engineers worldwide, with teams focused on topics including AI, computer graphics, computer vision, self-driving cars and robotics. See more of their latest work.