The development of autonomous vehicles represents a dramatic change in transportation systems. These vehicles are based on a cutting-edge set of technologies that allow them to drive safely and efficiently without the need for human intervention.

Computer vision is a key component of self-driving cars. It enables the vehicles to perceive and understand their surroundings, including roads, traffic, pedestrians, and other objects. To obtain this data, a vehicle uses cameras and sensors. It then makes quick decisions and drives safely in various road conditions based on what it observes.

In this article, we’ll explain how computer vision enhances these vehicles. We’ll describe object detection models, data processing with a LiDAR device, scene analysis, and route planning.

Development Timeline of Autonomous Vehicles

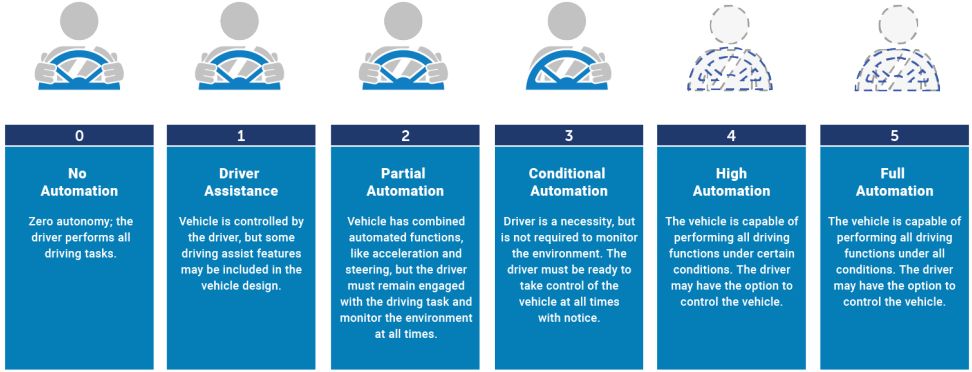

A growing number of cars with technology that allows them to operate under human supervision have been manufactured and released onto the market. Advanced driver assistance systems (ADAS) and automated driving systems (ADS) are both new forms of driving automation.

Here we present the development timeline of autonomous vehicles.

- 1971 – Daniel Wisner designed an electronic cruise control system

- 1990 – William Chundrlik developed the adaptive cruise control (ACC) system

- 2008 – Volvo invented the Automated Emergency Braking (AEB) system.

- 2013 – Introduction of computer vision methods for vehicle detection, tracking, and behavior understanding

- 2014 – Tesla launched its first commercial autonomous vehicle, the Tesla Model S

- 2015 – Algorithms for vision-based vehicle detection and tracking (collision avoidance)

- 2017 – 27 publicly available data sets for autonomous driving

- 2019 – 3D object detection (and pedestrian detection) methods for autonomous vehicles

- 2020 – LiDAR technologies and perception algorithms for autonomous driving

- 2021 – Deep learning methods for pedestrian, bike, and vehicle detection

Key CV Methods in Autonomous Vehicles

To navigate safely, autonomous vehicles employ a combination of sensors, cameras, and intelligent algorithms. To accomplish this, they require two key components: machine learning and computer vision.

Computer vision models are the eyes of the vehicle. They record images and videos of everything surrounding the vehicle using cameras and sensors, including road lines, traffic signals, people, and other vehicles. The vehicle then interprets these images and videos using specialized methods.

Machine learning methods represent the brain of the car. They analyze the information from the sensors and cameras. After that, they use specialized algorithms to identify patterns, predict outcomes, and learn from new data. Here we’ll present the main CV techniques that enable autonomous driving.

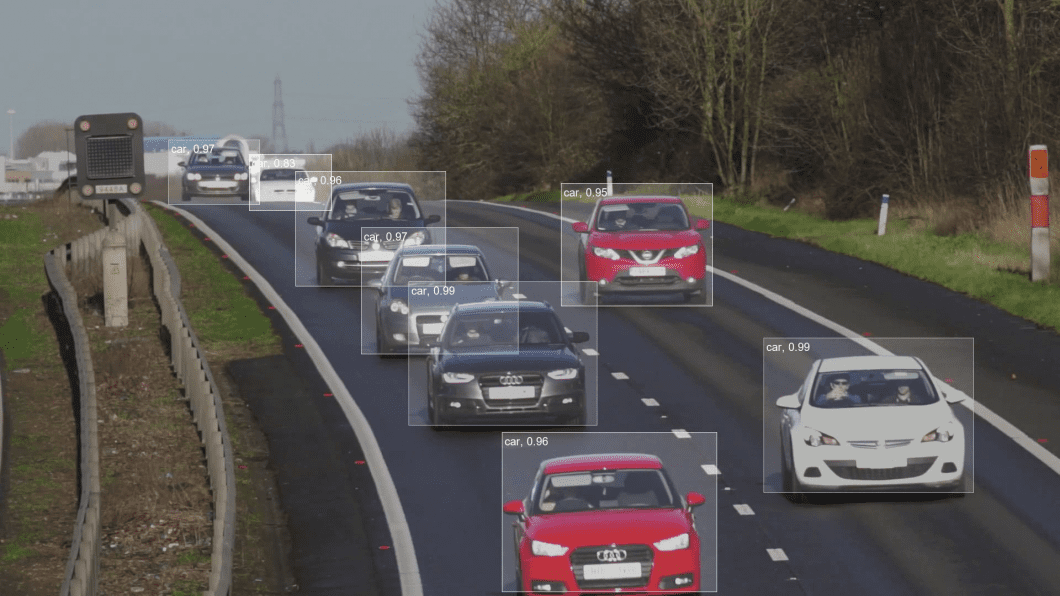

Object Detection

Training self-driving cars to recognize objects on the road and around them is a major part of making them function. To differentiate between objects like other vehicles, pedestrians, road signs, and obstacles, the vehicles use cameras and sensors. The vehicle recognizes these things in real time with speed and accuracy using sophisticated computer vision techniques.

Vehicles can recognize the appearance of the cyclist, pedestrian, or car in front of them thanks to class-specific object detection. When the control system estimates a risk of frontal collision with the detected pedestrian, cyclist, or vehicle, it triggers visual and auditory alerts that advise the driver to take preventive action.
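The article does not say how the collision risk is estimated. One simple proxy that is often used for this kind of warning is time-to-collision (TTC): the current gap divided by the closing speed. The sketch below is only an illustration under that assumption; the function names and the 2.5-second threshold are hypothetical, not values from any production system.

```python
import math


def time_to_collision(distance_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact if the closing speed stays constant.

    Returns math.inf when the gap is not shrinking.
    """
    if closing_speed_mps <= 0.0:
        return math.inf
    return distance_m / closing_speed_mps


def should_warn(distance_m: float, closing_speed_mps: float,
                threshold_s: float = 2.5) -> bool:
    """Raise an alert when the TTC drops below the threshold.

    threshold_s is an illustrative value, not a regulatory or vendor figure.
    """
    return time_to_collision(distance_m, closing_speed_mps) < threshold_s


if __name__ == "__main__":
    # A detected pedestrian 20 m ahead while the gap closes at 10 m/s.
    print(should_warn(20.0, 10.0))
```

A real system would combine this with the detector's confidence and the object's predicted path rather than rely on a single threshold.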

Li et al. (2016) introduced a unified framework to detect both cyclists and pedestrians from images. Their framework generates multiple object candidates by using a detection proposal method. They used a Faster R-CNN-based model to classify these object candidates. The detection performance is then further enhanced by a post-processing step.
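The text does not specify which post-processing step Li et al. used. As a general illustration of what detection post-processing can look like, the sketch below shows greedy non-maximum suppression (NMS), a common way to remove duplicate boxes that cover the same object. The box format `(x1, y1, x2, y2)` and the 0.5 threshold are assumptions made for this example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    candidates = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    confidences = [0.9, 0.8, 0.7]
    print(nms(candidates, confidences))
```

In the usage example, the second box overlaps the first heavily and has a lower score, so it is dropped while the distant third box is kept.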

Garcia et al. (2017) developed a sensor fusion approach for detecting vehicles in urban environments. The proposed approach integrates data from a 2D LiDAR and a monocular camera using both the unscented Kalman filter (UKF) and joint probabilistic data association. On single-lane roads, it produces encouraging vehicle detection results.
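To give a feel for why fusing two sensors helps, here is a deliberately simplified sketch. It is not the UKF or the joint probabilistic data association used by Garcia et al.; it shows only the scalar, linear Kalman measurement update, which combines two noisy estimates of the same distance by weighting each with its variance. The sensor values and variances are made up for illustration.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Fuse two independent estimates of the same quantity.

    This is the scalar Kalman update: the result leans toward the
    estimate with the smaller variance, and its variance shrinks.
    """
    gain = var_a / (var_a + var_b)
    mean = mean_a + gain * (mean_b - mean_a)
    var = (1.0 - gain) * var_a
    return mean, var


if __name__ == "__main__":
    # Hypothetical readings: camera says 10 m (variance 4), LiDAR says 12 m (variance 4).
    print(fuse(10.0, 4.0, 12.0, 4.0))
```

With equal variances the fused distance lands halfway between the two readings, and the fused variance is half of either input, which is the basic benefit that more elaborate filters build on.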

Chen et al. (2020) developed a lightweight vehicle detector that has one-tenth the model size of YOLOv3 and is three times faster. EfficientLiteDet, proposed by Murthy et al. in 2022, is a lightweight real-time approach for pedestrian and vehicle detection. To accomplish multi-scale object detection, EfficientLiteDet extends Tiny-YOLOv4 with an additional prediction head.

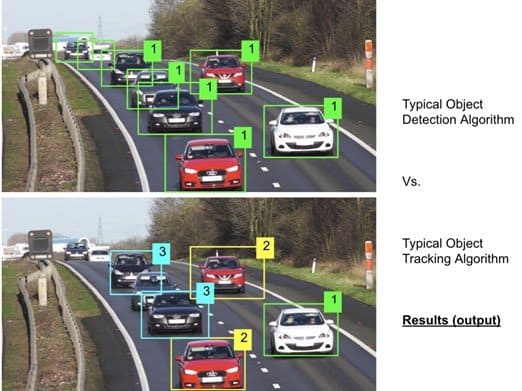

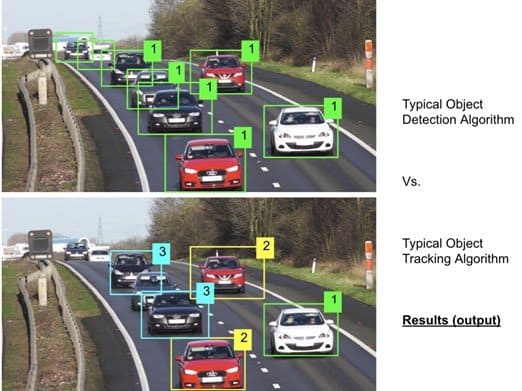

Object Tracking

When the vehicle detects something, it must keep an eye on it, particularly if it is moving. Understanding where objects such as other vehicles and people might move next is essential for path planning and preventing collisions. The vehicle predicts these objects’ next locations by tracking their movements over time. This is achieved by computer vision algorithms.

Deep SORT (Simple Online and Realtime Tracking with a Deep Association Metric) incorporates deep learning capabilities to increase tracking precision. It uses appearance information to preserve an object’s identity over time, even when it is occluded or briefly leaves the frame.

Tracking the movement of objects surrounding self-driving cars is essential. Deep SORT helps the vehicle predict the movements of these objects so that it can plan its steering and prevent collisions.

Deep SORT enables self-driving vehicles to trace the paths of objects that are detected by YOLO. This is particularly useful in traffic jams, where cars, bikes, and people move in different ways.
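The core step of any tracking-by-detection method is association: deciding which new detection belongs to which existing track. The sketch below shows a much simpler stand-in for that step, greedy matching by box overlap (IoU). It is not Deep SORT itself, which additionally uses a Kalman filter for motion prediction and a learned appearance embedding. The function names, the box format `(x1, y1, x2, y2)`, and the `min_iou` value are assumptions for this example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily match tracks to detections by descending IoU.

    tracks: dict mapping track id -> last known box.
    detections: list of boxes from the current frame.
    Returns (matches, unmatched), where matches maps track id ->
    detection index and unmatched lists detection indices that
    would start new tracks.
    """
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # remaining pairs overlap even less
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    unmatched = [j for j in range(len(detections)) if j not in used]
    return matches, unmatched


if __name__ == "__main__":
    tracks = {1: (0, 0, 10, 10), 2: (100, 100, 110, 110)}
    detections = [(101, 101, 111, 111), (1, 0, 11, 10), (200, 200, 210, 210)]
    print(associate(tracks, detections))
```

Overlap alone fails when objects cross paths or disappear behind each other, which is the gap that appearance features are meant to close.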

Semantic Segmentation

For autonomous vehicles to perceive and interpret their surroundings, semantic segmentation is essential. By classifying every pixel, semantic segmentation provides a thorough understanding of the objects in an image, such as roads, cars, signs, traffic signals, and pedestrians.

This knowledge is essential for autonomous driving systems to make intelligent decisions about their movements and interactions with their environment.

Semantic segmentation is now more accurate and efficient thanks to deep learning techniques that use neural network models. Convolutional neural networks (CNNs) and autoencoders have made pixel-level classification more precise and efficient, which has improved segmentation performance.

Moreover, autoencoders learn to reconstruct input images while preserving the details that matter for semantic segmentation. Using deep learning techniques, autonomous vehicles can perform semantic segmentation at remarkable speeds without sacrificing accuracy.

Real-time data analysis for semantic segmentation requires scene understanding and visual signal processing. To categorize pixels into distinct groups, visual signal processing techniques extract useful information from the input data, such as image attributes and features. Scene understanding denotes the ability of the vehicle to understand its surroundings using segmented images.
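The per-pixel classification described in this section can be illustrated with a small sketch. It assumes a network has already produced a score for each class at each pixel and shows only the final step: picking the highest-scoring class per pixel to form a label map. The class list and the scores are hypothetical.

```python
CLASSES = ("road", "vehicle", "pedestrian")  # hypothetical label set


def label_map(scores):
    """Turn per-pixel class scores into a per-pixel class index.

    scores[row][col] is a list with one score per class.
    """
    return [
        [max(range(len(px)), key=px.__getitem__) for px in row]
        for row in scores
    ]


def class_fraction(labels, class_index):
    """Share of pixels assigned to one class, e.g. how much is road."""
    total = sum(len(row) for row in labels)
    hits = sum(label == class_index for row in labels for label in row)
    return hits / total if total else 0.0


if __name__ == "__main__":
    # A made-up 2x2 image with three class scores per pixel.
    scores = [
        [[0.90, 0.05, 0.05], [0.2, 0.7, 0.1]],
        [[0.60, 0.30, 0.10], [0.1, 0.2, 0.7]],
    ]
    labels = label_map(scores)
    print([[CLASSES[i] for i in row] for row in labels])
    print(class_fraction(labels, CLASSES.index("road")))
```

Summaries such as the road fraction are one simple way a segmented image can feed scene understanding.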

Sensors and Datasets

Cameras

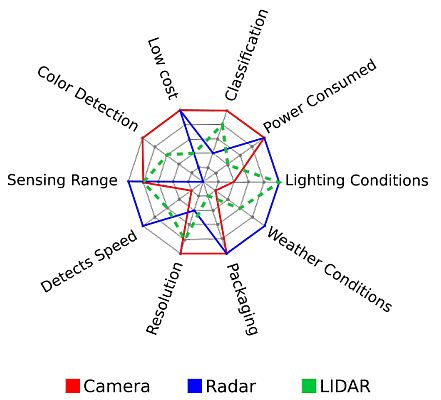

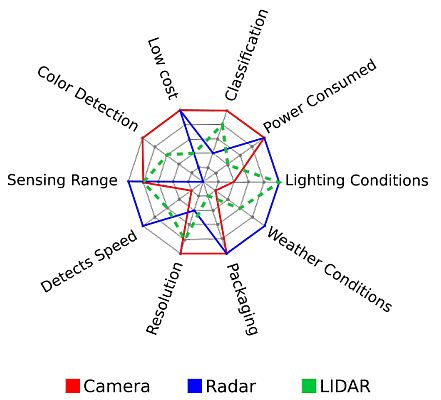

Cameras are the most widely used image sensors for detecting the visible light spectrum reflected from objects. They are less expensive than LiDAR and radar. Camera images provide straightforward two-dimensional information that is useful for lane or object detection.

Cameras have a measurement range from several millimeters to one hundred meters. However, light and weather conditions like fog, haze, mist, and smog have a major impact on camera performance, limiting its use to clear skies and daytime. Moreover, since a single high-resolution camera typically produces 20–60 MB of data per second, cameras also struggle with large data volumes.

LiDAR

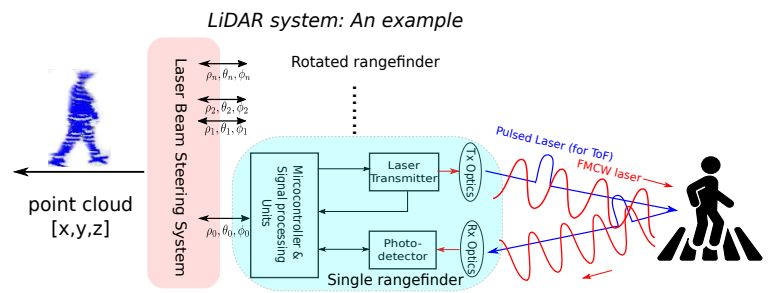

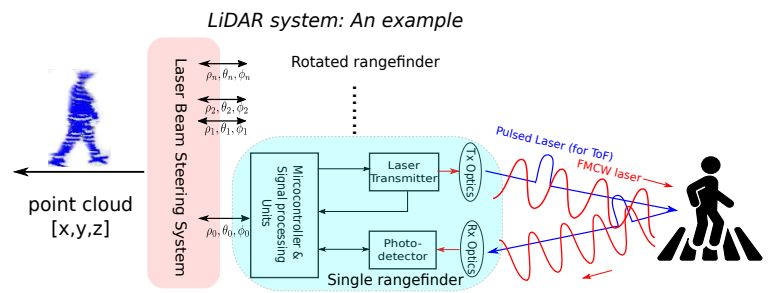

LiDAR is an active ranging sensor that measures the round-trip time of laser light pulses to determine an object’s distance. It can measure up to 200 meters thanks to its low-divergence laser beams, which reduce power degradation over distance.
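The round-trip principle comes down to one formula: the pulse travels to the object and back, so the distance is the speed of light times the measured time, divided by two. The sketch below is a minimal illustration of that arithmetic; the function names are chosen for this example.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0  # metres per second, in vacuum


def lidar_range_m(round_trip_s: float) -> float:
    """Distance to the target from the pulse's round-trip time."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def round_trip_s(range_m: float) -> float:
    """Round-trip time a pulse needs for a target at range_m."""
    return 2.0 * range_m / SPEED_OF_LIGHT_MPS


if __name__ == "__main__":
    # Echo delay for a target at the 200 m range mentioned above.
    print(round_trip_s(200.0))
```

For a target at 200 meters the echo returns after roughly 1.3 microseconds, which shows how fine the sensor's timing must be.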

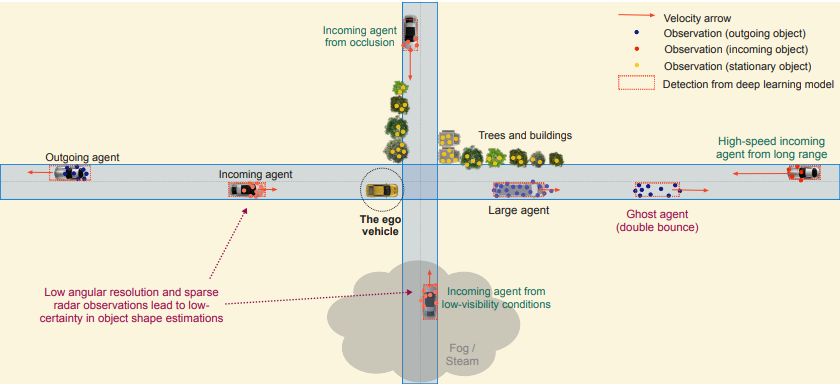

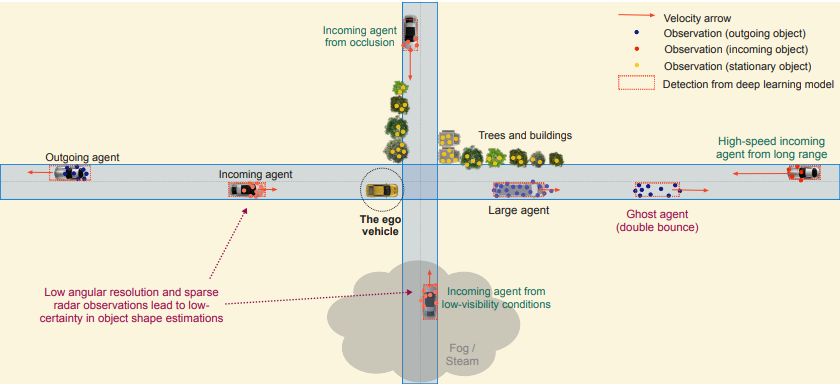

LiDAR can create precise and high-resolution maps thanks to its high-accuracy distance measuring capability. However, LiDAR is not suitable for recognizing small targets because of its sparse observations.

Furthermore, weather conditions can affect its measurement accuracy and range. LiDAR’s widespread use in autonomous vehicles is also limited by its high cost. Finally, LiDAR generates between 10 and 70 MB of data per second, which makes it difficult for onboard computer platforms to process this data in real time.

Radar and Ultrasonic Sensors

Radar detects objects by using radio waves, a form of electromagnetic radiation. It can determine the distance to an object, the object’s angle, and its relative speed. Radar systems typically run at 24 GHz or 77 GHz frequencies.

A 24 GHz radar can measure up to 70 meters, and a 77 GHz radar can measure up to 200 meters. Radar is better suited than LiDAR for measurements in environments with dust, smoke, rain, poor lighting, or uneven surfaces. The data size generated by each radar ranges from 10 to 100 KB.

Ultrasonic sensors use ultrasonic waves to measure an object’s distance. The sensor head emits an ultrasonic wave and then receives the wave reflected from the target. The time between emission and reception is measured to calculate the distance.

The advantages of ultrasonic sensors include their ease of use, excellent accuracy, and capacity to detect even minute changes in position. They are also used in automotive anti-collision and self-parking systems. However, their measuring distance is limited to less than 20 meters.
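The ultrasonic distance calculation follows the same echo principle as other time-of-flight sensors, with the speed of sound in place of the speed of light. The sketch below assumes the common linear approximation for the speed of sound in dry air, about 331.3 + 0.606 × T m/s with T in degrees Celsius; the function names and the default temperature are choices made for this example.

```python
def speed_of_sound_mps(temperature_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air (linear approximation)."""
    return 331.3 + 0.606 * temperature_c


def echo_distance_m(echo_time_s: float, temperature_c: float = 20.0) -> float:
    """Distance to the target from the emit-to-receive time.

    The wave travels out and back, hence the division by two.
    """
    return speed_of_sound_mps(temperature_c) * echo_time_s / 2.0


def echo_time_s(distance_m: float, temperature_c: float = 20.0) -> float:
    """Emit-to-receive time for a target at distance_m."""
    return 2.0 * distance_m / speed_of_sound_mps(temperature_c)


if __name__ == "__main__":
    # An echo received 10 ms after emission at 20 degrees Celsius.
    print(echo_distance_m(0.010))
```

Because sound is so much slower than light, an echo from 20 meters takes more than a tenth of a second, which is one practical reason these sensors are used at short range.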

Data Sets

The ability of fully self-driving cars to sense their surroundings is essential to their safe operation. Generally speaking, autonomous vehicles use a variety of sensors together with advanced computer vision algorithms to gather the data they need from their surroundings.

Benchmark data sets are necessary because these algorithms typically rely on deep learning methods, particularly convolutional neural networks (CNNs). Researchers from academia and industry have gathered a variety of data sets for assessing various aspects of autonomous driving systems.

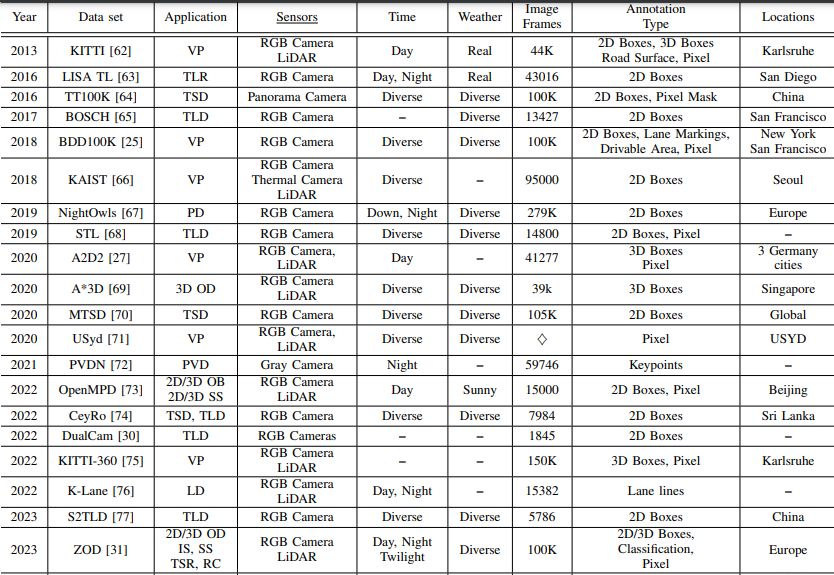

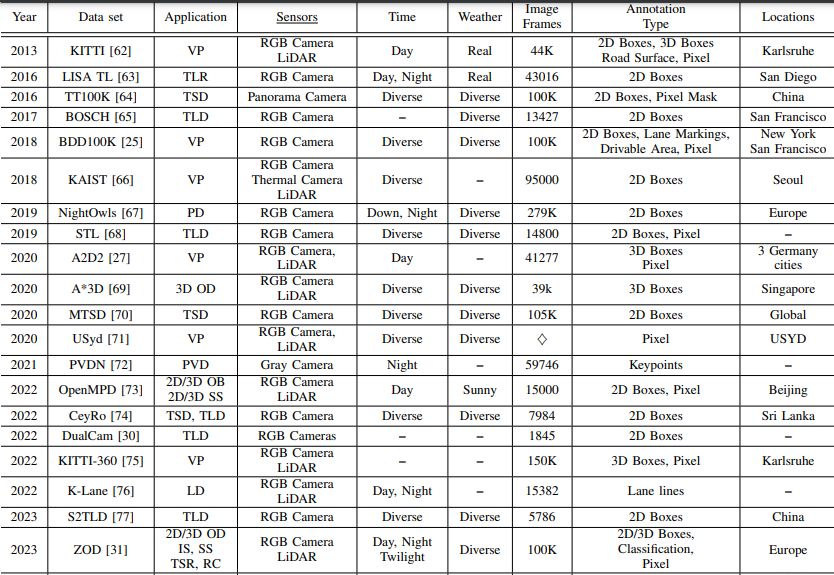

The data sets used for perception tasks in autonomous vehicles that were gathered between 2013 and 2023 are compiled in the table below. The table shows the types of sensors, the presence of adverse conditions (such as time of day or weather), the size of the data set, and the location of data collection.

It also presents the types of annotation formats and possible applications. The table therefore provides guidelines for engineers to select the best data set for their particular application.

What’s Next for Autonomous Vehicles?

Autonomous vehicles will become significantly more intelligent as artificial intelligence (AI) advances. Although the development of autonomous technology has brought many exciting breakthroughs, there are still important obstacles that must be carefully considered:

- Safety features: Ensuring the safety of these vehicles is a significant task. Developing safety mechanisms for cars is essential, e.g. obeying traffic lights, blind spot detection, and lane departure warning. The vehicles must also meet the requirements of the highway traffic safety administration.

- Reliability: These vehicles must always function properly, regardless of their location or the weather conditions. This kind of dependability is essential for gaining human drivers’ acceptance.

- Public trust: Gaining trust requires more than just demonstrating the reliability and safety of autonomous vehicles. It also means educating the public about the advantages and limitations of these vehicles and being transparent about their operation, including security and privacy.

- Smart city integration: Linking cars to the infrastructure of smart cities will result in safer roads, less traffic congestion, and more efficient traffic flow.

Frequently Asked Questions

Q1: What systems for assisted driving were predecessors of autonomous vehicles?

Answer: Advanced driver assistance systems (ADAS) and automated driving systems (ADS) are forms of driving automation that are predecessors to autonomous vehicles.

Q2: Which computer vision methods are essential for autonomous driving?

Answer: Methods like object detection, object tracking, and semantic segmentation are essential for autonomous driving systems.

Q3: What devices enable the sensing of the environment in autonomous vehicles?

Answer: Cameras, LiDAR, radars, and ultrasonic sensors all enable remote sensing of the surrounding traffic and objects.

Q4: Which factors affect the broader acceptance of autonomous vehicles?

Answer: The factors that affect broader acceptance of autonomous vehicles include their safety, reliability, public trust (including privacy), and smart city integration.