Each year, Safer Internet Day provides an opportunity to pause and reflect on the state of online safety: how far we’ve come and how we can continue to improve. For nearly a decade, Microsoft has marked the occasion by releasing research on how individuals of all ages perceive and experience risk online. Last year, we highlighted the rising importance of AI. This year, in our ninth Global Online Safety Survey, we’ve dug deeper to understand how people view and are using this technology, plus how well they can identify AI-generated content.

Our findings show that while there has been a global increase in AI users (51% have ever used it, compared to 39% in 2023), worries about the technology have also increased: 88% of people were worried about generative AI, compared to 83% last year. Further, our data confirms that people have difficulty identifying AI-generated content, which may amplify the risks of abusive AI content.

Announcing new resources to empower the responsible use of AI

At Microsoft, we’re committed to advancing AI responsibly to realize its benefits. Fundamental to this is the work we do to build a strong safety architecture and to safeguard our services from abuse. Unfortunately, we know that the creation of harmful content is one of the ways in which AI can be subject to abuse, which is why we’re taking a comprehensive approach to addressing this issue. That approach includes public awareness and education, and this year’s research underscored the need for media literacy and guidance on the responsible use of AI. Building on the launch of our Family Safety Toolkit last year, we’re pleased to announce new resources:

- Partnership with Childnet: We’re proud to partner with Childnet, a leading UK organization dedicated to making the internet a safer place for children. Together, we’re developing educational materials aimed at preventing the misuse of AI, such as the creation of deepfakes. These resources will be available to schools and families, providing valuable information on how to protect children from online risks. This partnership underscores our comprehensive approach to tackling non-consensual intimate imagery (NCII) risks, including through education for teens.

- Minecraft “CyberSafe AI: Dig Deeper”: We’re thrilled to announce the release of “CyberSafe AI: Dig Deeper,” a new educational game in Minecraft and Minecraft Education that focuses on the responsible use of AI. This game is designed to engage young minds and foster curiosity while teaching important lessons about AI in a safe and controlled game environment. Players will embark on exciting adventures, solving puzzles and challenges that highlight the ethical considerations of AI and prepare them to navigate real-world digital safety scenarios at home and at school. While the player doesn’t engage with generative AI technology directly through the game, they will work through challenges and scenarios that simulate the use of AI and learn how to use it responsibly. “Dig Deeper” is the fourth installment in a series of CyberSafe worlds from Minecraft, created in partnership with Xbox Family Safety, that have been downloaded more than 80 million times.

- AI Guide for Older Adults: We’re also proud to partner with Older Adults Technology Services (OATS) from AARP, whose programs and partners collectively engage over 500,000 older adults each year with free technology and AI training. As part of the partnership, OATS released an AI Guide for Older Adults that helps people age 50+ understand the benefits and risks of AI, along with guidance on staying safe. Training for OATS call center staff to address AI-related questions is also helping improve older adults’ confidence in their ability to use the technology and spot scams.

More resources for educators to help students navigate the digital world can be found here.

A deeper dive into this year’s Global Online Safety Survey findings

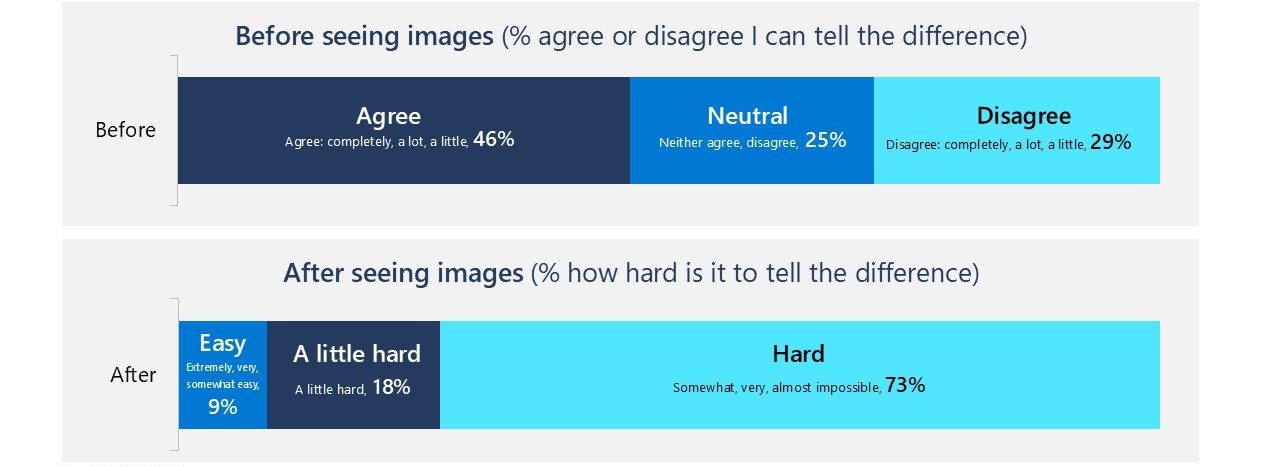

As the digital landscape evolves, we adapt our global survey questions to reflect these changes. This year, we identified an opportunity to quiz people on their ability to identify AI-generated content using images from Microsoft’s “Real or Not” quiz. We asked respondents about their confidence in spotting deepfakes before and after looking at a series of images. We found that 73% of respondents admitted that spotting AI-generated images is hard, and only 38% of images were identified correctly. We also asked people about their concerns: common worries about generative AI included scams (73%), sexual or online abuse (73%) and deepfakes (72%).

Our research also shows that people worldwide continue to be exposed to a variety of online risks, with 66% exposed to at least one risk over the last year. You can find the full results, including additional data on teen and parent experiences and perceptions of life online, here.

Reaffirming our commitment to online safety

Our approach at Microsoft is centered on empowering users by advancing safety and human rights. We know we have a responsibility to take steps to protect our users from illegal and harmful online content and conduct, as well as to contribute to a safer online ecosystem. We also have a responsibility to protect human rights, including critical values such as freedom of expression, privacy, and access to information. At Microsoft, we achieve this balance by carefully tailoring our safety interventions across our different consumer services, depending on the nature of the service and of the harm.

Our approach to advancing online safety has always been grounded in privacy and free expression. We advocate for proportionate and tailored safety regulations, supporting risk-based approaches while cautioning against over-broad measures that hinder privacy or freedom of speech. We’ll continue to engage closely with policymakers and regulators around the world on ways to tackle the biggest risks, especially to children, in thoughtful ways: productivity software like Microsoft Word, for example, should not be subject to the same requirements as a social media service. And finally, we’ll continue our advocacy for modernized legislation to protect the public from abusive AI-generated content in support of a safer digital environment for all.

Global Online Safety Survey Methodology

Microsoft has published annual research since 2016 that surveys how people of different ages use and view online technology. This latest consumer-based report is based on a survey of nearly 15,000 teens (13-17) and adults, conducted this past summer in 15 countries, that examined people’s attitudes and perceptions about online safety tools and interactions. Responses to online safety differ depending on the country. Full results can be accessed here.