I’ve been exploring Hugging Face’s SmolAgents to build AI agents in a few lines of code, and it has worked perfectly for me. From building a research agent to Agentic RAG, it has been a seamless experience. SmolAgents provides a lightweight and efficient way to create AI agents for tasks such as research assistance and question answering. Because the framework is simple, developers can focus on the logic and functionality of their agents without getting bogged down in complex configuration. However, debugging multi-agent runs is hard: the workflows are unpredictable, the logs are extensive, and most errors are "LLM dumb" issues that the model corrects on its own in later steps. Finding effective ways to validate and inspect these runs remains a key challenge. This is where OpenTelemetry comes in handy. Let’s see how it works!

Why Is Debugging an Agent Run Difficult?

Here’s why debugging agent runs is difficult:

- Unpredictability: AI agents are designed to be flexible and creative, which means they don’t always follow a fixed path. This makes it hard to predict exactly what they’ll do, and therefore hard to debug when something goes wrong.

- Complexity: AI agents often perform many steps in a single run, and each step can generate many logs (messages or data about what’s happening). This can quickly overwhelm you when you’re trying to figure out what went wrong.

- Errors are often minor: Many errors in agent runs are small mistakes (like the LLM writing incorrect code or making a wrong decision) that the agent fixes by itself in the next step. These errors aren’t always critical, but they still make it harder to track what’s happening.

Why Is Logging Important in Agent Runs?

Logging means recording what happens during an agent run. This is important for:

- Debugging: If something goes wrong, you can look at the logs to figure out what happened.

- Monitoring: In production (when your agent is being used by real users), you need to keep an eye on how it’s performing. Logs help you do that.

- Improvement: By reviewing logs, you can identify patterns or recurring issues and improve your agent over time.

What’s OpenTelemetry?

OpenTelemetry is an open standard for instrumentation, which means it provides APIs, SDKs, and tools to automatically record (or "log") what’s happening in your software as traces, metrics, and logs. In this case, it’s used to record agent runs as traces.

How does it work?

- You add some instrumentation code to your agent. This code doesn’t change how the agent works; it just records what’s happening.

- When your agent runs, OpenTelemetry automatically records each step, along with errors and other important details, as spans within a trace.

- These traces are sent to a platform (like a dashboard or monitoring tool) where you can review them later.
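The three steps above can be illustrated with a toy, standard-library-only sketch. It is not the OpenTelemetry API, and it is not how SmolagentsInstrumentor is implemented: the hypothetical instrument() helper wraps a function so that each call is recorded as a span-like dict (name, timing, status, error) and appended to a list that stands in for the monitoring platform. The wrapped function returns the same result and raises the same exception as before, which is the "doesn’t change how the agent works" property. parse_answer is a hypothetical agent step.

```python
import functools
import time


def instrument(fn, exporter):
    """Toy instrumentation: record every call to fn as a span-like dict."""

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        record = {"name": fn.__name__, "start": time.perf_counter(),
                  "status": "OK", "error": None}
        try:
            return fn(*args, **kwargs)  # the original behavior, untouched
        except Exception as exc:
            record["status"] = "ERROR"
            record["error"] = repr(exc)
            raise  # the caller still sees the original exception
        finally:
            record["end"] = time.perf_counter()
            exporter.append(record)  # "export" to a list standing in for a backend

    return wrapper


def parse_answer(text: str) -> int:
    """Hypothetical agent step: parse the model's answer as an integer."""
    return int(text)


exported = []
parse_answer = instrument(parse_answer, exported)

try:
    parse_answer("about twenty")  # a "dumb" first attempt fails...
except ValueError:
    pass
print(parse_answer("24"))  # ...and the retry succeeds

for span in exported:
    print(span["name"], span["status"], span["error"])
```

The failed first attempt and the successful retry both end up in the exported records, so a self-corrected error is still visible afterwards instead of being buried in console output.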

Why is this beneficial?

- Ease of use: You don’t have to add logging code everywhere by hand. An instrumentation library does it for you.

- Standardization: OpenTelemetry is a widely used standard, so it works with many tools and platforms.

- Clarity: The traces are structured and organized, making it easier to understand what happened during an agent run.

Logging agent runs is essential because AI agents are complex and unpredictable. OpenTelemetry makes it easy to automatically record and monitor what’s happening, so you can debug issues, improve performance, and make sure everything runs smoothly in production.

How to Use OpenTelemetry

This script sets up a Python environment with the required libraries and configures OpenTelemetry for tracing. Here’s a step-by-step explanation.

I installed the dependencies, imported the required modules, and set up OpenTelemetry in the terminal.

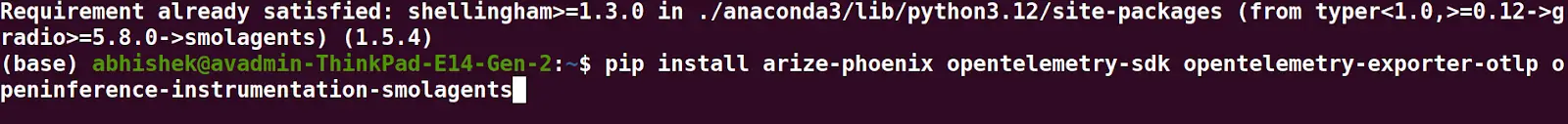

Install Dependencies

```
!pip install smolagents
!pip install arize-phoenix opentelemetry-sdk opentelemetry-exporter-otlp openinference-instrumentation-smolagents
```

- smolagents: Hugging Face’s library for building lightweight AI agents.

- arize-phoenix: An open-source observability tool for tracing, monitoring, and debugging LLM applications. It also runs the server that receives the traces and the UI that displays them.

- opentelemetry-sdk: The OpenTelemetry SDK for instrumenting, generating, and exporting telemetry data (traces, metrics, logs).

- opentelemetry-exporter-otlp: An exporter for sending telemetry data in the OTLP (OpenTelemetry Protocol) format.

- openinference-instrumentation-smolagents: A library that instruments smolagents to automatically generate OpenTelemetry traces.
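Because the smolagents API has changed quickly between releases, it can help to check which versions you actually have installed before following along. A small standard-library sketch using importlib.metadata (installed_versions is a hypothetical helper name; the package names are the ones from the pip commands above):

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names from the pip install commands above.
PACKAGES = [
    "smolagents",
    "arize-phoenix",
    "opentelemetry-sdk",
    "opentelemetry-exporter-otlp",
    "openinference-instrumentation-smolagents",
]


def installed_versions(packages=PACKAGES):
    """Map each distribution name to its installed version, or None if missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


for name, ver in installed_versions().items():
    print(f"{name}: {ver or 'NOT INSTALLED'}")
```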

Import Required Modules

```python
from opentelemetry import trace
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor
from openinference.instrumentation.smolagents import SmolagentsInstrumentor
from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk.trace.export import ConsoleSpanExporter, SimpleSpanProcessor
```

- trace: The OpenTelemetry tracing API.

- TracerProvider: The central component for creating and managing traces.

- BatchSpanProcessor: Processes spans in batches for efficient exporting.

- SmolagentsInstrumentor: Automatically instruments smolagents to generate traces.

- OTLPSpanExporter: Exports traces using the OTLP protocol over HTTP.

- ConsoleSpanExporter: Exports traces to the console (for debugging).

- SimpleSpanProcessor: Exports each span as soon as it ends, one at a time (useful for debugging or low-volume tracing).

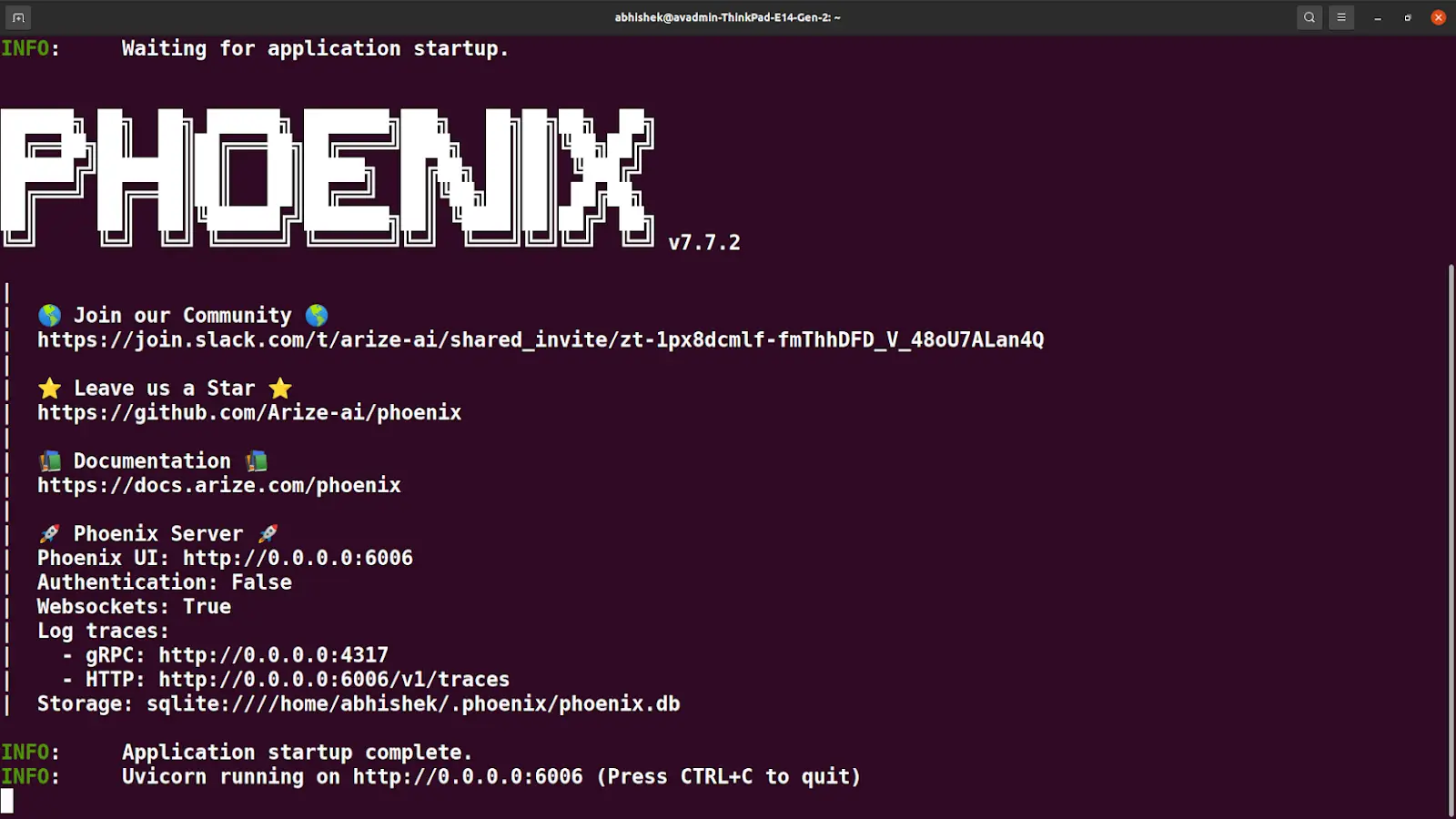

Set Up OpenTelemetry Tracing

```python
endpoint = "http://0.0.0.0:6006/v1/traces"
trace_provider = TracerProvider()
trace_provider.add_span_processor(SimpleSpanProcessor(OTLPSpanExporter(endpoint)))
```

- endpoint: The URL where traces will be sent (in this case, http://0.0.0.0:6006/v1/traces).

- trace_provider: Creates a new TracerProvider instance.

- add_span_processor: Adds a span processor to the provider. Here, SimpleSpanProcessor passes each finished span to OTLPSpanExporter, which sends it to the specified endpoint.

Instrument smolagents

```python
SmolagentsInstrumentor().instrument(tracer_provider=trace_provider)
```

This line instruments the smolagents library so that it automatically generates traces using the configured trace_provider.

In summary, this script:

- Installs the necessary Python libraries.

- Configures OpenTelemetry to collect traces from smolagents.

- Sends the traces to the specified endpoint (http://0.0.0.0:6006/v1/traces) using the OTLP protocol.

If you want to debug, you can also add a ConsoleSpanExporter to print traces to the terminal.

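Before running the agent, start the Phoenix server (for example, with python -m phoenix.server.main serve). After the run, open the Phoenix UI at http://0.0.0.0:6006 to inspect your agent’s run; the /v1/traces path is the endpoint that receives the traces, not the page to browse.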
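If the Phoenix server is not running, the exporter cannot deliver any spans and nothing appears in the UI. A quick standard-library check before starting a run (collector_reachable is a hypothetical helper; it only confirms that something accepts TCP connections on the port, not that it is Phoenix):

```python
import socket


def collector_reachable(host: str = "localhost", port: int = 6006,
                        timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


if not collector_reachable():
    print("Nothing is listening on port 6006; start Phoenix before running the agent.")
```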

Run the Agent

from smolagents import (

CodeAgent,

ToolCallingAgent,

ManagedAgent,

DuckDuckGoSearchTool,

VisitWebpageTool,

HfApiModel,

)

mannequin = HfApiModel()

agent = ToolCallingAgent(

instruments=[DuckDuckGoSearchTool(), VisitWebpageTool()],

mannequin=mannequin,

)

managed_agent = ManagedAgent(

agent=agent,

title="managed_agent",

description="That is an agent that may do net search.",

)

manager_agent = CodeAgent(

instruments=[],

mannequin=mannequin,

managed_agents=[managed_agent],

)

manager_agent.run(

"If the US retains its 2024 progress fee, what number of years will it take for the GDP to double?"

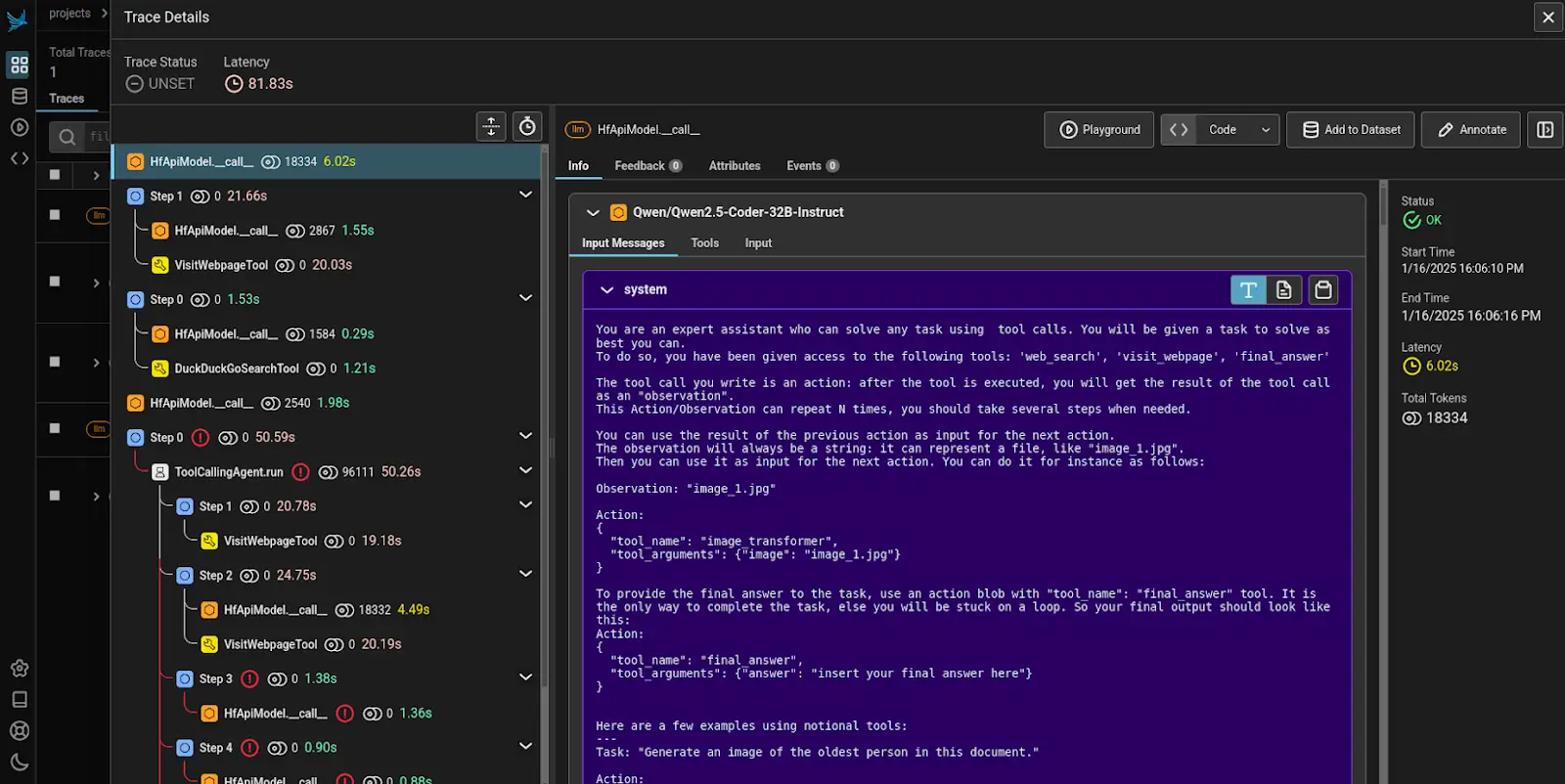

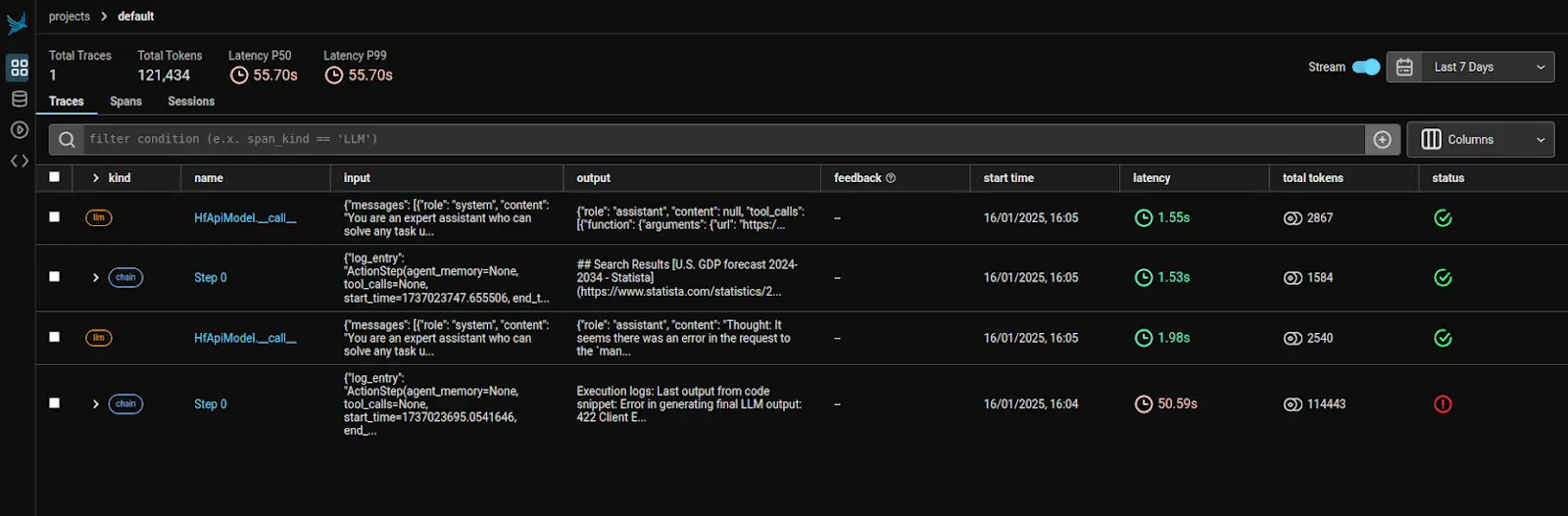

)Right here’s how the logs will look:
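The agent’s final answer can be sanity-checked by hand. With compound growth at an annual rate r, GDP doubles after T years where (1 + r)^T = 2, so T = ln 2 / ln(1 + r); the "rule of 70" (70 divided by the rate in percent) is the usual mental-math approximation. The 2.5% rate below is a hypothetical placeholder, not the actual 2024 US figure; substitute the rate the agent retrieved.

```python
import math


def years_to_double(annual_growth_rate: float) -> float:
    """Exact doubling time for compound growth: solve (1 + r) ** T == 2 for T."""
    if annual_growth_rate <= 0:
        raise ValueError("growth rate must be positive for GDP to double")
    return math.log(2) / math.log1p(annual_growth_rate)


def rule_of_70(annual_growth_rate: float) -> float:
    """Back-of-the-envelope approximation: 70 divided by the rate in percent."""
    return 70 / (annual_growth_rate * 100)


# Hypothetical rate for illustration only.
rate = 0.025
print(f"exact: {years_to_double(rate):.1f} years, rule of 70: {rule_of_70(rate):.1f} years")
```

If the agent’s answer is far from both numbers for the rate it found, the trace is the place to look for the step where it went wrong.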

Conclusion

In conclusion, debugging AI agent runs can be complex because of their unpredictable workflows, extensive logs, and minor errors that the agent corrects on its own. These challenges highlight the critical role of monitoring tools like OpenTelemetry, which provide the visibility and structure needed to streamline debugging, improve performance, and keep agents running smoothly. Try it yourself and see how OpenTelemetry can simplify your AI agent development and debugging process, making it easier to achieve reliable operations.

Explore The Agentic AI Pioneer Program to deepen your understanding of Agentic AI and unlock its full potential. Join us on this journey to discover innovative insights and applications!