TensorFlow Lite (TFLite) is a set of tools to convert and optimize TensorFlow models to run on mobile and edge devices. Google developed TensorFlow for internal use but later chose to open-source it. Today, TFLite is running on more than 4 billion devices!

As an Edge AI implementation, TensorFlow Lite greatly reduces the barriers to introducing large-scale computer vision with on-device machine learning, making it possible to run machine learning everywhere.

Deploying high-performing deep learning models on embedded devices to solve real-world problems is still a struggle with today's AI technology. Privacy, data limitations, network connection issues, and the need for optimized, more resource-efficient models are some of the key challenges that edge applications face in making real-time deep learning scalable.

In the following, we will discuss:

- TensorFlow vs. TensorFlow Lite

- Choosing the Best TF Lite Model

- Pre-trained Models for TensorFlow Lite

- How to Use TensorFlow Lite

About us: At viso.ai, we power Viso Suite, the most comprehensive computer vision platform. Teams use the enterprise solution to build, deploy, and scale custom computer vision systems dramatically faster, in a build-once, deploy-anywhere approach. We support TensorFlow for computer vision along with PyTorch and many other frameworks.

What is TensorFlow Lite?

TensorFlow Lite is an open-source deep learning framework designed for on-device inference (Edge Computing). TensorFlow Lite provides a set of tools that enables on-device machine learning by allowing developers to run their trained models on mobile, embedded, and IoT devices and computers. It supports platforms such as embedded Linux, Android, iOS, and microcontrollers (MCUs).

TensorFlow Lite is specifically optimized for on-device machine learning (Edge ML). As an Edge ML framework, it is suited to deploying models on resource-constrained edge devices. Edge intelligence, the ability to move deep learning tasks (object detection, image recognition, etc.) from the cloud to the data source, is necessary to scale computer vision in real-world use cases.

What is TensorFlow?

TensorFlow is an open-source software library for AI and machine learning with deep neural networks. TensorFlow was developed by Google Brain for internal use at Google and open-sourced in 2015. Today, it is used for both research and production at Google.

What is Edge Machine Learning?

Edge Machine Learning (Edge ML), or on-device machine learning, is essential to overcome the limitations of purely cloud-based solutions. The key benefits of Edge AI are real-time latency (no data offloading), privacy, robustness, connectivity, smaller model size, and efficiency (costs of computation and energy, watt/FPS).

To learn more about how Edge AI combines Cloud with Edge Computing for local machine learning, I recommend reading our article Edge AI – Driving Next-Gen AI Applications.

Computer Vision on Edge Devices

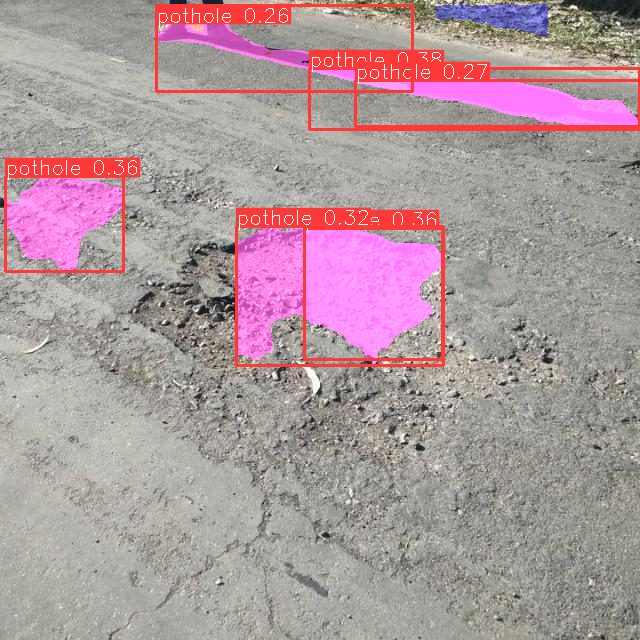

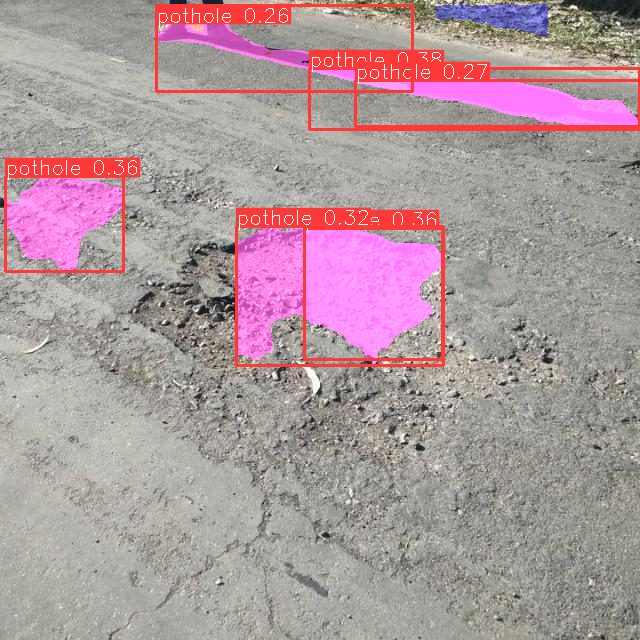

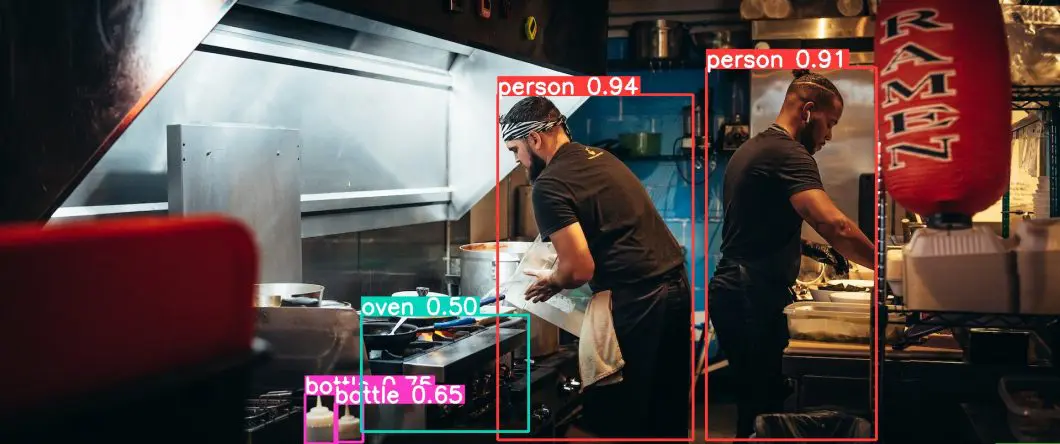

Among other tasks, object detection is of particular importance to most computer vision applications. Many existing object detection implementations can hardly run on resource-constrained edge devices. To address this, Edge ML-optimized models and lightweight variants that achieve accurate real-time object detection on edge devices have been developed.

Difference between TensorFlow Lite and TensorFlow

TensorFlow Lite is a lighter version of the original TensorFlow (TF). TF Lite is specifically designed for mobile computing platforms, embedded devices, edge computers, video game consoles, and digital cameras. TensorFlow Lite is intended to perform predictions with an already trained model (inference tasks).

TensorFlow, on the other hand, is used to build and train the ML model. In other words, TensorFlow is meant for training models, while TensorFlow Lite is more useful for inference on edge devices. TensorFlow Lite also optimizes the trained model using quantization techniques (discussed later in this article), which reduces both the memory footprint and the computational cost of running neural networks.
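To make the memory saving concrete, the snippet below sketches the affine int8 mapping that TensorFlow Lite's integer quantization is based on, where a real value is approximated as `scale * (q - zero_point)`. It is a simplified per-tensor illustration in plain NumPy, assuming asymmetric quantization over the tensor's min/max range; `quantize_int8` and `dequantize` are hypothetical helper names, not part of the TensorFlow Lite API, and the real converter also uses other schemes such as per-channel weights.

```python
import numpy as np


def quantize_int8(x: np.ndarray):
    """Map float values to int8 with one scale and zero point for the whole tensor."""
    # The representable range must include 0.0 so that zero is encoded exactly.
    x_min = min(float(x.min()), 0.0)
    x_max = max(float(x.max()), 0.0)
    scale = (x_max - x_min) / 255.0  # 256 int8 levels span the float range
    if scale == 0.0:  # all-zero tensor: any positive scale works
        scale = 1.0
    # Choose zero_point so that x_min lands on -128.
    zero_point = int(np.clip(round(-128 - x_min / scale), -128, 127))
    q = np.clip(np.round(x / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point


def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Recover approximate float values: real = scale * (q - zero_point)."""
    return scale * (q.astype(np.float32) - zero_point)


weights = np.random.default_rng(0).normal(size=1000).astype(np.float32)
q, scale, zero_point = quantize_int8(weights)
print(weights.nbytes, "->", q.nbytes, "bytes")  # prints: 4000 -> 1000 bytes
print("max abs error:", float(np.abs(weights - dequantize(q, scale, zero_point)).max()))
```

Each int8 value occupies one byte instead of four, which is where the roughly 4x reduction in weight storage comes from; the trade-off is a rounding error of about half a quantization step (`scale / 2`) per value.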

TensorFlow Lite Benefits

- Model Conversion: TensorFlow models can be efficiently converted into TensorFlow Lite models for mobile-friendly deployment. TF Lite can optimize existing models to consume less memory and compute, which is ideal for running machine learning models on mobile devices.

- Minimal Latency: TensorFlow Lite reduces inference time, which makes latency-sensitive, real-time applications ideal use cases for TensorFlow Lite.

- User-friendly: TensorFlow Lite offers a relatively simple way for mobile developers to build applications on iOS and Android devices using TensorFlow machine learning models.

- Offline inference: Edge inference does not rely on an internet connection, which means that TFLite allows developers to deploy machine learning models in remote locations or in places where an internet connection is expensive or scarce. For example, smart cameras can be trained to identify wildlife in remote locations and transmit only the relevant parts of the video feed.

Tasks that depend on machine learning models can be executed in areas far from wireless infrastructure. The offline inference capabilities of Edge ML are an integral part of most mission-critical computer vision applications that must keep running during a temporary loss of internet connection (in autonomous driving, animal monitoring, security systems, and more).

Choosing the Best TensorFlow Lite Model

Here is how to select suitable models for TensorFlow Lite deployment. For common applications like image classification or object detection, you might have to choose among several TensorFlow Lite models that vary in size, input data requirements, inference speed, and accuracy.

To make an informed decision, prioritize your primary constraint: model size, data size, inference speed, or accuracy. In general, opt for the smallest model to ensure wider device compatibility and faster inference.

- If you are unsure about your main constraint, default to model size as your deciding factor. A smaller model offers greater deployment flexibility across devices and usually results in faster inference, which improves the user experience.

- However, keep in mind that smaller models might compromise on accuracy. If accuracy is critical, consider larger models.
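The rule of thumb above can be written down as a filter-then-minimize step: drop the candidates that violate a hard constraint, then take the smallest remaining model. This is only a sketch; the `Candidate` fields, the `pick_model` helper, and any numbers you feed it are hypothetical placeholders to be replaced with measurements from your own target device.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    """Measured properties of one candidate .tflite model (fill in from your own benchmarks)."""
    name: str
    size_mb: float
    latency_ms: float
    accuracy: float


def pick_model(
    candidates: Sequence[Candidate],
    min_accuracy: Optional[float] = None,
    max_latency_ms: Optional[float] = None,
) -> Optional[Candidate]:
    """Return the smallest model that satisfies every hard constraint, or None."""
    feasible = [
        c
        for c in candidates
        if (min_accuracy is None or c.accuracy >= min_accuracy)
        and (max_latency_ms is None or c.latency_ms <= max_latency_ms)
    ]
    if not feasible:
        return None
    # Default rule from the text: prefer the smallest model; break ties by latency.
    return min(feasible, key=lambda c: (c.size_mb, c.latency_ms))
```

With no constraints the function simply returns the smallest model; adding an accuracy floor skips the small candidates that miss it. A `None` result signals that no candidate meets every constraint, so one of them has to be relaxed.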

Pre-trained Models for TensorFlow Lite

Use pre-trained, open-source TensorFlow Lite models to quickly integrate machine learning capabilities into real-time mobile and edge device applications.

There is a broad list of supported TF Lite example apps with pre-trained models for various tasks:

- Autocomplete: Generate text suggestions using a Keras language model.

- Image Classification: Identify objects, people, activities, and more across various platforms.

- Object Detection: Detect objects with bounding boxes, including animals, on different devices.

- Pose Estimation: Estimate single or multiple human poses, applicable in diverse scenarios.

- Speech Recognition: Recognize spoken keywords on various platforms.

- Gesture Recognition: Use your USB webcam to recognize gestures on Android/iOS.

- Image Segmentation: Accurately localize and label objects, people, and animals on multiple devices.

- Text Classification: Categorize text into predefined groups for content moderation and tone detection.

- On-device Recommendation: Provide personalized recommendations based on user-selected events.

- Natural Language Question Answering: Use BERT to answer questions based on text passages.

- Super Resolution: Enhance low-resolution images to higher quality.

- Audio Classification: Classify audio samples, and use a microphone on various devices.

- Video Understanding: Identify human actions in videos.

- Reinforcement Learning: Train game agents, and build games using TensorFlow Lite.

- Optical Character Recognition (OCR): Extract text from images on Android.

How to Use TensorFlow Lite

As discussed earlier, TensorFlow models can be compressed and deployed to an edge device or embedded application using TF Lite. There are two main steps to using TFLite: generating the TensorFlow Lite model and running inference. The official development workflow documentation can be found here. I will explain the key steps of using TensorFlow Lite in the following.

Data Curation for Generating a TensorFlow Lite Model

TensorFlow Lite models are stored in files with the .tflite extension, which use an efficient, portable format based on FlatBuffers. FlatBuffers is an efficient cross-platform serialization library for various programming languages that allows access to serialized data without parsing or unpacking it. This gives the format a few key advantages over the TensorFlow protocol buffer model format.

Advantages of using FlatBuffers include reduced size and faster inference, which enables TensorFlow Lite to execute efficiently on edge devices with minimal compute and memory resources. In addition, you can add metadata with human-readable model descriptions as well as machine-readable data. This is usually done to enable the automatic generation of pre-processing and post-processing pipelines during on-device inference.
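Because a FlatBuffer is read in place, a .tflite file can be sanity-checked without TensorFlow installed. The sketch below relies on two assumptions about the format: the standard FlatBuffers layout, where the buffer starts with a 4-byte little-endian offset to the root table followed by a 4-byte file identifier, and the `TFL3` identifier declared in the TensorFlow Lite schema. The function names are illustrative, not a TensorFlow API.

```python
import struct


def tflite_header(data: bytes):
    """Return (root_table_offset, file_identifier) from the first 8 bytes of a FlatBuffer."""
    if len(data) < 8:
        raise ValueError("buffer too short to be a FlatBuffer")
    # Bytes 0-3: little-endian uint32 offset of the root table.
    (root_offset,) = struct.unpack_from("<I", data, 0)
    # Bytes 4-7: the 4-character file identifier declared in the schema.
    return root_offset, data[4:8]


def looks_like_tflite(data: bytes) -> bool:
    """Cheap format check: the TensorFlow Lite schema declares the identifier "TFL3"."""
    return len(data) >= 8 and tflite_header(data)[1] == b"TFL3"


def is_tflite_file(path: str) -> bool:
    # Only the 8-byte header is read; the rest of the file is never parsed.
    with open(path, "rb") as f:
        return looks_like_tflite(f.read(8))
```

This only checks the header, so it is useful as a quick guard before handing a file to an interpreter, not as a full validation of the model.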

Ways to Generate a TensorFlow Lite Model

There are a few popular ways to generate a TensorFlow Lite model, which we will cover in the following sections.

How to Use an Existing TensorFlow Lite Model

There is a plethora of available models that TensorFlow has pre-made for specific tasks. Models for typical machine learning tasks like segmentation, pose estimation, object detection, reinforcement learning, and natural language question answering are available for public use on the TensorFlow Lite example apps website.

These pre-built models can be deployed as-is and require little to no modification. The TFLite example applications are a great choice at the beginning of projects or when starting to implement TensorFlow Lite, because they save the time of building new models from scratch.
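Once you have downloaded a pre-built .tflite model, running it from Python takes only a few calls to `tf.lite.Interpreter`. The sketch below assumes a float32 model with a single input and a single output; `run_tflite` is a hypothetical helper name, and quantized models with uint8/int8 inputs would additionally need the input scaled using the tensor's quantization parameters.

```python
import numpy as np
import tensorflow as tf


def run_tflite(model_content: bytes, input_array: np.ndarray) -> np.ndarray:
    """Run one inference pass on a float32 TFLite model held in memory."""
    # Use tf.lite.Interpreter(model_path="model.tflite") to load from disk instead.
    interpreter = tf.lite.Interpreter(model_content=model_content)
    interpreter.allocate_tensors()  # must be called before setting inputs

    input_detail = interpreter.get_input_details()[0]
    output_detail = interpreter.get_output_details()[0]

    # The array shape must match input_detail["shape"], e.g. [1, 224, 224, 3] for an image model.
    interpreter.set_tensor(input_detail["index"], input_array.astype(np.float32))
    interpreter.invoke()
    return interpreter.get_tensor(output_detail["index"])
```

Printing `interpreter.get_input_details()` for a downloaded model is also a quick way to discover the input shape and dtype it expects before wiring it into an app.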

How to Create a TensorFlow Lite Model

You can also create your own TensorFlow Lite model that serves a purpose specific to your app, trained on your own data. TensorFlow provides a model maker (TensorFlow Lite Model Maker). The Model Maker library supports tasks such as image classification, object detection, text classification, BERT question answering, audio classification, and recommendation (items are recommended using context information).

With the TensorFlow Lite Model Maker, training a TensorFlow Lite model on a custom dataset is straightforward. It uses transfer learning to reduce the amount of training data required and to shorten overall training time. The Model Maker library allows users to efficiently train a TensorFlow Lite model with their own datasets.

Here is an example of training an image classification model with fewer than 10 lines of code (it is included in the TF Lite documentation but reproduced here for convenience). This can be done once all necessary Model Maker packages are installed:

```python
from tflite_model_maker import image_classifier
from tflite_model_maker.image_classifier import DataLoader

# Load input data specific to an on-device ML application.
data = DataLoader.from_folder('flower_photos/')
train_data, test_data = data.split(0.9)

# Customize the TensorFlow model.
model = image_classifier.create(train_data)

# Evaluate the model.
loss, accuracy = model.evaluate(test_data)

# Export to TensorFlow Lite model and label file in `export_dir`.
model.export(export_dir='/tmp/')
```

In this example, the user has an image dataset in a folder called flower_photos/ (one subfolder per class) and uses it to train a TensorFlow Lite model with the pre-made image classifier task.

Convert a TensorFlow Model into a TensorFlow Lite Model

You can create a model in TensorFlow and then convert it into a TensorFlow Lite model using the TensorFlow Lite Converter. The TensorFlow Lite Converter can apply optimizations and quantization to decrease model size and latency, usually with little or no loss in detection or model accuracy.

The TensorFlow Lite Converter takes the original TensorFlow model and generates an optimized FlatBuffer, identified by the .tflite file extension. The TensorFlow Lite Converter documentation describes a Python API for converting the model.
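Below is a minimal sketch of the Python conversion path. To keep it self-contained, it converts a toy `tf.Module` (`TinyClassifier` is a hypothetical stand-in for your trained model) via `tf.lite.TFLiteConverter.from_concrete_functions`; for Keras models or SavedModel directories, the converter offers `from_keras_model` and `from_saved_model` instead. The hypothetical `quantize` flag sets `converter.optimizations = [tf.lite.Optimize.DEFAULT]`, which enables post-training quantization (dynamic range quantization when no representative dataset is provided).

```python
import tensorflow as tf


class TinyClassifier(tf.Module):
    """Hypothetical stand-in for a trained model: one dense layer with softmax over 3 classes."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([4, 3]))
        self.b = tf.Variable(tf.zeros([3]))

    # A fixed input signature lets the converter trace a concrete graph.
    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 4], dtype=tf.float32)])
    def __call__(self, x):
        return tf.nn.softmax(tf.matmul(x, self.w) + self.b)


def convert_to_tflite(module: tf.Module, quantize: bool = False) -> bytes:
    """Convert a tf.Module's __call__ into TFLite FlatBuffer bytes."""
    converter = tf.lite.TFLiteConverter.from_concrete_functions(
        [module.__call__.get_concrete_function()], module
    )
    if quantize:
        # Post-training quantization; without a representative dataset this is dynamic range quantization.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    return converter.convert()
```

Writing the returned bytes to disk (for example with `open("model.tflite", "wb")`) produces the .tflite file that the interpreter on the edge device loads.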

The Fastest Way to Use TensorFlow Lite

To avoid developing everything around the Edge ML model from scratch, you can use a computer vision platform. Viso Suite is an end-to-end solution that uses TensorFlow Lite to build, deploy, and scale real-world applications.

The Viso Platform is optimized for Edge Computer Vision and provides full edge device management, an application builder, and fully integrated deployment tools. The enterprise-grade solution helps teams move faster from prototype to production, without the need to integrate and update separate computer vision tools manually. You can find an overview of the features here.

Learn more about Viso Suite here.

What's Next With TensorFlow Lite?

Overall, lightweight versions of popular machine learning libraries will greatly facilitate the implementation of scalable computer vision solutions by moving image recognition capabilities from the cloud to edge devices connected to cameras. TFLite's streamlined deployment capabilities let developers deploy models across a wide range of devices and platforms while delivering good performance and user experience.

Since Google developed TensorFlow and uses it internally, its lightweight Edge ML variant will likely remain a popular choice for on-device inference.

To stay updated on the latest releases, news, and articles about TensorFlow Lite, follow the TensorFlow blog.