Six months in the past, LLMs.txt was launched as a groundbreaking file format designed to make web site documentation accessible for big language fashions (LLMs). Since its launch, the usual has steadily gained traction amongst builders and content material creators. Immediately, as discussions round Mannequin Context Protocols (MCP) intensify, LLMs.txt is within the highlight as a confirmed, AI-first documentation resolution that bridges the hole between human-readable content material and machine-friendly knowledge. On this article, we’ll discover the evolution of LLMs.txt, study its construction and advantages, dive into technical integrations (together with a Python module & CLI), and evaluate it to the rising MCP commonplace.

The Rise of LLMs.txt

Background and Context

Launched six months in the past, LLMs.txt was developed to handle a important problem: conventional internet recordsdata like robots.txt and sitemap.xml are designed for search engine crawlers—not for AI fashions that require concise, curated content material. LLMs.txt supplies a streamlined overview of web site documentation, permitting LLMs to shortly perceive important data with out being slowed down by extraneous particulars.

Key Factors:

- Goal: To distill web site content material right into a format optimized for AI reasoning.

- Adoption: In its quick lifespan, main platforms comparable to Mintlify, Anthropic, and Cursor have built-in LLMs.txt, highlighting its effectiveness.

- Present Tendencies: With the current surge in discussions round MCPs (Mannequin Context Protocols), the neighborhood is actively evaluating these two approaches for enhancing LLM capabilities.

The dialog on Twitter displays the speedy adoption of LLMs.txt and the thrill round its potential, whilst the controversy about MCPs grows:

- Jeremy Howard (@jeremyphoward):

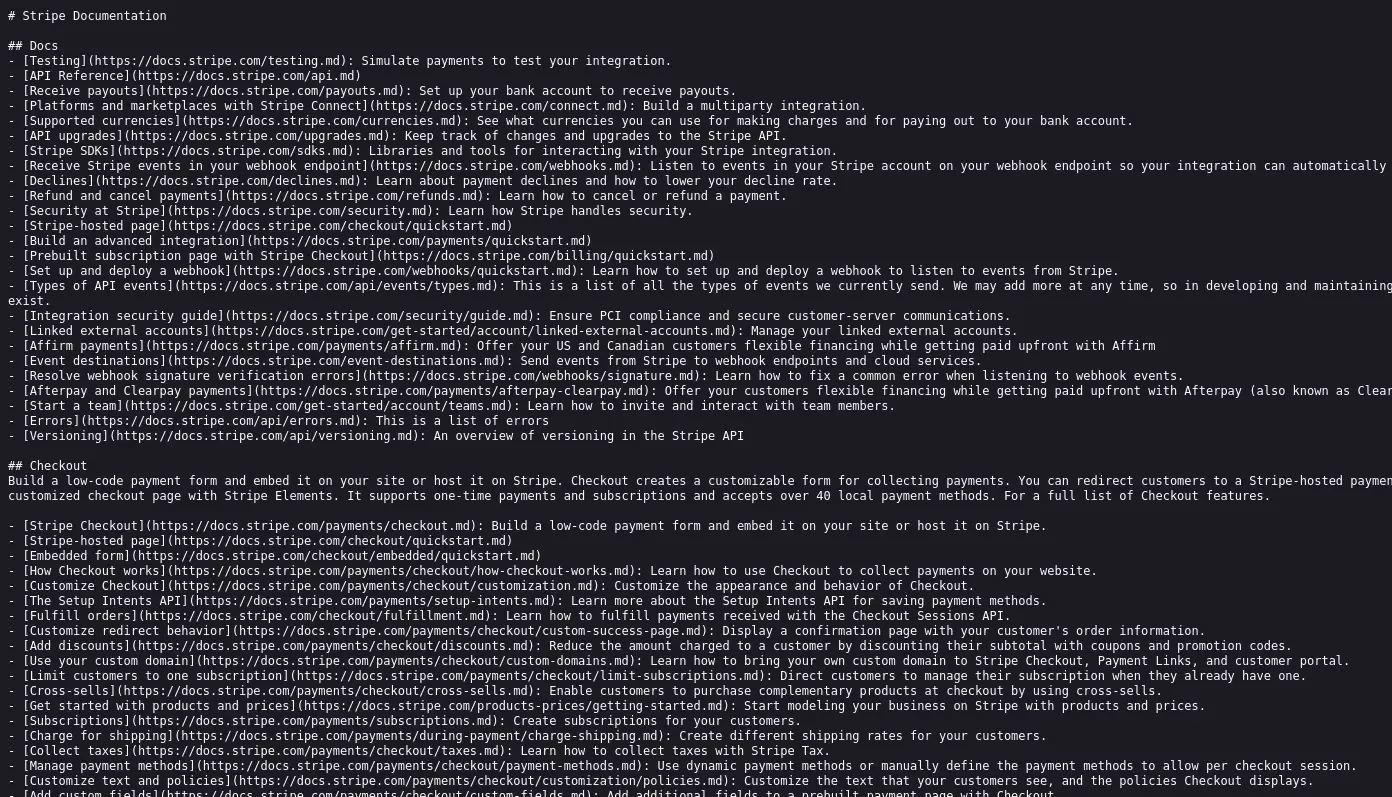

Celebrated the momentum, stating, “My proposed llms.txt commonplace actually has received an enormous quantity of momentum in current weeks.” He thanked the neighborhood—and notably @StripeDev—for his or her assist, with Stripe now internet hosting LLMs.txt on their documentation web site. - Stripe Builders (@StripeDev):

Introduced that they’ve added LLMs.txt and Markdown to their docs (docs.stripe.com/llms.txt), enabling builders to simply combine Stripe’s information into any LLM.

- Developer Insights:

Tweets from builders like @TheSeaMouse, @xpression_app, and @gpjt haven’t solely praised LLMs.txt however have additionally sparked discussions evaluating it to MCP. Some customers word that whereas LLMs.txt enhances content material ingestion, MCP guarantees to make LLMs extra actionable.

What’s an LLMs.txt File?

LLMs.txt is a Markdown file with a structured format particularly designed for making web site documentation accessible to LLMs. There are two major variations:

/llms.txt

- Goal:

Gives a high-level, curated overview of your web site’s documentation. It helps LLMs shortly grasp the positioning’s construction and find key assets. - Construction:

- An H1 with the identify of the challenge or web site. That is the one required part

- A blockquote with a brief abstract of the challenge, containing key data needed for understanding the remainder of the file

- Zero or extra markdown sections (e.g. paragraphs, lists, and so forth) of any sort besides headings, containing extra detailed details about the challenge and how one can interpret the supplied recordsdata

- Zero or extra markdown sections delimited by H2 headers, containing “file lists” of URLs the place additional element is out there

- Every “file listing” is a markdown listing, containing a required markdown hyperlink [name](url), then optionally a: and notes in regards to the file.

/llms-full.txt

- Goal:

Accommodates the whole documentation content material in a single place, providing detailed context when wanted. - Utilization:

Particularly helpful for technical API references, in-depth guides, and complete documentation eventualities.

Instance Construction Snippet:

# Mission Title

> Temporary challenge abstract

## Core Documentation

- [Quick Start](url): A concise introduction

- [API Reference](url): Detailed API documentation

## Elective

- [Additional Resources](url): Supplementary dataKey Benefits of LLMs.txt

LLMs.txt affords a number of distinct advantages over conventional internet requirements:

- Optimized for LLM Processing:

It eliminates non-essential components like navigation menus, JavaScript, and CSS, focusing solely on the important content material that LLMs want. - Environment friendly Context Administration:

On condition that LLMs function inside restricted context home windows, the concise format of LLMs.txt ensures that solely probably the most pertinent data is used. - Twin Readability:

The Markdown format makes LLMs.txt each human-friendly and simply parsed by automated instruments. - Complementary to Current Requirements:

Not like sitemap.xml or robots.txt—which serve completely different functions—LLMs.txt supplies a curated, AI-centric view of documentation.

Tips on how to Use LLMs.txt with AI Techniques?

To leverage the advantages of LLMs.txt, its content material should be fed into AI programs manually. Right here’s how completely different platforms combine LLMs.txt:

ChatGPT

- Methodology:

Customers copy the URL or full content material of the /llms-full.txt file into ChatGPT, enriching the context for extra correct responses. - Advantages:

This methodology permits ChatGPT to reference detailed documentation when wanted.

Claude

- Methodology: Since Claude at the moment lacks direct shopping capabilities, customers can paste the content material or add the file, guaranteeing complete context is supplied.

- Advantages: This method grounds Claude’s responses in dependable, up-to-date documentation.

Cursor

- Methodology: Cursor’s @Docs function permits customers so as to add an LLMs.txt hyperlink, integrating exterior content material seamlessly.

- Advantages: Enhances the contextual consciousness of Cursor, making it a strong software for builders.

A number of instruments streamline the creation of LLMs.txt recordsdata, decreasing handbook effort:

- Mintlify: Mechanically generates each /llms.txt and /llms-full.txt recordsdata for hosted documentation, guaranteeing consistency.

Look into this too: https://mintlify.com/docs/quickstart

- llmstxt by dotenv: Converts your web site’s sitemap.xml right into a compliant LLMs.txt file, integrating seamlessly along with your present workflow.

- llmstxt by Firecrawl: Makes use of internet scraping to compile your web site content material into an LLMs.txt file, minimizing handbook intervention.

Actual-World Implementations & Versatility

The flexibility of LLMs.txt recordsdata is obvious from real-world tasks like FastHTML, which observe each proposals for AI-friendly documentation:

- FastHTML Documentation:

The FastHTML challenge not solely makes use of an LLMs.txt file to offer a curated overview of its documentation but additionally affords a daily HTML docs web page with the identical URL appended by a .md extension. This twin method ensures that each human readers and LLMs can entry the content material in probably the most appropriate format. - Automated Growth:

The FastHTML challenge opts to mechanically develop its LLMs.txt file into two markdown recordsdata utilizing an XML-based construction. These two recordsdata are:- llms-ctx.txt: Accommodates the context with out the optionally available URLs.

- llms-ctx-full.txt: Contains the optionally available URLs for a extra complete context.

- These recordsdata are generated utilizing the llms_txt2ctx command-line utility, and the FastHTML documentation supplies detailed steering on how one can use them.

- Versatility Throughout Purposes:

Past technical documentation, LLMs.txt recordsdata are proving helpful in a wide range of contexts—from serving to builders navigate software program documentation to enabling companies to stipulate their buildings, breaking down complicated laws for stakeholders, and even offering private web site content material (e.g., CVs).

Notably, all nbdev tasks now generate .md variations of all pages by default. Equally, Reply.AI and quick.ai tasks utilizing nbdev have their docs regenerated with this function—exemplified by the markdown model of fastcore’s paperwork module.

Python Module & CLI for LLMs.txt

For builders trying to combine LLMs.txt into their workflows, a devoted Python module and CLI can be found to parse LLMs.txt recordsdata and create XML context paperwork appropriate for programs like Claude. This software not solely makes it straightforward to transform documentation into XML but additionally supplies each a command-line interface and a Python API.

Set up

pip set up llms-txtTips on how to use it?

CLI

After set up, llms_txt2ctx is out there in your terminal.

To get assist for the CLI:

llms_txt2ctx -hTo transform an llms.txt file to XML context and save to llms.md:

llms_txt2ctx llms.txt > llms.mdGo –optionally available True so as to add the ‘optionally available’ part of the enter file.

Python module

from llms_txt import *

samp = Path('llms-sample.txt').read_text()Use parse_llms_file to create a knowledge construction with the sections of an llms.txt file (you can too add optionally available=True if wanted):

parsed = parse_llms_file(samp)

listing(parsed)

['title', 'summary', 'info', 'sections']

parsed.title,parsed.abstractImplementation and checks

To point out how easy it’s to parse llms.txt recordsdata, right here’s a whole parser in <20 strains of code with no dependencies:

from pathlib import Path

import re,itertools

def chunked(it, chunk_sz):

it = iter(it)

return iter(lambda: listing(itertools.islice(it, chunk_sz)), [])

def parse_llms_txt(txt):

"Parse llms.txt file contents in `txt` to a `dict`"

def _p(hyperlinks):

link_pat="-s*[(?P<title>[^]]+)]((?P<url>[^)]+))(?::s*(?P<desc>.*))?"

return [re.search(link_pat, l).groupdict()

for l in re.split(r'n+', links.strip()) if l.strip()]

begin,*relaxation = re.cut up(fr'^##s*(.*?$)', txt, flags=re.MULTILINE)

sects = {okay: _p(v) for okay,v in dict(chunked(relaxation, 2)).gadgets()}

pat="^#s*(?P<title>.+?$)n+(?:^>s*(?P<abstract>.+?$)$)?n+(?P<data>.*)"

d = re.search(pat, begin.strip(), (re.MULTILINE|re.DOTALL)).groupdict()

d['sections'] = sects

return dWe now have supplied a take a look at suite in checks/test-parse.py and confirmed that this implementation passes all checks.

Python Supply Code Overview

The llms_txt Python module supplies the supply code and helpers wanted to create and use llms.txt recordsdata. Right here’s a short overview of its performance:

- File Specification: The module adheres to the llms.txt spec: an H1 title, a blockquote abstract, optionally available content material sections, and H2-delimited sections containing file lists.

- Parsing Helpers: Features comparable to parse_llms_file break down the file right into a easy knowledge construction (with keys like title, abstract, data, and sections).

For instance, parse_link(txt) extracts the title, URL, and optionally available description from a markdown hyperlink. - XML Conversion: Features like create_ctx and mk_ctx convert the parsed knowledge into an XML context file, which is particularly helpful for programs like Claude.

- Command-Line Interface: The CLI command llms_txt2ctx makes use of these helpers to course of an llms.txt file and output an XML context file. This software simplifies integrating llms.txt into varied workflows.

- Concise Implementation: The module even features a parser carried out in below 20 strains of code, leveraging regex and helper features like chunked for environment friendly processing.

For extra particulars, you possibly can seek advice from this hyperlink – https://llmstxt.org/core.html

Evaluating LLMs.txt and MCP (Mannequin Context Protocol)

Whereas each LLMs.txt and the rising Mannequin Context Protocol (MCP) goal to boost LLM capabilities, they deal with completely different challenges within the AI ecosystem. Beneath is an enhanced comparability that dives deeper into every method:

LLMs.txt

- Goal:

Focuses on offering LLMs with clear, curated content material by distilling web site documentation right into a structured Markdown format. - Implementation:

A static file maintained by web site homeowners, very best for technical documentation, API references, and complete guides. - Advantages:

- Simplifies content material ingestion.

- Simple to implement and replace.

- Enhances immediate high quality by filtering out pointless components.

MCP (Mannequin Context Protocol)

What’s MCP?

MCP is an open commonplace that creates safe, two-way connections between your knowledge and AI-powered instruments. Consider it as a USB-C port for AI purposes—a single, widespread connector that lets completely different instruments and knowledge sources “discuss” to one another.

Why MCP Matter?

As AI assistants change into integral to our day by day workflow (take into account Replit, GitHub Copilot, or Cursor IDE), guaranteeing they’ve entry to all of the context they want is essential. Immediately, integrating new knowledge sources typically requires customized code, which is messy and time-consuming. MCP simplifies this by:

- Providing Pre-built Integrations: A rising library of ready-to-use connectors.

- Offering Flexibility: Enabling seamless switching between completely different AI suppliers.

- Enhancing Safety: Guaranteeing that your knowledge stays safe inside your infrastructure.

How Does MCP Work?

MCP follows a client-server structure:

- MCP Hosts: Applications (like Claude Desktop or widespread IDEs) that wish to entry knowledge.

- MCP Shoppers: Elements sustaining a 1:1 reference to MCP servers.

- MCP Servers: Light-weight adapters that expose particular knowledge sources or instruments.

- Connection Lifecycle:

- Initialization: Alternate of protocol variations and capabilities.

- Message Alternate: Supporting request-response patterns and notifications.

- Termination: Clear shutdown, disconnection, or dealing with errors.

Actual-World Influence and Early Adoption

Think about in case your AI software may seamlessly entry your native recordsdata, databases, or distant companies with out writing customized code for each connection. MCP guarantees precisely that—simplifying how AI instruments combine with numerous knowledge sources. Early adopters are already experimenting with MCP in varied environments, streamlining workflows and decreasing improvement overhead.

Learn extra about MCP: Mannequin Context Protocol – Analytics Vidhya

Shared Motives and Key Variations

Frequent Objective: Each LLMs.txt and MCP goal to empower LLMs however they achieve this in complementary methods. LLMs.txt improves content material ingestion by offering a curated, streamlined view of web site documentation, whereas MCP extends LLM performance by enabling them to execute real-world duties. In essence, LLMs.txt helps LLMs “learn” higher, and MCP helps them “act” successfully.

Nature of the Options

- LLMs.txt:

- Static, Curated Content material Commonplace: LLMs.txt is designed as a static file that adheres to a strict Markdown-based construction. It consists of an H1 title, a blockquote abstract, and H2-delimited sections with curated hyperlink lists.

- Technical Benefits:

- Token Effectivity: By filtering out non-essential particulars (comparable to navigation menus, JavaScript, and CSS), LLMs.txt compresses complicated internet content material right into a succinct format that matches throughout the restricted context home windows of LLMs.

- Simplicity: Its format is straightforward to generate and parse utilizing commonplace textual content processing instruments (e.g., regex and Markdown parsers), making it accessible to a variety of builders.

- Enhancing Capabilities:

- Improves the standard of context supplied to an LLM, resulting in extra correct reasoning and higher response era.

- Facilitates testing and iterative refinement since its construction is predictable and machine-readable.

- MCP (Mannequin Context Protocol):

- Dynamic, Motion-Enabling Protocol: MCP is a strong, open commonplace that creates safe, two-way communications between LLMs and exterior knowledge sources or companies.

- Technical Benefits:

- Standardized API Interfacing: MCP acts like a common connector—much like a USB-C port—permitting LLMs to interface seamlessly with numerous knowledge sources (native recordsdata, databases, distant APIs, and so forth.) with out customized code for every integration.

- Actual-Time Interplay: By a client-server structure, MCP helps dynamic request-response cycles and notifications, enabling LLMs to fetch real-time knowledge and execute duties (e.g., sending emails, updating spreadsheets, or triggering workflows).

- Complexity Dealing with: MCP should deal with challenges like authentication, error dealing with, and asynchronous communication, making it extra engineering-intensive but additionally way more versatile in extending LLM capabilities.

- Enhancing Capabilities:

- Transforms LLMs from passive textual content mills into lively, task-performing assistants.

- Facilitates seamless integration of LLMs into enterprise processes and improvement workflows, boosting productiveness by means of automation.

Ease of Implementation

- LLMs.txt:

- Is comparatively simple to implement. Its creation and parsing depend on light-weight textual content processing methods that require minimal engineering overhead.

- May be maintained manually or by way of easy automation instruments.

- MCP:

- Requires a strong engineering effort. Implementing MCP includes designing safe APIs, managing a client-server structure, and constantly sustaining compatibility with evolving exterior service requirements.

- Entails creating pre-built connectors and dealing with the complexities of real-time knowledge trade.

Collectively: These improvements symbolize complementary methods in enhancing LLM capabilities. LLMs.txt ensures that LLMs have a high-quality, condensed snapshot of important content material—significantly bettering comprehension and response high quality. In the meantime, MCP elevates LLMs by permitting them to bridge the hole between static content material and dynamic motion, in the end reworking them from mere content material analyzers into interactive, task-performing programs.

Conclusion

LLMs.txt, launched six months in the past, has already carved out a significant area of interest within the realm of AI-first documentation. By offering a curated, streamlined methodology for LLMs to ingest and perceive complicated web site documentation, LLMs.txt considerably enhances the accuracy and reliability of AI responses. Its simplicity and token effectivity have made it a useful software for builders and content material creators alike.

On the identical time, the emergence of Mannequin Context Protocols (MCP) marks the subsequent evolution in LLM capabilities. MCP’s dynamic, standardized method permits LLMs to seamlessly entry and work together with exterior knowledge sources and companies reworking them from passive readers into lively, task-performing assistants. Collectively, LLMs.txt and MCP symbolize a strong synergy: whereas LLMs.txt ensures that AI fashions have the absolute best context, MCP supplies them with the means to behave upon that context.

Trying forward, the way forward for AI-driven documentation and automation seems more and more promising. As greatest practices and instruments proceed to evolve, builders can count on extra built-in, safe, and environment friendly programs that not solely improve the capabilities of LLMs but additionally redefine how we work together with digital content material. Whether or not you’re a developer striving for innovation, a enterprise proprietor aiming to optimize workflows, or an AI fanatic wanting to discover the frontier of know-how, now’s the time to dive into these requirements and unlock the complete potential of AI-first documentation.

Login to proceed studying and luxuriate in expert-curated content material.