Neural style transfer is a technique that allows us to merge two images, taking the style from one image and the content from another, resulting in a new and unique image. For example, one could transform their painting into an artwork that resembles the work of artists like Picasso or Van Gogh.

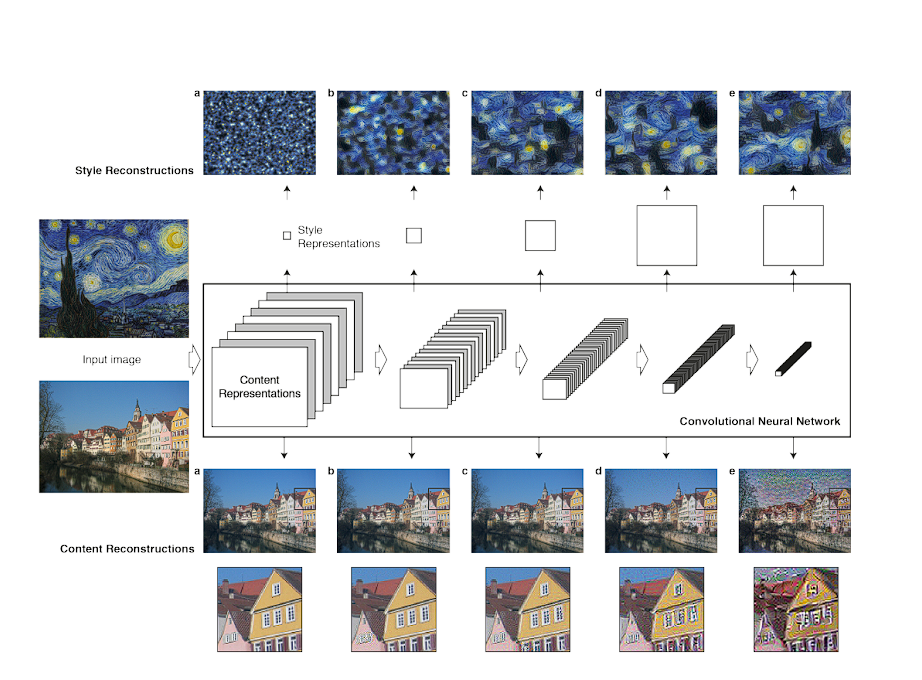

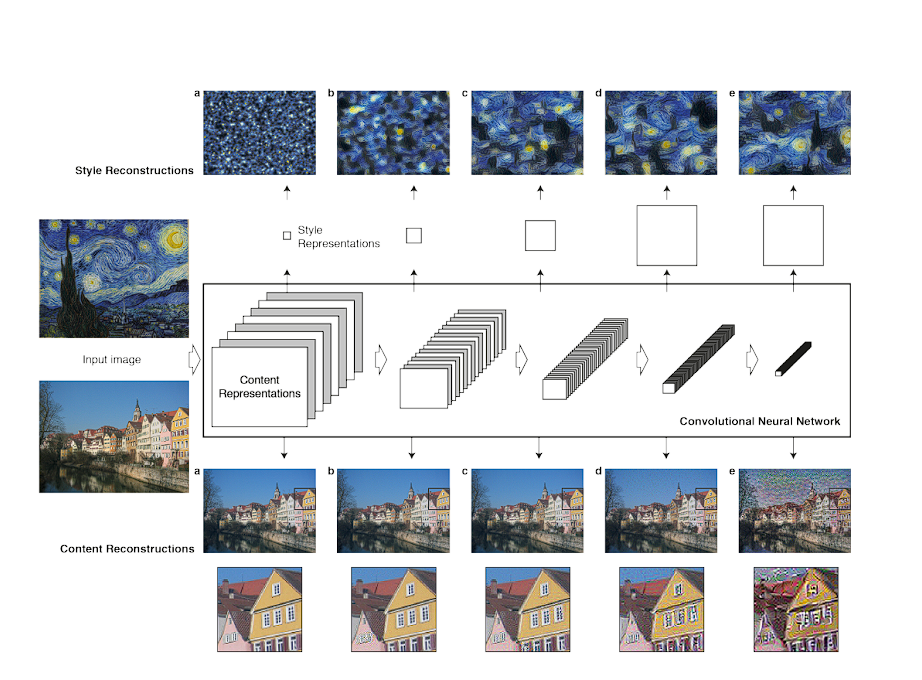

Here is how this technique works: at the start you have three images, a pixelated image, the content image, and a style image. The machine learning model transforms the pixelated image into a new image that keeps recognizable features from both the content image and the style image.

Neural Style Transfer (NST) has several use cases, such as photographers enhancing their images by applying artistic styles, marketers creating engaging content, or artists creating a new and unique art form or prototyping their artwork.

In this blog, we will explore NST and how it works, and then look at some possible scenarios where one could make use of NST.

Neural Style Transfer Explained

Neural Style Transfer follows a simple process that involves:

- Three images: the image from which the style is copied, the content image, and a starting image that is just random noise.

- Two loss values are calculated, one for style loss and another for content loss.

- NST iteratively tries to reduce the loss, at each step evaluating how close the pixelated image is to the content image and the style image. At the end of the process, after several iterations, the random noise has been turned into the final image.
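The loop described above can be sketched in a few lines of NumPy. This is only a toy illustration under loud assumptions: the raw pixel values stand in for the CNN features a real NST model would use, the "style" statistic is a simple channel-correlation matrix, and the names `losses_and_grad` and `toy_style_transfer`, the weights `alpha` and `beta`, and the learning rate are all hypothetical choices made for this example.

```python
import numpy as np

def gram(features):
    """Channel-by-channel correlations of a (channels, pixels) array."""
    return features @ features.T / features.shape[1]

def losses_and_grad(x, content, style_gram, alpha, beta):
    """Return the weighted content + style loss and its gradient with respect to x."""
    c, n = x.shape
    content_diff = x - content
    gram_diff = gram(x) - style_gram
    content_loss = np.mean(content_diff ** 2)
    style_loss = np.mean(gram_diff ** 2)
    # Hand-derived gradients of the two mean-squared-error terms.
    grad_content = 2.0 * content_diff / (c * n)
    grad_style = 4.0 * gram_diff @ x / (n * c * c)
    total = alpha * content_loss + beta * style_loss
    return total, alpha * grad_content + beta * grad_style

def toy_style_transfer(content, style, alpha=1.0, beta=1.0, lr=2.0, steps=200, seed=0):
    """Start from random noise and repeatedly step downhill on the combined loss."""
    rng = np.random.default_rng(seed)
    x = rng.random(content.shape)        # starting image: random noise
    style_gram = gram(style)             # style target, computed once
    history = []
    for _ in range(steps):
        loss, grad = losses_and_grad(x, content, style_gram, alpha, beta)
        history.append(loss)
        x = x - lr * grad                # one gradient-descent update
    return x, history

# Hypothetical 3-channel "images" with 64 pixels each, flattened to (channels, pixels).
rng = np.random.default_rng(42)
content = rng.random((3, 64))
style = rng.random((3, 64))
result, history = toy_style_transfer(content, style)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

Raising `beta` relative to `alpha` pushes the result toward the style statistics, while raising `alpha` keeps it closer to the content image.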

Difference between Style and Content Image

We have been talking about content and style images, so let’s look at how they differ from each other:

- Content Image: From the content image, the model captures the high-level structure and spatial features of the image. This involves recognizing objects, shapes, and their arrangements within the image. For example, in a photograph of a cityscape, the content representation is the arrangement of buildings, streets, and other structural elements.

- Style Image: From the style image, the model learns the artistic elements of an image, such as textures, colors, and patterns. This includes color palettes, brush strokes, and the texture of the image.

By optimizing the loss, NST takes the two distinct representations from the style and content images and combines them in a single image, the starting image that was given as input.

Background and History of Neural Style Transfer

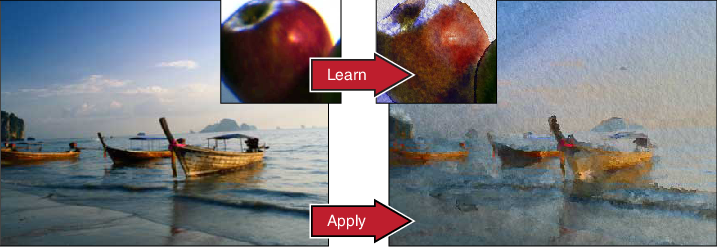

NST is an example of an image stylization problem that has been in development for decades, with image analogies and texture synthesis algorithms laying the foundation for NST.

- Image Analogies: This approach learns the “transformation” between a photo and the artwork it is trying to replicate. The algorithm analyzes the differences between the two images, and these learned differences are then used to transform a new photo into the desired artistic style.

- Image Quilting: This method focuses on replicating the texture of a style image. It first breaks down the style image into small patches and then uses these patches to replace regions of the content image.

The field of neural style transfer took a completely new turn with deep learning. Earlier methods used image processing techniques that manipulated the image at the pixel level, attempting to merge the texture of one image into another.

With deep learning, the results were impressively good. Here is the journey of NST.

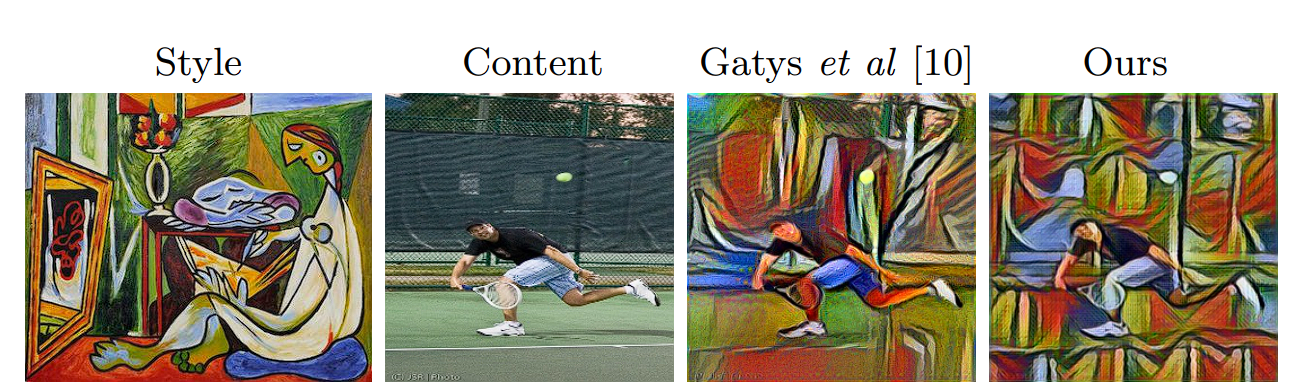

Gatys et al. (2015)

The research paper by Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge, titled “A Neural Algorithm of Artistic Style,” made an important mark in the timeline of NST.

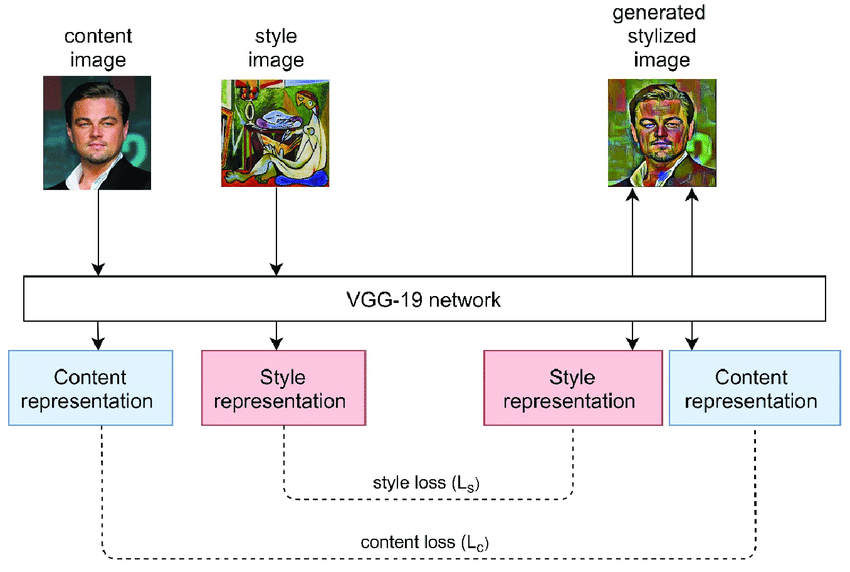

The researchers repurposed the VGG-19 architecture, which was pre-trained for object recognition, to separate and recombine the content and style of images.

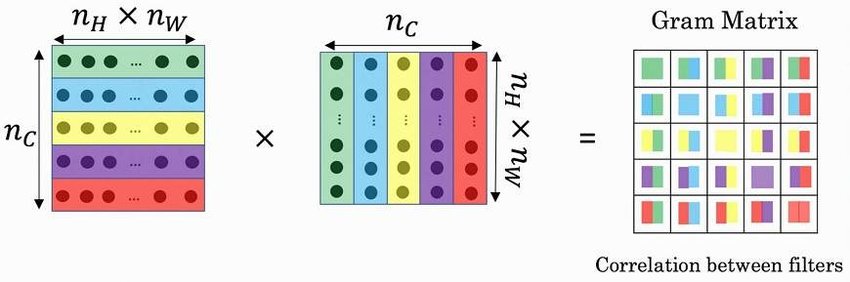

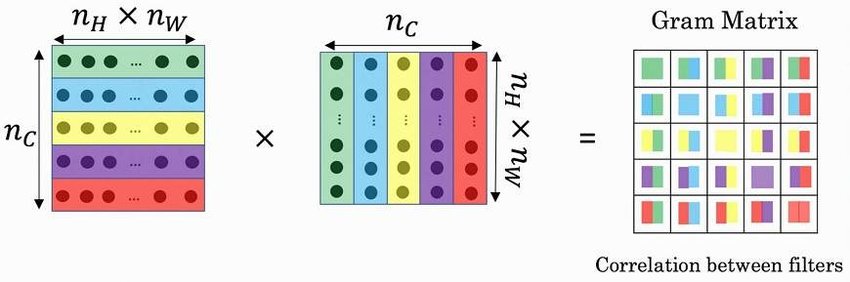

- The model analyzes the content image through the pre-trained VGG-19 model, capturing the objects and structures. It then analyzes the style image using an important concept, the Gram matrix.

- The generated image is iteratively refined by minimizing a combination of content loss and style loss.

What is a Gram Matrix?

A Gram matrix captures the style information of an image in numerical form.

An image can be represented by the relationships between the activations of features detected by a convolutional neural network (CNN). The Gram matrix focuses on these relationships, capturing how often certain features appear together in the image. Style is then matched by minimizing the mean-squared distance between the entries of the Gram matrix of the original (style) image and the Gram matrix of the image to be generated.

A high value in the Gram matrix indicates that certain features (represented by the feature maps) frequently co-occur in the image. This tells us about the image’s style. For example, a high value between a “horizontal edge” map and a “vertical edge” map would indicate that a certain geometric pattern exists in the image.
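To make the co-occurrence idea concrete, here is a minimal NumPy sketch. The three 4x4 activation maps are made up for illustration (they are not taken from a real CNN), and dividing by the number of pixels is just one common normalization choice.

```python
import numpy as np

def gram_matrix(feature_maps):
    """Gram matrix of feature maps shaped (channels, height, width)."""
    channels, height, width = feature_maps.shape
    flat = feature_maps.reshape(channels, height * width)  # one row per feature map
    return flat @ flat.T / (height * width)  # entry (i, j): mean product of maps i and j

# Three hypothetical 4x4 activation maps (1.0 means the feature fires at that pixel).
horizontal_edges = np.zeros((4, 4))
horizontal_edges[:2, :] = 1.0   # fires in the top half
vertical_edges = np.zeros((4, 4))
vertical_edges[:2, :] = 1.0     # fires in the same region, so the two co-occur
smooth_area = np.zeros((4, 4))
smooth_area[2:, :] = 1.0        # fires only in the bottom half

features = np.stack([horizontal_edges, vertical_edges, smooth_area])
gram = gram_matrix(features)
print(gram)
```

Entry (0, 1), the pair of edge maps that fire together, comes out as 0.5, while entry (0, 2) is 0 because those two maps never fire at the same pixel. Note that the matrix only records which features appear together, not where in the image they appear.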

The style loss is calculated using the Gram matrix, and the content loss is calculated from the higher layers in the model. These layers are chosen deliberately because the higher levels capture the semantic details of the image, such as shape and layout.

This model uses the technique we discussed above, where it tries to reduce the style loss and the content loss.

Johnson et al. Fast Style Transfer (2016)

While the previous model produced decent results, it was computationally expensive and slow.

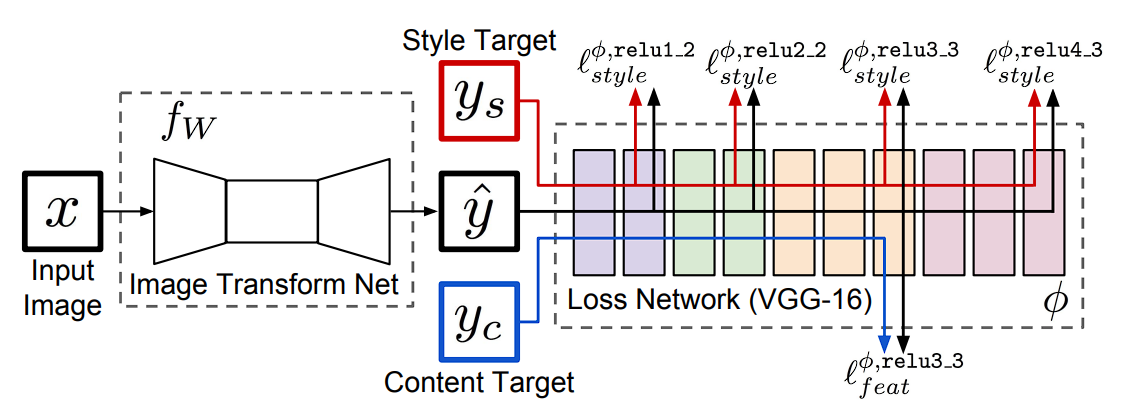

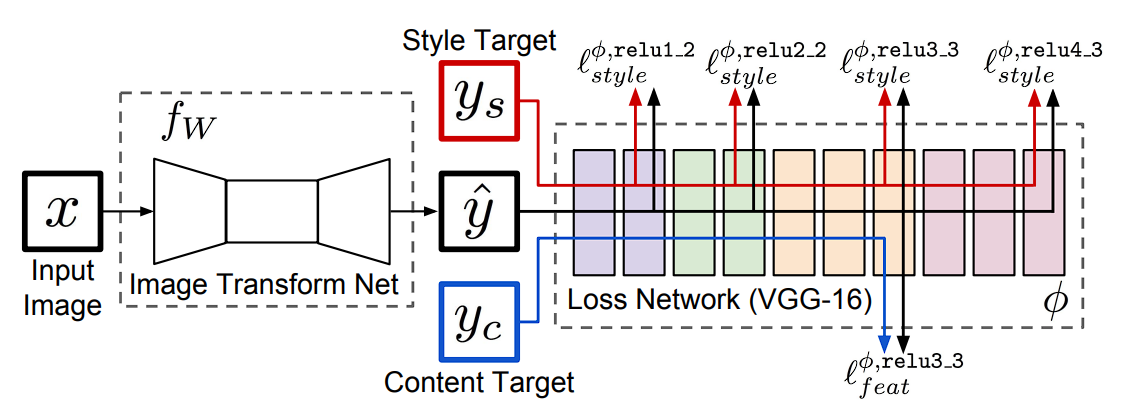

In 2016, Justin Johnson, Alexandre Alahi, and Li Fei-Fei addressed these computational limitations in their research paper titled “Perceptual Losses for Real-Time Style Transfer and Super-Resolution.”

In this paper, they introduced a feed-forward network that can perform style transfer in real time. It is trained with perceptual loss: instead of comparing images pixel by pixel, perceptual loss compares the features that a pre-trained CNN extracts from them, and uses these features to measure the style loss and the content loss. Once trained, the network stylizes a new image in a single forward pass.

The two perceptual loss functions make use of a loss network, so it is fair to say that these perceptual loss functions are themselves convolutional neural networks.

What is Perceptual Loss?

Perceptual loss has two components:

- Feature Reconstruction Loss: This loss encourages the model to produce output images that have a feature representation similar to that of the target image. The feature reconstruction loss is the squared, normalized Euclidean distance between the feature representations of the output image and the target image. Reconstructing from higher layers preserves image content and overall spatial structure but not color, texture, and exact shape. Using a feature reconstruction loss encourages the output image ŷ to be perceptually similar to the target image y without forcing them to match exactly.

- Style Reconstruction Loss: The style reconstruction loss aims to penalize differences in style, such as colors, textures, and common patterns, between the output image and the target image. The style reconstruction loss is defined using the Gram matrix of the activations.
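The two components can be sketched as follows. This is an illustrative sketch rather than the paper’s code: random arrays stand in for the activations of a loss network, and the function names are our own. The normalization by the number of feature values follows the “squared, normalized Euclidean distance” description above.

```python
import numpy as np

def feature_reconstruction_loss(feat_out, feat_target):
    """Squared Euclidean distance between feature maps, normalized by their size."""
    return np.sum((feat_out - feat_target) ** 2) / feat_out.size

def gram_matrix(feat):
    """Gram matrix of activations shaped (channels, height, width)."""
    c, h, w = feat.shape
    flat = feat.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)

def style_reconstruction_loss(feat_out, feat_target):
    """Squared Frobenius norm of the difference between the two Gram matrices."""
    return np.sum((gram_matrix(feat_out) - gram_matrix(feat_target)) ** 2)

rng = np.random.default_rng(0)
target = rng.random((8, 16, 16))   # stand-in for loss-network activations of the target
mirrored = target[:, :, ::-1]      # the same activations, mirrored left to right

print(feature_reconstruction_loss(mirrored, target))  # clearly above zero
print(style_reconstruction_loss(mirrored, target))    # zero up to rounding
```

Mirroring moves every activation to a new position, so the feature reconstruction loss sees a different image, while the Gram matrices are unchanged and the style reconstruction loss stays at zero. This is one way to see why the first term tracks spatial structure and the second tracks texture-like statistics.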

During style transfer, the perceptual loss method uses a pre-trained VGG network (VGG-16 in the original paper) to extract features from the content (C) and style (S) images.

Once the features are extracted from each image, perceptual loss calculates the difference between these features and those of the generated image. This difference represents how well the generated image has captured the features of both the content image (C) and the style image (S).

This innovation allowed for fast and efficient style transfer, making it practical for real-world applications.

Huang and Belongie (2017): Arbitrary Style Transfer

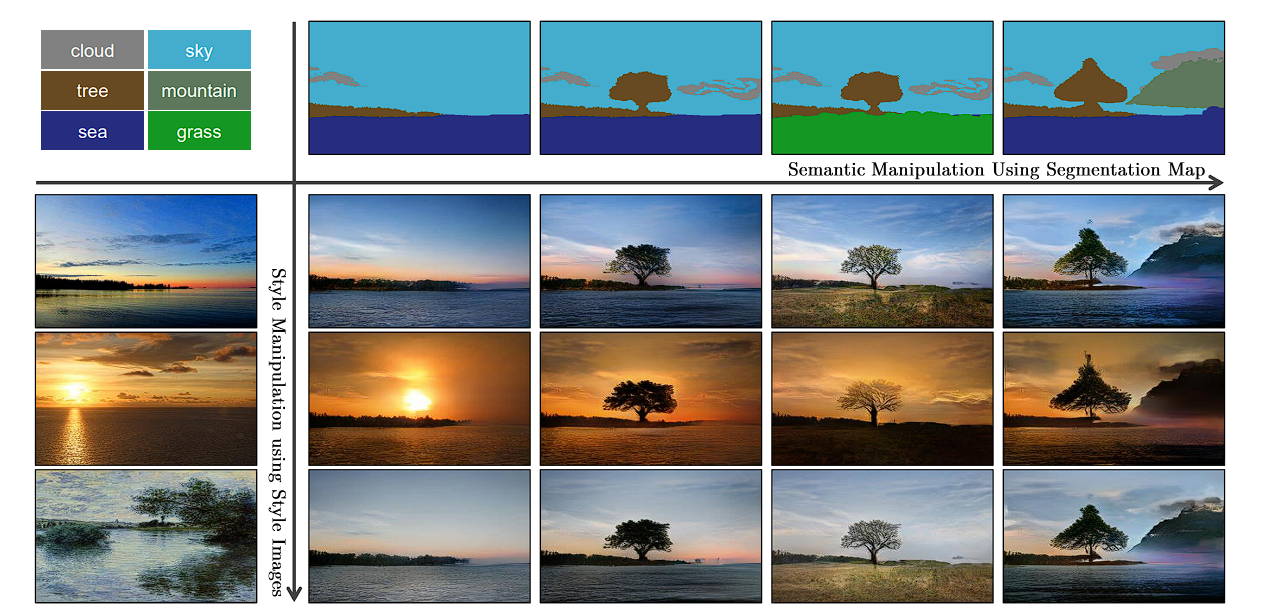

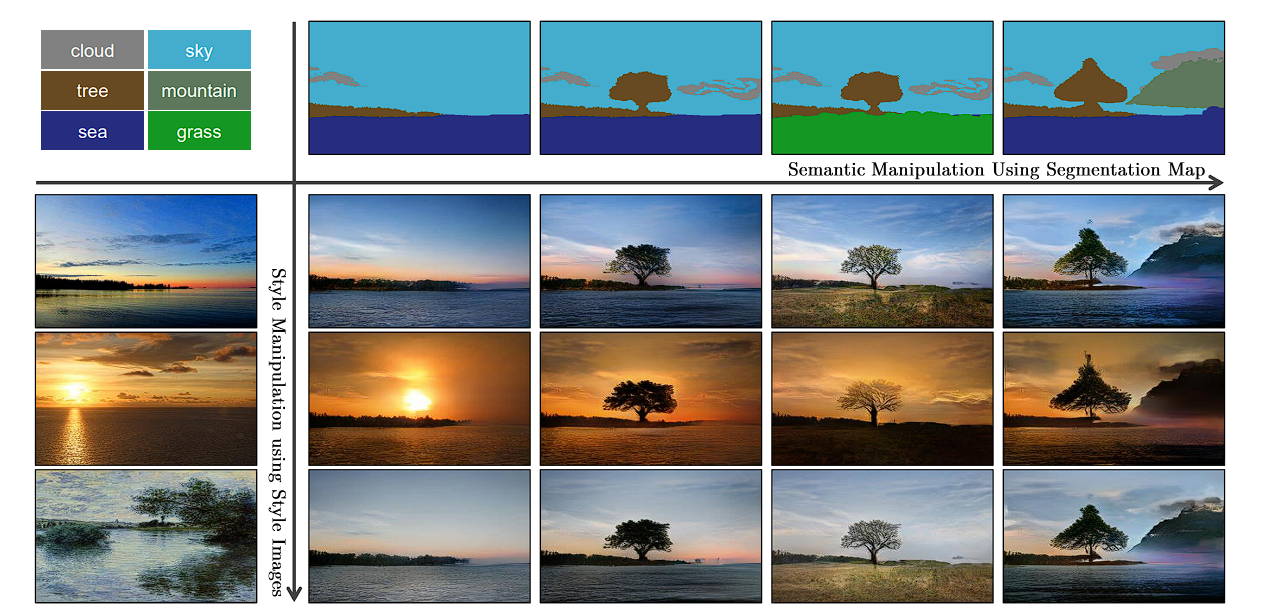

Xun Huang and Serge Belongie further advanced the field with their 2017 paper, “Arbitrary Style Transfer in Real-Time with Adaptive Instance Normalization” (AdaIN).

The model introduced in Fast Style Transfer did speed up the process. However, the model was limited to a fixed set of styles.

The arbitrary style transfer model can apply any style, not just the ones it was trained on, using AdaIN layers. This gave the user the freedom to control the content-style trade-off, color, and spatial layout of the stylization.

What is AdaIN?

AdaIN, or Adaptive Instance Normalization, aligns the statistics (mean and variance) of the content features with those of the style features. This injects the user-defined style information into the generated image.

This gave the following benefits:

- Arbitrary Styles: The ability to transfer the characteristics of any style image onto a content image, regardless of the content or style’s specific characteristics.

- Fine Control: By adjusting the parameters of AdaIN (such as the style weight or the degree of normalization), the user can control the intensity and fidelity of the style transfer.
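The statistic alignment can be sketched in NumPy as below. Treat it as a minimal sketch: the random arrays stand in for encoder features of shape (channels, height, width), and the `blend` helper with its `alpha` weight is our own naming for the style-strength control mentioned above.

```python
import numpy as np

def adain(content_feat, style_feat, eps=1e-5):
    """Shift and scale each content channel to match the style channel's mean and std."""
    c_mean = content_feat.mean(axis=(1, 2), keepdims=True)
    c_std = content_feat.std(axis=(1, 2), keepdims=True) + eps  # eps avoids division by zero
    s_mean = style_feat.mean(axis=(1, 2), keepdims=True)
    s_std = style_feat.std(axis=(1, 2), keepdims=True)
    return s_std * (content_feat - c_mean) / c_std + s_mean

def blend(content_feat, style_feat, alpha=1.0):
    """alpha=0 keeps the content features; alpha=1 applies the full AdaIN result."""
    return (1.0 - alpha) * content_feat + alpha * adain(content_feat, style_feat)

rng = np.random.default_rng(0)
content_feat = rng.normal(0.0, 1.0, size=(4, 8, 8))   # hypothetical content features
style_feat = rng.normal(3.0, 2.0, size=(4, 8, 8))     # hypothetical style features

stylized = adain(content_feat, style_feat)
half_strength = blend(content_feat, style_feat, alpha=0.5)
print(stylized.mean(axis=(1, 2)), style_feat.mean(axis=(1, 2)))
```

After `adain`, every channel of the content features has the per-channel mean and standard deviation of the style features, while the spatial pattern within each channel is still the content’s. In a full model, a decoder would then turn these features back into an image.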

SPADE (Spatially Adaptive Normalization) 2019

Park et al. introduced SPADE, which has played a major role in the field of conditional image synthesis (conditional image synthesis refers to the task of generating photorealistic images conditioned on certain input data). Here the user provides a semantic layout, and the model generates a realistic image from it.

This model uses spatially adaptive normalization to achieve its results. Earlier methods fed the semantic layout directly as input to the deep neural network, which then processed it through stacks of convolution, normalization, and nonlinearity layers. However, the normalization layers tended to wash away the input, resulting in lost semantic information. SPADE instead uses the semantic layout to modulate the activations inside the normalization layers, which preserves this information and allows user control over the semantics and style of the image.
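The core idea of a spatially adaptive normalization layer can be sketched as follows. This is a deliberately simplified sketch: in SPADE the per-pixel scale and shift are produced by a small learned convolutional network applied to the layout, whereas here a per-class lookup table (`gamma_table`, `beta_table`, both hypothetical) plays that role, and the activations are random numbers.

```python
import numpy as np

def spade_sketch(activations, seg_map, gamma_table, beta_table, eps=1e-5):
    """Normalize each channel, then scale and shift every pixel based on its class label.

    activations: (C, H, W) floats; seg_map: (H, W) integer labels;
    gamma_table, beta_table: (num_classes, C) modulation parameters.
    """
    mean = activations.mean(axis=(1, 2), keepdims=True)
    std = activations.std(axis=(1, 2), keepdims=True)
    normalized = (activations - mean) / (std + eps)
    gamma = gamma_table[seg_map].transpose(2, 0, 1)  # (H, W, C) -> (C, H, W)
    beta = beta_table[seg_map].transpose(2, 0, 1)
    return gamma * normalized + beta

rng = np.random.default_rng(0)
activations = rng.normal(size=(2, 4, 4))
seg_map = np.zeros((4, 4), dtype=int)
seg_map[2:, :] = 1                                  # class 0 on top, class 1 below
gamma_table = np.array([[1.0, 1.0], [0.5, 2.0]])    # made-up values; learned in practice
beta_table = np.array([[0.0, 0.0], [1.0, -1.0]])

out = spade_sketch(activations, seg_map, gamma_table, beta_table)
print(out.shape)
```

Because the scale and shift depend on the label at each pixel, the layout is reintroduced after normalization instead of being washed away by it.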

GAN-based Models

GANs were first introduced in 2014 and have been adapted for use in various applications, style transfer being one of them. Here are some of the popular GAN models that are used:

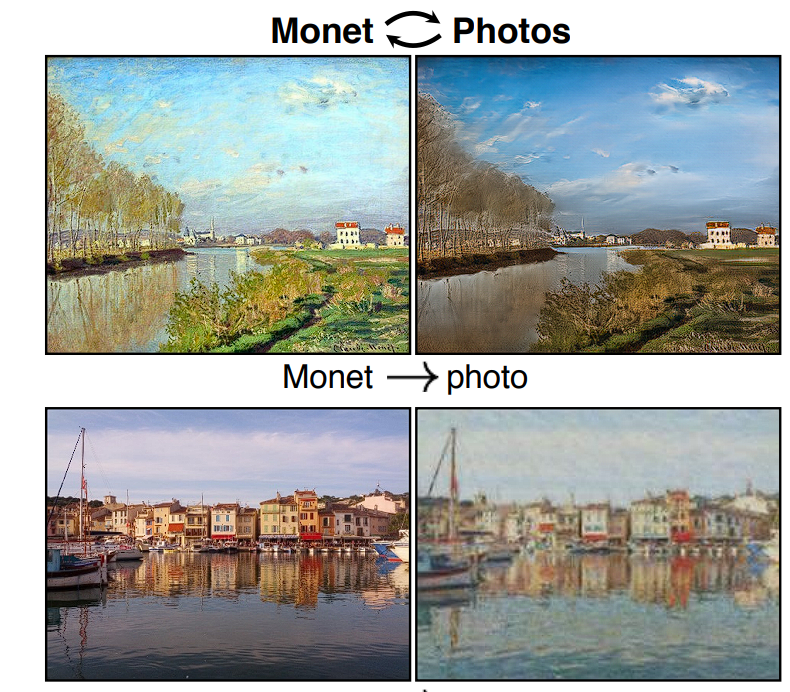

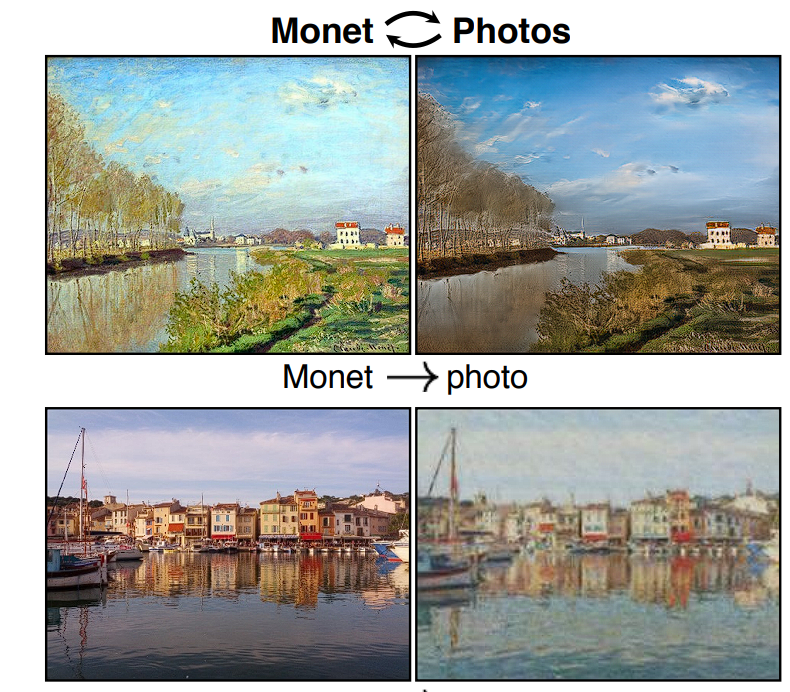

CycleGAN

- Authors: Zhu et al. (2017)

- CycleGAN uses unpaired image datasets to learn mappings between domains and achieve image-to-image translation. It can learn the transformation by looking at lots of images of horses and lots of images of zebras, and then figure out how to turn one into the other.

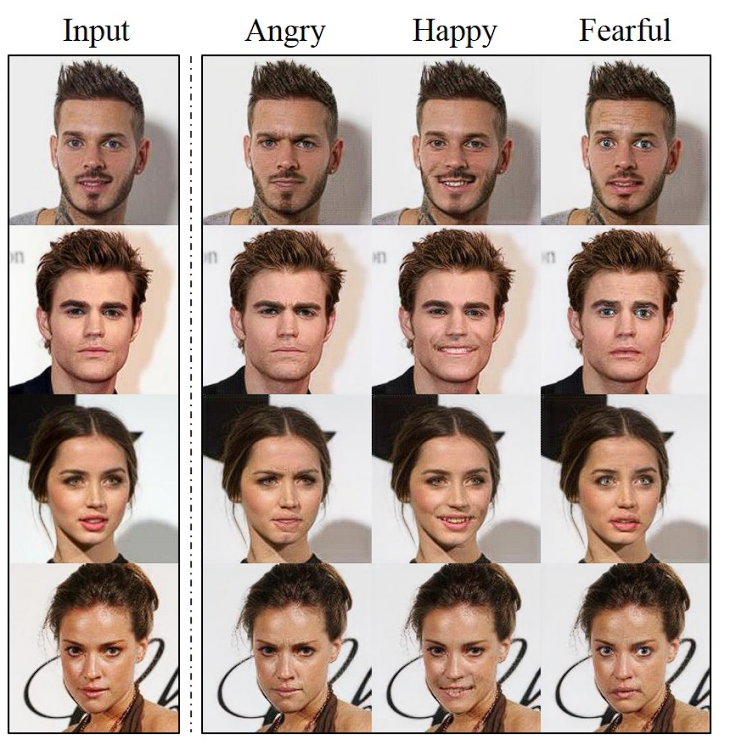

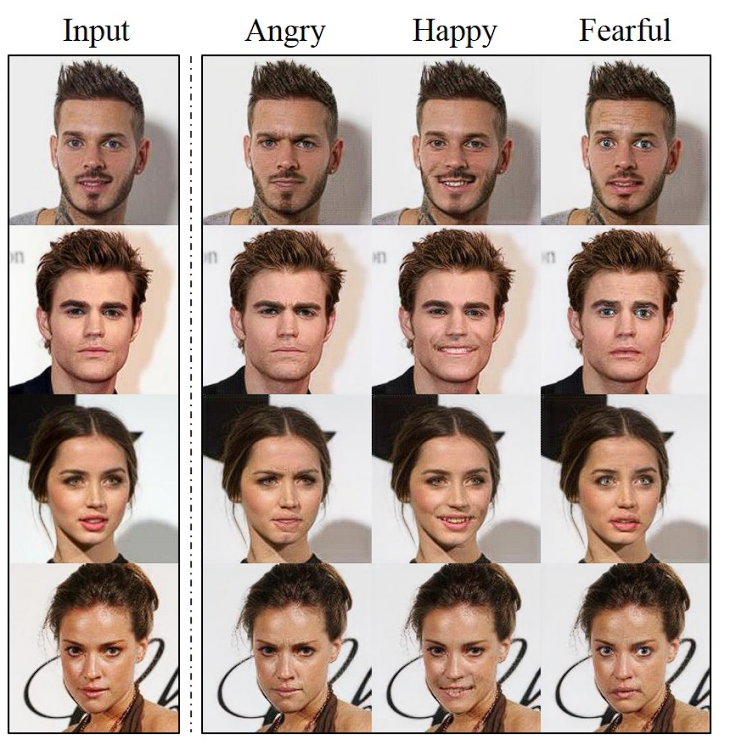

StarGAN

- Authors: Choi et al. (2018)

- StarGAN extends GANs to multi-domain image translation. Before this, GANs were able to translate between two specific domains only, e.g., photo to painting. StarGAN, however, can handle multiple domains, which means it can change hair color, add glasses, change facial expression, etc., without needing a separate model for each image translation task.

DualGAN

- Authors: Yi et al. (2017)

- DualGAN introduces dual learning, where two GANs are trained simultaneously for forward and backward transformations between two domains. DualGAN has been applied to tasks like style transfer between different artistic domains.

Applications of Neural Style Transfer

Neural Style Transfer has been used in diverse applications that span various fields. Here are some examples:

Artistic Creation

NST has revolutionized the world of art creation by enabling artists to experiment by blending content from one image with the style of another. This way artists can create unique and visually stunning pieces.

Digital artists can use NST to experiment with different styles quickly, allowing them to prototype and explore new forms of artistic creation.

This has introduced a new way of creating art, a hybrid form. For example, artists can combine classical painting styles with modern photography, producing a new hybrid art form.

Moreover, these deep learning models are seen in various applications on mobile and web platforms:

- Applications like Prisma and DeepArt are powered by NST, enabling them to apply artistic filters to user photos and making it easy for everyday people to explore art.

- Websites and software like Deep Dream Generator and Adobe Photoshop’s Neural Filters offer NST capabilities to consumers and digital artists.

Image Enhancement

NST is also used extensively to enhance and stylize images, giving new life to older photos that may be blurred or have lost their colors. This gives both everyday people and photographers new opportunities to restore their images.

For example, photographers can apply artistic styles to their images and transform them to a particular style quickly, without needing to tune the images manually.

Video Enhancement

Videos are image frames stacked together, so NST can be applied to videos as well by applying a style to the individual frames. This has immense potential in the world of entertainment and movie creation.
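The frame-by-frame idea can be sketched as below. The `stylize_frame` function is only a placeholder (a fixed warm tint) standing in for a trained style transfer model, and the video is a random array of shape (frames, height, width, 3); both are assumptions made for this example.

```python
import numpy as np

def stylize_frame(frame):
    """Placeholder for a trained style transfer model (hypothetical): applies a warm tint."""
    tint = np.array([1.0, 0.9, 0.7])
    return np.clip(frame * tint, 0.0, 1.0)

def stylize_video(frames, stylizer=stylize_frame):
    """Apply the stylizer to every frame of a (num_frames, H, W, 3) array."""
    return np.stack([stylizer(frame) for frame in frames])

video = np.random.default_rng(0).random((5, 32, 32, 3))  # five made-up RGB frames
styled = stylize_video(video)
print(styled.shape)
```

Because each frame is processed independently here, nothing in this sketch enforces consistency between consecutive frames.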

For example, directors and animators can use NST to apply unique visual styles to movies and animations without investing heavily in dedicated professionals, as the final video can be edited and enhanced to give it a cinematic look or any other style they like. This is especially useful for individual movie creators.

What’s Next with NST

In this blog, we looked at how NST works by taking a style image and a content image and combining them, turning a pixelated image into an image that blends the style representation and the content representation. This is done by iteratively reducing the style loss and the content loss.

We then looked at how NST has progressed over time, from its inception in 2015, when it used Gram matrices, to perceptual loss and GANs.

Concluding this blog, we can say NST has revolutionized art, photography, and media, enabling the creation of personalized art and creative marketing materials by giving individuals the ability to create art forms that would not have been possible before.

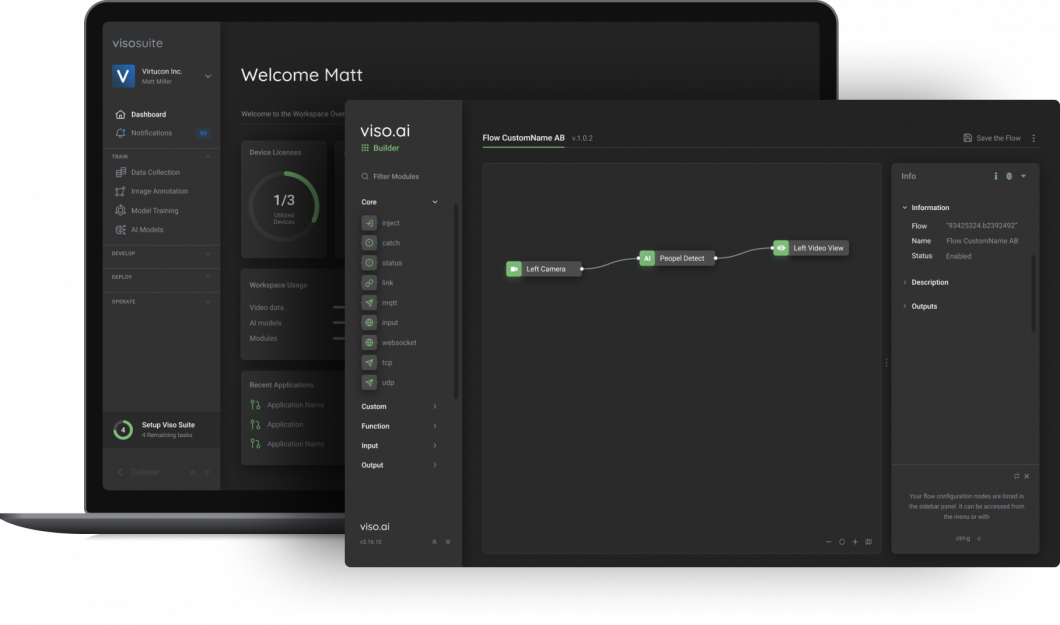
