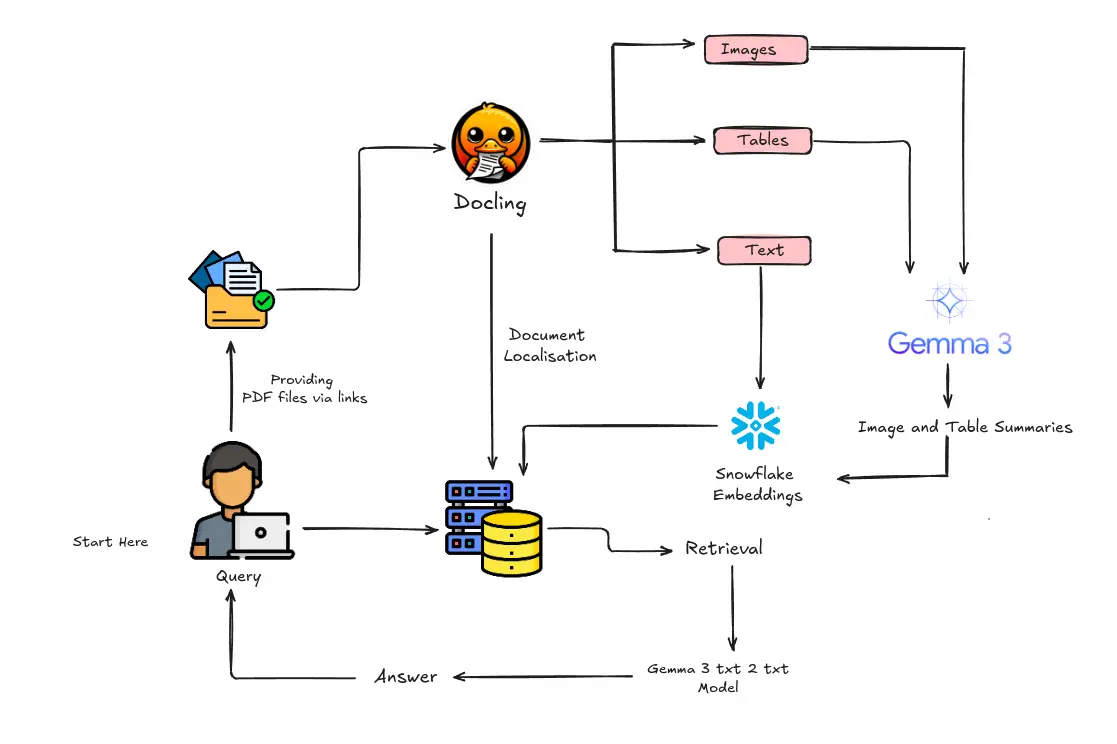

In this tutorial, we explore how to set up and run an advanced retrieval-augmented generation (RAG) pipeline in Google Colab. We use several state-of-the-art tools and libraries, including Gemma 3 for language and vision tasks, Docling for document conversion, LangChain for orchestration, and Milvus as our vector database, to build a multimodal system that understands and processes text, tables, and images. Let’s dive into each component and see how they work together.

What is Multimodal RAG?

Multimodal RAG (Retrieval-Augmented Generation) extends traditional text-based RAG systems by integrating multiple data modalities: in this case, text, tables, and images. The pipeline not only processes and retrieves text but also uses vision models to understand and describe image content, making the solution more comprehensive. This multimodal approach is particularly useful for documents like annual reports, which often contain visual elements such as charts and diagrams.

Proposed Architecture of Multimodal RAG with Gemma 3

The goal of this project is to build a robust multimodal RAG pipeline that can ingest documents (like PDFs), process text and images, store document embeddings in a vector database, and answer queries by retrieving relevant information. This setup is particularly useful for applications such as analyzing annual reports, extracting financial statements, or summarizing technical papers. By integrating various libraries and tools, we combine the power of language models with document conversion and vector search to create a complete end-to-end solution.

The pipeline uses several key libraries and tools:

- Colab-Xterm Extension: Adds terminal support in Colab, allowing us to run shell commands and manage the environment efficiently.

- Ollama Models: Provides pre-trained models such as Gemma 3, which are used for both language and vision tasks.

- Transformers: From Hugging Face, for model loading and tokenization.

- LangChain: Orchestrates the chain of processing steps, from prompt creation to document retrieval and generation.

- Docling: Converts PDF documents into structured formats, enabling extraction of text, tables, and images.

- Milvus: A vector database that stores document embeddings and supports efficient similarity search.

- Hugging Face CLI: Used for logging in to Hugging Face to access certain models.

- Additional Utilities: Such as Pillow for image processing and IPython for display functionality.


Building Multimodal RAG with Gemma 3

Multimodal RAG improves contextual understanding, accuracy, and relevance, especially in fields like healthcare, research, and media analysis. By leveraging cross-modal embeddings, hybrid retrieval strategies, and vision-language models, multimodal RAG systems can provide richer and more insightful responses. The key challenge lies in efficiently integrating and retrieving multimodal data while maintaining coherence and scalability. As AI progresses, developing optimized architectures and retrieval strategies will be crucial for unlocking the full potential of multimodal intelligence.

Terminal Setup with Colab-Xterm

First, we install the colab-xterm extension to bring a terminal environment directly into Colab. This allows us to run system commands, install packages, and manage our session more flexibly.

# Install colab-xterm
!pip install colab-xterm
# Load the xterm extension
%load_ext colabxterm
# Launch an xterm terminal session in Colab
%xterm

This terminal support is especially useful for installing additional dependencies or managing background processes.

Installing and Managing Ollama Models

We pull specific Ollama models into our environment using simple shell commands. For example:

!ollama pull gemma3:4b
!ollama pull llama3.2
!ollama list

These commands ensure that we have the necessary language and vision models available, such as the Gemma 3 model, which is central to our multimodal processing.
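A quick programmatic check can confirm that the pulled models are actually registered before the rest of the notebook depends on them. The following is a minimal sketch, assuming the Ollama server is running locally on its default port (11434) and exposes its model list at `/api/tags`; the helper names and the sample payload are illustrative, not part of the original notebook.

```python
import json
import urllib.request

# Assumption: default local Ollama endpoint that lists installed models.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def normalize(name: str) -> str:
    """Ollama stores untagged names with an implicit ':latest' tag."""
    return name if ":" in name else f"{name}:latest"


def missing_models(tags_payload: dict, required: list[str]) -> list[str]:
    """Return the required model names that are absent from the payload."""
    installed = {normalize(m["name"]) for m in tags_payload.get("models", [])}
    return [name for name in required if normalize(name) not in installed]


def fetch_tags(url: str = OLLAMA_TAGS_URL) -> dict:
    """Fetch the installed-model list from a running Ollama server."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return json.load(response)


# Illustrative payload in the shape the endpoint returns (trimmed to names).
sample_payload = {"models": [{"name": "gemma3:4b"}, {"name": "llama3.2:latest"}]}
print(missing_models(sample_payload, ["gemma3:4b", "llama3.2"]))
```

With a live server, `missing_models(fetch_tags(), ["gemma3:4b", "llama3.2"])` would return the names that still need to be pulled.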

Installing Essential Python Packages

The next step involves installing several packages required for our pipeline. This includes libraries for deep learning, text processing, and document handling:

!pip install transformers pillow langchain_community langchain_huggingface langchain_milvus docling langchain_ollama

By installing these packages, we prepare the environment for everything from document conversion to retrieval-augmented generation.

Logging and Hugging Face Authentication

Setting up logging is important for monitoring pipeline operations:

import logging

logging.basicConfig(level=logging.INFO)

We also log in to Hugging Face using their CLI to access certain pre-trained models:

!huggingface-cli login

This authentication step is necessary for fetching model artifacts and ensuring smooth integration with Hugging Face’s ecosystem.

Configuring Vision and Language Models (Gemma 3)

The pipeline uses the Gemma 3 model for both vision and language tasks. For the language side, we set up the model and tokenizer. Later sections assume that this step has defined `model` (the language model), `embeddings_model`, and `embeddings_tokenizer`.

This dual setup allows the system to generate textual descriptions from images, making the pipeline truly multimodal.

Document Conversion with Docling

1. Converting PDFs to Structured Documents

We use Docling’s DocumentConverter to convert PDFs into structured documents. The conversion process extracts text, tables, and images from the source PDFs:

from docling.document_converter import DocumentConverter, PdfFormatOption
from docling.datamodel.base_models import InputFormat
from docling.datamodel.pipeline_options import PdfPipelineOptions

pdf_pipeline_options = PdfPipelineOptions(
    do_ocr=False,  # do not run OCR on the pages
    generate_picture_images=True,  # keep the pictures found in the PDF
)
format_options = {InputFormat.PDF: PdfFormatOption(pipeline_options=pdf_pipeline_options)}
converter = DocumentConverter(format_options=format_options)

# Define the sources (URLs) of the documents to be converted.
# "https://arxiv.org/pdf/1706.03762"
sources = [
    "https://www.pwc.com/jm/en/research-publications/pdf/basic-understanding-of-a-companys-financials.pdf"
]

# Convert the PDF documents from the sources into an internal document format.
conversions = {source: converter.convert(source=source).document for source in sources}

Input File

We’ll be using a publicly available PwC document on understanding a company’s financial statements. I’ve included the PDF link, and you’re welcome to add your own source links as well!

2. Extracting and Chunking Content

After conversion, we chunk the document into manageable pieces, separating text from tables and images. This segmentation allows each component to be processed independently:

from docling_core.transforms.chunker.hybrid_chunker import HybridChunker
from docling_core.types.doc.document import TableItem
from langchain_core.documents import Document

# Process text chunks (excluding pure table segments)
doc_id = 0
texts: list[Document] = []
for source, docling_document in conversions.items():
    for chunk in HybridChunker(tokenizer=embeddings_tokenizer).chunk(docling_document):
        items = chunk.meta.doc_items
        # Skip table-only chunks; tables are processed separately.
        # The type check keeps single-item chunks that are plain text.
        if len(items) == 1 and isinstance(items[0], TableItem):
            continue
        doc_id += 1
        document = Document(
            page_content=chunk.text,
            # doc_id is needed later by the document prompt template
            metadata={"doc_id": doc_id, "source": source, "ref": "reference details"},
        )
        texts.append(document)

This approach not only improves processing efficiency but also supports more precise vector storage and retrieval later.
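The filtering logic can be tried without Docling installed. The sketch below uses small stand-in classes (`Item` and `Chunk` are hypothetical, not Docling types) to show the idea: a chunk made of exactly one table item is set aside, while every other chunk becomes a text record with a running `doc_id`.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    """Stand-in for a document item; 'kind' is 'text', 'table', or 'picture'."""
    kind: str
    ref: str


@dataclass
class Chunk:
    """Stand-in for a chunk: its text plus the items it was built from."""
    text: str
    items: list[Item] = field(default_factory=list)


def is_table_only(chunk: Chunk) -> bool:
    """True when the chunk consists of exactly one table item."""
    return len(chunk.items) == 1 and chunk.items[0].kind == "table"


def to_text_records(chunks: list[Chunk], source: str) -> list[dict]:
    """Keep non-table chunks and number them consecutively from 1."""
    records = []
    for chunk in chunks:
        if is_table_only(chunk):
            continue  # tables would be handled by a separate pass
        records.append({
            "doc_id": len(records) + 1,
            "page_content": chunk.text,
            "metadata": {
                "source": source,
                "ref": " ".join(item.ref for item in chunk.items),
            },
        })
    return records


chunks = [
    Chunk("Intro paragraph", [Item("text", "#/texts/0")]),
    Chunk("| a | b |", [Item("table", "#/tables/0")]),
    Chunk("Heading and body", [Item("text", "#/texts/1"), Item("text", "#/texts/2")]),
]
records = to_text_records(chunks, source="example.pdf")
print([r["doc_id"] for r in records])
```

Checking the item type, rather than only the item count, keeps single-paragraph chunks in the text set.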

Image Processing and Encoding

Images from the documents are processed using Pillow. We convert images into base64-encoded strings that can be embedded directly into prompts:

import base64
import io

import PIL.Image
import PIL.ImageOps

def encode_image(image: PIL.Image.Image, format: str = "png") -> str:
    # Apply the EXIF orientation, if any, so the image is upright
    image = PIL.ImageOps.exif_transpose(image) or image
    image = image.convert("RGB")
    buffer = io.BytesIO()
    image.save(buffer, format)
    encoding = base64.b64encode(buffer.getvalue()).decode("utf-8")
    # Return a data URI that can be placed directly in a prompt
    return f"data:image/{format};base64,{encoding}"

Subsequently, these images are fed into our vision model to generate descriptive text, enhancing the multimodal capabilities of our pipeline.
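The data URI format itself is easy to inspect with the standard library alone. The sketch below builds a URI from raw bytes and splits it back into its MIME type and payload, which is handy when a downstream API expects the bare base64 string rather than the full URI. The helper names are illustrative, and the bytes used are just the PNG file signature, not a complete image.

```python
import base64


def to_data_uri(raw: bytes, fmt: str = "png") -> str:
    """Wrap raw image bytes in a base64 data URI."""
    encoded = base64.b64encode(raw).decode("utf-8")
    return f"data:image/{fmt};base64,{encoded}"


def split_data_uri(uri: str) -> tuple[str, bytes]:
    """Split a base64 data URI into (mime_type, decoded_bytes)."""
    header, _, payload = uri.partition(",")
    if not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("not a base64 data URI")
    mime_type = header[len("data:"):-len(";base64")]
    return mime_type, base64.b64decode(payload)


# The 8-byte PNG file signature stands in for real image data.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
uri = to_data_uri(PNG_SIGNATURE)
mime_type, raw = split_data_uri(uri)
print(mime_type, len(raw))
```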

Creating a Vector Database with Milvus

To enable fast and accurate retrieval of document embeddings, we set up Milvus as our vector store:

import tempfile

from langchain_core.vectorstores import VectorStore
from langchain_milvus import Milvus

# Keep the database in a temporary local file
db_file = tempfile.NamedTemporaryFile(prefix="vectorstore_", suffix=".db", delete=False).name

vector_db: VectorStore = Milvus(
    embedding_function=embeddings_model,
    connection_args={"uri": db_file},
    auto_id=True,
    enable_dynamic_field=True,
    index_params={"index_type": "AUTOINDEX"},
)

Documents, whether text, tables, or image descriptions, are then added to the vector database (for example with `vector_db.add_documents(texts)`), enabling fast and accurate similarity searches during query execution.
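To make the idea of similarity search concrete, here is a toy in-memory store written with the standard library only. It is a simplified illustration, not how Milvus works internally: the "embedding" is a bag-of-words count instead of a learned vector, and the search is a brute-force cosine ranking. The class and function names are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real pipeline uses a model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Stores (vector, text) rows and ranks them against a query."""

    def __init__(self) -> None:
        self._rows: list[tuple[Counter, str]] = []

    def add_texts(self, texts: list[str]) -> None:
        for text in texts:
            self._rows.append((embed(text), text))

    def similarity_search(self, query: str, k: int = 2) -> list[str]:
        query_vector = embed(query)
        ranked = sorted(
            self._rows,
            key=lambda row: cosine(query_vector, row[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


store = ToyVectorStore()
store.add_texts([
    "the balance sheet lists assets and liabilities",
    "the income statement reports revenue and expenses",
    "a chart of quarterly revenue growth",
])
print(store.similarity_search("assets and liabilities", k=1))
```

A vector database replaces the brute-force ranking with an index so that the search stays fast as the number of stored chunks grows.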

Building the Retrieval-Augmented Generation (RAG) Chain

1. Prompt Creation and Document Wrapping

Using LangChain’s prompt templates, we create custom prompts to feed context and queries into our language model:

from langchain.prompts import PromptTemplate

# {input} receives the user query; {context} receives the retrieved documents
prompt = "{input} Given the context: {context}"
prompt_template = PromptTemplate.from_template(template=prompt)

Each retrieved document is wrapped using a document prompt template, ensuring that the model understands the structure of the input context.

2. Assembling the RAG Pipeline

We combine the prompt with the vector store to create a retrieval chain that first fetches relevant documents and then uses them to generate a coherent answer:

from langchain.chains.retrieval import create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain

combine_docs_chain = create_stuff_documents_chain(
    llm=model,
    prompt=prompt_template,
    # Template applied to each retrieved document;
    # {doc_id} must be present in each document's metadata
    document_prompt=PromptTemplate.from_template(template="""\
Document {doc_id}
{page_content}"""),
    document_separator="\n\n",
)

rag_chain = create_retrieval_chain(
    retriever=vector_db.as_retriever(),
    combine_docs_chain=combine_docs_chain,
)

Queries are then executed against this chain, retrieving context and generating responses based on both the query and the stored document embeddings.
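What the "stuff documents" step produces can be shown with plain string formatting. The sketch below is a simplified stand-in for the chain, not LangChain’s implementation: it renders each document with a per-document template, joins the results with a separator, and fills the joined text into the final prompt. The sample documents are invented for illustration.

```python
DOCUMENT_TEMPLATE = "Document {doc_id}\n{page_content}"
PROMPT_TEMPLATE = "{input} Given the context: {context}"
SEPARATOR = "\n\n"


def stuff_documents(question: str, documents: list[dict]) -> str:
    """Render every document, join them, and build the final prompt text."""
    context = SEPARATOR.join(DOCUMENT_TEMPLATE.format(**doc) for doc in documents)
    return PROMPT_TEMPLATE.format(input=question, context=context)


# Invented sample documents; each needs doc_id and page_content.
documents = [
    {"doc_id": 1, "page_content": "Assets equal liabilities plus equity."},
    {"doc_id": 2, "page_content": "Revenue minus expenses is net income."},
]
final_prompt = stuff_documents("What is the balance sheet equation?", documents)
print(final_prompt)
```

Because every retrieved chunk is placed into a single prompt, the number of retrieved documents has to stay small enough to fit the model’s context window.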

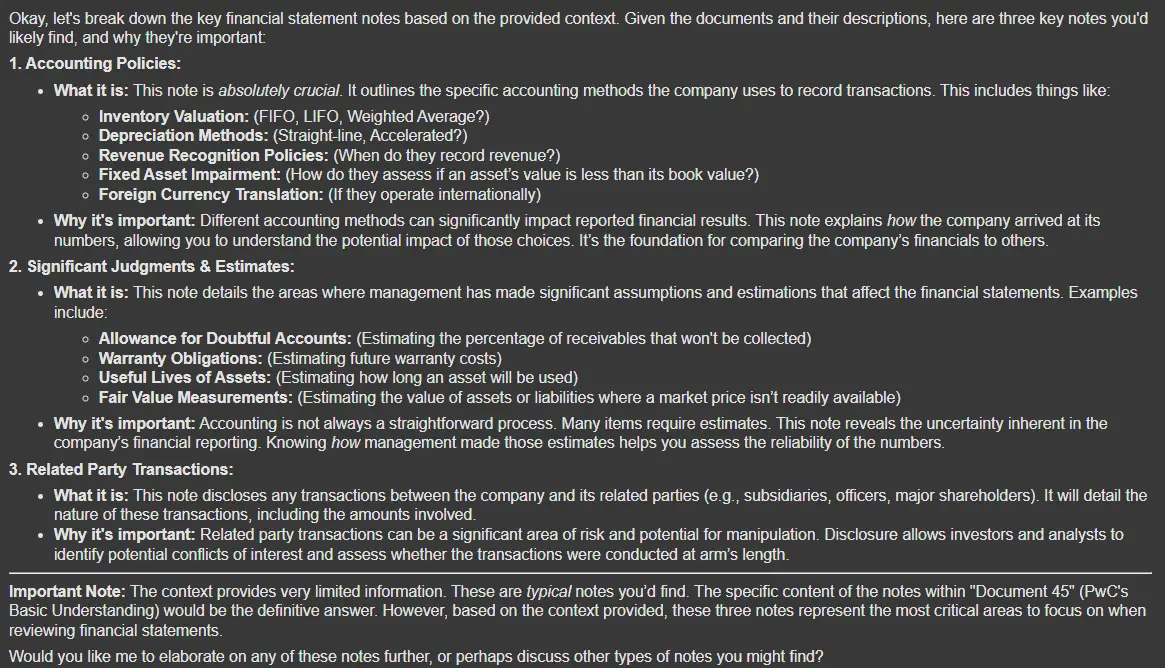

Executing Queries and Retrieving Information

Once the RAG chain is established, you can run queries to retrieve relevant information from your document database. For example:

from IPython.display import Markdown

query = "Explain Three Key Financial Statements Notes"
outputs = rag_chain.invoke({"input": query})
Markdown(outputs['answer'])

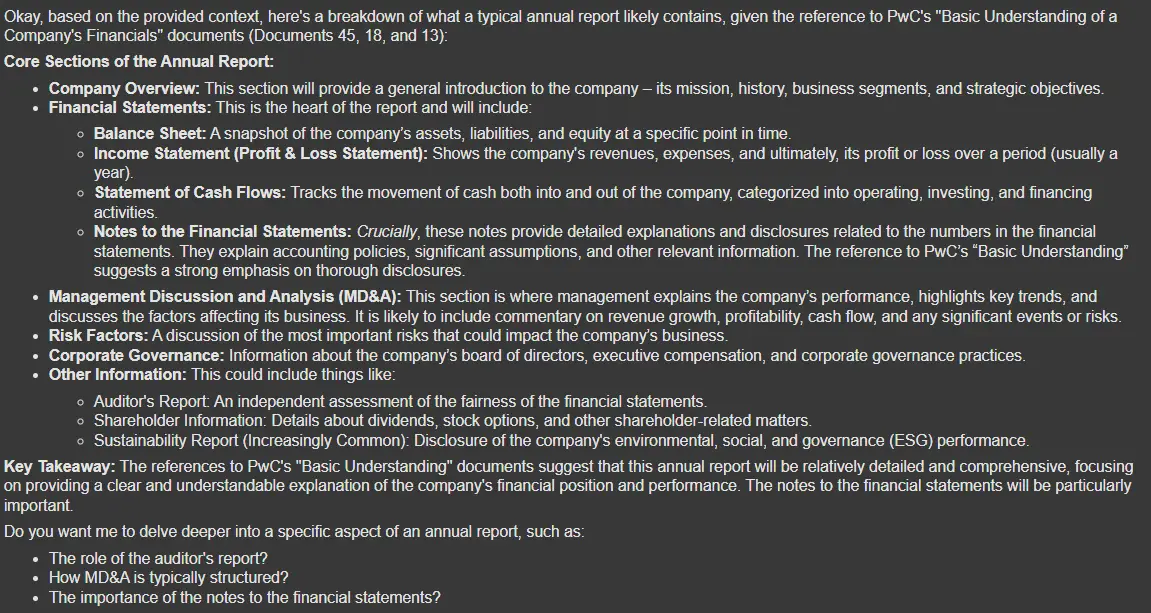

query = "tell me the Contents of an annual report"
outputs = rag_chain.invoke({"input": query})
Markdown(outputs['answer'])

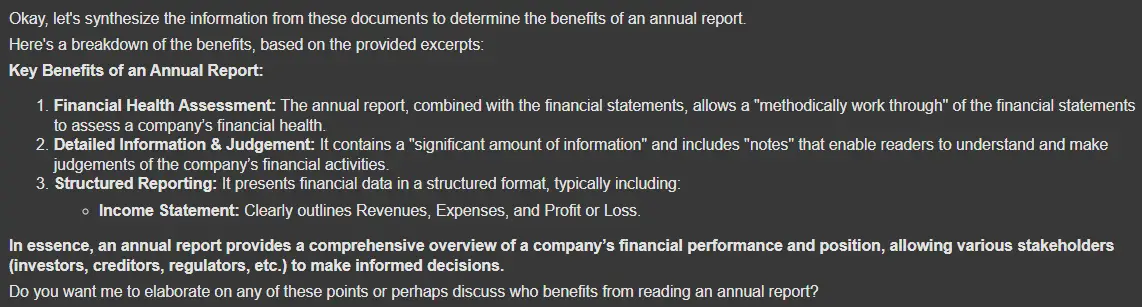

query = "what are the benefits of an annual report?"
outputs = rag_chain.invoke({"input": query})
Markdown(outputs['answer'])

The same process can be applied to various queries, such as explaining financial statement notes or summarizing an annual report, demonstrating the versatility of the pipeline.
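Besides the answer, the dictionary returned by a retrieval chain also carries the retrieved documents under the `context` key, which helps when checking where an answer came from. The sketch below uses a stand-in `RetrievedDoc` class (hypothetical, mirroring the `page_content` and `metadata` attributes of a LangChain document) and an invented output dictionary, so it runs without the pipeline.

```python
from dataclasses import dataclass, field


@dataclass
class RetrievedDoc:
    """Stand-in with the same two attributes as a LangChain document."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def summarize_sources(outputs: dict, preview_chars: int = 40) -> list[str]:
    """List the source and a short preview of each retrieved document."""
    lines = []
    for doc in outputs.get("context", []):
        source = doc.metadata.get("source", "unknown")
        lines.append(f"{source}: {doc.page_content[:preview_chars]}")
    return lines


# Invented output in the shape {"input", "context", "answer"}.
sample_outputs = {
    "input": "what is an annual report?",
    "context": [
        RetrievedDoc("An annual report summarizes a company's year.", {"source": "report.pdf"}),
        RetrievedDoc("Notes explain the accounting policies."),
    ],
    "answer": "It is a yearly summary of a company's activities.",
}
for line in summarize_sources(sample_outputs):
    print(line)
```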

Here’s the full code: AV-multimodal-gemma3-rag

Use Cases

This pipeline has numerous applications:

- Financial Reporting: Automatically extract and summarize key financial statements, cash flow components, and annual report details.

- Document Analysis: Convert PDFs into structured data for further analysis or machine learning tasks.

- Multimodal Search: Enable search and retrieval from mixed-media documents, combining textual and visual content.

- Business Intelligence: Provide quick insights into complex documents by aggregating and synthesizing information across modalities.

Conclusion

In this tutorial, we demonstrated how to build a multimodal RAG pipeline with Gemma 3 in Google Colab. By integrating tools like Colab-Xterm, Ollama models (Gemma 3), Docling, LangChain, and Milvus, we created a system capable of processing text, tables, and images. This setup not only enables effective document retrieval but also supports complex question answering and analysis in diverse applications. Whether you’re dealing with financial reports, research papers, or business intelligence tasks, this pipeline offers a versatile and scalable solution.

Happy coding, and enjoy exploring the possibilities of multimodal retrieval-augmented generation!
