A gentle introduction to the latest multi-modal Transfusion model

Recently, Meta and Waymo released their latest paper, Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model, which integrates the popular transformer model with the diffusion model for multi-modal training and prediction.

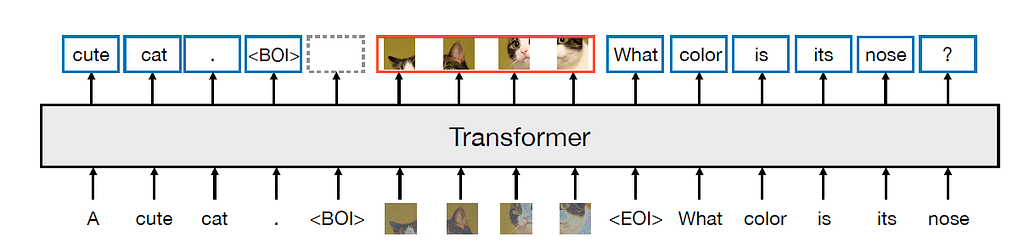

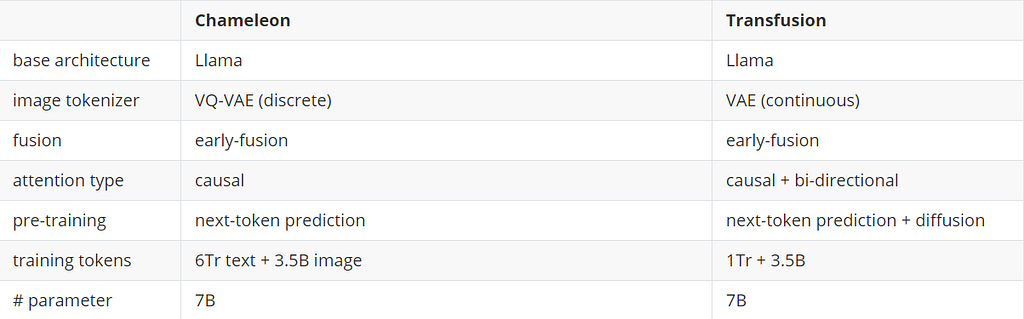

Like Meta’s previous work, the Transfusion model is based on the Llama architecture with early fusion: it takes both the text token sequence and the image token sequence and uses a single transformer model to generate the prediction. Unlike previous art, however, the Transfusion model handles the image tokens differently:

- The image token sequence is generated by a pre-trained Variational Auto-Encoder (VAE).

- The transformer attention for the image sequence is bi-directional rather than causal.

Let’s discuss these points in detail. We’ll first review the basics, like auto-regressive and diffusion models, then dive into the Transfusion architecture.

Auto-regressive Models

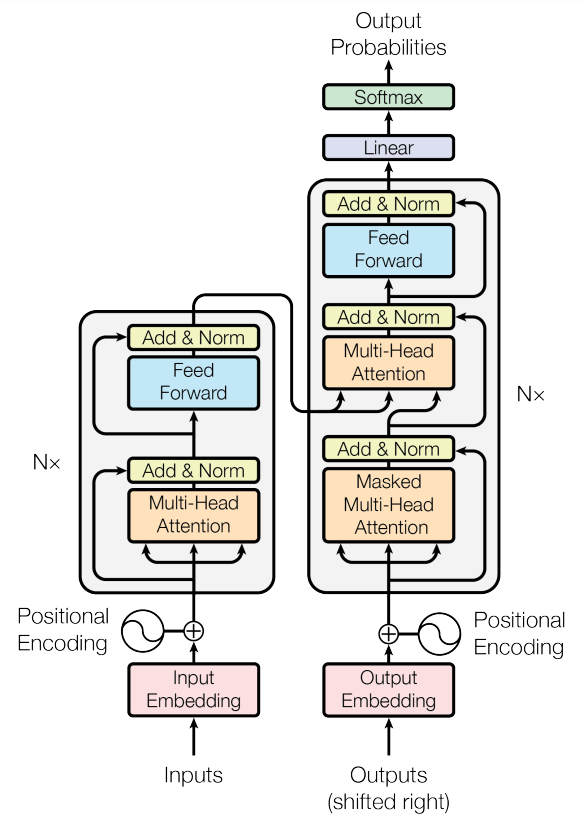

Nowadays, large language models (LLMs) are primarily based on transformer architectures, which were proposed in the Attention is All You Need paper in 2017. The transformer architecture contains two parts: the encoder and the decoder.

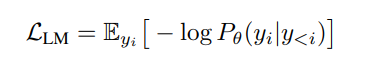

Masked Language Models like BERT use the encoder part, pre-trained on bidirectional prediction of randomly masked tokens (and next sentence prediction). For auto-regressive models like the latest LLMs, the decoder part is usually trained on the next token prediction task, where the LM loss is minimized:

$$\mathcal{L}_{\text{LM}} = -\sum_{i=1}^{n} \log P_\theta\left(y_i \mid y_{<i}\right)$$

In the equation above, theta is the model parameter set, and y_i is the token at index i in a sequence of length n. y<i are all the tokens before y_i.
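To make the next token prediction loss concrete, here is a minimal pure-Python sketch. The function names (`softmax`, `lm_loss`) and the toy logits are illustrative assumptions, not code from the paper, and the loss is averaged over the sequence length, which is a common normalization rather than something the paper prescribes.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def lm_loss(logits_per_step, tokens):
    """Average negative log-likelihood of tokens[i] given tokens[:i].

    logits_per_step[i] holds the model's scores over the vocabulary for
    position i, assumed to be computed from the preceding tokens only.
    """
    nll = 0.0
    for logits, target in zip(logits_per_step, tokens):
        probs = softmax(logits)
        nll -= math.log(probs[target])
    return nll / len(tokens)

# Toy example: vocabulary of 3 tokens, sequence of length 2.
# The logits are made up; a real model would produce them.
toy_logits = [[2.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
toy_tokens = [0, 2]
loss = lm_loss(toy_logits, toy_tokens)
```

The first position is fairly confident about the correct token and contributes a small loss, while the second position is uniform over the vocabulary and contributes log(3).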

Diffusion Models

What’s the diffusion model? It is a family of deep learning models commonly used in computer vision (especially for medical image analysis) for image generation, denoising, and other purposes. One of the most well-known diffusion models is the DDPM, from the Denoising Diffusion Probabilistic Models paper published in 2020. The model is a parameterized Markov chain containing a forward and a reverse transition, as shown below.

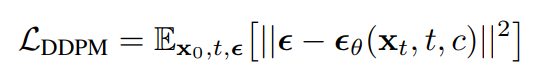

What’s a Markov chain? It is a stochastic process in which each step depends only on the step immediately before it; the same holds for the reverse chain. Under this assumption, the model starts from a clean image and iteratively adds Gaussian noise in the forward process (right -> left in the figure above), then iteratively “learns” the noise with a U-Net-based architecture in the reverse process (left -> right in the figure above). That’s why the diffusion model can be seen as a generative model when the reverse process starts from pure noise, and as a denoising model when it starts from a noisy image; in both cases it runs left to right in the figure. The DDPM loss is given below, where theta is the model parameter set, epsilon is the known noise, and epsilon_theta is the noise estimated by a deep learning model (usually a U-Net):

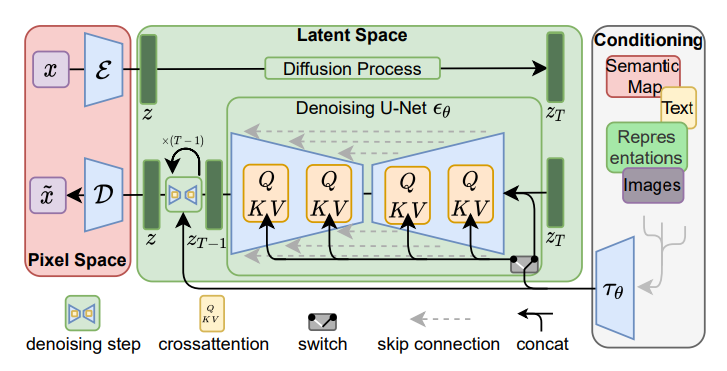
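The forward noising step and the noise-prediction loss can be sketched in a few lines of pure Python. This is a toy illustration under stated assumptions: the helper names are hypothetical, the linear beta schedule (1e-4 to 0.02 over 1000 steps) is the one reported in the DDPM paper, the closed-form jump from x_0 to x_t is a standard DDPM result rather than something spelled out above, and the "model" is a placeholder that always predicts zero noise.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Forward process in closed form: returns (x_t, epsilon)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps

def ddpm_loss(eps, eps_pred):
    """Mean squared error between the true and the predicted noise."""
    return sum((e - p) ** 2 for e, p in zip(eps, eps_pred)) / len(eps)

rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)
x0 = [0.5, -1.0, 0.25, 0.0]  # toy "image" with four values
xt, eps = add_noise(x0, alpha_bars[500], rng)

# Placeholder for epsilon_theta(x_t, t); a real model is a trained network.
eps_pred = [0.0 for _ in xt]
loss = ddpm_loss(eps, eps_pred)
```

Because the noise `eps` is sampled by us and therefore known, training reduces to a regression problem: the network is asked to recover `eps` from `xt` and the step index.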

Diffusion Model in the Latent Space

The idea of diffusion was further extended to the latent space in the CVPR’22 latent diffusion paper (Rombach et al.), where the images are first “compressed” into the latent space by the encoder part of a pre-trained Variational Auto-Encoder (VAE). Then, the diffusion and reverse processes are performed in the latent space, and the result is mapped back to pixel space by the decoder part of the VAE. This can greatly improve training speed and efficiency, as most calculations are performed in a lower-dimensional space.

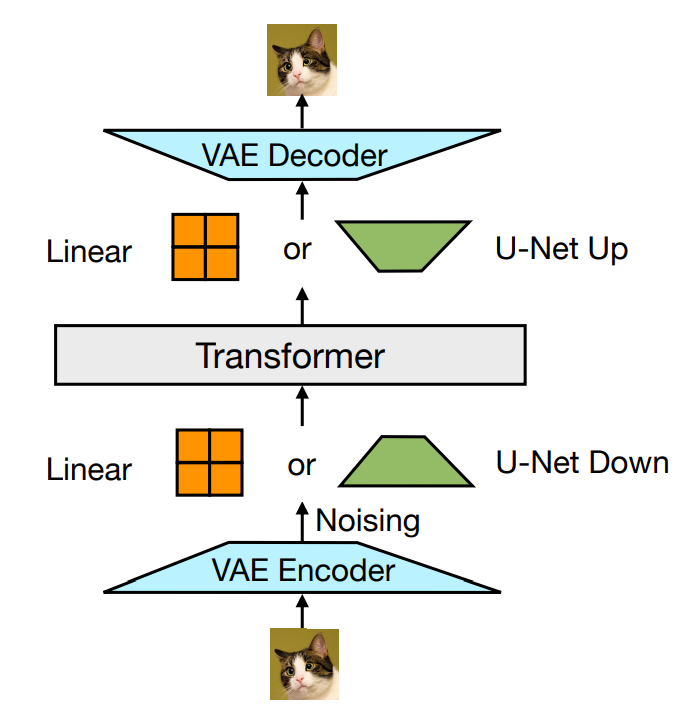

VAE-based Image Transfusion

The core part of the Transfusion model is the fusion between the diffusion model and the transformer for input images. First, an image is divided into a sequence of 8*8 patches; each patch is passed into a pre-trained VAE encoder to be “compressed” into an 8-element latent vector representation. Second, noise is added to the latent representation, which is further processed by a linear layer/U-Net encoder to generate the “noisy” x_t. Third, the transformer model processes the sequence of noisy latent representations. Last, the outputs are processed in reverse by another linear layer/U-Net decoder before a VAE decoder generates the “true” x_0 image.
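The patch-splitting step described above can be sketched as follows. This is only an illustration of the arithmetic: `patchify` is a hypothetical helper, the image is a plain list of rows, and the VAE encoder that would map each 8*8 patch to an 8-element latent vector is not modelled here.

```python
def patchify(image, patch=8):
    """Split an H x W image (list of rows) into patch x patch blocks, row-major."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            block = [row[left:left + patch] for row in image[top:top + patch]]
            patches.append(block)
    return patches

# Toy 16 x 16 "image" whose pixel value encodes its own position.
image = [[r * 16 + c for c in range(16)] for r in range(16)]
patches = patchify(image)

# Sequence length for a 256 x 256 image with 8 x 8 patches.
tokens_256 = (256 // 8) ** 2
```

Under these assumptions a 16 x 16 image yields 4 patches, and a 256 x 256 image would yield 1024 patch positions in the transformer sequence.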

In the actual implementation, a beginning-of-image (BOI) token and an end-of-image (EOI) token are added on either side of the image representation sequence before it is concatenated with the text tokens. Self-attention over image patches is bi-directional, while self-attention over text tokens is causal. At the training stage, the loss for the image sequence is the DDPM loss, while the text tokens use the LM loss.
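The mixed attention pattern can be sketched as a boolean mask. This is a minimal illustration, not the paper's code: `transfusion_mask` is a hypothetical helper, and treating the BOI and EOI positions as ordinary causal (text-like) positions is an assumption made here for simplicity.

```python
def transfusion_mask(kinds):
    """Build an attention mask from a list of per-position labels.

    kinds[i] is None for text-like positions (assumed to include BOI/EOI)
    or an integer image id for image-patch positions. mask[q][k] is True
    when query position q may attend to key position k.
    """
    n = len(kinds)
    mask = [[k <= q for k in range(n)] for q in range(n)]  # causal base
    for q in range(n):
        for k in range(n):
            same_image = kinds[q] is not None and kinds[q] == kinds[k]
            if same_image:
                mask[q][k] = True  # patches of one image see each other
    return mask

# Layout: text, text, BOI, patch, patch, patch, EOI, text
kinds = [None, None, None, 0, 0, 0, None, None]
mask = transfusion_mask(kinds)

# Which loss applies at each position during training.
losses = ["ddpm" if kind is not None else "lm" for kind in kinds]
```

In this toy layout the first patch can attend to the last patch of the same image, while the text before the image still cannot look ahead into it.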

So why bother? Why do we need such a complicated procedure for processing image patch tokens? The paper explains that the token spaces for text and images are different. While text tokens are discrete, image tokens/patches are naturally continuous. In previous art, image tokens need to be “discretized” before being fused into the transformer model, while integrating the diffusion model directly could resolve this issue.

Compare with state-of-the-art

The primary multi-modal model the paper compares against is the Chameleon model, which Meta proposed earlier this year. Here, we compare the differences in architecture and training set size between Chameleon-7B and Transfusion-7B.

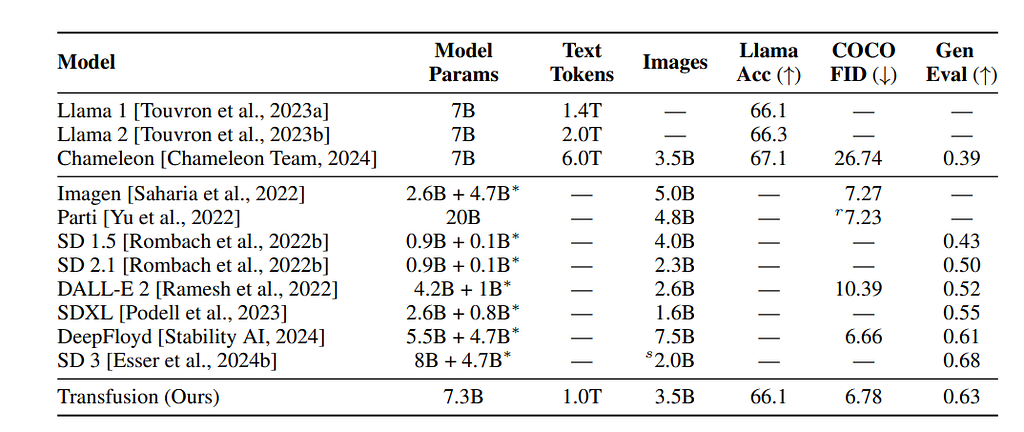

The paper lists the performance comparison on Llama2 pre-training suite accuracy, COCO zero-shot Frechet Inception Distance (FID), and the GenEval benchmark. We can see that Transfusion performs much better than Chameleon on the image-related benchmarks (COCO and GenEval) while losing very little margin compared to Chameleon, with the same number of parameters.

Further Comments

Although the idea of the paper is super interesting, the “Diffusion” part of Transfusion is hardly an actual diffusion, as there are only two timesteps in the Markov process. Besides, the pre-trained VAE means the model is no longer strictly end-to-end. Also, the VAE + Linear/U-Net + Transformer Encoder + Linear/U-Net + VAE design looks so complicated that one can’t help but ask: is there a more elegant way to implement this idea? Finally, I previously wrote about the latest publication from Apple on the generalization benefits of autoregressive modelling on images, so it might be interesting to give a second thought to the “MIM + autoregressive” approach.

If you find this post interesting and would like to discuss it, you’re welcome to leave a comment, and I’m happy to continue the discussion there 🙂

References

- Zhou et al., Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model. arXiv 2024.

- Chameleon Team, Chameleon: Mixed-modal early-fusion foundation models. arXiv preprint 2024.

- Touvron et al., Llama: Open and efficient foundation language models. arXiv 2023.

- Rombach et al., High-resolution image synthesis with latent diffusion models. CVPR 2022.

- Ho et al., Denoising diffusion probabilistic models. NeurIPS 2020.

- Vaswani et al., Attention is all you need. NeurIPS 2017.

- Kingma and Welling, Auto-encoding variational Bayes. arXiv preprint 2013.

Transformer? Diffusion? Transfusion! was originally published in Towards Data Science on Medium.