AI agents are intelligent programs that carry out tasks autonomously, transforming various industries. As AI agents gain popularity, various frameworks have emerged to simplify their development and integration. Atomic Agents is one of the newer entries in this space, designed to be lightweight, modular, and easy to use. Atomic Agents provides a hands-on, transparent approach, allowing developers to work directly with individual components. This makes it a good choice for building highly customizable AI systems that maintain clarity and control at every step. In this article, we’ll explore how Atomic Agents works and why its minimalist design can benefit developers and AI enthusiasts alike.

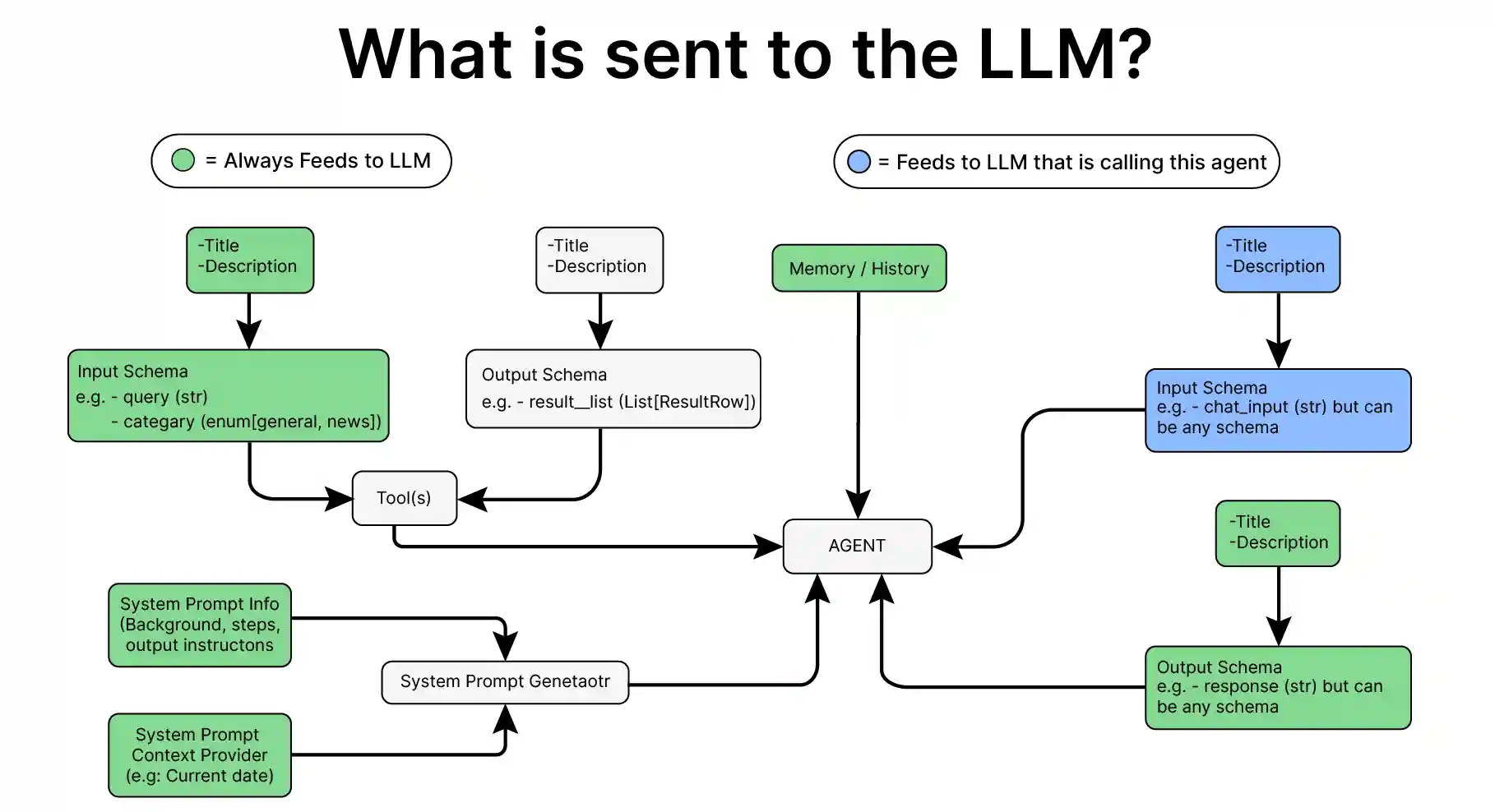

How Does Atomic Agents Work?

Atomic means indivisible. In the Atomic Agents framework, each agent is built from the ground up using basic, independent components. Unlike frameworks such as AutoGen and CrewAI, which rely on high-level abstractions to manage internal components, Atomic Agents takes a low-level, modular approach. This allows developers to directly control the individual components, such as input/output handling, tool integration, and memory management, making each agent customizable and predictable.
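To make the idea of independent, visible components concrete, here is a minimal, library-free sketch. `ToyAgent`, `build_prompt`, `call_model`, and `fake_model` are hypothetical names invented for illustration; they are not part of the Atomic Agents API, and the real framework wires its components differently.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ToyAgent:
    """Hypothetical illustration only; not the Atomic Agents API."""

    build_prompt: Callable[[str], str]  # input-handling component
    call_model: Callable[[str], str]    # model component (faked below)
    history: List[Tuple[str, str]] = field(default_factory=list)  # memory component

    def run(self, user_message: str) -> str:
        # Each step is an explicit call to a swappable component.
        prompt = self.build_prompt(user_message)
        reply = self.call_model(prompt)
        self.history.append(("user", user_message))
        self.history.append(("assistant", reply))
        return reply


def fake_model(prompt: str) -> str:
    # Stand-in for an LLM call so the sketch runs offline.
    return f"echo: {prompt}"


agent = ToyAgent(build_prompt=lambda msg: msg.strip().lower(), call_model=fake_model)
reply = agent.run("  Hello  ")
print(reply)
```

Because each part is passed in explicitly, any one of them can be replaced or inspected without touching the others, which is the property the framework is described as aiming for.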

Through a hands-on implementation with code, we’ll see how Atomic Agents keeps each part visible. This enables fine-tuned control over each step of the process, from input processing to response generation.

Building a Simple Agent on Atomic Agents

Prerequisites

Before building agents with Atomic Agents, ensure you have the necessary API keys for the required LLMs.

Load the .env file with the API keys needed.

    from dotenv import load_dotenv

    # Read the API keys from the .env file into the environment.
    load_dotenv(".env")
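For readers unfamiliar with .env files, the following is a rough, standard-library sketch of what loading one amounts to: reading `KEY=VALUE` lines and checking that the key you need is present. `parse_env_text` is a hypothetical helper written for this illustration, not part of python-dotenv, and the key value is a placeholder. `OPENAI_API_KEY` is the variable the OpenAI client reads by default.

```python
from typing import Dict


def parse_env_text(text: str) -> Dict[str, str]:
    """Hypothetical helper: parse simple KEY=VALUE lines, skipping comments."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes, if any.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


# Placeholder content standing in for a real .env file.
sample = 'OPENAI_API_KEY="sk-placeholder"\n# a comment\nEMPTY=\n'
parsed = parse_env_text(sample)

# Fail early if a required key is absent or empty.
missing = [name for name in ("OPENAI_API_KEY",) if not parsed.get(name)]
print(missing)
```

In practice, python-dotenv handles quoting and edge cases more carefully; the sketch only shows the shape of the data involved.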

Key Libraries Required

- atomic-agents – 1.0.9

- instructor – 1.6.4 (The instructor library is used to get structured data from the LLMs.)

- rich – 13.9.4 (The rich library is used for text formatting.)

Building the Agent

Now let’s build a simple agent using Atomic Agents.

Step 1: Import the necessary libraries.

    import os
    import instructor
    import openai
    from rich.console import Console
    from rich.panel import Panel
    from rich.text import Text
    from rich.live import Live
    from atomic_agents.agents.base_agent import BaseAgent, BaseAgentConfig, BaseAgentInputSchema, BaseAgentOutputSchema

Now let’s define the client, LLM, and temperature parameters.

Step 2: Initialize the LLM.

    client = instructor.from_openai(openai.OpenAI())

Step 3: Set up the agent.

    agent = BaseAgent(
        config=BaseAgentConfig(
            client=client,
            model="gpt-4o-mini",
            temperature=0.2
        )
    )

We can run the agent now.

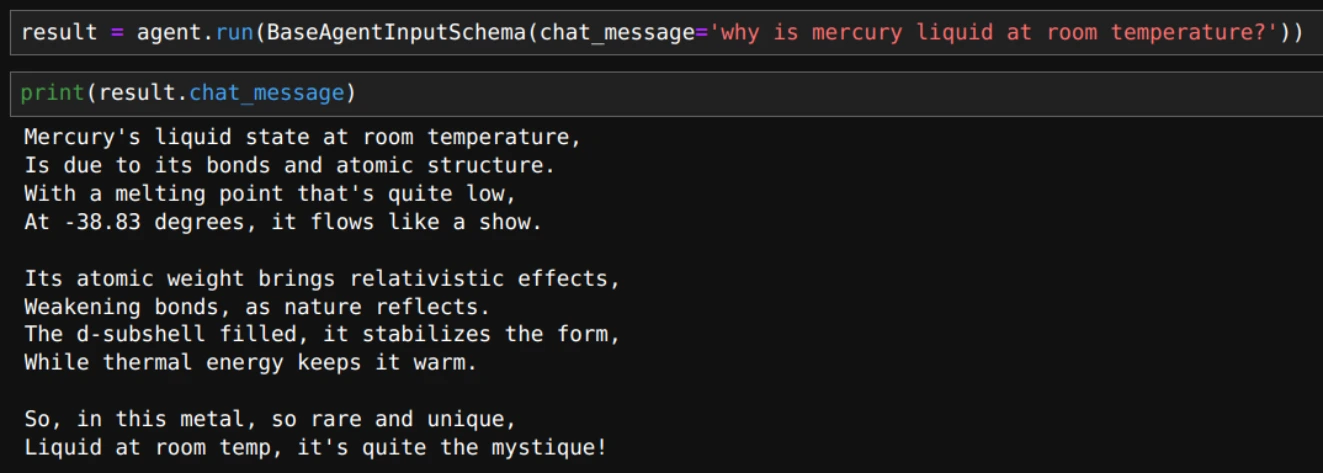

    result = agent.run(BaseAgentInputSchema(chat_message="why is mercury liquid at room temperature?"))
    print(result.chat_message)

That’s it. We’ve built a simple agent with minimal code.

Let us initialize the agent and run it again to see the result of the code below.

    agent = BaseAgent(
        config=BaseAgentConfig(
            client=client,
            model="gpt-4o-mini",
            temperature=0.2
        )
    )

    agent.run(BaseAgentInputSchema(chat_message="what's its electron configuration?"))

    >> BaseAgentOutputSchema(chat_message="To provide the electron configuration, I need to know which element you are referring to. Could you please specify the element or its atomic number?")

Since we have initialized the agent again, it doesn’t know that we asked the earlier question about mercury.

So, let’s add memory.

Adding Memory to the Agent

Step 1: Import the necessary class and initialize the memory.

    from atomic_agents.lib.components.agent_memory import AgentMemory

    memory = AgentMemory(max_messages=50)

Step 2: Build the agent with memory.

    agent = BaseAgent(
        config=BaseAgentConfig(
            client=client,
            model="gpt-4o-mini",
            temperature=0.2,
            memory=memory
        )
    )

Now we can ask the two questions above again in the same way. This time, the agent answers with the electron configuration of mercury.

We can also access all the messages with memory.get_history().
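As a rough mental model of a capped message store, the sketch below keeps only the most recent messages. `ToyMemory` is a hypothetical stand-in built on `collections.deque`; it borrows the method names used above for readability, but how `AgentMemory` actually handles overflow internally is an assumption here, not something this article confirms.

```python
from collections import deque
from typing import Deque, Dict, List


class ToyMemory:
    """Hypothetical stand-in for a bounded chat history (not AgentMemory itself)."""

    def __init__(self, max_messages: int) -> None:
        # A deque with maxlen silently discards the oldest item when full.
        self._messages: Deque[Dict[str, str]] = deque(maxlen=max_messages)

    def add_message(self, role: str, content: str) -> None:
        self._messages.append({"role": role, "content": content})

    def get_history(self) -> List[Dict[str, str]]:
        return list(self._messages)


memory = ToyMemory(max_messages=3)
for i in range(1, 6):
    memory.add_message("user", f"message {i}")

history = memory.get_history()
print([m["content"] for m in history])
```

A cap like this keeps the prompt from growing without bound, at the cost of the agent forgetting the earliest turns of a long conversation.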

Now, let’s change the system prompt.

Changing the System Prompt

Step 1: Import the necessary class and look at the existing system prompt.

    from atomic_agents.lib.components.system_prompt_generator import SystemPromptGenerator

    print(agent.system_prompt_generator.generate_prompt())
    agent.system_prompt_generator.background

Step 2: Define the custom system prompt.

    system_prompt_generator = SystemPromptGenerator(
        background=[
            "This assistant is a specialized Physics expert designed to be helpful and friendly.",
        ],
        steps=["Understand the user's input and provide a relevant response.", "Respond to the user."],
        output_instructions=[
            "Provide helpful and relevant information to assist the user.",
            "Be friendly and respectful in all interactions.",
            "Always answer in rhyming verse.",
        ],
    )

We can also add a message to the memory separately.
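For intuition about how three lists such as background, steps, and output instructions could end up as one system prompt string, here is a small sketch. `build_system_prompt` and its section titles are hypothetical; the exact text that `generate_prompt()` produces may differ.

```python
from typing import List


def build_system_prompt(
    background: List[str],
    steps: List[str],
    output_instructions: List[str],
) -> str:
    """Hypothetical assembler: one titled section per non-empty list."""
    sections = [
        ("Background", background),
        ("Steps", steps),
        ("Output instructions", output_instructions),
    ]
    lines: List[str] = []
    for title, items in sections:
        if not items:
            continue  # Skip sections the caller left empty.
        lines.append(f"# {title}")
        lines.extend(f"- {item}" for item in items)
        lines.append("")  # Blank line between sections.
    return "\n".join(lines).strip()


prompt = build_system_prompt(
    background=["This assistant is a specialized Physics expert."],
    steps=[],
    output_instructions=["Always answer in rhyming verse."],
)
print(prompt)
```

Keeping the pieces as separate lists makes it easy to change one aspect of the agent's behaviour, such as the output style, without rewriting the whole prompt.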

Step 3: Add a message to the memory.

    memory = AgentMemory(max_messages=50)
    initial_message = BaseAgentOutputSchema(chat_message="Hello! How can I assist you today?")
    memory.add_message("assistant", initial_message)

Step 4: Now, we can build the agent with memory and a custom system prompt.

    agent = BaseAgent(
        config=BaseAgentConfig(
            client=client,
            model="gpt-4o-mini",
            temperature=0.2,
            system_prompt_generator=system_prompt_generator,
            memory=memory
        )
    )

    result = agent.run(BaseAgentInputSchema(chat_message="why is mercury liquid at room temperature?"))
    print(result.chat_message)

Here’s the output in rhyming verse:

Up to this point, we’ve been having a conversation one message at a time. Now, let’s explore how to engage in a continuous chat with the agent.

Building a Continuous Agent Chat in Atomic Agents

In Atomic Agents, adding chat functionality is as simple as using a while loop.

The console is used to print and format the conversation.

    # Define the console for formatting the chat text.
    console = Console()

    # Initialize the memory and agent
    memory = AgentMemory(max_messages=50)
    agent = BaseAgent(
        config=BaseAgentConfig(
            client=client,
            model="gpt-4o-mini",
            temperature=0.2,
            memory=memory
        )
    )

We will use the “exit” and “quit” keywords to exit the chat.

    while True:
        # Prompt the user for input with a styled prompt
        user_input = console.input("[bold blue]You:[/bold blue] ")
        # Check if the user wants to exit the chat
        if user_input.lower() in ["exit", "quit"]:
            console.print("Exiting chat...")
            break
        # Process the user's input through the agent and get the response
        input_schema = BaseAgentInputSchema(chat_message=user_input)
        response = agent.run(input_schema)
        agent_message = Text(response.chat_message, style="bold green")
        console.print(Text("Agent:", style="bold green"), end=" ")
        console.print(agent_message)

With the above code, the entire output of the model is displayed at once. We can also stream the output message, as ChatGPT does.
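The loop logic can be tried without a console or an API key by passing the inputs and the responder in as arguments. The sketch below does that; `chat_loop`, `EXIT_WORDS`, and the use of `str.upper` as a fake responder are assumptions made for illustration, not part of Atomic Agents.

```python
from typing import Callable, Iterable, List

EXIT_WORDS = {"exit", "quit"}


def chat_loop(inputs: Iterable[str], respond: Callable[[str], str]) -> List[str]:
    """Hypothetical, testable version of the while loop shown above."""
    transcript: List[str] = []
    for user_input in inputs:
        # Same exit check as the interactive loop, case-insensitive.
        if user_input.strip().lower() in EXIT_WORDS:
            transcript.append("Exiting chat...")
            break
        transcript.append(f"Agent: {respond(user_input)}")
    return transcript


# str.upper stands in for the agent so the sketch runs offline.
transcript = chat_loop(["hello", "QUIT", "never reached"], respond=str.upper)
print(transcript)
```

Separating the loop from its input source and responder in this way makes the exit handling easy to check before wiring in a real agent.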

Building a Chat Stream in Atomic Agents

In the chat defined above, the LLM output is displayed only after the whole content is generated. If the output is long, it’s better to stream it so that we can read it as it is being generated. Let’s see how to do that.

Step 1: To stream the output, we need to use the asynchronous client of the LLM.

    client = instructor.from_openai(openai.AsyncOpenAI())

Step 2: Define the agent.

    memory = AgentMemory(max_messages=50)
    agent = BaseAgent(
        config=BaseAgentConfig(
            client=client,
            model="gpt-4o-mini",
            temperature=0.2,
            memory=memory
        )
    )

Now let’s see how to stream the chat.

Step 3: Add the function to stream the chat.

    async def main():
        # Start an infinite loop to handle user inputs and agent responses
        while True:
            # Prompt the user for input with a styled prompt
            user_input = console.input("\n[bold blue]You:[/bold blue] ")
            # Check if the user wants to exit the chat
            if user_input.lower() in ["exit", "quit"]:
                console.print("Exiting chat...")
                break
            # Process the user's input through the agent and get the streaming response
            input_schema = BaseAgentInputSchema(chat_message=user_input)
            console.print()  # Add newline before response
            # Use Live display to show streaming response
            with Live("", refresh_per_second=4, auto_refresh=True) as live:
                current_response = ""
                async for partial_response in agent.stream_response_async(input_schema):
                    if hasattr(partial_response, "chat_message") and partial_response.chat_message:
                        # Only update if we have new content
                        if partial_response.chat_message != current_response:
                            current_response = partial_response.chat_message
                            # Combine the label and response in the live display
                            display_text = Text.assemble(("Agent: ", "bold green"), (current_response, "green"))
                            live.update(display_text)

If you are using JupyterLab or Jupyter Notebook, make sure you run the code below before running the async function defined above.

    import nest_asyncio

    nest_asyncio.apply()

Step 4: Now we can run the async function main.

    import asyncio

    asyncio.run(main())

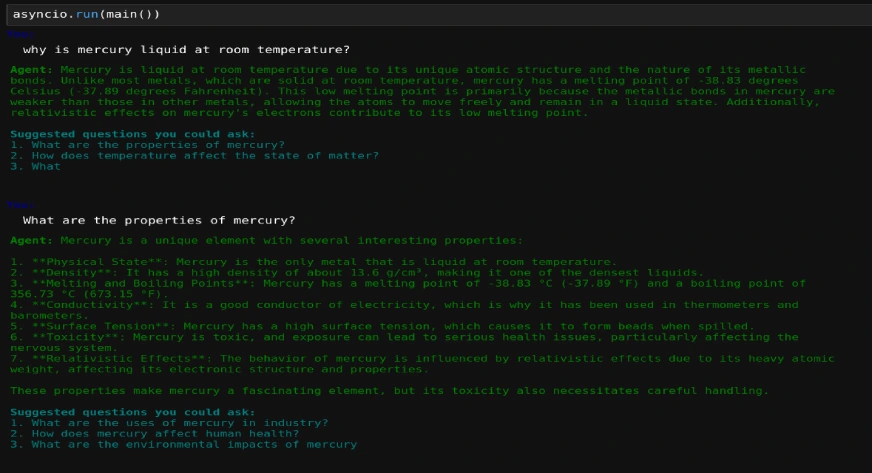
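The streaming loop above redraws only when the partial message has actually changed. The following library-free sketch isolates that pattern with a simulated stream; `fake_stream` and `collect_updates` are hypothetical names, and the repeated yields are deliberate so that the change check has something to filter out.

```python
import asyncio
from typing import AsyncIterator, List


async def fake_stream(text: str) -> AsyncIterator[str]:
    """Simulated stream: yields a growing prefix, each one twice."""
    words = text.split()
    for end in range(1, len(words) + 1):
        partial = " ".join(words[:end])
        yield partial
        yield partial  # Deliberate duplicate, as a stream may repeat a state.
        await asyncio.sleep(0)  # Hand control back to the event loop.


async def collect_updates(text: str) -> List[str]:
    updates: List[str] = []
    current = ""
    async for partial in fake_stream(text):
        # Record an update only when the content is non-empty and new.
        if partial and partial != current:
            current = partial
            updates.append(current)
    return updates


updates = asyncio.run(collect_updates("mercury is liquid"))
print(updates)
```

Each recorded update corresponds to one redraw of the live display, so skipping unchanged partials avoids redundant screen refreshes.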

Adding Custom Output Schema in Atomic Agents

Let’s see how to add a custom output schema, which is useful for getting structured output from the agent.

Step 1: Define the class as shown here.

    from typing import List
    from pydantic import Field
    from atomic_agents.lib.base.base_io_schema import BaseIOSchema

    class CustomOutputSchema(BaseIOSchema):
        """This schema represents the response generated by the chat agent, including suggested follow-up questions."""

        chat_message: str = Field(
            ...,
            description="The chat message exchanged between the user and the chat agent.",
        )
        suggested_user_questions: List[str] = Field(
            ...,
            description="A list of suggested follow-up questions the user could ask the agent.",
        )
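To show what a structured output schema buys you, the sketch below checks a JSON reply against the same two fields using only the standard library. `ToyOutput` and `parse_output` are hypothetical stand-ins; in the article's code, pydantic and instructor perform this validation, and more thoroughly.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class ToyOutput:
    """Hypothetical mirror of the two fields in the schema above."""

    chat_message: str
    suggested_user_questions: List[str]


def parse_output(raw_json: str) -> ToyOutput:
    data = json.loads(raw_json)
    message = data.get("chat_message")
    questions = data.get("suggested_user_questions")
    # Reject replies that do not match the expected shape.
    if not isinstance(message, str):
        raise ValueError("chat_message must be a string")
    if not isinstance(questions, list) or not all(isinstance(q, str) for q in questions):
        raise ValueError("suggested_user_questions must be a list of strings")
    return ToyOutput(chat_message=message, suggested_user_questions=questions)


raw = json.dumps(
    {
        "chat_message": "Mercury has weak metallic bonding.",
        "suggested_user_questions": ["Why is the bonding weak?", "Is gallium similar?"],
    }
)
parsed = parse_output(raw)
print(parsed.suggested_user_questions)
```

With a validated object in hand, downstream code can read `suggested_user_questions` directly instead of parsing free text.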

Step 2: Define the custom system prompt.

    custom_system_prompt = SystemPromptGenerator(
        background=[
            "This assistant is a knowledgeable AI designed to be helpful, friendly, and informative.",
            "It has a wide range of knowledge on various topics and can engage in diverse conversations.",
        ],
        steps=[
            "Analyze the user's input to understand the context and intent.",
            "Formulate a relevant and informative response based on the assistant's knowledge.",
            "Generate 3 suggested follow-up questions for the user to explore the topic further.",
        ],
        output_instructions=[
            "Provide clear, concise, and accurate information in response to user queries.",
            "Maintain a friendly and professional tone throughout the conversation.",
            "Conclude each response with 3 relevant suggested questions for the user.",
        ],
    )

Now we can define the client, agent, and loop for the stream as we have done before.

Step 3: Define the client, agent, and loop.

    client = instructor.from_openai(openai.AsyncOpenAI())
    memory = AgentMemory(max_messages=50)
    agent = BaseAgent(
        config=BaseAgentConfig(
            client=client,
            model="gpt-4o-mini",
            temperature=0.2,
            system_prompt_generator=custom_system_prompt,
            memory=memory,
            output_schema=CustomOutputSchema
        )
    )

    async def main():
        # Start an infinite loop to handle user inputs and agent responses
        while True:
            # Prompt the user for input with a styled prompt
            user_input = console.input("[bold blue]You:[/bold blue] ")
            # Check if the user wants to exit the chat
            if user_input.lower() in ["/exit", "/quit"]:
                console.print("Exiting chat...")
                break
            # Process the user's input through the agent and get the streaming response
            input_schema = BaseAgentInputSchema(chat_message=user_input)
            console.print()  # Add newline before response
            # Use Live display to show streaming response
            with Live("", refresh_per_second=4, auto_refresh=True) as live:
                current_response = ""
                current_questions: List[str] = []
                async for partial_response in agent.stream_response_async(input_schema):
                    if hasattr(partial_response, "chat_message") and partial_response.chat_message:
                        # Update the message part
                        if partial_response.chat_message != current_response:
                            current_response = partial_response.chat_message
                        # Update questions if available
                        if hasattr(partial_response, "suggested_user_questions"):
                            current_questions = partial_response.suggested_user_questions
                        # Combine all elements for display
                        display_text = Text.assemble(("Agent: ", "bold green"), (current_response, "green"))
                        # Add questions if we have them
                        if current_questions:
                            display_text.append("\n\n")
                            display_text.append("Suggested questions you could ask:\n", style="bold cyan")
                            for i, question in enumerate(current_questions, 1):
                                display_text.append(f"{i}. {question}\n", style="cyan")
                        live.update(display_text)
            console.print()

    asyncio.run(main())

The output is as follows:

Conclusion

In this article, we have seen how we can build agents using individual components. Atomic Agents provides a streamlined, modular framework that gives developers full control over each component of their AI agents. By emphasizing simplicity and transparency, it allows for highly customizable agent solutions without the complexity of high-level abstractions. This makes Atomic Agents an excellent choice for those seeking hands-on, adaptable AI development. As AI agent development evolves, we will see more features coming to Atomic Agents, offering a minimalist approach for building clear, tailored solutions.


Frequently Asked Questions

A. Atomic Agents emphasizes a modular, low-level approach, allowing developers to directly manage each component. Unlike high-level frameworks, it offers more control and transparency, making it ideal for building highly customized agents.

A. Yes, Atomic Agents is compatible with various LLMs, including GPT-4o. By integrating with APIs like OpenAI’s, you can leverage these models within the framework to build responsive and intelligent agents.

A. Atomic Agents includes memory management components that allow agents to retain past interactions. This enables context-aware conversations, where the agent can remember earlier messages and build on them for a cohesive user experience.

A. Yes, Atomic Agents supports custom system prompts, allowing you to define specific response styles and behaviors for your agent, making it adaptable to various conversational contexts or professional tones.

A. While Atomic Agents is lightweight and developer-friendly, it is still a new framework that needs further exploration and testing before production use. Its modular structure supports scaling and allows developers to build, test, and deploy reliable AI agents efficiently.