YOLO (You Only Look Once) is a family of real-time object detection machine-learning algorithms. Object detection is a computer vision task that uses neural networks to localize and classify objects in images. This task has a wide range of applications, from medical imaging to self-driving cars. Several machine-learning algorithms are used for object detection, one of which is convolutional neural networks (CNNs).

CNNs are the basis of every YOLO model, and researchers and engineers use these models for tasks like object detection and segmentation. YOLO models are open source and widely used in the field. They have improved from one version to the next, resulting in better accuracy, performance, and more capabilities. This article explores the entire YOLO family, from the original to the latest, covering their architecture, use cases, and demos.

About us: Viso Suite is the complete computer vision platform for enterprises. Within the platform, teams can seamlessly build, deploy, manage, and scale real-time applications for implementation across industries. Viso Suite is use-case-agnostic, meaning that it performs all visual AI-related tasks, including people counting, defect detection, and safety monitoring. To learn more, book a demo with our team.

YOLOv1: The Original

Before YOLO, researchers used convolutional neural network (CNN)-based approaches like R-CNN and Fast R-CNN. These approaches used a two-step process: they first proposed candidate regions that might contain objects and then classified those regions, using regression to refine the bounding boxes. This was slow and resource-intensive, and YOLO revolutionized object detection by collapsing it into a single step. When Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi introduced the first YOLO in 2016, it overcame most problems of traditional object detection algorithms with a new and improved architecture.

Architecture

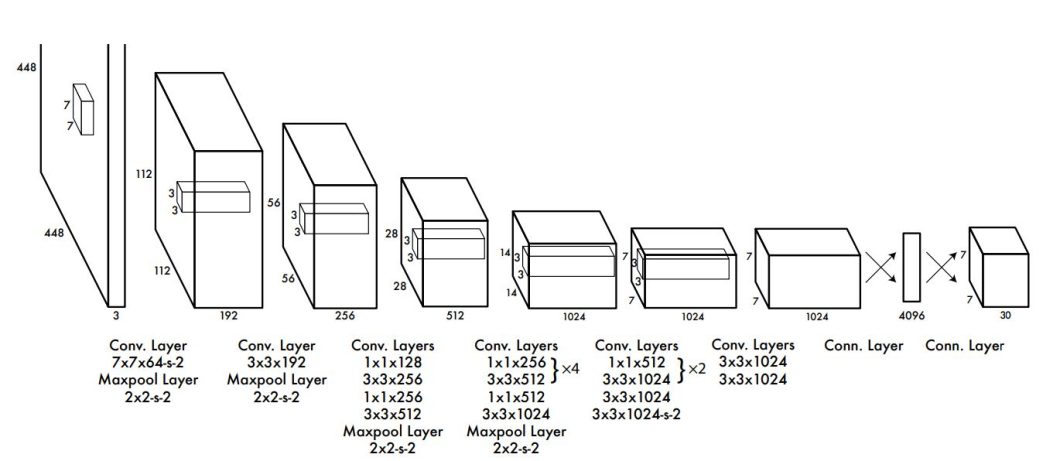

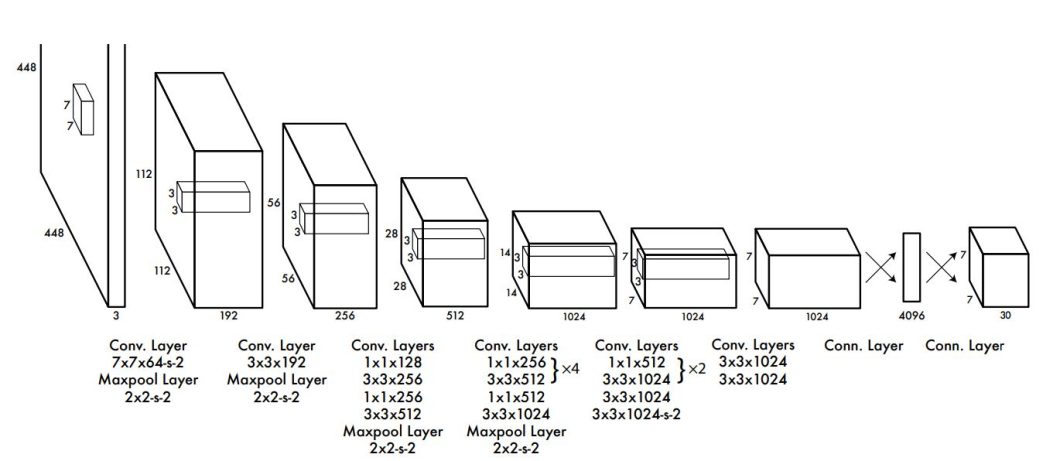

The original YOLO architecture consisted of 24 convolutional layers followed by 2 fully connected layers, inspired by the GoogLeNet model for image classification. This single-network design was the first of its kind at the time.

The initial convolutional layers of the network extract features from the image, while the fully connected layers predict the output probabilities and coordinates. This means that both bounding-box prediction and classification happen in a single step. This one-step process streamlines the operation and achieves real-time efficiency. In addition, the YOLO architecture used the following optimization techniques.

- Leaky ReLU Activation: Leaky ReLU helps prevent the “dying ReLU” problem, where neurons can get stuck in an inactive state during training.

- Dropout Regularization: YOLOv1 applies dropout after the first fully connected layer to prevent overfitting.

- Data Augmentation: YOLOv1 trains with random scaling and translations of up to 20% of the image size, along with random adjustments to image exposure and saturation.

How It Works

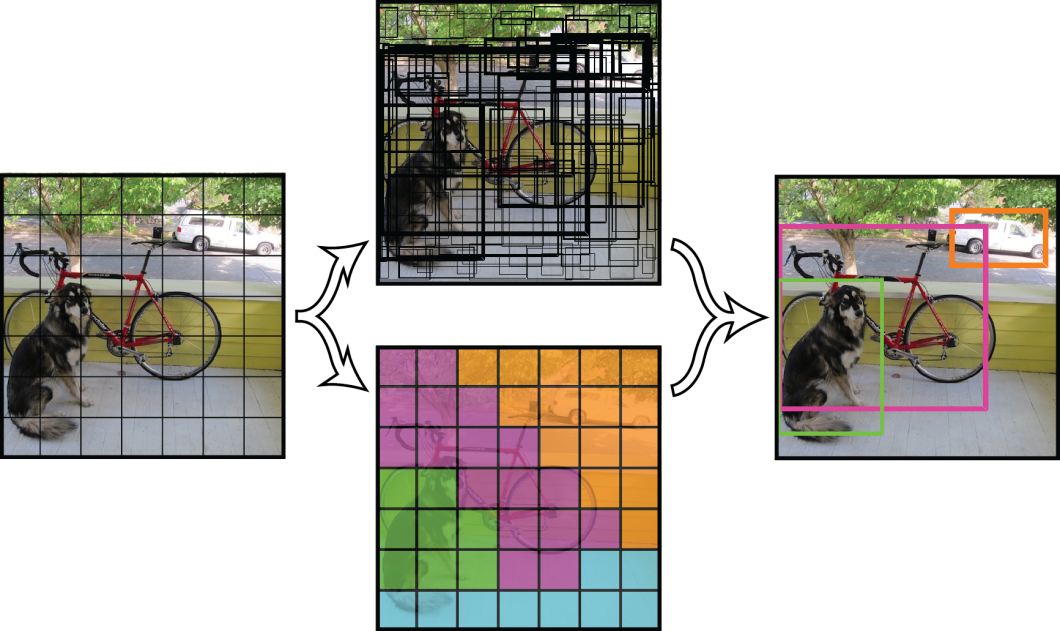

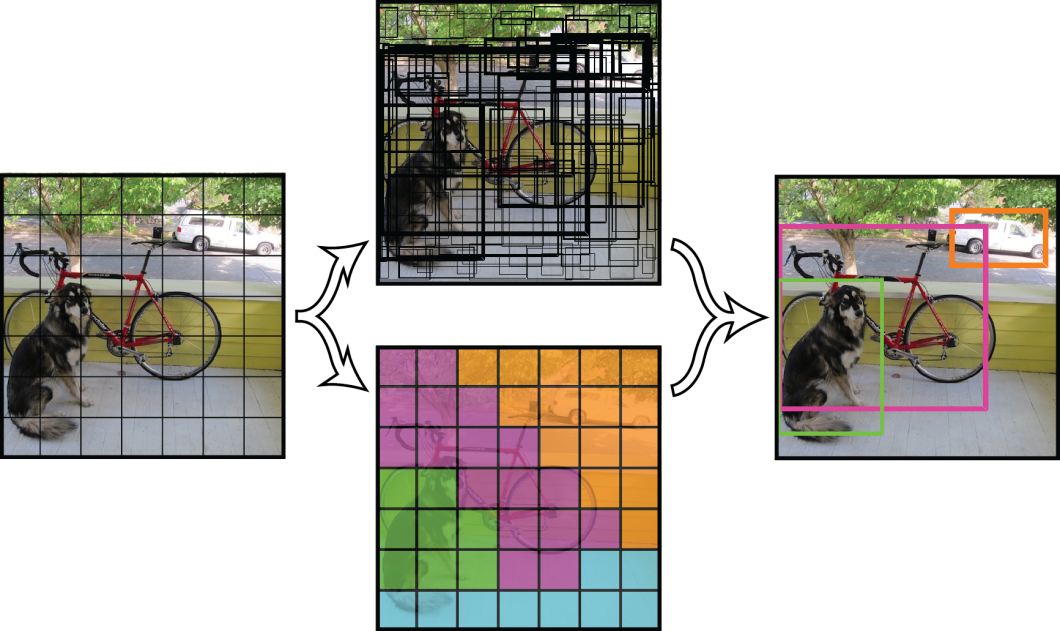

The essence of YOLO models is treating object detection as a regression problem. The YOLO approach is to apply a single convolutional neural network (CNN) to the full image. This network divides the image into regions and predicts bounding boxes and probabilities for each region.

These bounding boxes are weighted by the predicted probabilities, and the resulting scores can then be thresholded to show only the high-scoring detections.
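To make this concrete, here is a minimal NumPy sketch of how a YOLOv1-style output can be turned into scored detections. It assumes the PASCAL VOC setting from the YOLOv1 paper (an S × S grid with S = 7, B = 2 boxes per cell, and C = 20 classes, giving a 7 × 7 × 30 output) and the paper's definitions: box confidence is Pr(Object) × IoU, and the class-specific score is Pr(Class | Object) × confidence. The per-cell memory layout, the 0.2 threshold, and the helper name class_specific_scores are illustrative assumptions, not the original Darknet code.

```python
import numpy as np

S, B, C = 7, 2, 20  # grid size, boxes per cell, classes (PASCAL VOC setting)


def class_specific_scores(output, threshold=0.2):
    """Return (row, col, box, class, score) tuples for predictions above threshold.

    output has shape (S, S, B * 5 + C); each cell holds B boxes as
    (x, y, w, h, confidence) followed by C conditional class probabilities.
    """
    boxes = output[..., : B * 5].reshape(S, S, B, 5)
    confidence = boxes[..., 4]         # Pr(Object) * IoU, shape (S, S, B)
    class_probs = output[..., B * 5:]  # Pr(Class | Object), shape (S, S, C)
    # Class-specific score = Pr(Class | Object) * confidence, shape (S, S, B, C)
    scores = confidence[..., None] * class_probs[:, :, None, :]
    keep = np.argwhere(scores > threshold)
    return [(int(r), int(c), int(b), int(k), float(scores[r, c, b, k]))
            for r, c, b, k in keep]


# Toy output: one confident box in cell (3, 4) whose cell favors class 11.
output = np.zeros((S, S, B * 5 + C))  # 7 x 7 x 30
output[3, 4, 4] = 0.9                 # confidence of box 0 in cell (3, 4)
output[3, 4, B * 5 + 11] = 0.8        # Pr(class 11 | object) in that cell
print(class_specific_scores(output))  # one detection, score ≈ 0.72
```

Each cell shares one set of class probabilities across its B boxes, which is exactly the “one class per cell” constraint discussed in the limitations below.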

YOLOv1 divides the input image into an S × S grid, and each grid cell is responsible for predicting bounding boxes and class probabilities for objects whose center falls inside it. Each bounding-box prediction includes a confidence score indicating the likelihood that an object is present in the box. The paper defines this confidence as Pr(Object) × IoU, that is, the probability that the box contains an object multiplied by the intersection over union (IoU) between the predicted box and the ground truth, and it can be used to filter predictions. Despite the novelty and speed of the YOLO approach, it faced some limitations, such as the following.

- Generalization: YOLOv1 struggles to accurately detect objects that appear in new or unusual aspect ratios or configurations not seen during training.

- Spatial constraints: In YOLOv1, each grid cell predicts only two boxes and can only have one class, which makes it struggle with small objects that appear in groups, such as flocks of birds.

- Loss Function Limitations: The YOLOv1 loss function treats errors the same in small and large bounding boxes. A small error in a large box is generally harmless, but the same error in a small box has a much greater effect on IoU.

- Localization Errors: One major issue with YOLOv1 was accuracy; it often mislocalizes objects in the image.
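The loss-function limitation is easy to check numerically. The plain-Python sketch below (the iou helper and the box sizes are illustrative) applies the same 5-pixel shift to a 20 × 20 box and a 200 × 200 box: the error barely moves IoU for the large box but drops the small box's IoU from 1.0 to 0.6. It also shows the partial remedy described in the YOLOv1 paper, predicting the square roots of box width and height, which makes the same absolute error count for more on small boxes.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


# The same 5-pixel horizontal shift applied to a small and a large box.
small, large = (0, 0, 20, 20), (0, 0, 200, 200)
print(iou(small, (5, 0, 25, 20)))    # 0.6
print(iou(large, (5, 0, 205, 200)))  # ≈ 0.951

# YOLOv1's partial fix: regress sqrt(w) and sqrt(h) instead of w and h, so the
# same 5-pixel width error produces a larger residual on the small box.
print(math.sqrt(25) - math.sqrt(20))    # ≈ 0.528
print(math.sqrt(205) - math.sqrt(200))  # ≈ 0.176
```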

Now that we’ve covered the basic mechanism of YOLO, let’s look at how the researchers upgraded the model’s capabilities in the next version.

YOLOv2 (YOLO9000)

YOLO9000 came one year after YOLOv1 to address the limitations of object detection datasets at the time. YOLO9000 was named that way because it can detect over 9000 different object categories. This was transformative in terms of accuracy and generalization.

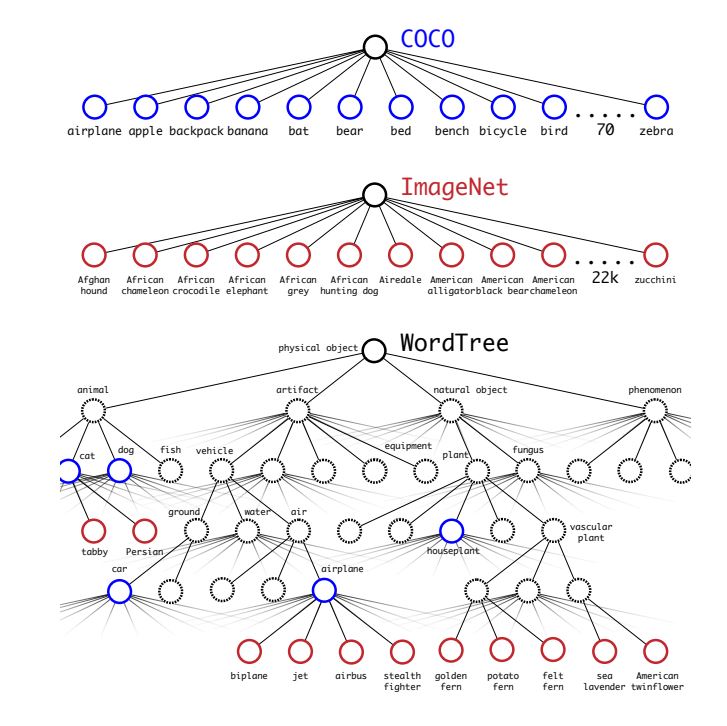

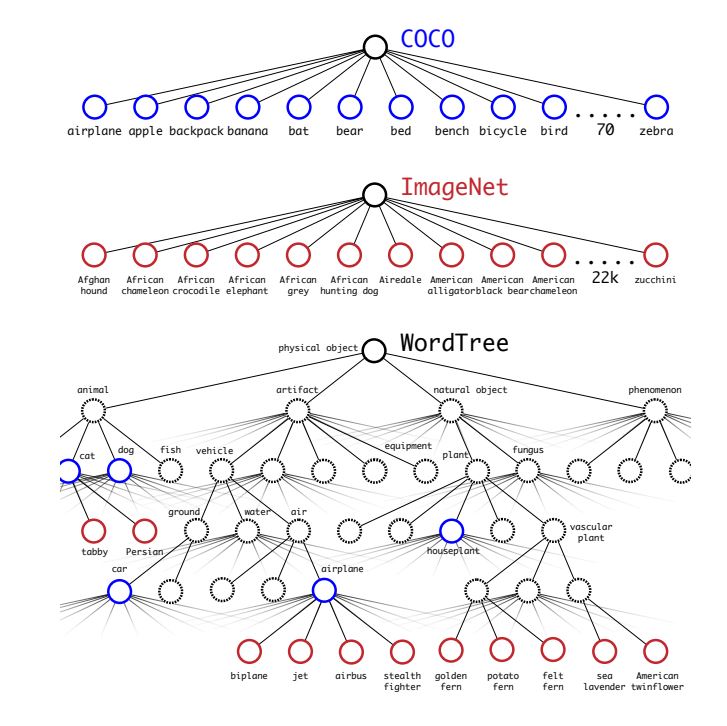

The researchers behind YOLO9000 proposed a unique joint training algorithm that trains object detectors on both detection and classification data. This method leverages labeled detection images to learn to precisely localize objects and uses classification images to increase its vocabulary and robustness.

By combining features from different datasets, for both classification and detection, YOLO9000 showed a great improvement over its predecessor, YOLOv1. As the paper’s title put it, YOLO9000 was presented as better, faster, and stronger.

- Hierarchical Classification: A method used in YOLO9000 based on the WordTree structure, allowing better generalization to unseen objects and a larger vocabulary, or range of objects.

- Architectural Changes: YOLO9000 introduced a few changes, like batch normalization for faster and more stable training, anchor boxes (with box priors chosen by k-means clustering on the training boxes) instead of predicting coordinates directly with fully connected layers, and Darknet-19 as the backbone. Darknet-19 is a CNN with 19 convolutional layers designed to be accurate and fast.

- Joint Training: An algorithm that allows the model to use the hierarchical classification framework and learn from both detection datasets like COCO and classification datasets like ImageNet.
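To illustrate how anchor boxes are used, the sketch below decodes one raw prediction into a box with the parameterization from the YOLOv2 paper: b_x = σ(t_x) + c_x, b_y = σ(t_y) + c_y, b_w = p_w·e^(t_w), b_h = p_h·e^(t_h), where (c_x, c_y) is the cell offset and (p_w, p_h) is the anchor prior. The stride of 32 (a 13 × 13 grid for a 416 × 416 input) matches YOLOv2’s setup, while the prior size and the function name decode_box are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(t, cell, prior, stride=32):
    """Decode raw offsets t = (tx, ty, tw, th) into a pixel-space box centre and size.

    cell:   (cx, cy), grid coordinates of the cell's top-left corner
    prior:  (pw, ph), anchor-box prior width and height in grid units
    stride: pixels per grid cell (32 gives a 13 x 13 grid for a 416 x 416 input)
    """
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = prior
    bx = sigmoid(tx) + cx   # the sigmoid keeps the centre inside its own cell
    by = sigmoid(ty) + cy
    bw = pw * math.exp(tw)  # the prior's size is scaled up or down
    bh = ph * math.exp(th)
    return bx * stride, by * stride, bw * stride, bh * stride


# Zero offsets put the box at the cell centre with exactly the prior's size.
print(decode_box((0.0, 0.0, 0.0, 0.0), cell=(6, 6), prior=(3.0, 2.0)))
# (208.0, 208.0, 96.0, 64.0)
```

Bounding the centre offset with a sigmoid is what keeps each prediction tied to its own cell, which the YOLOv2 paper credits with making training more stable than unconstrained offsets.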

Still, the YOLO family continued to improve; next, we will look at YOLOv3.

YOLOv3: Little Changes, Big Effects

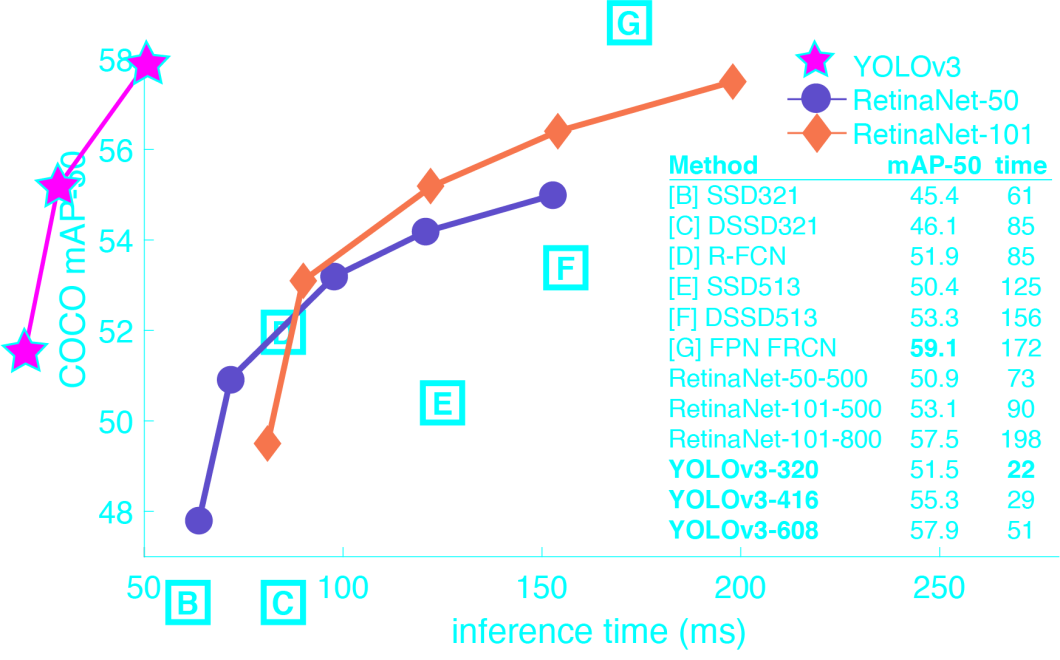

In 2018, the researchers behind YOLO came up with the next version, YOLOv3. While YOLO9000 was a state-of-the-art model, object detection in general still had its limitations. Improving accuracy and speed is always one of the goals of object detection models, and that was the aim of YOLOv3: small adjustments here and there that add up to a better-performing model.

The improvements start with the bounding boxes: YOLOv3 still uses anchor boxes, but it introduced multi-scale predictions, predicting bounding boxes at three different scales. This makes it more effective at detecting objects of different sizes. This, along with other improvements, put YOLO back on the map of state-of-the-art models, with strong speed and accuracy trade-offs.

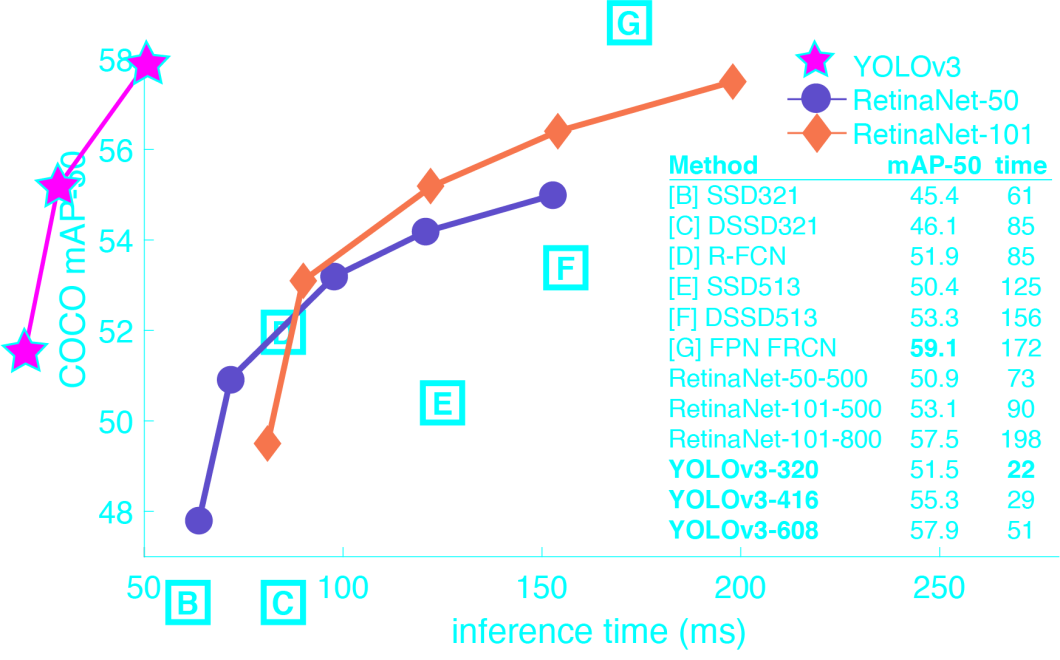

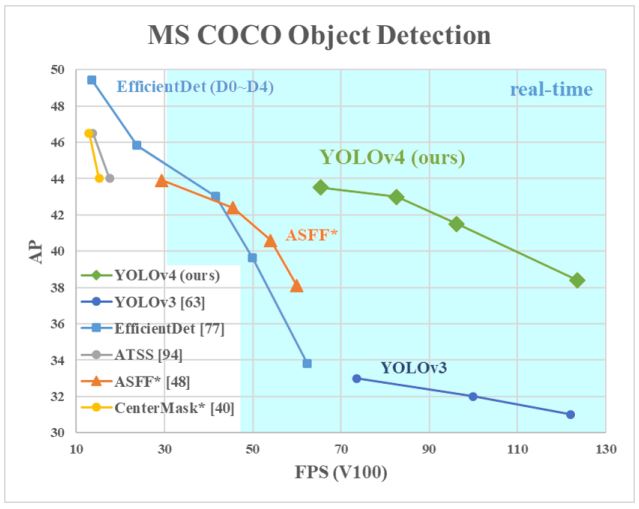

As the graph shows, YOLOv3 offered some of the best speed and accuracy trade-offs on the mean average precision (mAP-50) metric. Additionally, YOLOv3 introduced the following improvements.

- Backbone: YOLOv3 uses a better and bigger CNN backbone, Darknet-53, which consists of 53 convolutional layers and is a hybrid of Darknet-19 and residual networks (ResNets), but is more efficient than ResNet-101 or ResNet-152.

- Predictions Across Scales: YOLOv3 predicts bounding boxes at three different scales, similar to feature pyramid networks. This allows the model to detect objects of various sizes more effectively.

- Classifier: Independent logistic classifiers are used instead of a softmax function, allowing multiple labels per box.

- Dataset: The researchers trained YOLOv3 on the COCO dataset only.
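The classifier change is easiest to see side by side. In this NumPy sketch with made-up logits, softmax forces overlapping labels such as “person” and “woman” (the YOLOv3 paper’s own example of overlapping labels) to share probability mass, while independent logistic (sigmoid) outputs can score both highly, so a per-class threshold can assign more than one label to the same box. The 0.5 threshold is an arbitrary choice for illustration.

```python
import numpy as np


def softmax(logits):
    e = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return e / e.sum()


def sigmoid(logits):
    return 1.0 / (1.0 + np.exp(-logits))


# Hypothetical logits for one box over three labels: "person", "woman", "car".
logits = np.array([3.0, 3.0, -2.0])

print(softmax(logits))  # ≈ [0.498, 0.498, 0.003]: labels compete for one unit of mass
print(sigmoid(logits))  # ≈ [0.953, 0.953, 0.119]: each label is scored independently

# With a 0.5 threshold, independent logistic outputs can assign both overlapping labels.
print(np.flatnonzero(sigmoid(logits) > 0.5))  # [0 1]
```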

Also, while less significant, YOLOv3 fixed a little data-loading bug in YOLOv2, which helped by about 2 mAP points. Up next, let’s see how the YOLO model evolved into YOLOv4.

YOLOv4: Optimization Is Key

Continuing the legacy of the YOLO family, YOLOv4 introduced several improvements and optimizations. Let’s dive deeper into the YOLOv4 mechanism.

Architecture

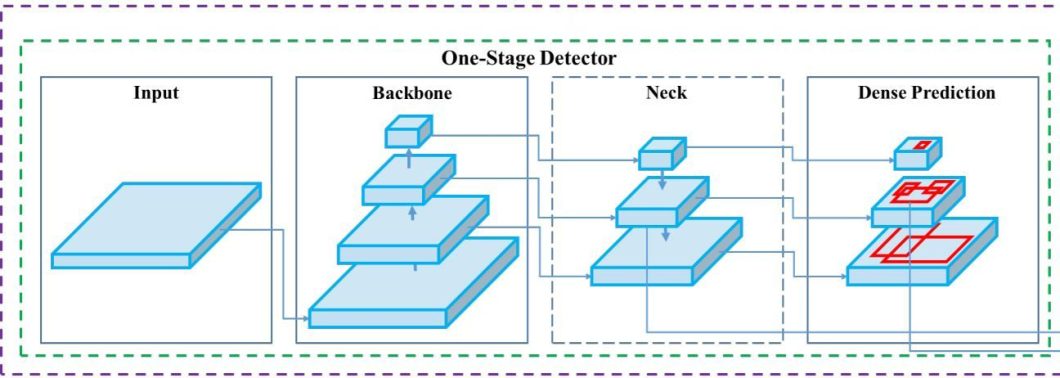

The most notable change is the three-part architecture. While YOLOv4 is still a one-stage object detection network, its architecture involves three main components: backbone, neck, and head. This split was a crucial step in the evolution of YOLO, as each part has its own function.

The backbone is the feature-extraction part, usually a CNN that learns features across layers. The neck then refines and combines the extracted features from different levels of the backbone, creating a rich and informative feature representation. Finally, the head does the actual prediction and outputs bounding boxes, class probabilities, and objectness scores.

For YOLOv4, the researchers used the following components for the backbone, neck, and head.

- Backbone: CSPDarknet53, a convolutional neural network backbone for object detection that applies the Cross Stage Partial Network (CSPNet) strategy to Darknet-53.

- Neck: Spatial Pyramid Pooling (SPP) and a modified Path Aggregation Network (PAN), which produced more granular feature extraction, better training, and better performance.

- Head: YOLOv4 uses the anchor-based head of YOLOv3.

That was not all YOLOv4 introduced; there was a lot of work on optimization and on choosing the right methods and techniques. Let’s explore those next.

Optimization

The YOLOv4 paper groups its methods into two bags: the Bag of Freebies (BoF), methods that change only the training strategy or training cost without adding inference cost, and the Bag of Specials (BoS), plug-in modules and post-processing methods that slightly increase inference cost but significantly improve accuracy. These methods were instrumental to YOLOv4’s performance; in this section, we will explore the essential ones the researchers used.

- Mosaic Data Augmentation: This data augmentation method combines four training images into one, enabling the model to learn to detect objects in a wider variety of contexts and reducing the need for large mini-batch sizes. The researchers used it as part of the BoF for backbone training.

- Self-Adversarial Training (SAT): A two-stage data augmentation technique in which the network first alters the input image to trick itself into “thinking” there is no object, and then trains on this modified image to improve robustness and generalization. The researchers used it as part of the BoF for the detector.

- Cross mini-Batch Normalization (CmBN): A modification of Cross-Iteration Batch Normalization (CBN) that collects statistics only between the mini-batches within a single batch, making training better suited to a single GPU. The researchers used it as part of the BoF for the detector.

- Modified Spatial Attention Module (SAM): The researchers changed the original SAM from spatial-wise attention to point-wise attention, improving the model’s ability to focus on important features at little extra computational cost. It is used as part of the BoS, as an additional block for the detector.
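A simplified NumPy sketch of the idea behind Mosaic follows: it tiles four equally sized images into a 2 × 2 grid and shifts each image’s boxes into the mosaic’s coordinates. Real implementations typically also pick a random mosaic centre and rescale and crop the tiles, which this sketch deliberately omits; simple_mosaic is a hypothetical helper name.

```python
import numpy as np


def simple_mosaic(images, boxes_per_image):
    """Tile four equally sized H x W x 3 images into one 2H x 2W mosaic.

    boxes_per_image holds four sets of (x1, y1, x2, y2) pixel boxes, one per image.
    Returns the mosaic and every box shifted into mosaic coordinates.
    """
    if len(images) != 4 or len(boxes_per_image) != 4:
        raise ValueError("mosaic needs exactly four images and four box sets")
    h, w = images[0].shape[:2]
    mosaic = np.zeros((2 * h, 2 * w, 3), dtype=images[0].dtype)
    # (dx, dy) offsets for the top-left, top-right, bottom-left, bottom-right tiles
    offsets = [(0, 0), (w, 0), (0, h), (w, h)]
    shifted = []
    for img, boxes, (dx, dy) in zip(images, boxes_per_image, offsets):
        mosaic[dy:dy + h, dx:dx + w] = img
        boxes = np.asarray(boxes, dtype=float).reshape(-1, 4)
        shifted.append(boxes + np.array([dx, dy, dx, dy], dtype=float))
    return mosaic, np.concatenate(shifted, axis=0)


# Four 4 x 4 "images" filled with 0, 1, 2, 3, each with one box in its top-left corner.
images = [np.full((4, 4, 3), i, dtype=np.uint8) for i in range(4)]
boxes = [[[0, 0, 2, 2]] for _ in range(4)]
mosaic, all_boxes = simple_mosaic(images, boxes)
print(mosaic.shape)  # (8, 8, 3)
print(all_boxes)     # the four boxes, now one per quadrant
```

Because one training sample now contains objects from four scenes, batch-normalization statistics see more varied content per image, which is the reason the paper gives for needing smaller mini-batches.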

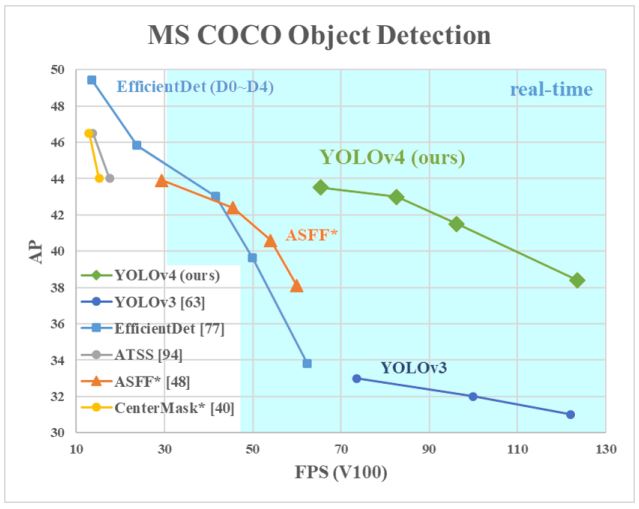

And that is not all: YOLOv4 used many other techniques from the BoS and BoF, such as the Mish activation and cross-stage partial connections (CSP) for the backbone as part of the BoS. All these optimizations resulted in state-of-the-art performance for YOLOv4, especially in speed, but also in accuracy.
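For reference, Mish is defined as x · tanh(softplus(x)), with softplus(x) = ln(1 + e^x). The short NumPy sketch below implements it next to the leaky ReLU used in YOLOv1 (negative slope 0.1, as in the YOLOv1 paper) so the two can be compared; unlike leaky ReLU, Mish is smooth everywhere and slightly non-monotonic for negative inputs.

```python
import numpy as np


def mish(x):
    """Mish activation: x * tanh(softplus(x)), where softplus(x) = ln(1 + e^x)."""
    return x * np.tanh(np.logaddexp(0.0, x))  # logaddexp(0, x) is a stable ln(1 + e^x)


def leaky_relu(x, slope=0.1):
    """Leaky ReLU with a 0.1 negative slope, as used in YOLOv1."""
    return np.where(x > 0, x, slope * x)


x = np.array([-3.0, -1.0, 0.0, 1.0, 3.0])
print(mish(x))        # smooth; small negative outputs for negative inputs, close to x for large x
print(leaky_relu(x))  # piecewise linear with a kink at 0
```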

YOLOv5: The Most Loved

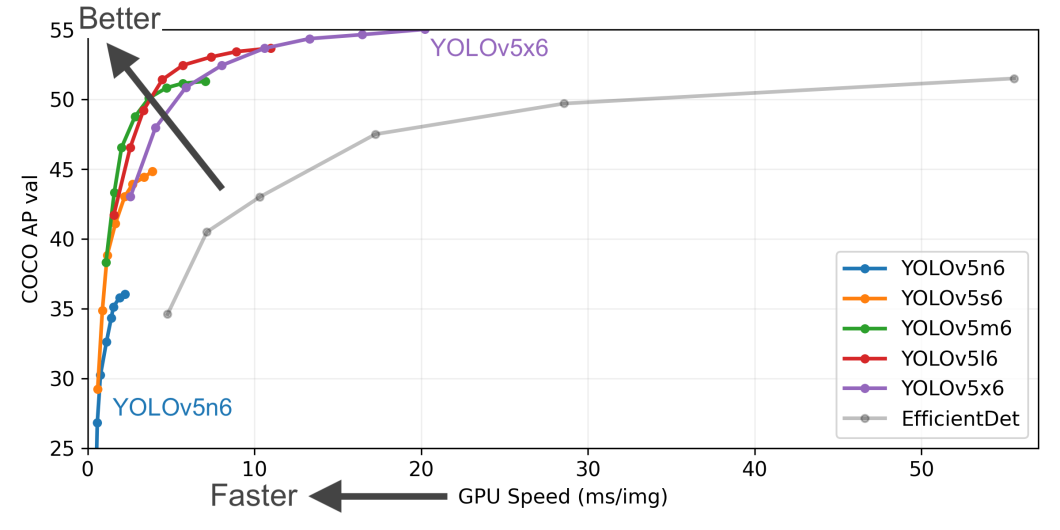

While YOLOv5 did not come with a dedicated research paper, the model has won over developers, engineers, and researchers alike. YOLOv5 came only a few months after YOLOv4; there wasn’t much improvement, but it was slightly faster. Ultralytics designed YOLOv5 for easier implementation, with more detailed documentation and support for multiple languages. Most notably, YOLOv5 was built on PyTorch, making it easy for developers to use.

Its predecessors, by contrast, were harder to implement. Ultralytics presented YOLOv5 as the world’s most loved vision AI. In addition, YOLOv5 came with some great features, such as several model export formats, a training script for training on your own data, and techniques like Test-Time Augmentation (TTA) and Model Ensembling for boosting inference accuracy.

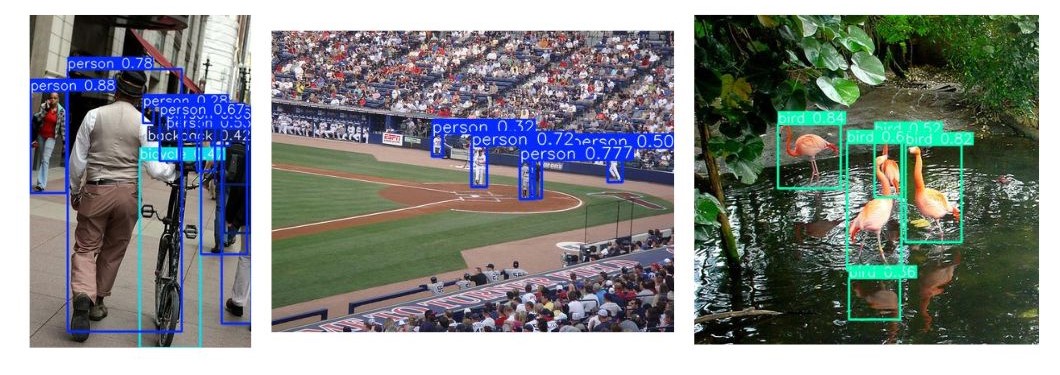

Demo

Since YOLOv5 is very similar to YOLOv4 in architecture and working mechanism but is easier to implement, we can try it out easily. This section tests a pre-trained YOLOv5 model on our own images. The implementation runs in a Google Colab notebook with PyTorch and is easy for beginners. Let’s get started.

```python
import torch

# Download the YOLOv5s model code and weights from the Ultralytics GitHub repo via PyTorch Hub
model = torch.hub.load('ultralytics/yolov5', 'yolov5s', force_reload=True, trust_repo=True)
```

First, we import PyTorch and load the model using torch.hub.load. We will use the YOLOv5s model, which is compact and very fast. Now that we have the model, let’s load some test images and run inference on them.

```python
im = '/content/n09835506_ballplayer.jpeg'  # file, Path, PIL.Image, OpenCV, numpy array, list
results = model(im)  # inference
results.save()  # or .print(), .show(), .save(), .crop(), .pandas()
```

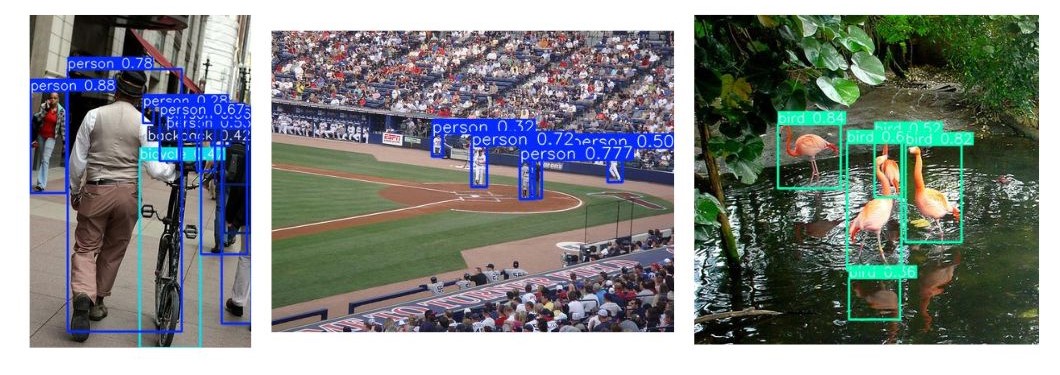

I used some sample images from the ImageNet dataset; the model took around 17 milliseconds, including preprocessing, to predict the sample images. Below are the results for three samples.

Ease of use, continuous updates, a huge community, and good documentation make YOLOv5 the perfect compact model: it runs on light hardware and gives decent accuracy in almost real time. YOLO models continued to evolve after YOLOv5 into YOLOv6; let’s explore that in the next section.

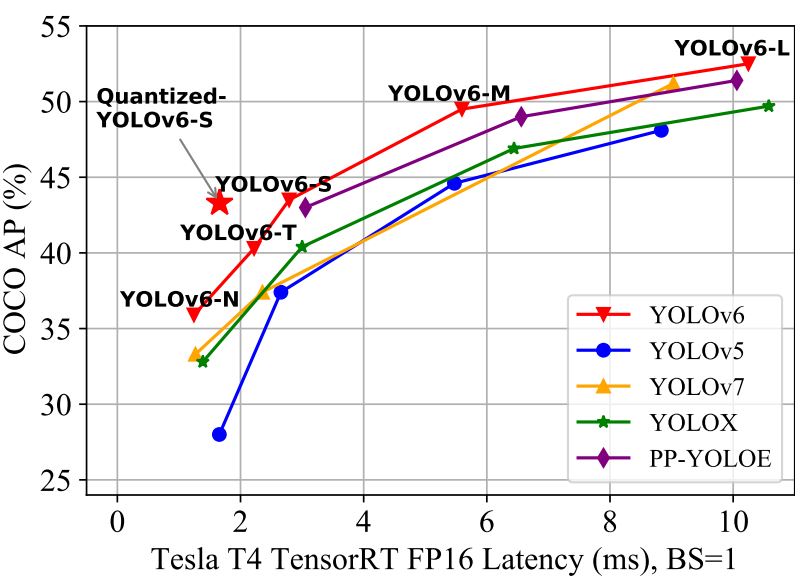

YOLOv6: Industrial Quality

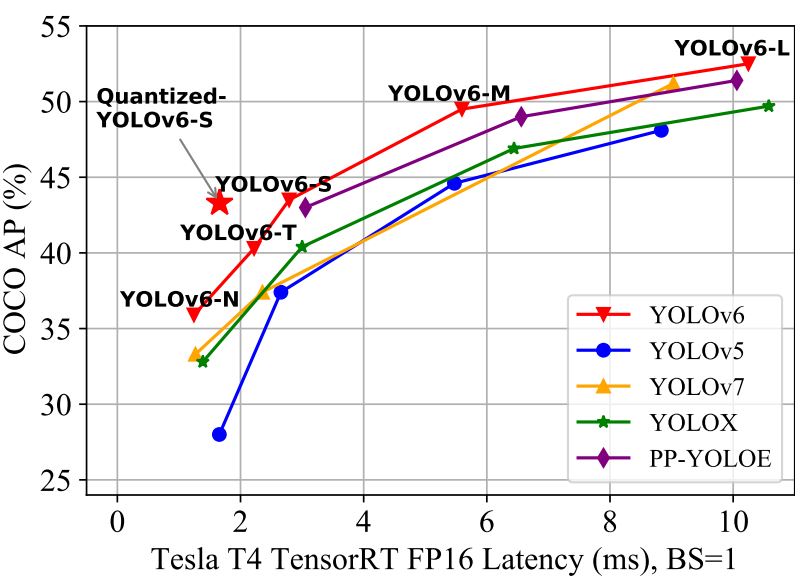

YOLOv6 emerged as a significant evolution in the YOLO series, introducing key architectural and training changes to achieve a better balance between speed and accuracy. Notably, YOLOv6 distinguishes itself by focusing on industrial applications. This industrial focus delivered deployment-ready networks and better consideration of the constraints of real-world environments.

With this balance between speed and accuracy, it can run on commonly used hardware, such as the Tesla T4 GPU, making the deployment of object detection in industrial settings easier than ever. YOLOv6 was not the only model available at the time; YOLOv5, YOLOX, and YOLOv7 were all competing candidates for efficient detectors to deploy. Now, let’s discuss the changes that YOLOv6 introduced.

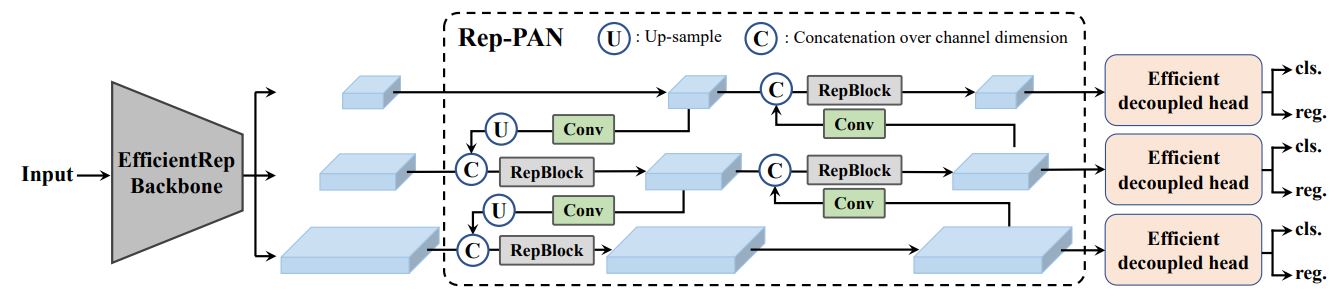

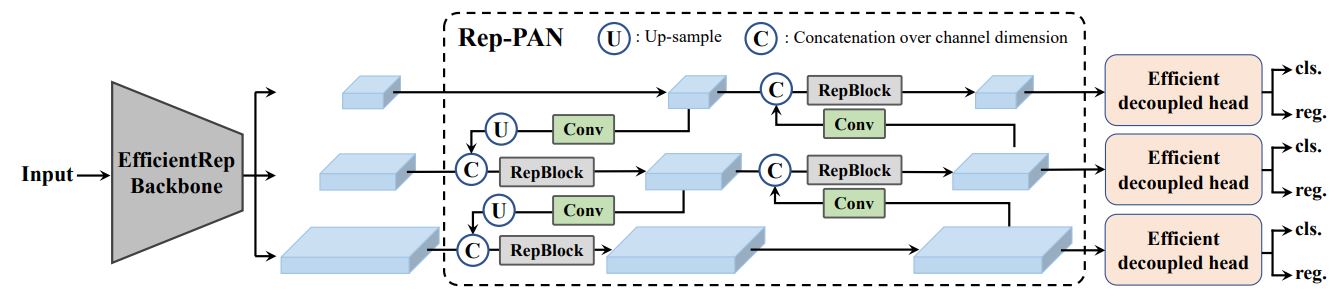

Architecture

- Backbone: The researchers built the backbone using EfficientRep, a hardware-aware CNN that uses RepBlocks for small models (N and S) and CSPStackRep Blocks for larger models (M and L).

- Neck: Uses the Rep-PAN topology, which enhances the modified PAN topology from YOLOv4 and YOLOv5 with RepBlocks or CSPStackRep Blocks. This provides more efficient feature aggregation from different levels of the backbone.

- Head: YOLOv6 introduced the Efficient Decoupled Head, simplifying the design for improved efficiency. It uses a hybrid-channel strategy, reducing the number of middle 3×3 convolutional layers and scaling the width jointly with the backbone and neck.
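RepBlock is built from RepVGG-style blocks, which train with parallel 3×3, 1×1, and identity branches and then fuse them into a single 3×3 convolution for inference (structural re-parameterization). The NumPy sketch below checks that fusion numerically. It assumes batch normalization has already been folded into each branch’s weights and bias (and that the identity branch has none), uses a naive convolution for clarity, and requires equal input and output channels for the identity branch; conv2d and merge_rep_branches are hypothetical helper names, not YOLOv6 functions.

```python
import numpy as np


def conv2d(x, w, b):
    """Naive stride-1 'same' convolution (cross-correlation, as in deep learning).

    x: (C_in, H, W), w: (C_out, C_in, k, k) with odd k, b: (C_out,)
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    h, wd = x.shape[1:]
    out = np.empty((c_out, h, wd))
    for i in range(h):
        for j in range(wd):
            patch = xp[:, i:i + k, j:j + k]  # (C_in, k, k)
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return out


def merge_rep_branches(w3, b3, w1, b1):
    """Fuse 3x3 + 1x1 + identity branches into one 3x3 kernel and bias."""
    w1_as_3x3 = np.pad(w1, ((0, 0), (0, 0), (1, 1), (1, 1)))  # 1x1 kernel at the centre
    w_id = np.zeros_like(w3)
    for ch in range(w3.shape[0]):
        w_id[ch, ch, 1, 1] = 1.0  # identity = 3x3 kernel with a single centre tap
    return w3 + w1_as_3x3 + w_id, b3 + b1


rng = np.random.default_rng(0)
channels, height, width = 4, 6, 6
x = rng.standard_normal((channels, height, width))
w3, b3 = rng.standard_normal((channels, channels, 3, 3)), rng.standard_normal(channels)
w1, b1 = rng.standard_normal((channels, channels, 1, 1)), rng.standard_normal(channels)

training_form = conv2d(x, w3, b3) + conv2d(x, w1, b1) + x  # three parallel branches
w_fused, b_fused = merge_rep_branches(w3, b3, w1, b1)
inference_form = conv2d(x, w_fused, b_fused)                # one fused 3x3 conv
print(np.allclose(training_form, inference_form))           # True
```

The multi-branch form gives richer gradients during training, while the fused single-path form is what makes these blocks fast on hardware like the T4 GPU mentioned above.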

YOLOv6 also incorporates several other techniques to improve performance.

- Label Assignment: Uses Task Alignment Learning (TAL) to address the misalignment between the classification and box regression tasks.

- Self-Distillation: It applies self-distillation to both the classification and regression tasks, improving accuracy even further.

- Loss Function: It employs VariFocal Loss for classification and SIoU or GIoU loss for regression, depending on model size.
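As an illustration of the regression side, here is a plain-Python sketch of the GIoU loss. GIoU subtracts from IoU the fraction of the smallest enclosing box that is not covered by the union of the two boxes, so even non-overlapping predictions get a signal that grows with their distance from the target; plain IoU is 0 for both example boxes below. SIoU adds further angle, distance, and shape terms that this sketch does not cover, and the example boxes are made up.

```python
def giou(a, b):
    """Generalized IoU of two (x1, y1, x2, y2) boxes; ranges from -1 to 1."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    # Area of the smallest axis-aligned box enclosing both a and b
    enclose = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return inter / union - (enclose - union) / enclose


def giou_loss(pred, target):
    return 1.0 - giou(pred, target)


target = (0, 0, 1, 1)
near, far = (2, 0, 3, 1), (9, 0, 10, 1)  # neither overlaps the target, so IoU = 0 for both
print(giou_loss(near, target))  # ≈ 1.333
print(giou_loss(far, target))   # ≈ 1.8: farther boxes are penalized more
```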

YOLOv6 represents a refined and enhanced approach to object detection, building on the strengths of its predecessors while introducing innovative features to address the challenges of real-world deployment. Its focus on efficiency, accuracy, and industrial applicability makes it a valuable tool for industrial applications.

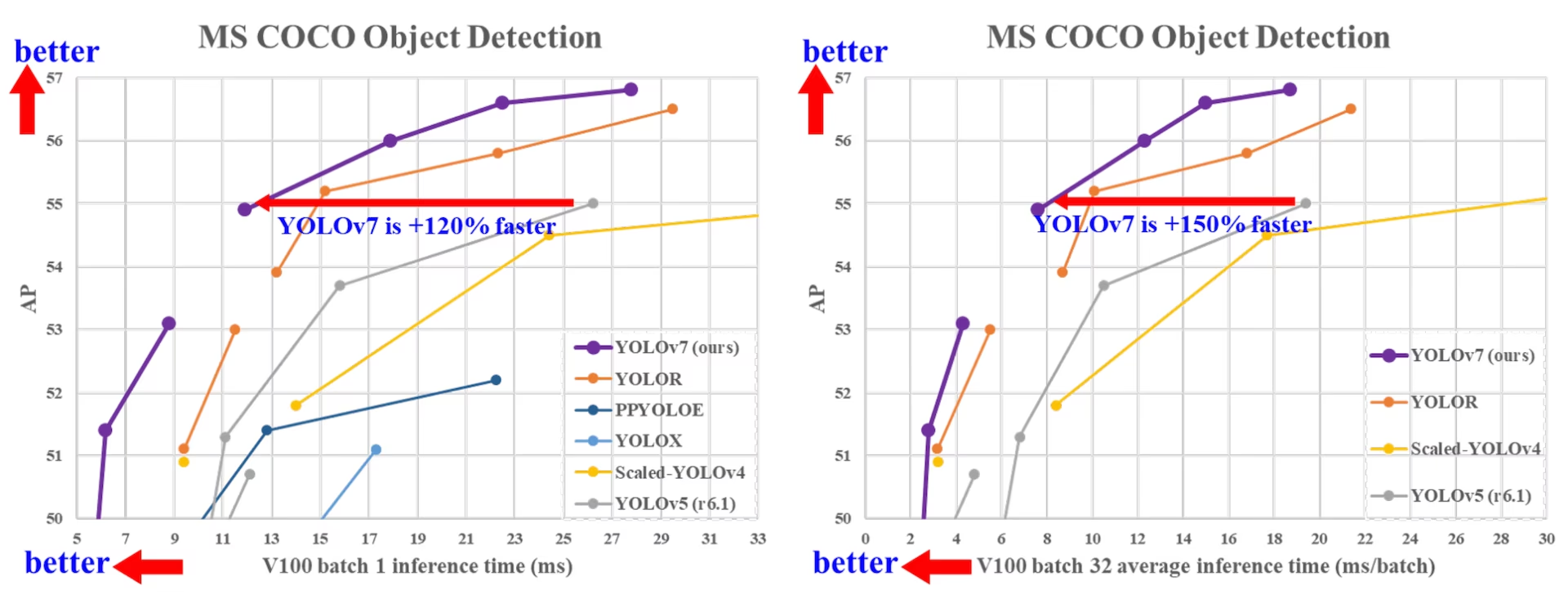

YOLOv7: Trainable bag-of-freebies

While YOLOv6 was technically released before YOLOv7, the production version of YOLOv6 came after YOLOv7 and surpassed it in performance. Still, YOLOv7 introduced a novel concept it calls the trainable bag of freebies (BoF). This consists of a series of fine-grained refinements rather than a complete overhaul.

These refinements focus primarily on optimizing the training process and improving the model’s ability to learn effective representations without significantly increasing computational cost. The following are some of the key features YOLOv7 introduced.

- Model Re-parameterization: YOLOv7 proposes a planned re-parameterized model, a strategy applicable to layers in different networks that is guided by the concept of the gradient propagation path.

- Dynamic Label Assignment: Training a model with multiple output layers raises a new issue: “How to assign dynamic targets for the outputs of different branches?” To solve this problem, YOLOv7 introduces a new label assignment method called coarse-to-fine lead guided label assignment.

- Extended and Compound Scaling: YOLOv7 proposes “extend” and “compound scaling” methods for the object detector that can effectively use parameters and computation.

YOLOv7 focuses on fine-grained refinements and optimization techniques to improve the performance of real-time object detectors. Its emphasis on the trainable bag of freebies, deep supervision, and architectural improvements leads to a significant boost in accuracy without sacrificing speed, making it a valuable advance in the YOLO series. However, the evolution kept going, producing YOLOv8, which is our next subject.

YOLOv8

YOLOv8 is an iteration in the YOLO series of real-time object detectors, offering cutting-edge performance in terms of accuracy and speed. Like YOLOv5, YOLOv8 does not have an official paper, but it is a user-friendly, enhanced YOLO object detection model. Developed by Ultralytics, YOLOv8 introduces new features and optimizations that make it an ideal choice for various object detection tasks across a wide range of applications. Here is a quick overview of its features.

- Advanced Backbone and Neck Architectures

- Anchor-free Split Ultralytics Head: YOLOv8 adopts an anchor-free split Ultralytics head, which contributes to better accuracy and a more efficient detection process compared to anchor-based approaches.

- Optimized Accuracy-Speed Tradeoff
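Anchor-free here means that each feature-map location predicts a box directly instead of refining a predefined anchor box. One common parameterization, which YOLOv8’s head follows, is to predict the distances from the location’s centre to the left, top, right, and bottom box edges. The NumPy sketch below shows only that decoding step under this assumption: Ultralytics’ actual head predicts each distance as a discrete distribution trained with Distribution Focal Loss, which is omitted here, and make_anchor_points and decode_ltrb are hypothetical helper names.

```python
import numpy as np


def make_anchor_points(grid_h, grid_w, stride):
    """Pixel centres of every cell on a grid_h x grid_w feature map, row by row."""
    ys, xs = np.meshgrid(np.arange(grid_h), np.arange(grid_w), indexing="ij")
    points = np.stack([(xs + 0.5) * stride, (ys + 0.5) * stride], axis=-1)
    return points.reshape(-1, 2)


def decode_ltrb(points, distances, stride):
    """Convert per-point (left, top, right, bottom) distances in stride units to x1y1x2y2 boxes."""
    d = distances * stride
    top_left = points - d[:, :2]
    bottom_right = points + d[:, 2:]
    return np.concatenate([top_left, bottom_right], axis=1)


points = make_anchor_points(2, 2, stride=32)  # centres (16, 16), (48, 16), (16, 48), (48, 48)
distances = np.full((4, 4), 0.5)              # each box extends half a cell in every direction
print(decode_ltrb(points, distances, stride=32))
```

Because there are no anchor shapes to tune, there are no per-dataset anchor priors to compute, unlike the k-means priors of YOLOv2 and YOLOv3.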

In addition, YOLOv8 is a well-maintained model from Ultralytics, offering a diverse range of models, each specialized for specific computer vision tasks like detection, segmentation, classification, and pose estimation. Since YOLOv8 is easy to use through the Ultralytics library, let’s try it in a demo.

Demo

This demo simply uses the Ultralytics library in Python to run inference with YOLOv8 models. There are many ways and options for running inference with YOLO models; the Ultralytics documentation describes the available prediction options.

```python
from ultralytics import YOLO

# Load a COCO-pretrained YOLOv8s model
model = YOLO("yolov8s.pt")
```

This imports the Ultralytics library and loads the YOLOv8 small model, which has a good balance between accuracy and speed. If the Ultralytics library is not already installed, install it in your notebook with: !pip install ultralytics. Now, let’s test it on some images.

```python
results = model("/content/city.jpg", save=True, conf=0.5)
```

This runs the detection task, saves the result, and only includes bounding boxes with at least 0.5 confidence. Below is the result for the sample image I used.

YOLOv8 models achieve SOTA performance across various benchmark datasets. For instance, the YOLOv8n model achieves a mAP50-95 (mean Average Precision) of 37.3 on the COCO dataset and an inference speed of 0.99 ms on an A100 with TensorRT. Next, let’s see how the YOLO family evolved further with YOLOv9.

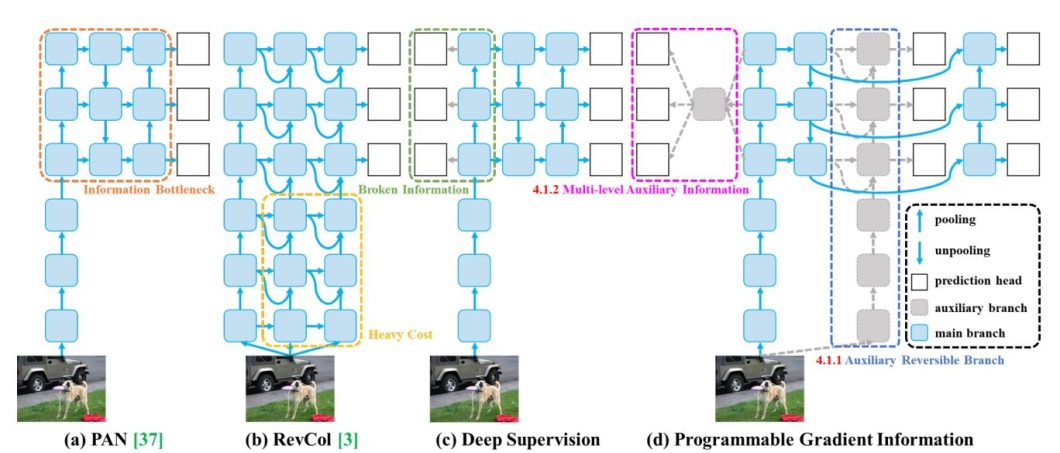

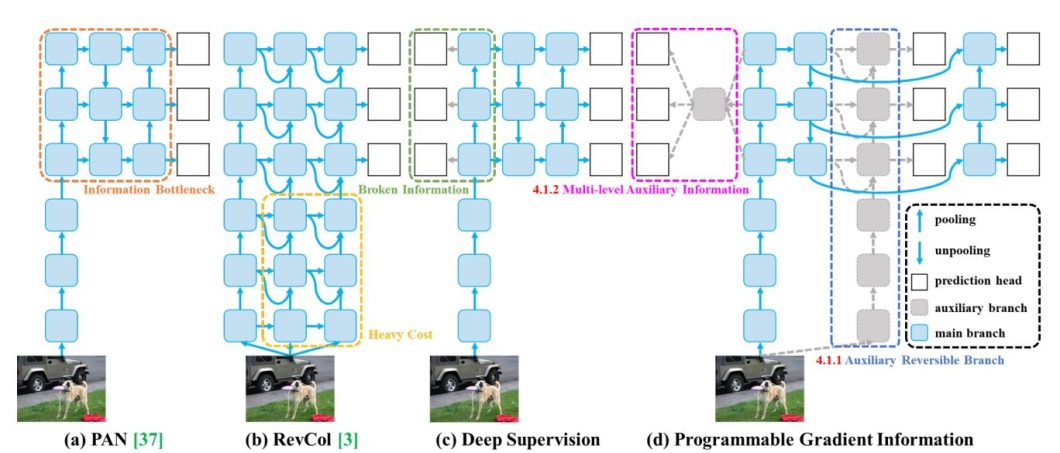

YOLOv9: Accurate Learning

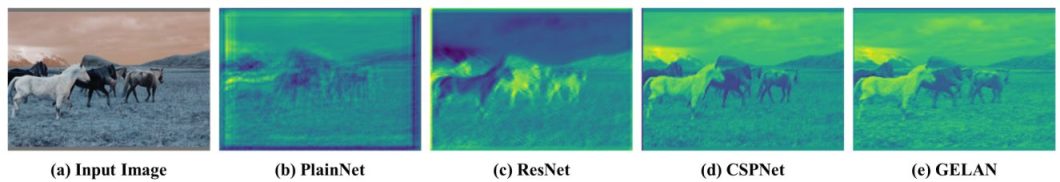

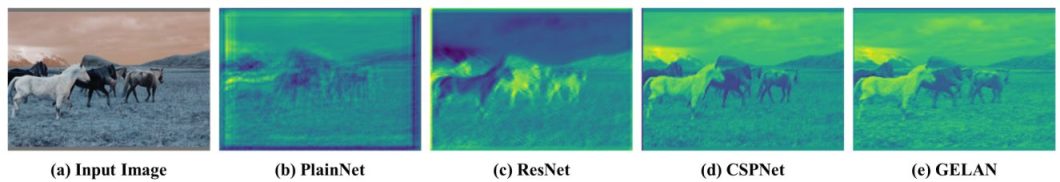

YOLOv9 takes a different approach from its predecessors by directly addressing the problem of information loss in deep neural networks. It introduces the concept of Programmable Gradient Information (PGI) and a new architecture called the Generalized Efficient Layer Aggregation Network (GELAN) to combat information bottlenecks and ensure reliable gradient flow during training.

The researchers introduced YOLOv9 because existing methods ignore the fact that when input data undergoes layer-by-layer feature extraction and spatial transformation, a large amount of information is lost. This information loss leads to unreliable gradients and hinders the model’s ability to learn accurate representations.

YOLOv9 introduces PGI, a novel method for generating reliable gradients using an auxiliary reversible branch. This auxiliary branch provides complete input information for calculating the objective function, ensuring that the gradients used to update the network weights are more informative. The reversible nature of the auxiliary branch ensures that no information is lost during the feedforward process. Because the branch is only used during training, it adds no inference cost.

YOLOv9 also proposed GELAN, a new lightweight architecture designed to maximize information flow and facilitate the acquisition of relevant information for prediction. GELAN is a generalized version of the ELAN architecture that can use any computational block while maintaining efficiency and performance. The researchers designed it based on gradient path planning, ensuring efficient information flow through the network.

YOLOv9 offers a fresh perspective on object detection by focusing on information flow and gradient quality. The introduction of PGI and GELAN sets YOLOv9 apart from its predecessors. This focus on the fundamentals of information processing in deep neural networks leads to improved performance and better explainability of the learning process in object detection.

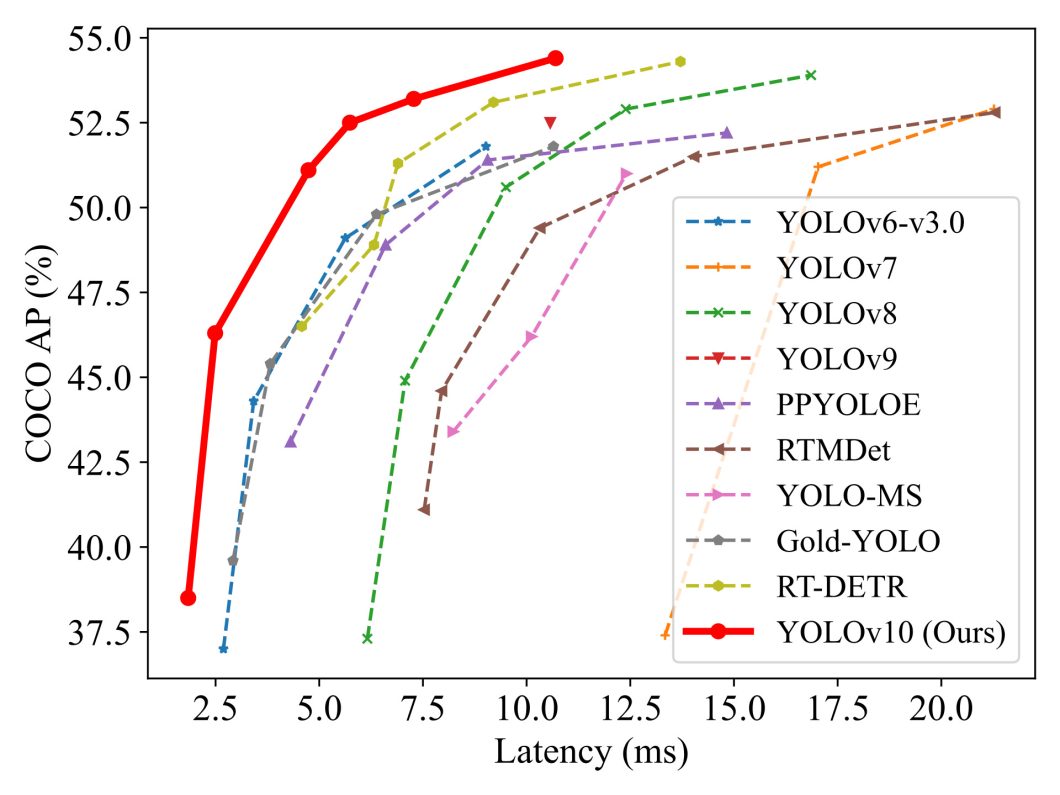

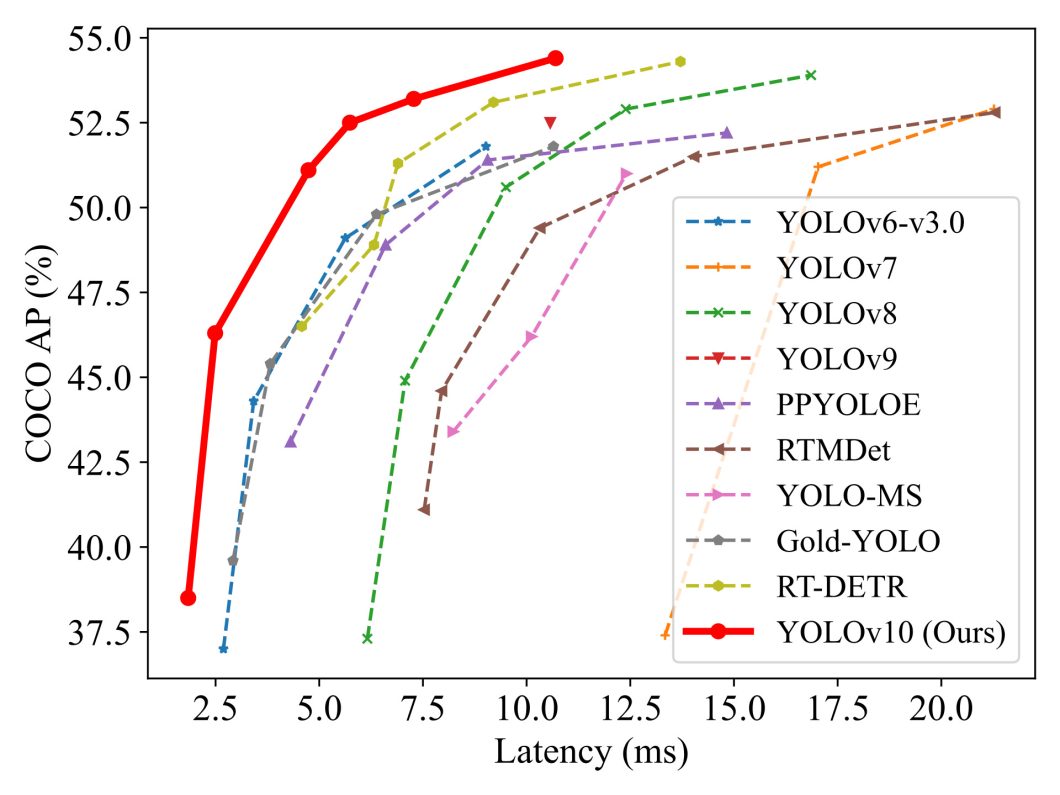

YOLOv10: Real-Time Object Detection

The introduction of YOLOv10 was revolutionary for real-time end-to-end object detection. YOLOv10 surpassed previous speed and accuracy benchmarks, achieving true real-time object detection. It eliminates the need for non-maximum suppression (NMS) post-processing with NMS-free detection.

This not only improves inference speed but also simplifies deployment. YOLOv10 introduced a few key features, like NMS-free training and a holistic design approach, that made it excel across metrics.

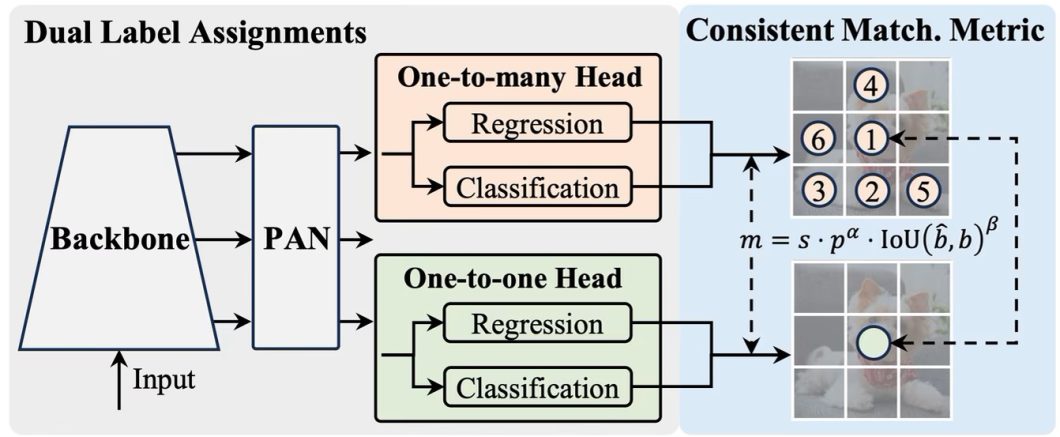

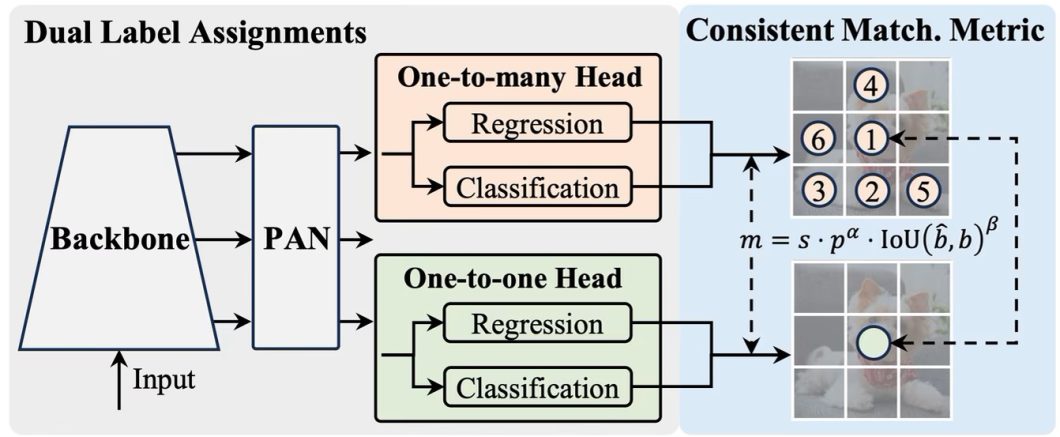

- NMS-Free Detection: YOLOv10 delivers a novel NMS-free training strategy based on consistent dual assignments. It employs dual label assignments (one-to-many and one-to-one) and a consistent matching metric to provide rich supervision during training while eliminating NMS during inference. During inference, only the one-to-one head is used, enabling NMS-free detection.

- Holistic Efficiency-Accuracy Driven Design: YOLOv10 adopts a holistic approach to model design, optimizing various components for both efficiency and accuracy. It introduces a lightweight classification head, spatial-channel decoupled downsampling, and rank-guided block design to reduce computational cost.
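For contrast, the sketch below shows the greedy non-maximum suppression step that YOLOv10’s one-to-one head makes unnecessary at inference: keep the highest-scoring box, discard remaining boxes that overlap it beyond an IoU threshold, and repeat. It is a generic, class-agnostic NumPy version with an assumed 0.5 threshold, not code from any YOLO release; production pipelines usually run NMS per class with optimized library routines.

```python
import numpy as np


def box_area(boxes):
    return (boxes[..., 2] - boxes[..., 0]) * (boxes[..., 3] - boxes[..., 1])


def box_iou(box, boxes):
    """IoU between one (x1, y1, x2, y2) box and an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    return inter / (box_area(box) + box_area(boxes) - inter)


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best box, drop heavy overlaps, repeat. Returns kept indices."""
    order = np.argsort(scores)[::-1]  # highest score first
    keep = []
    while order.size > 0:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        order = rest[box_iou(boxes[best], boxes[rest]) <= iou_threshold]
    return keep


boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU ≈ 0.68 and is suppressed
```

The sequential loop and the threshold hyperparameter are exactly what complicate deployment, which is why removing this step simplifies the pipeline as well as speeding it up.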

YOLO11: Architectural Enhancements

YOLO11 was released in September 2024. It brought a series of architectural refinements and a focus on improving computational efficiency without sacrificing accuracy.

It introduces novel components like the C3k2 block and the C2PSA block, which contribute to improved feature extraction and processing. This results in slightly better performance with fewer model parameters. The following are the key features of YOLO11.

- C3k2 Block: YOLO11 introduces the C3k2 block, a computationally efficient implementation of the Cross Stage Partial (CSP) Bottleneck. It replaces the C2f block in the backbone and neck and uses two smaller convolutions instead of one large convolution, reducing processing time.

- C2PSA Block: Introduces the Cross Stage Partial with Spatial Attention (C2PSA) block after the Spatial Pyramid Pooling – Fast (SPPF) block to enhance spatial attention. This attention mechanism allows the model to focus more effectively on important regions within the image, potentially improving detection accuracy.

With this, we’ve covered the entire YOLO family of object detection models. But something tells me the evolution won’t stop there; innovation will continue, and we will see even better performance in the future.

The Future of YOLO Models

The YOLO family has consistently pushed the boundaries of computer vision. It has evolved from a simple architecture into a sophisticated system, and each version has introduced novel features and expanded the range of supported tasks.

Looking ahead, the trend of increasing accuracy, speed, and multi-task capabilities will likely continue. Potential areas of development include the following.

- Improved Explainability: Making the model’s decision-making process more transparent.

- Enhanced Robustness: Making the model more resilient to challenging conditions.

- Efficient Deployment: Optimizing the model for various hardware platforms.

The advances in YOLO models have significant implications for many industries. YOLO’s ability to perform real-time object detection has the potential to change how we interact with the visual world. However, it is important to address ethical considerations and potential biases. Ensuring fairness, accountability, and transparency is crucial for responsible innovation.